The Best Place to Build and Run AI with Ray

From the creators of Ray – Anyscale gives you a platform to run and scale all your ML and AI workloads, from data processing to training and inference.

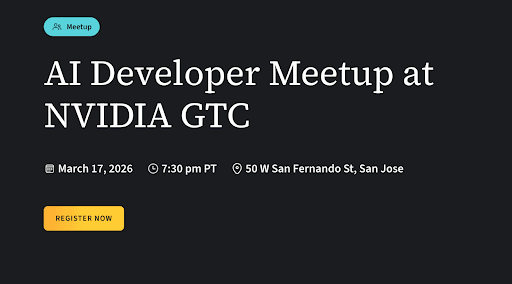

Mar 17. Anyscale @ Nvidia GTC

Join us for an AI developer meetup, organized in partnership with Weights & Biases, featuring networking and technical talks on RL, robotics, and more.

Ray is the Unified Compute Engine for the AI Era

Ray is an open-source framework that helps developers scale data processing, training, and inference workloads from laptops to tens or thousands of nodes.

- Python-native. Distribute Python functions using familiar frameworks and data structures.

- Multimodal. Process all data modalities, including images, video, text, audio, tabular datasets, and more.

- Heterogeneous. Coordinate task execution across CPUs, GPUs, or other accelerators in a single cluster.

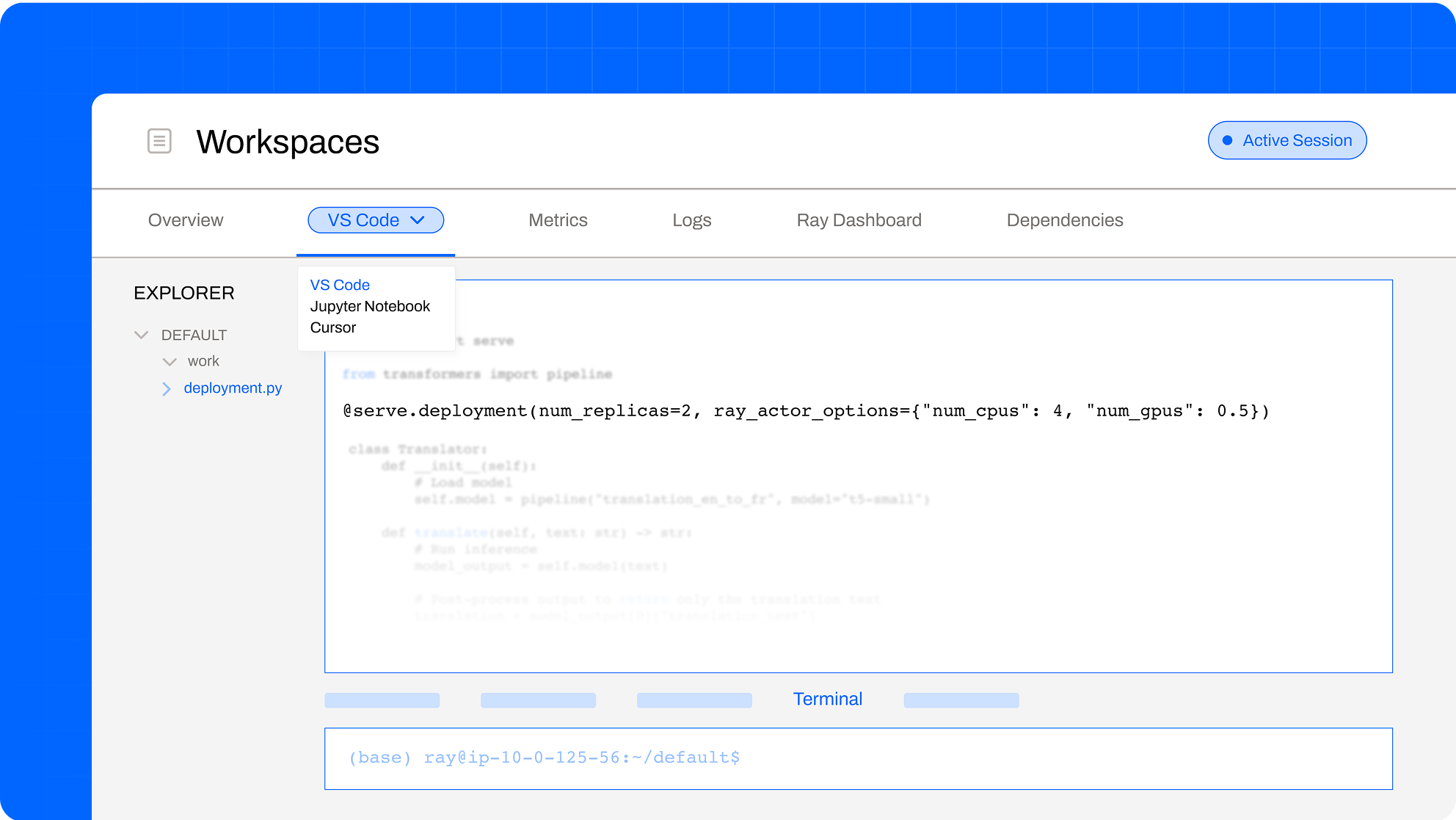

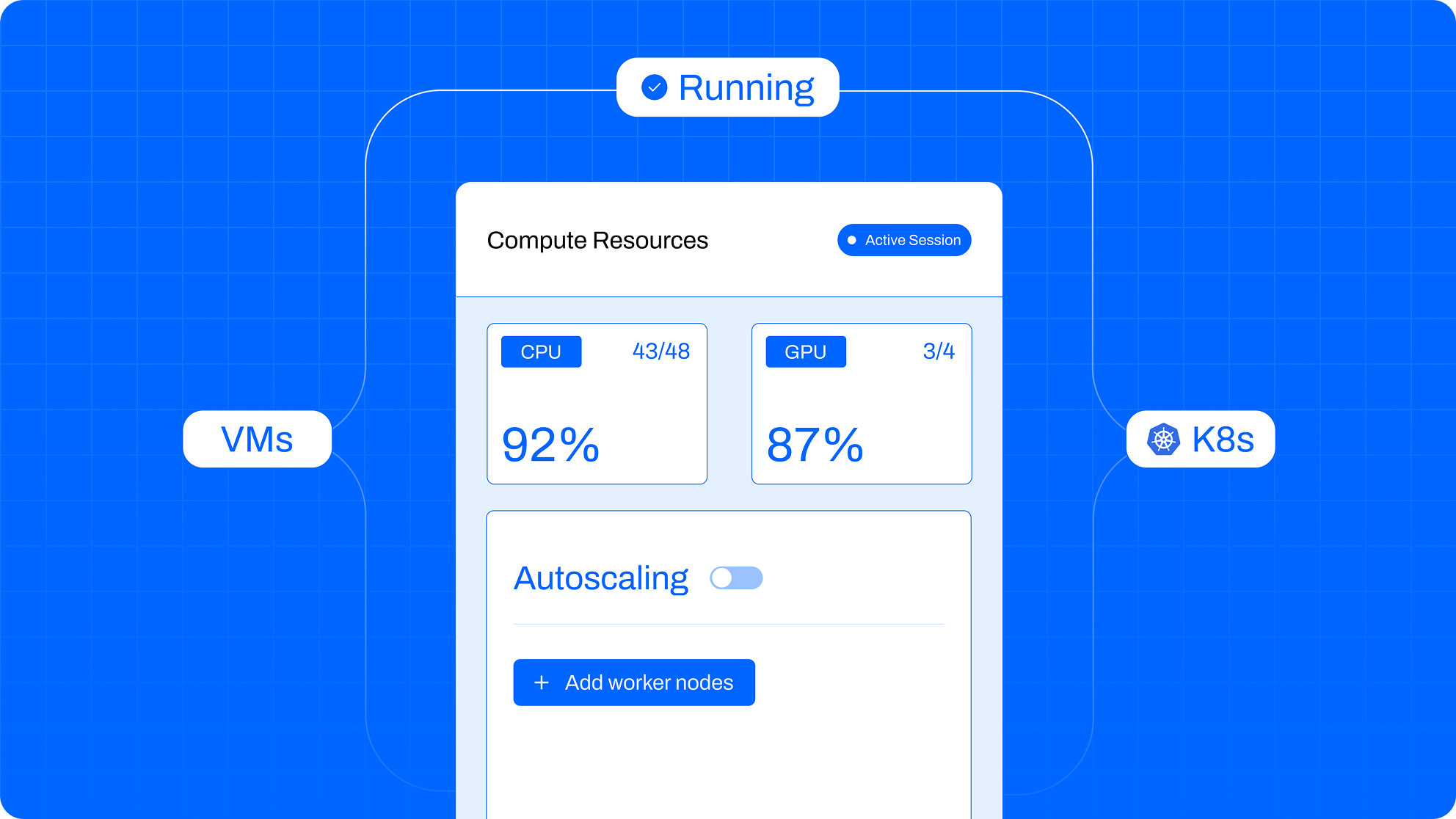

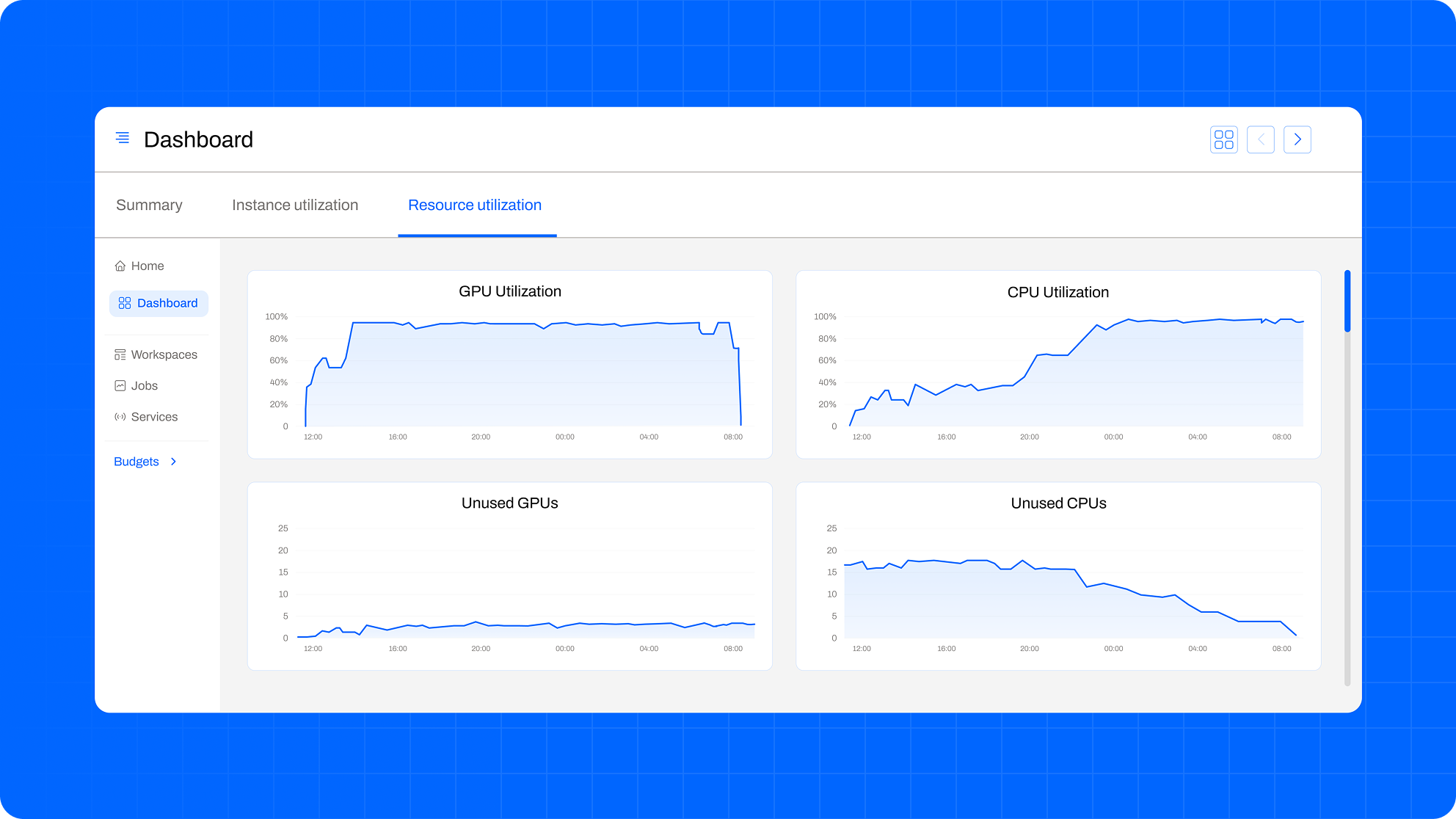

Anyscale is the Best Platform for Ray

Ready from day one to help you build faster, scale easier, and operate with confidence.

One Platform. Every AI Workload.

From data prep to inference — if it’s Python, it runs better with Ray on Anyscale.

LLM training and inference

Fine-tune an LLM to perform batch inference and online serving for entity recognition.

Audio batch inference

Use LLMs as judges to curate and filter audio datasets for quality and relevance.

Reinforcement Learning for LLMs with SkyRL

Apply reinforcement learning to LLMs using SkyRL and Ray.

Text-to-text LLM Batch Inference

Run text-to-text LLM offline inference on large-scale text data using Ray Data LLM.

Built with Ray. Deployed on Anyscale.

See how leading organizations take AI to production

12x

faster iteration to deliver 100+ production models

5x

faster iteration for AI workloads

Wenyue Liu

Senior ML Platform Engineer

“Ray and Anyscale aligned with our vision: to iterate faster, scale smarter, and operate more efficiently.”

Sarah Sachs

Engineering Leader, AI Modeling

"We chose Anyscale not just for what we needed today, but for where we know we’re heading. As our AI workloads grow more complex, Anyscale gives us the infrastructure to scale without limits."

99%

reduction in cost

13x

faster model loading

The Team Behind Ray – On Your Team

Built by the creators of Ray.

Supported by the people who know it best.

With Anyscale, you don’t just get a platform; you get a partner. Our team works hands-on with yours to troubleshoot, tune, and scale every part of your Ray-based platform, whether you're launching your first cluster or operating a large-scale deployment.

Try Anyscale Today

Unlock your potential – run AI and other Python applications on your cloud or on our fully managed compute platform.