How Ray and Anyscale Make it Easy to Do Massive-Scale Machine Learning on Aerial Imagery

Richard Decal is a machine learning scientist on a mission to fight climate change. He is the Lead ML Engineer at Dendra Systems, where he is working on scaling ecosystem-restoring drones to planetary scale.

The challenge: Large-scale ecological restoration

Our species has dedicated a tremendous amount of ingenuity and money to planet-scale resource extraction. The rate of ecological destruction we’re seeing today far exceeds past levels. Coupled with climate change, this has resulted in 2 billion hectares of degraded land, and other cascading phenomena such as wildfires and mass extinction.

By contrast, rates of ecological restoration and monitoring haven’t kept up, in part because traditional methods do not scale. Restoration work tends to be manual, with little automation, and it often fails in places such as cliffs, which you can’t reach with a machine or on foot.

In addition, traditional methods of ecological monitoring do not scale. Satellite and plane imagery does not provide high enough resolution for certain tasks, such as determining the species of grass. Invasive weed monitoring, which is typically done by someone driving around in a truck trying to spot weeds, is error-prone and not comprehensive.

Dendra’s mission: Scalable ecological restoration and monitoring

Dendra Systems’ mission is to enable faster, high-quality, transparent and scalable restoration. We do this using a variety of tools — drones for ultra-high resolution mapping at an unprecedented scale; machine learning algorithms that analyze imagery to derive insights; specialized seeding drones for targeted restoration at scale. Our approach is safer, more targeted and robust. It’s also 11x faster and 3x cheaper than traditional methods — allowing us to significantly accelerate our restoration efforts in a cost-effective way.

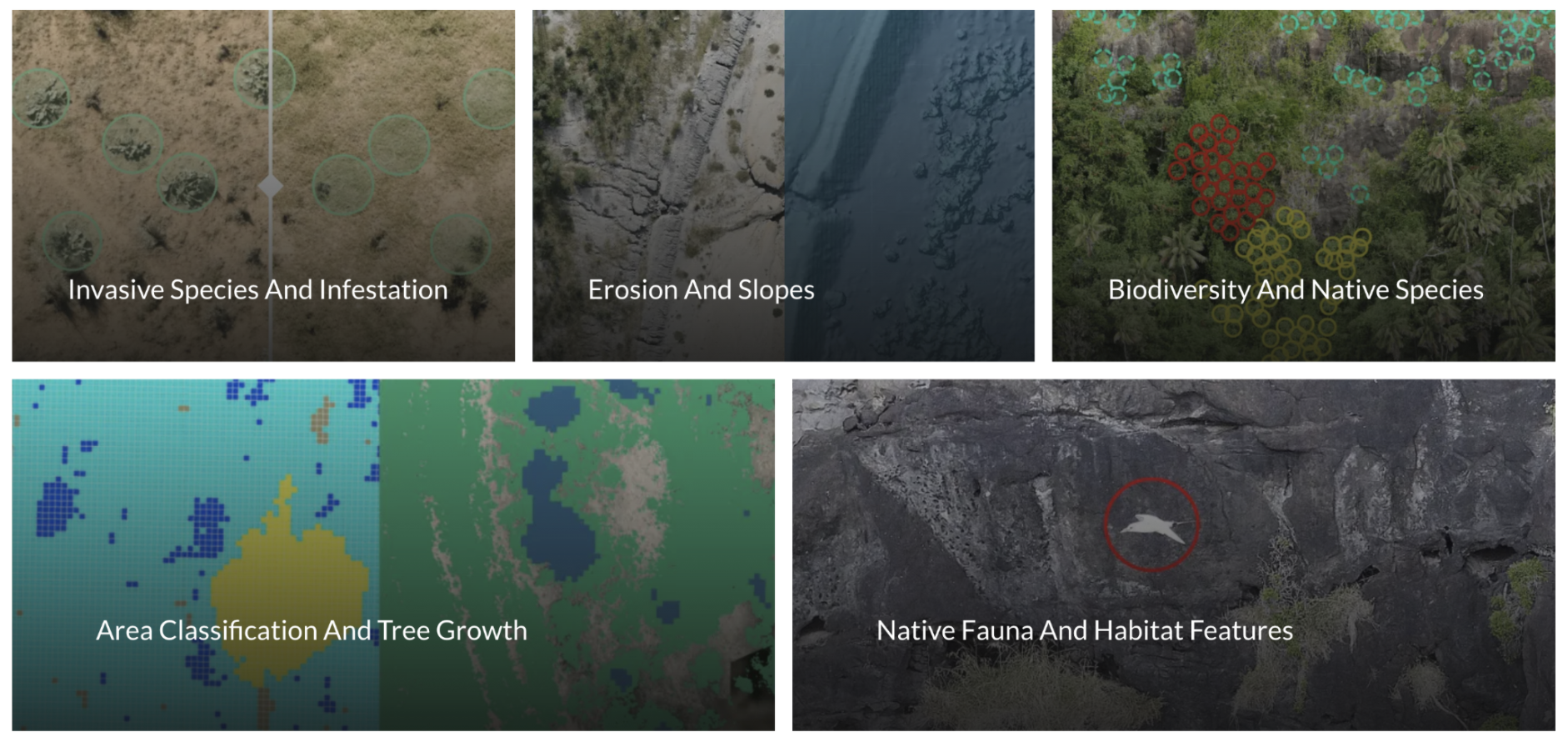

Using these approaches, we are able to offer a whole suite of ecosystem analytics services such as biodiversity assessments, identifying migratory routes, weed management, pest management, erosion and soil health monitoring, and more.

As part of our ecosystem restoration services, Dendra does ecosystem monitoring through a holistic suite of ecosystem analytics.


Technology allows us to provide these services at massive scale. A single drone can capture 400 soccer fields of imagery per day. Ten of our seeding drones flying in a swarm could plant as many as 300,000 trees in a day, orders of magnitude more than is possible manually.

Dendra’s machine learning platform

As the founding lead of the machine learning team at Dendra, I was tasked with building a machine learning platform that could handle terabyte-scale image datasets (and beyond). I looked for a library that would let me easily write code that could scale from my laptop to the cloud, without requiring me to be a distributed systems expert or computer scientist. I accomplished this using the Ray ecosystem and the Anyscale platform.

Training Deep Neural Networks at Scale

When I first started building the platform, I used Amazon SageMaker. I think SageMaker is great if you are bootstrapping from zero, because it offers many templated solutions. However, SageMaker wasn’t flexible enough to easily let us use specialized models and annotation workflows. In looking for alternatives, I found Ray Train (formerly known as Ray SGD) and Ray Tune.

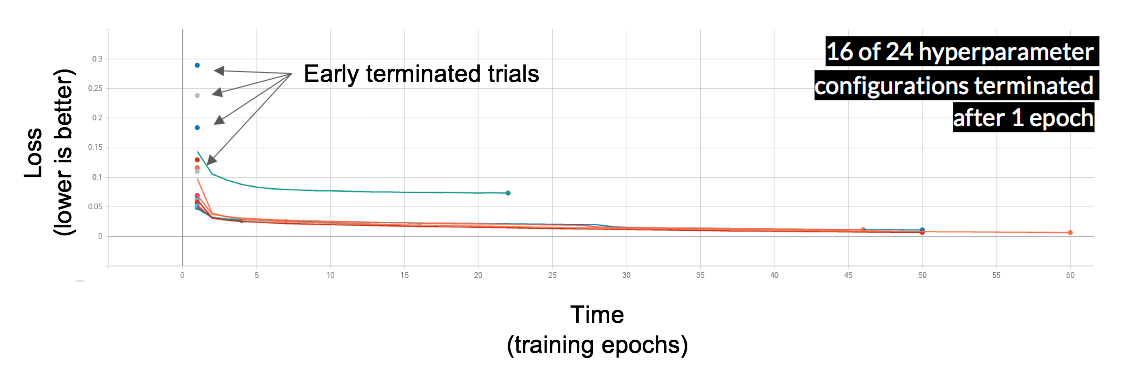

It was easy to pair our PyTorch code with Ray Train, and scale it from a single replica on my local machine to dozens of GPUs in the cloud without changing any code. We also used Ray Tune for hyperparameter search, which significantly reduced infrastructure costs because of its early trial termination feature.
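
Conceptually, scaling from one replica to many works because of data-parallel training, the pattern Ray Train sets up for PyTorch through DistributedDataParallel: each replica computes gradients on its own shard of the data, the gradients are averaged across replicas, and every replica applies the same update. The pure-Python sketch below illustrates only that idea on a toy linear model; it does not use Ray, the helper names are hypothetical, and the replica loop runs sequentially where a real cluster would run one replica per GPU.

```python
def shard(data, num_workers):
    """Split the dataset into interleaved shards, one per replica."""
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w, b, batch):
    """Mean-squared-error gradient for the model y = w * x + b on one shard."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def train_data_parallel(data, num_workers, steps=2000, lr=0.1):
    """Each replica computes a gradient on its own shard; the gradients are averaged
    (the job an all-reduce does across GPUs) and every replica applies the same update."""
    w, b = 0.0, 0.0
    shards = shard(data, num_workers)
    for _ in range(steps):
        # On a real cluster these calls run concurrently, one per GPU replica.
        grads = [local_gradient(w, b, s) for s in shards]
        grad_w = sum(g for g, _ in grads) / num_workers
        grad_b = sum(g for _, g in grads) / num_workers
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy dataset drawn from y = 3x + 1.
data = [(i / 100, 3 * (i / 100) + 1) for i in range(100)]
print(train_data_parallel(data, num_workers=1))
print(train_data_parallel(data, num_workers=4))
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so one replica and four replicas converge to the same parameters; only the wall-clock time changes, which is why the training code itself does not need to change when the replica count does.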

Each line is a different trial with a different hyperparameter configuration. Terminating underperforming trials (higher loss) has saved us a lot of time and money.


Ray Tune enables us to aggressively terminate underperforming trials instead of letting them train to completion. For one workload, we estimated that the early termination feature on Ray Tune reduced our infrastructure costs by 80%. The early termination also made it more affordable to sample a larger hyperparameter space and then do a fine search. This is particularly useful when we are training a new architecture or when we make major changes to a model or data processing pipeline.
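
The post does not say which Ray Tune scheduler produced these savings. As an illustrative assumption, the pure-Python sketch below implements synchronous successive halving, the idea that early-stopping schedulers such as Ray Tune's `ASHAScheduler` run asynchronously: start many trials on a small budget, terminate the worst ones, and give the survivors more epochs. `toy_loss` is a hypothetical stand-in for a real training run.

```python
import math


def successive_halving(configs, evaluate, min_epochs=1, max_epochs=8, eta=2):
    """Synchronous successive halving.

    Every surviving config trains up to `epochs`, the best 1/eta of them are kept,
    and the budget per survivor grows by a factor of eta until max_epochs.
    evaluate(config, epochs) returns a validation loss (lower is better).
    Returns (best_config, trial_epochs_spent).
    """
    survivors = list(configs)
    epochs, done, spent = min_epochs, 0, 0
    while True:
        # Survivors resume from their checkpoints, so only the extra epochs cost compute.
        spent += (epochs - done) * len(survivors)
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, epochs))
        if len(ranked) == 1 or epochs >= max_epochs:
            return ranked[0], spent
        # Terminate the worst trials early; always keep at least one.
        survivors = ranked[: max(1, len(ranked) // eta)]
        done, epochs = epochs, min(epochs * eta, max_epochs)


def toy_loss(cfg, epochs):
    """Hypothetical stand-in for training: loss falls with epochs and is lowest at lr = 0.01."""
    return abs(math.log10(cfg["lr"]) + 2) + 1.0 / epochs


grid = [{"lr": lr} for lr in (0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0)]
best, spent = successive_halving(grid, toy_loss)
print(best, spent)  # → {'lr': 0.01} 20
```

In this toy setup, the search spends 20 trial-epochs instead of the 8 × 8 = 64 needed to train every configuration to completion. ASHA promotes trials asynchronously rather than waiting for a whole round to finish, which keeps GPUs busier, but the keep-the-top-1/eta rule is the same in spirit.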

Scaling inference to millions of images

Given our positive experience with Ray for model training and tuning, we decided to also use Ray Serve for our inference and model serving because it met our key requirements:

Scalability: We needed an inference solution that could easily handle hundreds of millions of images, and parallelize this work across many workers in a cluster.

GPU utilization: We needed a solution that would maximize GPU usage across the cluster, even as we added or removed nodes.

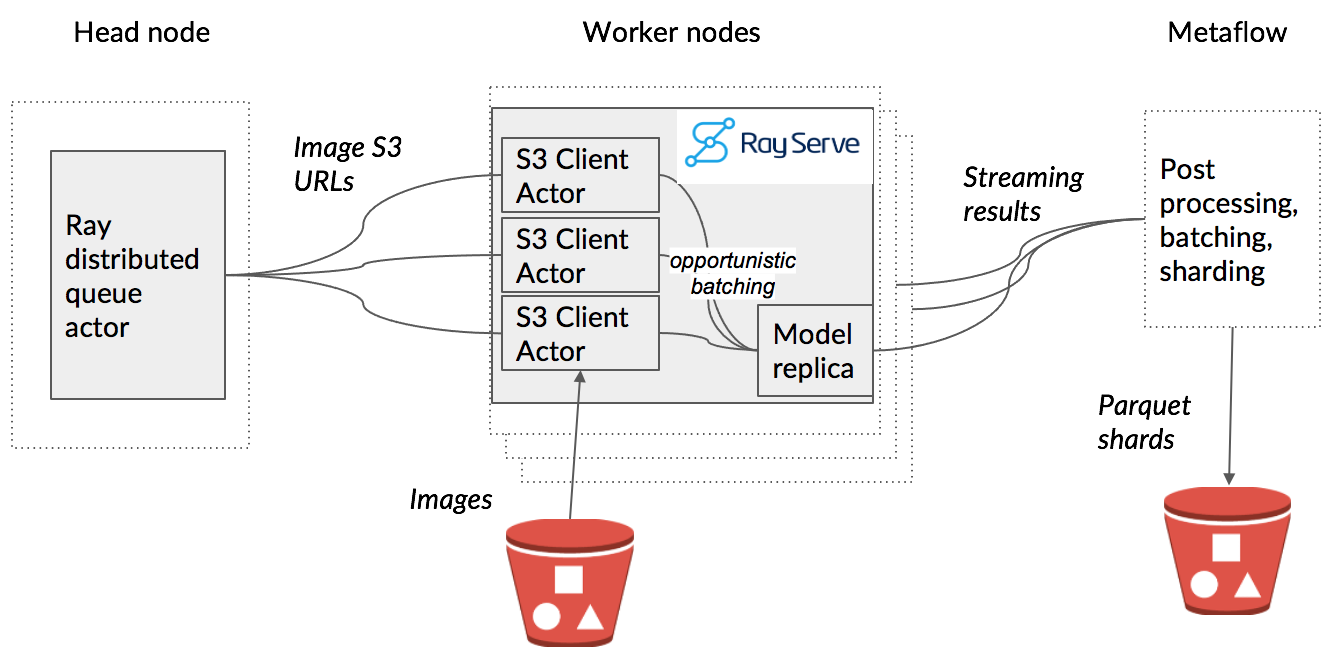

Dendra’s inference pipeline. Dotted lines represent machines in the cluster, and gray boxes represent Ray actors.

Our inference pipeline works as follows. A Ray actor farms out image S3 URLs to the worker nodes, whose S3 clients fetch the images from S3. The images are then batched and passed to model replicas, and the model outputs are streamed out for post-processing, batching, and sharding. Finally, the results are saved to S3 as Parquet shards.

This approach allowed us to maximize network I/O and GPU usage across the cluster.
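
The pipeline described above can be sketched with the standard library, under several simplifying assumptions: threads stand in for Ray actors, `fetch_image` and `predict` are hypothetical stand-ins for the S3 client and a GPU model replica, each worker both fetches and runs the model, results are collected rather than streamed, and Parquet shards on S3 are replaced with in-memory lists. The bucket name is made up.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def batched(items, size):
    """Yield consecutive lists of at most `size` items."""
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


def fetch_image(url):
    """Hypothetical stand-in for an S3 GET issued by the worker node's S3 client."""
    return url, f"bytes-of-{url}".encode()


def predict(batch):
    """Hypothetical stand-in for one GPU model replica scoring a batch of images."""
    return [{"url": url, "score": len(data) % 7} for url, data in batch]


def worker(urls, batch_size):
    """One worker node: fetch its images, batch them, and run the model on each batch."""
    images = [fetch_image(u) for u in urls]
    results = []
    for batch in batched(images, batch_size):
        results.extend(predict(batch))
    return results


def run_pipeline(urls, num_workers, batch_size, shard_size):
    # Dispatcher: farm out URLs round-robin, the role the coordinating Ray actor plays.
    assignments = [urls[i::num_workers] for i in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        per_worker = pool.map(worker, assignments, [batch_size] * num_workers)
        records = [r for results in per_worker for r in results]
    # Post-processing: group records into fixed-size shards (Parquet files on S3 in production).
    return list(batched(records, shard_size))


urls = [f"s3://example-bucket/tiles/{i}.tif" for i in range(10)]
shards = run_pipeline(urls, num_workers=3, batch_size=2, shard_size=4)
print([len(s) for s in shards])  # → [4, 4, 2]
```

Separating fetching from inference, as the real pipeline does, lets network-bound S3 downloads overlap with GPU work instead of leaving GPUs idle while images arrive.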

Why we became Anyscale customers

Open-source Ray is great, but we needed additional features that were missing from the open-source version:

1. Administration: We wanted programmatic control of the cluster, and the functionality available through the Ray CLI was not enough.

2. Performance: We wanted to hotstart our clusters more quickly, without waiting for all the nodes to go through the setup steps. We could work around this by keeping EC2 instances in a stopped state, but that solution was neither cost-effective nor robust.

Going with Anyscale addressed both of these requirements. The Anyscale SDK gave us programmatic control of the cluster, making it easy to set up clusters, check their status, run workloads on them, shut them down, and more. As a bonus, Anyscale abstracts away containerization and image management for us.

Continuously testing pipelines that require GPUs

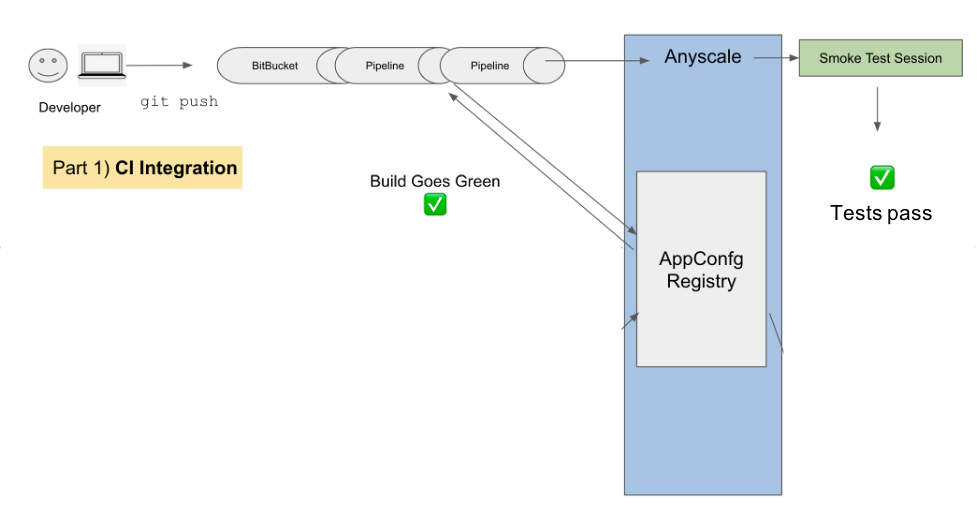

One use case for the Anyscale SDK is to address a limitation in our Bitbucket CI pipelines: they do not provision machines with GPUs. As a result, we were unable to test our inference and training pipelines on Bitbucket CI machines. To mitigate this issue, we used to do manual testing whenever we had to merge a new pull request into the master branch, which was tedious.

Our goal was to be able to set up machines with GPUs, run these pipeline tests, and run other neural-network-specific tests. To do this, we use Anyscale. The image below shows what happens when we push to Bitbucket.

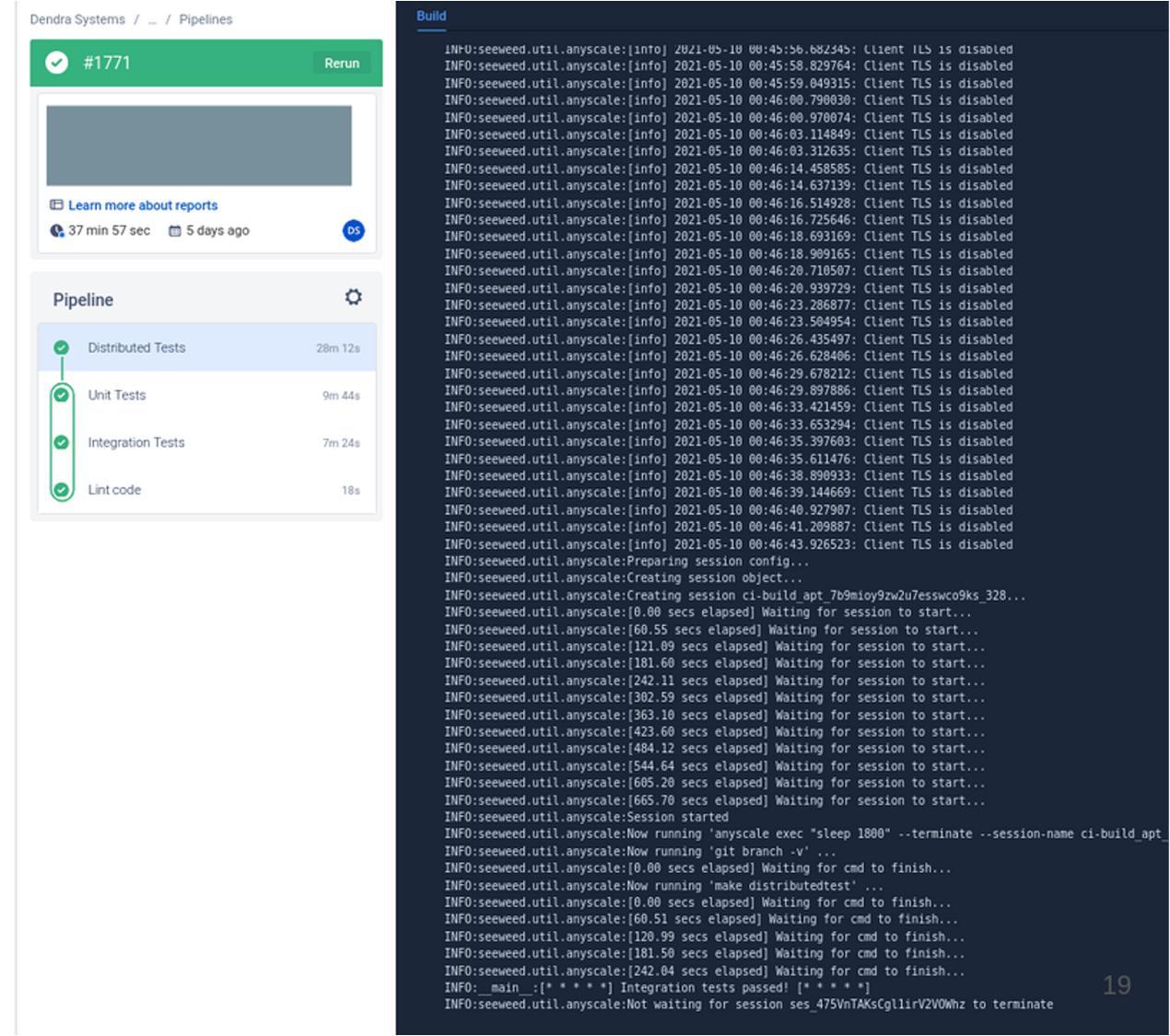

When we push, our ML pipelines are triggered, and the Anyscale SDK creates an app config and compiles a build. If the build succeeds, we use Anyscale to start a session with GPU machines and run our tests. The results are propagated back to Bitbucket CI. The image below shows Bitbucket when tests are passing.

Passing build and tests on Bitbucket CI. Bitbucket’s machines spin up an Anyscale cluster, which does the GPU work.


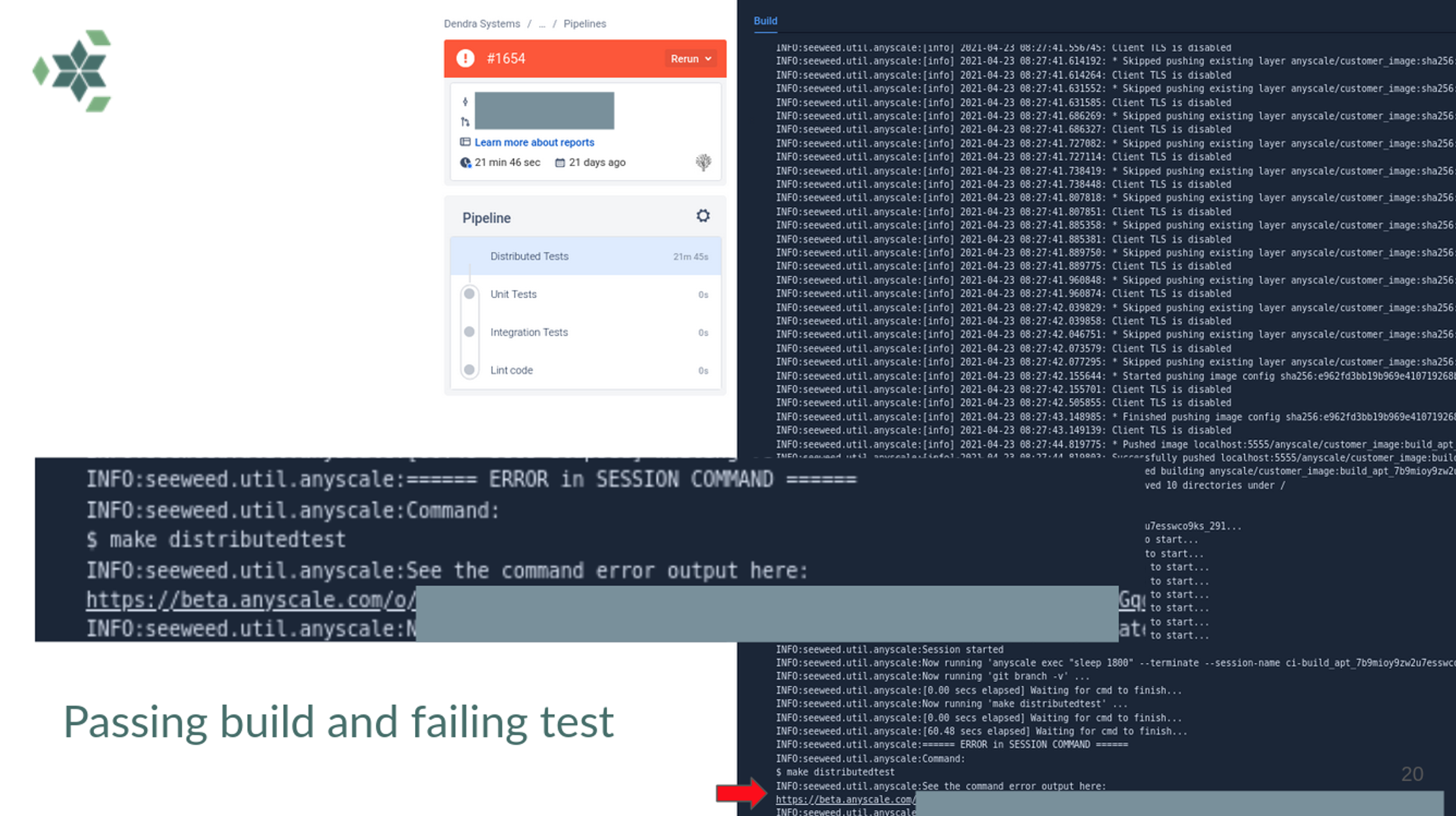

If any tests fail, the CI output instead gives you a link to the session.

Failed cluster build. The error message links us to the relevant Anyscale session, where we can do a post-mortem.
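
The CI flow described in this section can be sketched in Python as follows. The post does not show the Anyscale SDK calls involved, so `FakeClusterClient` and all of its methods (`build`, `start_cluster`, `run`, `session_url`, `terminate`) are hypothetical; the sketch only illustrates the control flow: build first, run GPU tests on a fresh cluster, tear the cluster down on success, and report a session link when tests fail.

```python
from dataclasses import dataclass, field


@dataclass
class FakeClusterClient:
    """In-memory stand-in for a cluster-management client. Every method is hypothetical."""
    build_ok: bool = True
    test_exit_code: int = 0
    terminated: list = field(default_factory=list)

    def build(self, config_name):
        return self.build_ok

    def start_cluster(self, config_name, gpus):
        return f"{config_name}-session"

    def run(self, cluster_id, command):
        return self.test_exit_code

    def session_url(self, cluster_id):
        return f"https://example.invalid/sessions/{cluster_id}"

    def terminate(self, cluster_id):
        self.terminated.append(cluster_id)


def run_gpu_ci(client, config_name, test_command, gpus=1):
    """Build the environment, run GPU tests on a fresh cluster, and return a CI status."""
    if not client.build(config_name):
        return {"status": "failed", "reason": "build failed", "url": None}
    cluster_id = client.start_cluster(config_name, gpus=gpus)
    try:
        exit_code = client.run(cluster_id, test_command)
    except Exception:
        client.terminate(cluster_id)  # do not leak GPU machines on infrastructure errors
        raise
    if exit_code == 0:
        client.terminate(cluster_id)
        return {"status": "passed", "url": None}
    # Leave the failed session up so the link in the CI report works for a post-mortem.
    return {"status": "failed", "reason": f"tests exited with {exit_code}",
            "url": client.session_url(cluster_id)}


print(run_gpu_ci(FakeClusterClient(test_exit_code=1), "ml-pipeline", "pytest tests/gpu"))
```

Keeping a failed session alive trades some GPU cost for an easier post-mortem; an idle timeout would bound that cost.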


Introducing Anyscale has made our systems much more robust and has prevented catastrophic failures, because it catches errors early.

Programmatically standing up clusters and running jobs

Next, we turned our attention to a major pain point: inference requests, which we were handling manually.

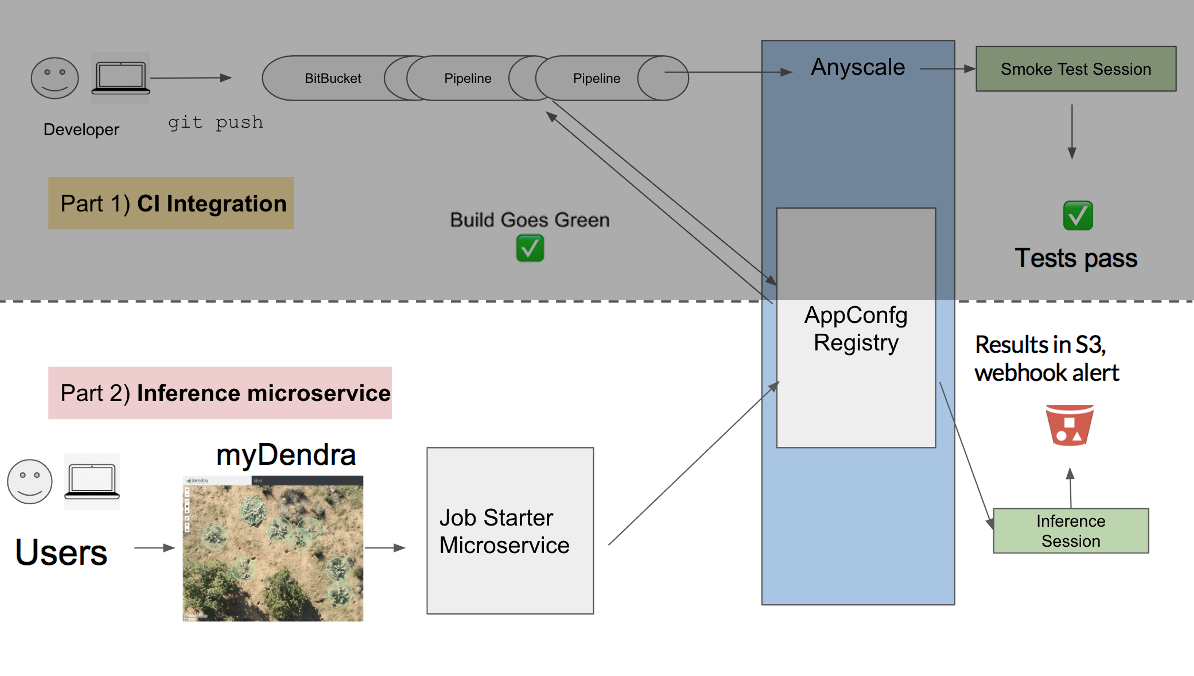

One of our goals was to enable all our systems to talk to each other via APIs. We also wanted a microservices approach to keep everything decoupled and modular, such that other teams could just use our service to fetch results without having to understand its internals.

We built our inference microservice using Anyscale. When users on our platform want predictions for a given area from a given type of model, the system calls the microservice to request those results. The microservice fetches a prebuilt app config (one that has passed on the production branch) from Anyscale, hotstarts a cluster, and runs the inference pipeline. When the results are ready, a webhook alert loads them into the system. This takes a human out of the loop and automates a tedious task.

With that in place, it was easy to extend the microservice with new endpoints for other tasks, such as triggering model retraining (which involves a database export, feature engineering, model selection, and validation, all automated at scale using Ray and Anyscale).
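
The inference request flow described above can be sketched as a small service object. This is a minimal sketch under stated assumptions: `PREBUILT_CONFIGS` is a hypothetical registry of production builds, `run_inference` is a hypothetical callable that hotstarts a cluster and runs the pipeline, and `notify` is a hypothetical webhook sender. A request returns a job ID immediately and the work runs in a background thread, so callers never block on cluster startup.

```python
import threading
import uuid

# Hypothetical registry: model type -> prebuilt environment that passed on the production branch.
PREBUILT_CONFIGS = {
    "weed-detector": "weed-detector-prod",
    "species-classifier": "species-classifier-prod",
}


class InferenceService:
    def __init__(self, run_inference, notify):
        self._run_inference = run_inference  # hypothetical: (config, area_id) -> results URI
        self._notify = notify                # hypothetical webhook sender: (payload) -> None
        self.jobs = []                       # background threads, kept so callers can join them

    def request_predictions(self, area_id, model_type):
        """Validate the request, start the job in the background, and return a job ID."""
        config = PREBUILT_CONFIGS.get(model_type)
        if config is None:
            raise ValueError(f"no production build for model type {model_type!r}")
        job_id = str(uuid.uuid4())
        thread = threading.Thread(target=self._run_job, args=(job_id, config, area_id))
        thread.start()
        self.jobs.append(thread)
        return job_id

    def _run_job(self, job_id, config, area_id):
        try:
            uri = self._run_inference(config, area_id)
            self._notify({"job_id": job_id, "status": "done", "results": uri})
        except Exception as exc:  # report failures through the same webhook
            self._notify({"job_id": job_id, "status": "error", "error": str(exc)})


received = []
service = InferenceService(
    run_inference=lambda config, area: f"s3://example-bucket/results/{area}/{config}/",
    notify=received.append,
)
job = service.request_predictions("site-42", "weed-detector")
for thread in service.jobs:
    thread.join()
print(received)
```

A retraining endpoint could follow the same shape, swapping `run_inference` for a hypothetical callable that chains the export, feature-engineering, model-selection, and validation steps.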

Conclusion

Dendra Systems’ mission is to enable efficient and fast ecosystem restoration at an unprecedented scale. Our machine learning platform, along with other technologies like ultra-high resolution mapping and advanced drones, is a core enabler of that mission. Ray and Anyscale have helped us execute on our machine learning platform vision in record time with a very lean team. Ray Tune has helped us conduct hyperparameter tournaments on large search spaces efficiently and cost-effectively, without changing any code. Ray Serve has helped us future-proof our inference pipelines and lets us run any job, from 10,000 to 100 million images, without changing our underlying infrastructure. Anyscale has further accelerated our journey by allowing us to offload infrastructure management and focus on our core business.

Acknowledgement

We’d like to thank Will Drevo, Bill Chambers, Simon Mo, Ian Rodney, Charles Greer, Amog Kamsetty, Edward Oakes, Richard Liaw, and SangBin Cho from the Anyscale team for always giving us advice and helping us get unstuck on Slack.