Loading Llama-2 70b 20x faster with Anyscale Endpoints

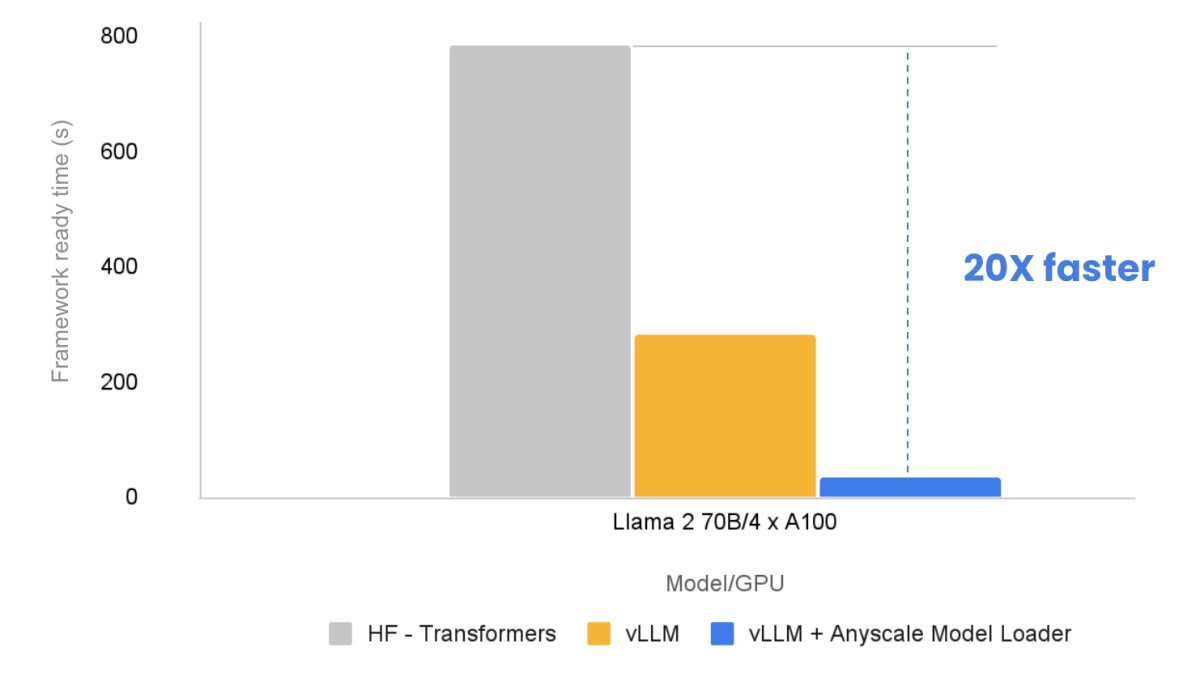

In this post, we discuss why loading speed matters for large language models and the techniques we used to make loading 20x faster. We use the Llama 2 series of models as the running example, and we share how you can reduce latency and costs using the Anyscale platform.

What is model loading?

To serve a large language model (LLM) in production, the model needs to be loaded into the GPU of a node. Depending on the model size and infrastructure, the entire process can take up to 10 minutes. In a cold start, the model is typically stored in a remote storage service like S3. The framework first downloads the model to the local node, then loads it into CPU memory, and finally transfers it to the GPU. Once the model is cached locally, later loads can read it from disk.

Why does it matter?

Although loading happens only once per node per model, it matters for production workloads in two ways: more responsive autoscaling and cheaper model multiplexing.

Real-world traffic is highly dynamic. Usually, an autoscaler will be used to scale the cluster up and down according to the current traffic. If the scale-up is slow, the latency and throughput will degrade during that period, and the end user will have a bad experience.

Additionally, model multiplexing is very sensitive to loading speed. In this use case, there are more models than can fit on the GPU at once, so models are loaded and offloaded frequently at runtime. If loading a model takes a long time, the overall performance of the system will be poor, and more machines will be needed to compensate.

It’s also crucial to save costs. GPU machines are very expensive; for example, p4de.24xlarge can cost as much as $32/hour [1].

In our benchmark results, 95% of the time spent starting vLLM goes to initializing the model, so making the loading process as fast as possible saves money.

Next, let’s understand where the time is spent, and how we can fix each issue.

Where is the time spent?

To solve this problem, we first need to understand where the time goes when we load a model.

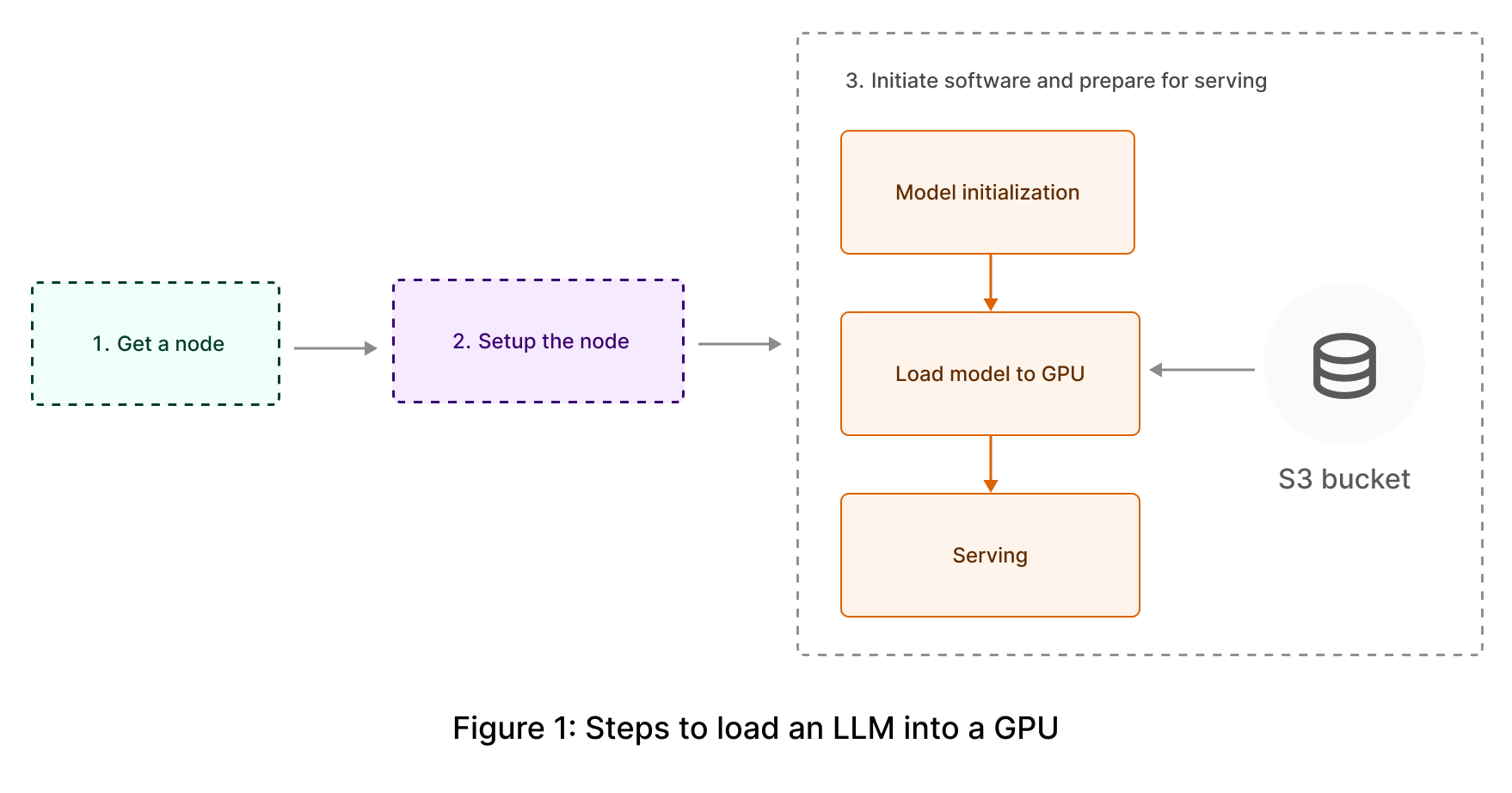

Loading a model into the GPU usually involves the following steps:

1. Get a node from the cluster. For example, get a p4de.24xlarge [1] from AWS.

2. Pull the Docker image that contains the environment needed to run the model onto the node.

3. Once the environment is ready, the serving framework, such as Ray LLM or vLLM, starts and does the following:

   - Fetches the model data from S3 and stores it on disk.

   - Decodes the model and loads it into CPU memory. For example, PyTorch unpickles the .bin files.

   - Moves the data from CPU memory to GPU memory.

Step 3 also includes initializing the model class and the tokenizers. These take comparatively little time, so they are ignored here.

Note that step 1 (node setup) and step 2 (environment setup) have been optimized in the Anyscale Platform [2] so that an ML environment can start very quickly; this is covered in a separate blog post.

How to load a model faster?

Let’s view the whole process end-to-end first.

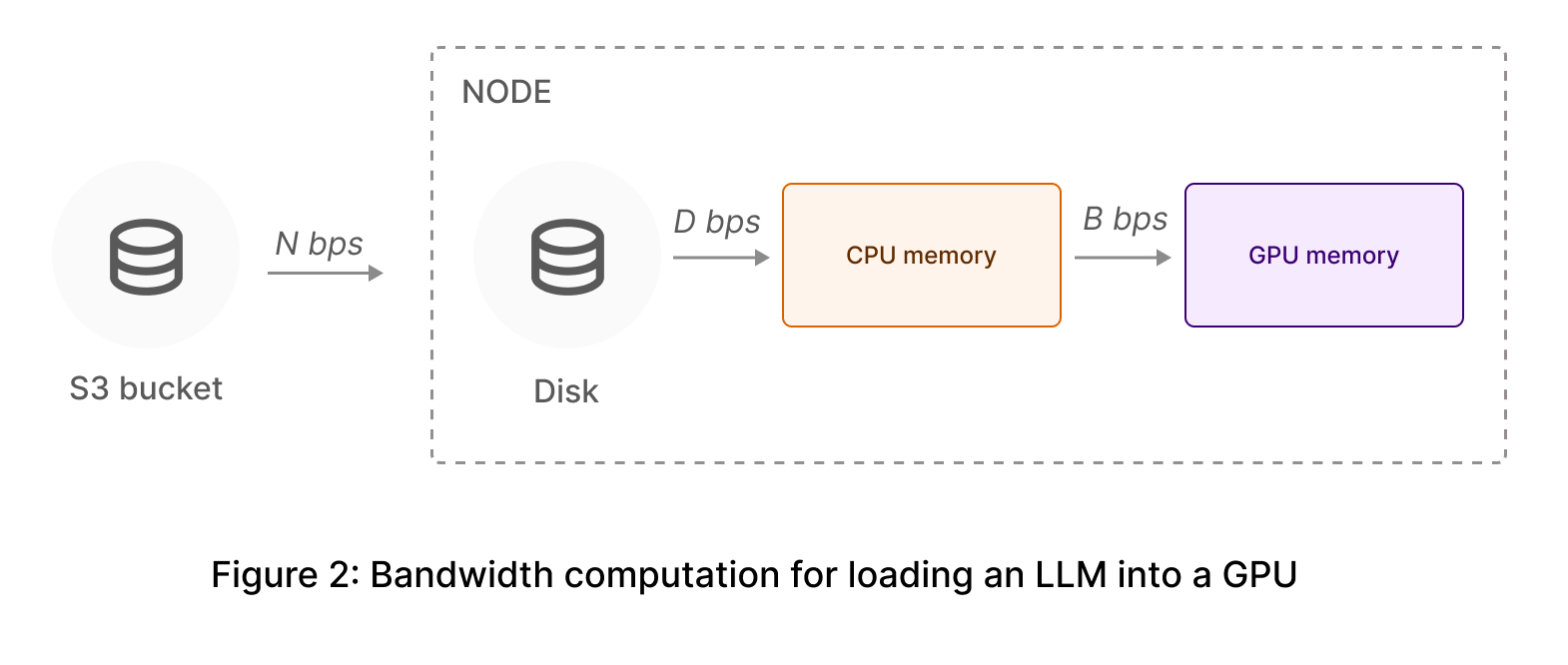

Suppose the bandwidth between S3 and the node is N, the disk write and read bandwidths are D_write and D_read, the bandwidth between CPU memory and GPU memory is B, and the model size is M. Then the cold start should theoretically finish within M / min(N, D_write) + M / D_read + M / B. The download bandwidth is min(N, D_write) because downloading and writing to disk are streamed together.

Let’s use p4de as an example. With p4de, N is 10 GB/s and B is 16 GB/s for PCIe 4.0 x8. The disk bandwidth D can be 16 GB/s, but in practice it’s only 5 GB/s. For Llama 2 70B, the theoretical status quo is therefore 130 GB / 5 GB/s (S3 -> disk) + 130 GB / 5 GB/s (disk -> CPU) + 130 GB / 16 GB/s (CPU -> GPU), or 26s + 26s + 8s, which is about 60s. In reality it’s worse, because the download is too slow to saturate the disk bandwidth.
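The estimate above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the function and parameter names are ours, and the bandwidth figures are the ones quoted in the paragraph above.

```python
def cold_start_seconds(model_gb, net_gb_s, disk_write_gb_s, disk_read_gb_s, pcie_gb_s):
    """Sequential cold start: each stage waits for the previous one to finish."""
    download = model_gb / min(net_gb_s, disk_write_gb_s)  # S3 -> disk (streamed)
    read = model_gb / disk_read_gb_s                       # disk -> CPU memory
    copy = model_gb / pcie_gb_s                            # CPU memory -> GPU
    return download + read + copy


# Llama 2 70B on p4de, using the numbers from the text (GB and GB/s).
estimate = cold_start_seconds(
    model_gb=130, net_gb_s=10, disk_write_gb_s=5, disk_read_gb_s=5, pcie_gb_s=16
)
print(f"{estimate:.1f} s")  # prints "60.1 s"
```

Changing a single argument shows which stage dominates: with these numbers, the two disk-bound stages account for 52 of the roughly 60 seconds.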

In the following sections, we’ll see how we fix each stage and get the model loaded in a short time.

Fetch the data from S3 to the node

The built-in downloader in Hugging Face's transformers library is very slow because it doesn't download in parallel, so the CPU becomes the bottleneck. vLLM [3] uses the same Hugging Face library to download the data, but it starts multiple Ray workers to fetch it, so the overall performance is better than with a single process. In our benchmark on p4de.24xlarge, vLLM downloads Llama 2 70B in 127s, far better than the 600s taken by transformers.

But this still doesn't fully utilize the network bandwidth provided by EC2. For example, p4de.24xlarge is equipped with 4 NICs, each with 100 Gbps of throughput. To make the downloader faster, each process needs multiple threads downloading data from S3. Tools like s5cmd or AWS CLI v2 can fetch data with multiple threads, giving you much higher throughput.
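The idea behind multi-threaded downloading can be sketched as follows. This is an illustration, not how s5cmd or AWS CLI v2 are implemented: `plan_ranges`, `parallel_download`, and `fake_fetch` are hypothetical names, and an in-memory byte string stands in for the remote object so the example runs without S3. Against a real object store, `fetch_range` would issue a ranged GET for each byte range.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_ranges(size, chunk_size):
    """Split [0, size) into inclusive (start, end) byte ranges."""
    return [
        (start, min(start + chunk_size, size) - 1)
        for start in range(0, size, chunk_size)
    ]


def parallel_download(fetch_range, size, chunk_size, threads):
    """Fetch all ranges concurrently and reassemble them in file order."""
    ranges = plan_ranges(size, chunk_size)
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # map() yields results in input order, so the parts line up.
        parts = pool.map(lambda r: fetch_range(*r), ranges)
        return b"".join(parts)


# Stand-in for a remote object.
blob = bytes(range(256)) * 100  # 25,600 bytes


def fake_fetch(start, end):
    # Mimics an HTTP "Range: bytes=start-end" request (end is inclusive).
    return blob[start:end + 1]


data = parallel_download(fake_fetch, len(blob), chunk_size=4096, threads=8)
assert data == blob
```

Because each range is independent, throughput scales with the number of threads until the network, the CPU, or the destination becomes the limit.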

Given one NIC with 100 Gbps of bandwidth, the theoretical write speed should reach 100 Gbps (i.e., 12.5 GB/s), but in reality, even with these tools, it's much lower because the disk sometimes becomes the bottleneck. Although the AWS blog [4] claims 8 GB/s of bandwidth on p4de.24xlarge, in our experiments with AWS CLI v2 we reached at most 2.8 GB/s when downloading Llama 2 70B to the NVMe SSD, a premium storage device with read/write speeds of a few GB/s. However, when downloading directly to memory by writing to /dev/shm, throughput reaches 4.5 GB/s. That's still below the ideal throughput, but much higher than writing to disk.

Loading models into the GPU

After the model is downloaded to the local node, it needs to be loaded into the GPU. The default PyTorch format uses pickle [5] to serialize the tensors into .bin files and later unpickles them to deserialize. This means the model has to be deserialized into CPU memory first and then moved to the GPU.

Most of a model consists of tensors, and tensors are very simple: each one is composed of metadata and a blob holding the actual data, so it can be stored as raw values plus metadata. Safetensors is a new, simple format for storing tensors safely, with a library provided by Hugging Face [6]. In the original Hugging Face implementation, the metadata is stored at the beginning of the file, along with the offsets of the data buffers in the file. When loading, the library uses mmap to map the file into CPU memory and then copies the data to GPU memory once it is loaded as a CPU tensor. The CPU part can be optimized further by doing it differently: first, retrieve the metadata and use it to construct a GPU tensor; after that, all that remains is to copy the data from disk to the GPU, which can be done in a streaming way.
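To make the layout concrete, here is a minimal sketch that writes and reads a safetensors-style buffer using only the standard library. It relies on the published layout (an 8-byte little-endian header length, a JSON header with `dtype`, `shape`, and `data_offsets` per tensor, then the raw data). The helpers `build_file` and `read_header` are ours for illustration and are not part of the safetensors library, which is what real code should use.

```python
import json
import struct


def build_file(tensors):
    """Serialize {name: (dtype, shape, raw_bytes)} in the safetensors layout."""
    header, payload, offset = {}, b"", 0
    for name, (dtype, shape, raw) in tensors.items():
        header[name] = {
            "dtype": dtype,
            "shape": shape,
            # Offsets are relative to the start of the data section.
            "data_offsets": [offset, offset + len(raw)],
        }
        payload += raw
        offset += len(raw)
    header_bytes = json.dumps(header).encode("utf-8")
    return struct.pack("<Q", len(header_bytes)) + header_bytes + payload


def read_header(buf):
    """Return (metadata dict, byte offset where the tensor data begins)."""
    (header_len,) = struct.unpack("<Q", buf[:8])
    meta = json.loads(buf[8:8 + header_len])
    return meta, 8 + header_len


raw = struct.pack("<4f", 1.0, 2.0, 3.0, 4.0)  # a 2x2 float32 tensor
buf = build_file({"w": ("F32", [2, 2], raw)})

meta, data_start = read_header(buf)
begin, end = meta["w"]["data_offsets"]
values = struct.unpack("<4f", buf[data_start + begin:data_start + end])
print(meta["w"]["shape"], values)
```

Only the small header has to be parsed before the destination tensor can be allocated with the right dtype and shape. The data section can then be copied range by range, which is what makes streaming possible.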

Streaming to the rescue

After fixing each part, we should reach the theoretical throughput, but this is still slow. The problem is that each step can begin only once the previous step has fully finished. In the cold start case, loading therefore takes at least (M / min(N, D)) + (M / D) + (M / B), which is about 60s from the above analysis. It also requires holding enough CPU memory to store the model, which can be a lot for a large model.

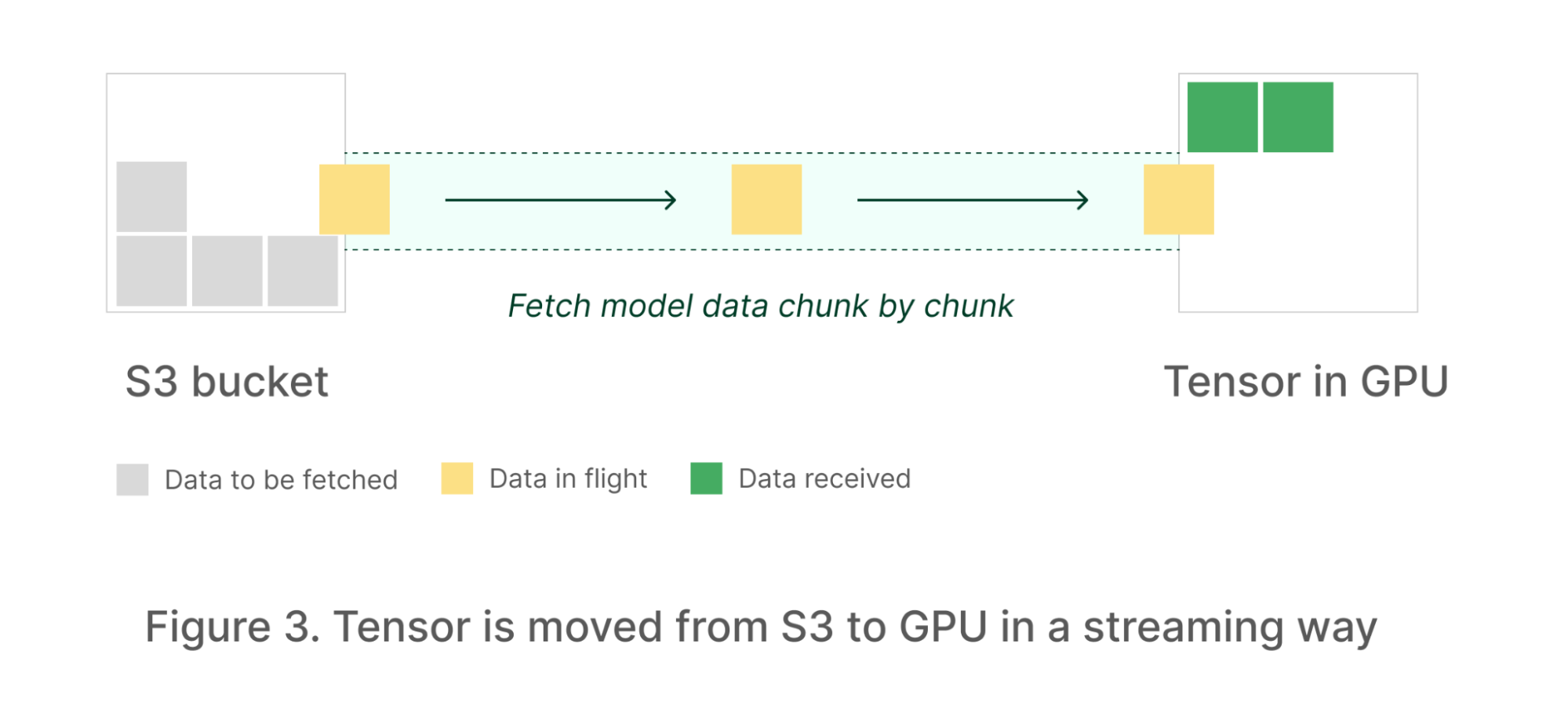

To resolve this problem, we should avoid storing the data on disk and instead load it directly into CPU memory. Because a tensor consists of metadata and a memory blob, this is certainly possible with safetensors. We can take it a step further by copying each chunk of data from S3 directly into GPU memory.

When loading from S3, the library fetches the data chunk by chunk and writes it directly to preallocated memory on the GPU. Optionally, it can also write the data to disk in a separate asynchronous thread for caching, so that later loads can read directly from disk. This is useful when the bandwidth between S3 and the node is lower than the disk bandwidth. With this approach, we don’t need to wait for the download to finish before moving data into the GPU. In other words, we stream the data from S3 to GPU memory.

In this way, we can achieve the following:

- The CPU memory used is very small: O(C * T), where C is the chunk size and T is the number of download threads. In practice, we use 250 threads and an 8MB chunk size, so about 2GB of CPU memory in total.

- Data is copied directly to the GPU, over a link whose bandwidth is usually much higher than the network bandwidth. For example, PCIe v4.0 can reach up to 32GB/s [8]. This also removes the disk as a bottleneck.

- If the bandwidth from S3 to the local node is lower than the bandwidth from disk to CPU, the data can be cached on disk for later use, which removes the network as a bottleneck on later loads.
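The streaming scheme can be sketched in plain Python. This is an illustration under stated assumptions, not the Anyscale implementation: `stream_load` is a hypothetical name, a `bytearray` stands in for the preallocated GPU buffer, a second `bytearray` stands in for the on-disk cache, and the chunk size and thread count are scaled down from the 8MB and 250 threads mentioned above.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor

CHUNK = 8 * 1024  # 8 KiB here; the post uses 8MB
THREADS = 4       # the post uses 250


def stream_load(fetch_range, size, dest, cache=None):
    """Fetch chunks concurrently and write each one into its slot in dest."""
    cache_q = queue.Queue()  # unbounded in this sketch

    def cache_writer():
        # Runs off the critical path and persists chunks for later warm starts.
        while (item := cache_q.get()) is not None:
            offset, chunk = item
            cache[offset:offset + len(chunk)] = chunk

    writer = None
    if cache is not None:
        writer = threading.Thread(target=cache_writer)
        writer.start()

    def worker(start):
        end = min(start + CHUNK, size)
        chunk = fetch_range(start, end)  # at most CHUNK bytes per thread
        dest[start:end] = chunk          # write straight to the final location
        if cache is not None:
            cache_q.put((start, chunk))

    with ThreadPoolExecutor(max_workers=THREADS) as pool:
        list(pool.map(worker, range(0, size, CHUNK)))

    if writer is not None:
        cache_q.put(None)  # sentinel: no more chunks
        writer.join()


source = bytes(range(256)) * 200  # stand-in for the object in S3
gpu_buffer = bytearray(len(source))
disk_cache = bytearray(len(source))
stream_load(lambda s, e: source[s:e], len(source), gpu_buffer, disk_cache)

assert gpu_buffer == source and disk_cache == source
print(THREADS * CHUNK, "bytes of download buffers in flight at most")
```

Each thread holds at most one chunk at a time, so the download buffers total C * T. With the values from the text, 250 threads times 8MB is 2,000MB, or about 2GB. A production version would likely bound the cache queue as well, so that a slow disk cannot cause chunks to pile up in memory.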

Once all this is implemented, uploading works the same way with the direction reversed: the library reads the data from GPU memory chunk by chunk and streams it to S3 in order.

With this design, the remaining bottleneck is the bandwidth between S3 and EC2, which is very high within AWS. To take advantage of it, we propose Anyscale Model Loader as a solution.

Anyscale Model Loader

At Anyscale, we have built Anyscale Model Loader (AML) to load models more efficiently. Its downloader pulls data from S3 concurrently with more than 250 threads, each holding an 8MB buffer. Once a thread finishes fetching a chunk, it writes the chunk directly to the GPU buffer and then starts fetching the next one. AML is fully integrated with vLLM for loading and is used in Anyscale Endpoints.

Benchmark and measurements

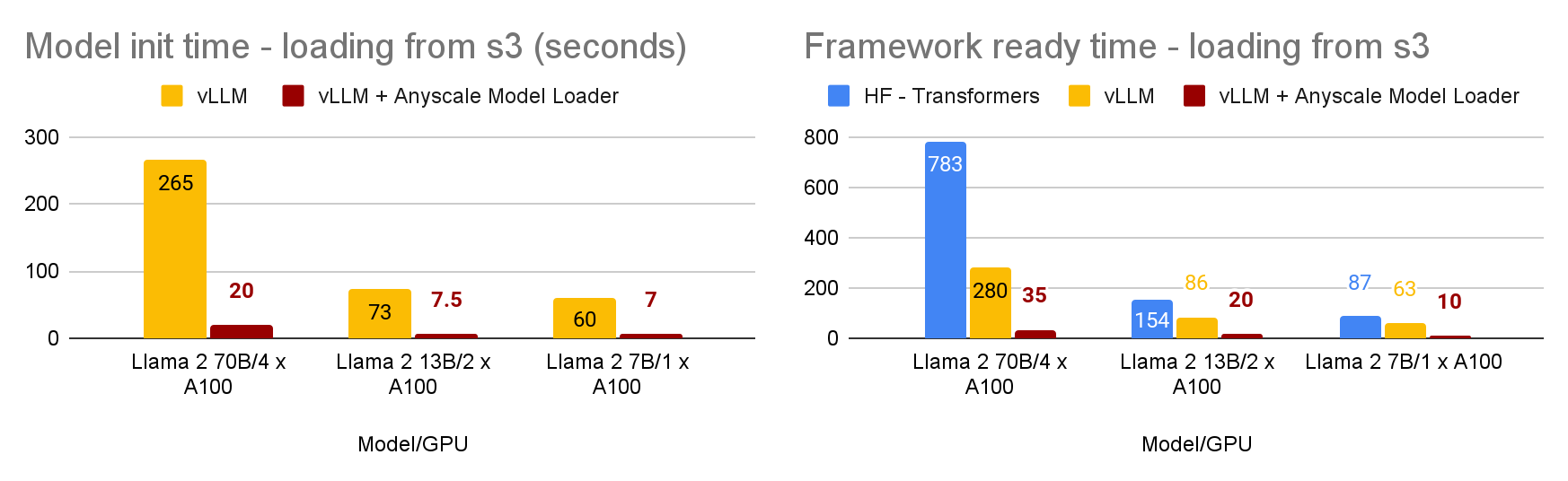

The benchmark was run with Llama 2 models on p4de.24xlarge. When the framework starts, additional time is spent setting up the environment, including NCCL clusters and Ray actors. Because we focus on optimizing the model loading process, we use model initialization time as one of the metrics. Model initialization covers creating the model from the model class and loading all weights into the GPU. Framework ready time is measured as well.

The version of vLLM used in this testing is v0.1.4 and the version of transformers is v4.31.0. For transformers, safetensors are used.

As the charts above indicate, model initialization with Anyscale Model Loader is much faster than with either the vLLM built-in loader or the HF transformers library.

Another observation is that the framework overhead becomes noticeable once the loading part is faster. Now, 50% of the time is spent on work other than initializing the model and downloading the weights.

Conclusions

In this blog, we discussed what model loading is, why it matters, and which bottlenecks keep it from being fast and cost-efficient. We showed that with Anyscale Model Loader, we can achieve a speedup of over 20x. You can try it out with Anyscale Endpoints today!

References

[1] AWS P4de instances: https://aws.amazon.com/ec2/instance-types/p4/

[2] Anyscale Platform: https://www.anyscale.com/platform

[3] vLLM: https://github.com/vllm-project/vllm

[4] Amazon EC2 P4d instances deep dive: https://aws.amazon.com/blogs/compute/amazon-ec2-p4d-instances-deep-dive/

[5] Pickle, Python object serialization: https://docs.python.org/3/library/pickle.html

[6] Safetensors: https://huggingface.co/docs/safetensors/index

[7] Row- and column-major order: Wikipedia

[8] PCIe Explained: https://www.kingston.com/en/blog/pc-performance/pcie-gen-4-explained
