Ray 2.8 features Ray Data extensions, AWS Neuron cores support, and Dashboard improvements

The Ray 2.8 release focuses on enhancements and improvements across the Ray ecosystem. In this blog, we cover a few key highlights, including:

Reading external data sources like BigQuery and Databricks tables

Supplying Ray Data metrics on the Ray Dashboard

Adding AWS Neuron core accelerators

Profiling GPU tasks or actors using NVIDIA Nsight

Reading external data stores with Ray Data

Ray Data now supports reading from external data stores such as BigQuery and Databricks tables. To read from BigQuery, install the Python Client for Google BigQuery and the Python Client for Google BigQuery Storage. After installation, you can read data with read_bigquery() by providing the project ID and either a dataset or a query.

    import ray

    # Read the entire dataset (do not specify query)
    ds = ray.data.read_bigquery(
        project_id="my_gcloud_project_id",
        dataset="bigquery-public-data.ml_datasets.iris",
    )

    # Read from a SQL query of the dataset (do not specify dataset)
    ds = ray.data.read_bigquery(
        project_id="my_gcloud_project_id",
        query="SELECT * FROM `bigquery-public-data.ml_datasets.iris` LIMIT 50",
    )

    # Write back to BigQuery
    ds.write_bigquery(
        project_id="my_gcloud_project_id",
        dataset="destination_dataset.destination_table",
    )
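The two read calls above differ only in whether `dataset` or `query` is supplied. A minimal sketch of a helper that makes that choice explicit follows. `bigquery_read_kwargs` is a hypothetical name introduced here for illustration, not part of Ray, and rejecting calls that pass both or neither argument is an assumption drawn from the comments in the example rather than from Ray's documented behavior.

```python
from typing import Optional


def bigquery_read_kwargs(
    project_id: str,
    dataset: Optional[str] = None,
    query: Optional[str] = None,
) -> dict:
    """Build keyword arguments for ray.data.read_bigquery().

    Hypothetical helper: requires exactly one of `dataset` or `query`,
    mirroring the "do not specify" comments in the example above.
    """
    if (dataset is None) == (query is None):
        raise ValueError("Pass exactly one of `dataset` or `query`.")
    kwargs = {"project_id": project_id}
    if dataset is not None:
        kwargs["dataset"] = dataset
    else:
        kwargs["query"] = query
    return kwargs


# Usage (needs Ray plus the BigQuery client libraries and credentials):
#   import ray
#   ds = ray.data.read_bigquery(**bigquery_read_kwargs(
#       project_id="my_gcloud_project_id",
#       dataset="bigquery-public-data.ml_datasets.iris",
#   ))
```

Keeping the argument check separate from the Ray call means it can be unit tested without a cluster or cloud credentials.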

Similarly, you can read Databricks tables by calling ray.data.read_databricks_tables(), which reads from a Databricks SQL warehouse.

    import ray

    dataset = ray.data.read_databricks_tables(
        warehouse_id="a885ad08b64951ad",  # Databricks SQL warehouse ID
        catalog="catalog_1",  # Unity catalog name
        schema="db_1",  # Schema name
        query="SELECT title, score FROM movie WHERE year >= 1980",
    )

These capabilities extend Ray Data to ingest structured data from external data stores, supplementing last-mile ingestion for machine learning training.
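As a rough illustration of last-mile preprocessing, the sketch below defines a row-wise transform that could be applied with `Dataset.map()` to the result of the `movie` query above. The column names `title` and `score` come from that query. The function name `normalize_score` and the assumption that scores lie on a 0 to 10 scale are hypothetical.

```python
def normalize_score(row: dict, max_score: float = 10.0) -> dict:
    """Return a copy of the row with an added `score_norm` column in [0, 1].

    Assumes `score` is numeric and on a 0..max_score scale (hypothetical).
    """
    clipped = min(max(float(row["score"]), 0.0), max_score)
    return {**row, "score_norm": clipped / max_score}


# Usage with Ray Data (requires Ray; `dataset` is the result of
# read_databricks_tables() above):
#   prepared = dataset.map(normalize_score)
#   for batch in prepared.iter_batches(batch_size=256):
#       ...  # feed batches to training
```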

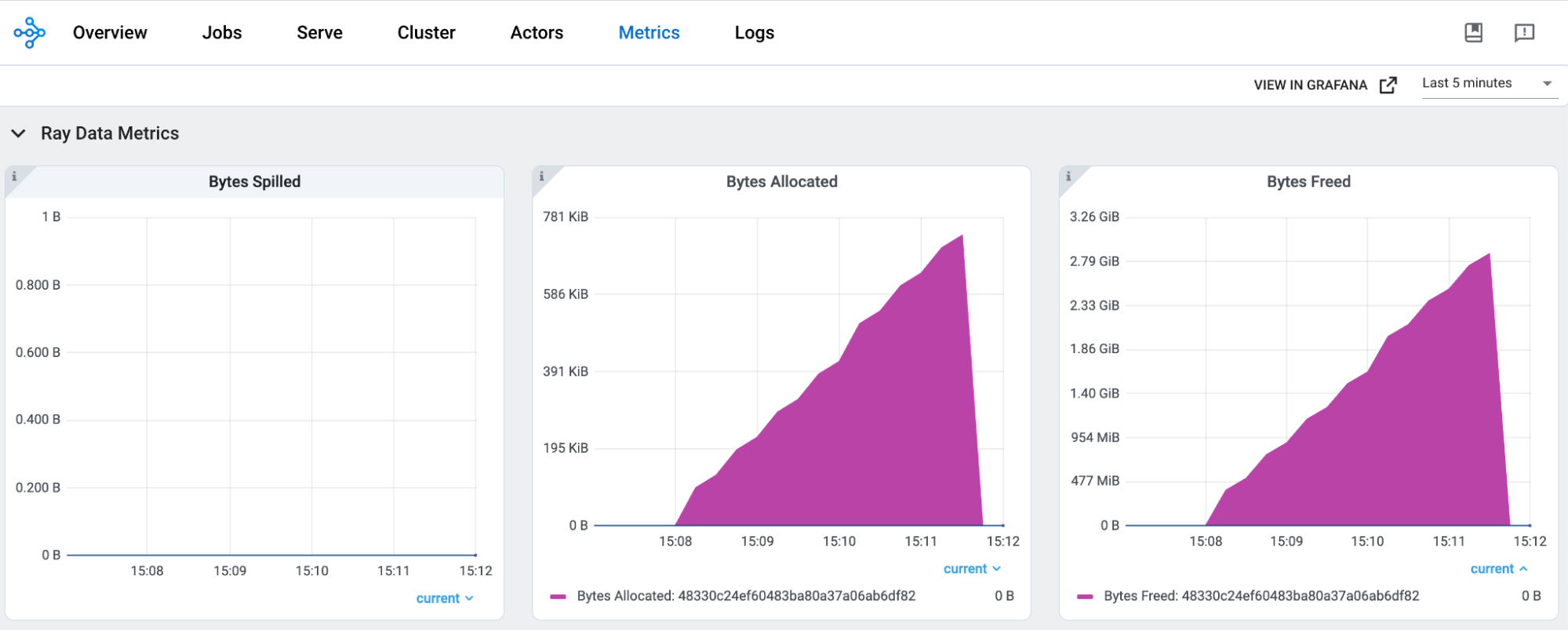

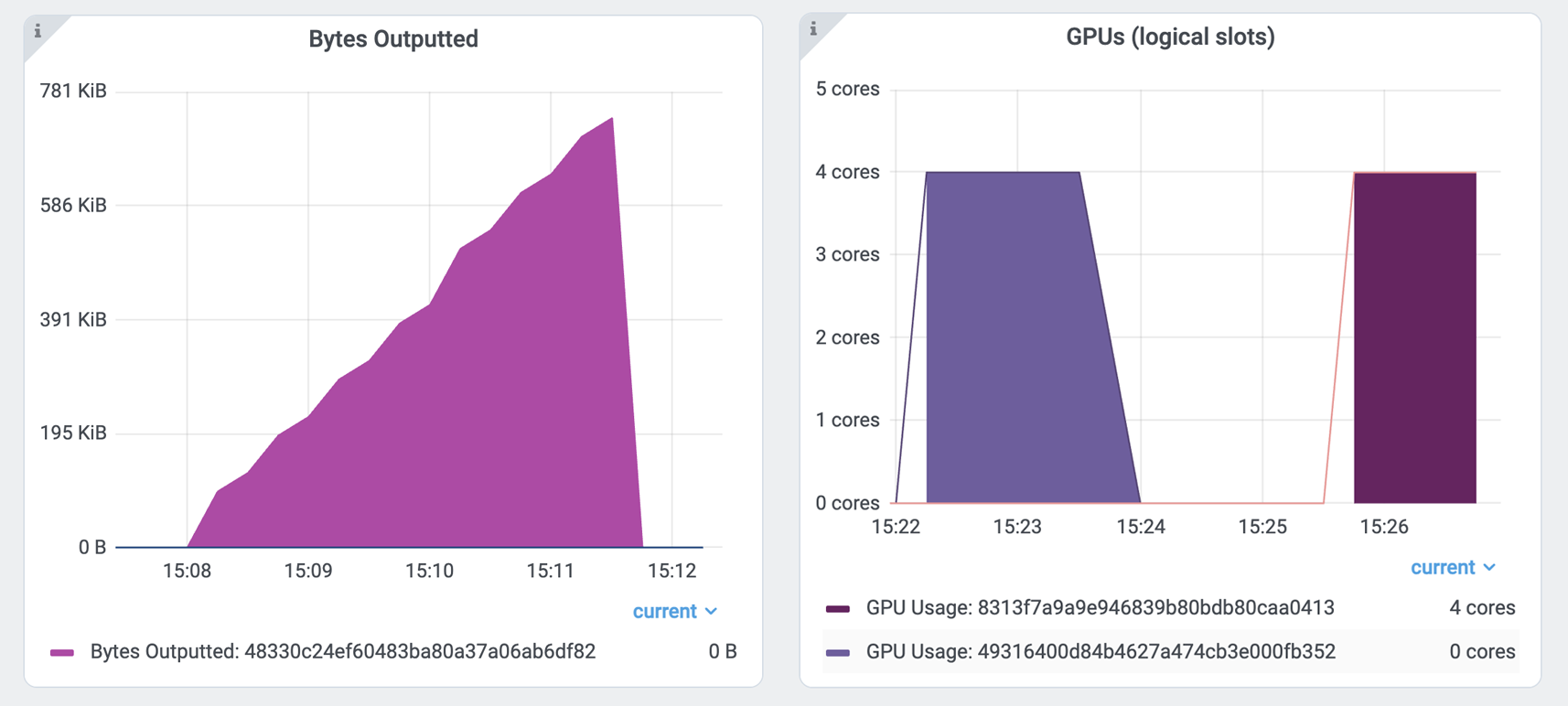

Supplying Ray Data Metrics on the Ray Dashboard

You can monitor Ray Data consumption in real time, including metrics such as bytes spilled, consumed, allocated, outputted, and freed, as well as CPU and GPU usage, during operations such as map(), map_batches(), take_batch(), and read_data().

The Ray Data metrics below are emitted from Ray Data examples run on an Anyscale cluster. For setup instructions, read the Ray Dashboard documentation and series of videos.

Adding AWS Neuron core accelerators (experimental)

Ray supports a range of GPU accelerators to improve the performance and efficiency of heterogeneous training and batch processing. As an experimental feature, Ray can also schedule Tasks and Actors on AWS Neuron core accelerators when you specify them as resources in the @ray.remote(...) decorator. For example:

    import os

    import ray
    from ray.util.accelerators import AWS_NEURON_CORE

    # On a trn1.2xlarge instance, there are 2 Neuron cores.
    ray.init(resources={"neuron_cores": 2})

    @ray.remote(resources={"neuron_cores": 1})
    class NeuronCoreActor:
        def info(self):
            ids = ray.get_runtime_context().get_resource_ids()
            print("neuron_core_ids: {}".format(ids["neuron_cores"]))
            print(f"NEURON_RT_VISIBLE_CORES: {os.environ['NEURON_RT_VISIBLE_CORES']}")

    @ray.remote(resources={"neuron_cores": 1}, accelerator_type=AWS_NEURON_CORE)
    def use_neuron_core_task():
        ids = ray.get_runtime_context().get_resource_ids()
        print("neuron_core_ids: {}".format(ids["neuron_cores"]))
        print(f"NEURON_RT_VISIBLE_CORES: {os.environ['NEURON_RT_VISIBLE_CORES']}")

    neuron_core_actor = NeuronCoreActor.remote()
    ray.get(neuron_core_actor.info.remote())
    ray.get(use_neuron_core_task.remote())

This addition extends Ray’s support to a wider array of accelerators suited to large language model fine-tuning, inference, and other compute-intensive batch processing.
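Inside a task or actor, the `NEURON_RT_VISIBLE_CORES` value printed in the example can be turned into a list of core indices. The sketch below is a hypothetical helper (`parse_visible_cores` is not part of Ray or the Neuron SDK) and assumes the value is a comma-separated list of indices or inclusive ranges, such as `0,1` or `0-3`. Check the format your runtime actually sets before relying on it.

```python
import os
from typing import List, Optional


def parse_visible_cores(value: Optional[str] = None) -> List[int]:
    """Parse a NEURON_RT_VISIBLE_CORES-style string into sorted core indices.

    Assumed format (not verified against every runtime version):
    comma-separated integers and/or inclusive ranges, e.g. "0,1" or "0-3".
    Falls back to the environment variable when `value` is None.
    """
    if value is None:
        value = os.environ.get("NEURON_RT_VISIBLE_CORES", "")
    cores = set()
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            # Inclusive range such as "0-3".
            start, end = (int(p) for p in part.split("-", 1))
            cores.update(range(start, end + 1))
        else:
            cores.add(int(part))
    return sorted(cores)
```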

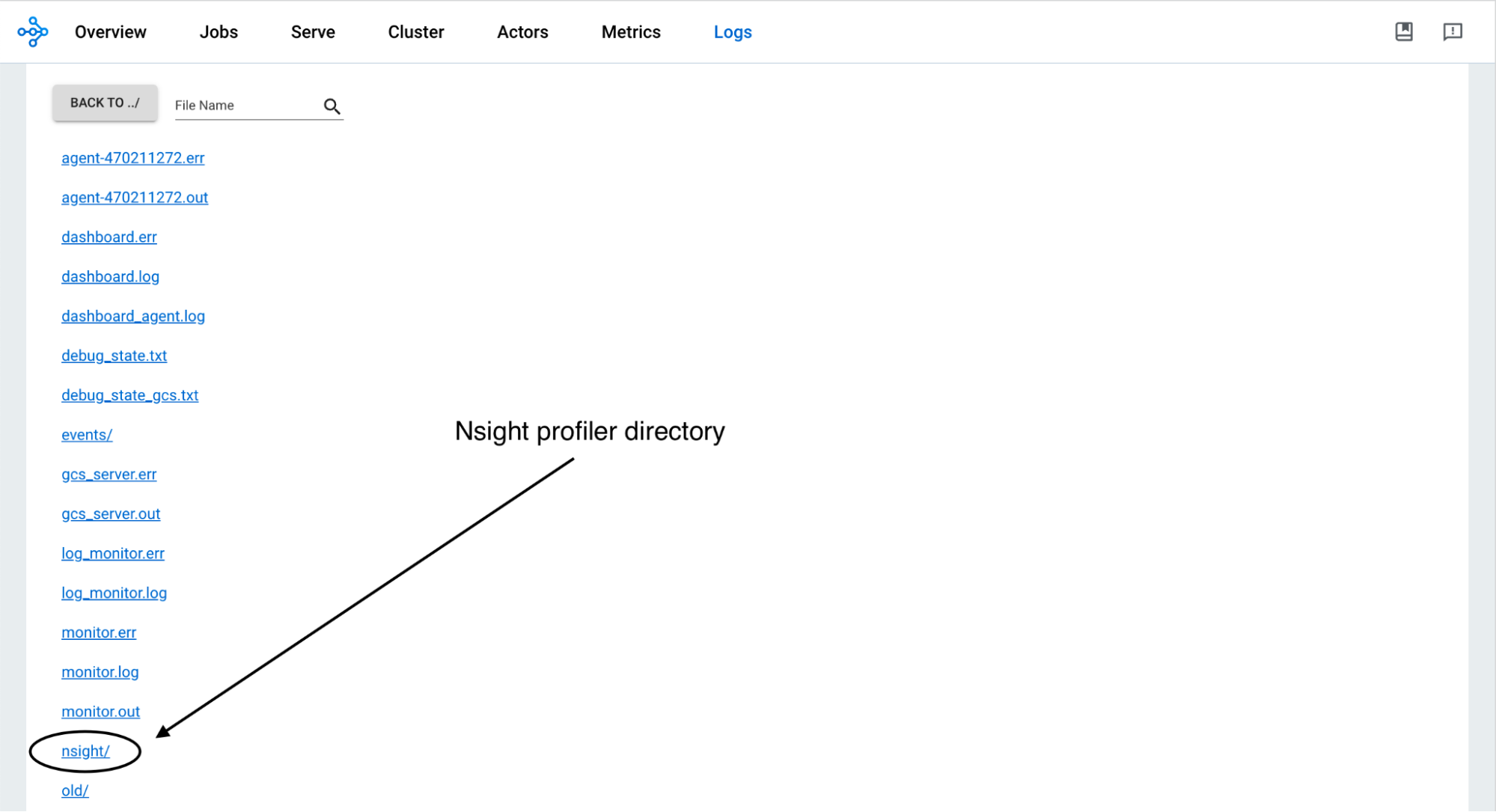

Profiling GPU tasks or actors with NVIDIA Nsight

Observability is crucial for distributed systems. It provides a means to assess job progress and resource consumption, offering insights into overall health. Profiling GPU-bound tasks and actors is one lens through which to view your job’s health and progress.

NVIDIA Nsight Systems is now natively supported on Ray. For brevity, we refer you to our Ray GPU profiling documentation for how to install, configure, customize, and run it, and how to display its results on the Ray Dashboard.

To enable GPU profiling for your Ray Actors, specify the config in the runtime_env as follows:

    import torch
    import ray

    ray.init()

    @ray.remote(num_gpus=1, runtime_env={"nsight": "default"})
    class RayActor:
        def run(self):
            # Multiply two small tensors element-wise on the GPU.
            a = torch.tensor([1.0, 2.0, 3.0]).cuda()
            b = torch.tensor([4.0, 5.0, 6.0]).cuda()
            c = a * b

            print("Result on GPU:", c)

    ray_actor = RayActor.remote()
    # The Actor or Task process runs with: "nsys profile [default options] ..."
    ray.get(ray_actor.run.remote())
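The profiling configuration can also be customized. As a sketch, assuming the `nsight` entry accepts a dictionary of `nsys profile` options in place of `"default"` (see the Ray GPU profiling documentation for the supported form), the snippet below shows what such a dictionary might look like and how it could map to command-line flags. The helper `to_nsys_flags` is hypothetical and only illustrates the mapping assumed here, in which single-letter keys become `-k value` and longer keys become `--key=value`. It is not Ray's implementation, and the option values are examples.

```python
from typing import Dict, List

# Example options only; keys are intended to mirror `nsys profile` flags.
custom_nsight_options: Dict[str, str] = {
    "t": "cuda,cudnn,cublas",     # APIs to trace
    "cuda-memory-usage": "true",  # track GPU memory usage
}

# Assumed shape of a runtime_env carrying custom options instead of "default".
runtime_env = {"nsight": custom_nsight_options}


def to_nsys_flags(options: Dict[str, str]) -> List[str]:
    """Illustrative mapping from an options dict to nsys-style CLI flags."""
    flags: List[str] = []
    for key, value in options.items():
        if len(key) == 1:
            flags += [f"-{key}", value]
        else:
            flags.append(f"--{key}={value}")
    return flags


print(["nsys", "profile", *to_nsys_flags(custom_nsight_options)])
```

Under these assumptions, the dictionary would be passed as `@ray.remote(num_gpus=1, runtime_env=runtime_env)` in place of the `"default"` setting shown earlier.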

Conclusion

With each release of Ray, we strive toward ease of use, performance, and stability. This release, like previous ones, moves toward that end by:

Extending Ray Data to read from common data stores such as BigQuery and Databricks tables, so that you can bring additional structured data into the ingestion pipelines that train your models

Augmenting the Ray Dashboard with additional metrics to examine your Ray Data operations

Adding experimental support for AWS Neuron core accelerators to bolster training and batch processing, extending the choice of resources you can target for scheduling

Profiling GPU usage by Ray Tasks and Actors with NVIDIA Nsight, adding another lens on the health and progress of your Ray jobs

Thank you to everyone who contributed to Ray 2.8. Try the latest release with "pip install ray[default]" and share your feedback on GitHub or Discuss. We appreciate your ongoing support.

Join our Ray Community and the Ray #LLM Slack channel.

What’s Next?

Stay tuned for additional Ray blogs. Meanwhile, take a peek at the following material for Ray edification:

Dive deep into the Ray Data user guides

Walk through the Ray Serve tutorials, including batching and streaming examples

Peruse the new Ray example gallery