Benchmarking Multimodal AI Workloads on Ray Data

Multimodal AI workloads are pushing the boundaries of today’s infrastructure, demanding systems that can handle multimodal data (text, images, audio, video), high-volume throughput pipelines, and CPU and GPU scheduling at scale.

Ray Data is a data processing engine targeted towards these workloads. It has the following key features:

A streaming batch execution model, which effectively pipelines execution across heterogeneous CPU and GPU resources, maximizing resource utilization and reducing costs for multimodal AI workloads.

Ecosystem Integrations: performant, first-class integration with key AI projects such as vLLM, SGLang, Lance, and PyArrow to efficiently process multimodal data, whether that’s preprocessing data for training or running batch inference with cutting-edge AI models.

Built for scale: Ray Data can independently scale CPU and GPU workers with no code changes while offering first-class support for fault tolerance and autoscaling. Code runs unchanged from one machine to hundreds of nodes processing hundreds of TB of data.

Recently, we’ve seen interest in the community for performance benchmarks comparing Ray Data with alternative solutions in the Ray ecosystem such as Daft, a distributed DataFrame library. Daft’s choice to build on Ray underscores the strength of the ecosystem, and we’re excited to see their innovations expand it.

The Daft team recently published benchmarks comparing their new Flotilla execution engine with Ray Data and EMR Spark. In reproducing these results, we’ve observed that compared to Daft, Ray Data is:

30% faster, on average, when processing multimodal AI workloads.

3x faster when GPU utilization is increased by reducing CPU starvation, e.g. in image batch inference.

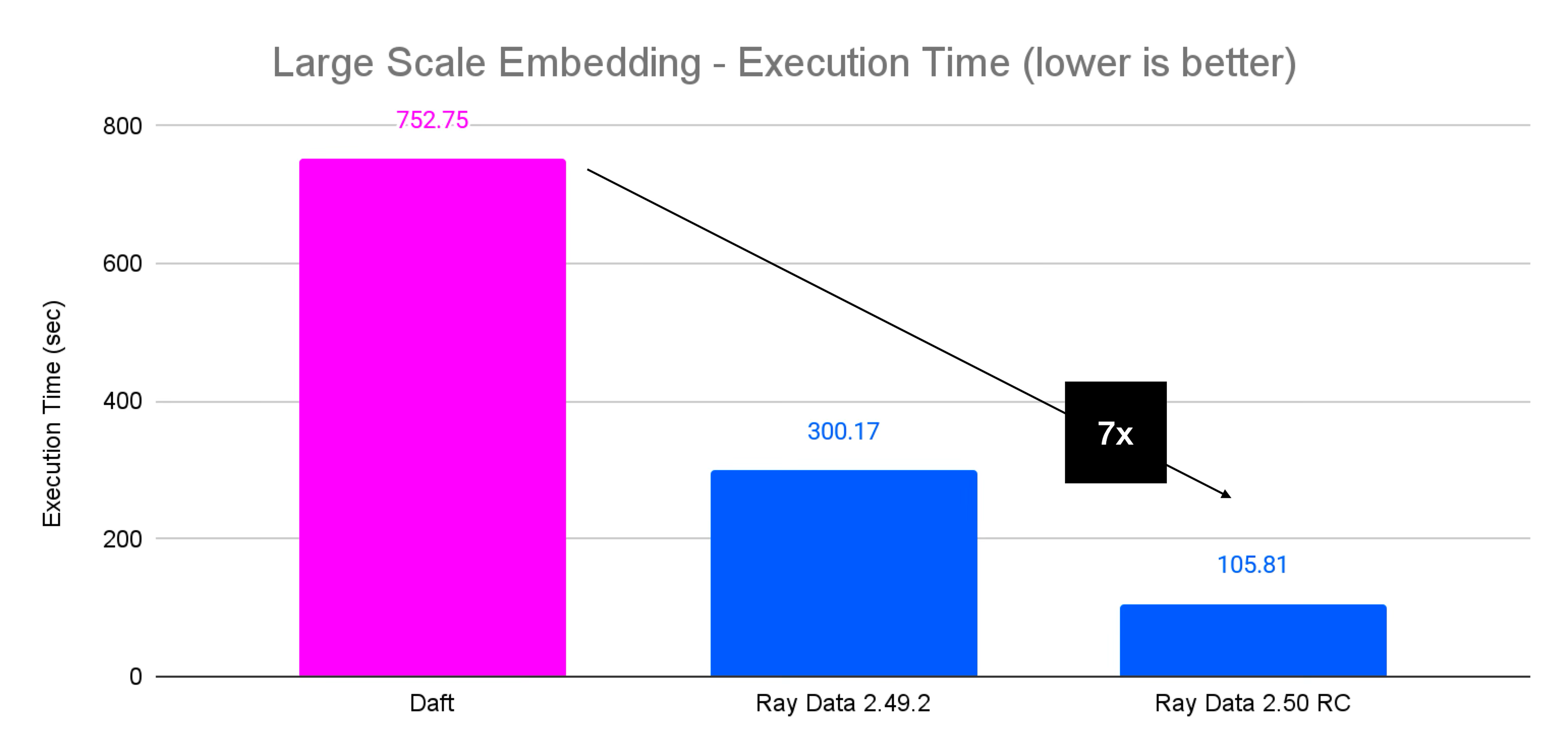

7x more efficient on large, production-scale workloads.

More details on each of these below.

Reproducing the Ray Data vs. Daft benchmarks

To test and better understand the limitations of the systems ourselves, we ran similar workloads under comparable settings to those run by the Daft team.

Here’s a quick summary of the workloads that we ran:

Image Classification: Processing 800k ImageNet images using ResNet18. The pipeline downloads images, deserializes them, applies transformations, runs ResNet18 inference on GPU, and outputs predicted labels.

Document Embedding: Processing 10k PDF documents from Digital Corpora. The pipeline reads PDF documents, extracts text page-by-page, splits into chunks with overlap, embeds using an all-MiniLM-L6-v2 model on GPU, and outputs embeddings with metadata.

Audio Transcription: Transcribing 113,800 audio files from Mozilla Common Voice 17 dataset using a Whisper-tiny model. The pipeline loads FLAC audio files, resamples to 16kHz, extracts features using Whisper’s processor, runs GPU-accelerated batch inference with the model, and outputs transcriptions with metadata.

Video Object Detection: Processing 10k video frames from Hollywood2 action videos dataset using YOLO11n for object detection. The pipeline loads video frames, resizes them to 640x640, runs batch inference with YOLO to detect objects, extracts individual object crops, and outputs object metadata and cropped images in Parquet format.
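
To make the "splits into chunks with overlap" step of the document embedding pipeline concrete, here is a minimal sketch of character-based chunking. The function name, the chunk size, and the overlap are hypothetical choices for illustration; the splitter and settings used in the actual benchmark scripts are not shown in this post.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character chunks where consecutive chunks share `overlap` chars.

    ASSUMPTION: sizes are in characters and purely illustrative.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

Overlap means each chunk repeats the tail of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of embedding some text twice.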

Across these multimodal AI workloads, we observed an overall average speedup of roughly 30% comparing Daft 0.6.2 and Ray Data 2.50 (release candidate).

Figure 1. Daft vs. Ray Data Processing Times

In general, we were unable to access the provided datasets from the original Daft benchmarks, so we followed the published methodology and made best-effort reproductions of the datasets available. Below, we share our findings from the process of reproducing each of these workloads.

Audio Transcription

The audio transcription workload used a small Whisper model to generate audio transcriptions from a voice dataset.

In this workload, we were unable to access the provided Daft dataset, so we followed the published methodology -- taking the Mozilla Common Voice dataset from HuggingFace and uploading a subset of files onto S3.

Data: Mozilla Common Voice 17 en (113,800 files)

Path: s3://air-example-data/common_voice_17/parquet/

Model: openai/whisper-tiny

Cluster: g6.xlarge head / 8 g6.xlarge workers

We made no changes to the Daft benchmark script provided by the Daft team.

For the Ray Data script, we made one change: dropping columns once they are no longer needed. This puts more burden on the user to keep track of which columns to drop and manage, but in Ray 2.51, this will be automatically handled by Ray Data.

The numbers below are taken from an average across 4 runs. We also ran 1 warmup run to download the model and remove any startup overheads that would affect the result.
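
The measurement protocol described here (one untimed warmup run, then an average over several timed runs) can be sketched as a small harness. This is an illustration of the protocol only, not the script used for the benchmarks; `benchmark` and `placeholder_workload` are hypothetical names.

```python
import statistics
import time
from typing import Callable


def benchmark(workload: Callable[[], None], runs: int = 4, warmup: int = 1) -> dict:
    """Run `workload` untimed `warmup` times, then time it `runs` times."""
    for _ in range(warmup):
        workload()  # not timed: absorbs model downloads and other one-time startup costs

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)

    return {
        "timings": timings,
        "mean": statistics.mean(timings),
        "stdev": statistics.stdev(timings) if len(timings) > 1 else 0.0,
    }


calls = []


def placeholder_workload() -> None:
    # ASSUMPTION: stand-in for launching a full pipeline run.
    calls.append(1)


result = benchmark(placeholder_workload)
print(f"{result['mean']:.6f} +/- {result['stdev']:.6f} sec over {len(result['timings'])} runs")
```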

| | Daft | Ray Data | Ray Data |
|---|---|---|---|
| Audio Transcription | 510.5 sec | 499.9 sec | 312.6 sec (39% faster) |

Document Embedding

The next benchmark was a PDF embedding generation workload on a subset of the Digital Corpora dataset.

On this workload, similarly, we couldn’t access the provided datasets from the Daft team. In a best effort reproduction, we followed their published methodology -- we took the first 10k PDFs from Digital Corpora, uploaded them onto S3, and wrote a metadata file that pointed to the URIs of the PDFs.

Data: 10k PDFs from Digital Corpora

Path: "s3://ray-example-data/digitalcorpora/metadata"

Model: sentence-transformers/all-MiniLM-L6-v2

Cluster: g6.xlarge head / 8 g6.xlarge workers

We made no changes to the Daft benchmark script.

For the Ray Data script, we added a change to drop the raw bytes column after passing it to the PDF parser. This puts more burden on the user to keep track of which columns to drop and hold onto, but in Ray 2.51, this will be automatically handled by Ray Data. Daft already has such a feature as part of its library.
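
As a rough illustration of what "dropping the raw bytes column after parsing" means at the batch level, the sketch below derives a text column and then discards the bytes it came from. The column names (`uri`, `bytes`, `text`) and the UTF-8 decode that stands in for PDF parsing are assumptions for this example, not the benchmark's code. In Ray Data, a per-batch function of this general shape could be applied with `map_batches`, though the exact batch type depends on the configured batch format.

```python
def parse_and_drop_bytes(batch: dict[str, list]) -> dict[str, list]:
    """Derive a `text` column from `bytes`, then drop the raw `bytes` column."""
    # ASSUMPTION: UTF-8 decoding is a placeholder for real PDF text extraction.
    texts = [raw.decode("utf-8", errors="replace") for raw in batch["bytes"]]

    # Keep every column except the raw payload, which is no longer needed downstream.
    out = {name: values for name, values in batch.items() if name != "bytes"}
    out["text"] = texts
    return out


batch = {"uri": ["a.pdf", "b.pdf"], "bytes": [b"first doc", b"second doc"]}
print(parse_and_drop_bytes(batch))
```

Dropping the large column early keeps it from being carried through later stages, which reduces memory pressure and the amount of data moved between operators.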

The numbers below are taken from an average across 4 runs. We also ran 1 warmup run to download the model and remove any startup overheads that would affect the result.

| | Daft | Ray Data | Ray Data |
|---|---|---|---|
| Document Embedding | 51.3 sec | 148.8 sec (2.9x slower) | 29.4 sec (43% faster) |
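
The "faster" percentages in the audio transcription and document embedding tables are consistent with a relative reduction in wall-clock time against the Daft number. The short sketch below reproduces that arithmetic from the reported timings; the helper names are ours, and reading the percentages this way is our interpretation of the tables.

```python
def percent_faster(baseline_sec: float, candidate_sec: float) -> float:
    """Relative reduction in wall-clock time versus the baseline, in percent."""
    return (baseline_sec - candidate_sec) / baseline_sec * 100.0


def slowdown_factor(baseline_sec: float, candidate_sec: float) -> float:
    """How many times longer the candidate takes than the baseline."""
    return candidate_sec / baseline_sec


# Timings copied from the tables above (Daft first, then Ray Data).
print(f"Audio transcription: {percent_faster(510.5, 312.6):.1f}% faster")
print(f"Document embedding:  {percent_faster(51.3, 29.4):.1f}% faster")
print(f"Document embedding, middle Ray Data column: {slowdown_factor(51.3, 148.8):.1f}x the Daft time")
```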

Image Classification

The image classification benchmark uses a ResNet model to do image classification on a subset of the ImageNet dataset. Unfortunately, we couldn’t access the provided datasets from the Daft team.

In a best effort reproduction, we followed their published methodology -- we took an 800k file subset of ImageNet, uploaded them onto S3, and wrote a metadata file that pointed to the URIs of the images.

Data: 800k images from ImageNet

Path: "s3://ray-example-data/imagenet/metadata_file"

Model: ResNet18

Cluster:

g6.xlarge head

8 workers of various types:

g6.xlarge (4 CPUs/1 GPU)

g6.2xlarge (8 CPUs/1 GPU)

g6.4xlarge (16 CPUs/1 GPU)

g6.8xlarge (32 CPUs/1 GPU)

For Daft, we used their published script and made two minor modifications:

We added an extra repartition step in the pipeline logic to achieve better performance. This code isn't in Daft's benchmark, possibly because their Parquet metadata is pre-partitioned. Without this, we saw a 20-25% increase in execution time for Daft.

We also added a `df = df.where(df["decoded_image"].not_null())` line to filter out images with null values, which would otherwise break the pipeline.

For Ray:

We made a change to the provided script to drop columns that are no longer used. This puts more burden on the user to keep track of which columns to drop and hold onto, but in a later version of Ray, this will be automatically handled by Ray Data.

On Ray Data 2.50 release candidate (RC), we also leverage Ray Data’s new `download` expression, which provides an optimized way to load images from provided URIs. 2.50 also features optimized Parquet footer sampling, which reduces the time to first output.

In the first test, we compared Ray Data to Daft on g6.xlarge instances.

| | Daft | Ray Data | Ray Data |
|---|---|---|---|
| Image Classification | 314.97 sec | 989.81 sec | 456.23 sec |

To the Daft team’s credit, Daft was still able to run faster in this particular configuration.

However, in the reproduction process, we noticed that:

GPU utilization was very low on both systems (less than 20%), and the GPU was fundamentally starved by not having enough CPUs.

We tested multiple CPU–GPU configurations to evaluate performance, and Ray Data outperformed Daft on all of the other instance types.

| | g6.xlarge | g6.2xlarge | g6.4xlarge | g6.8xlarge |
|---|---|---|---|---|
| Ray Data | 456.2 +/- 39.9 sec | 195.5 +/- 7.6 sec | 144.8 +/- 1.9 sec | 111.2 +/- 1.2 sec |
| Daft | 315.0 +/- 31.2 sec | 202.0 +/- 2.2 sec | 195.0 +/- 6.6 sec | 195.3 +/- 2.5 sec |

On g6.8xlarge instances, Ray Data is about 1.7x faster than Daft. The section on maximizing GPU efficiency below has more details.

Video Object Detection

The video object detection workload takes a collection of videos stored on S3, downloads and decodes them, and then sends the frames through a YOLO11n model to create bounding boxes and extract key objects. The cropped object images are then written to S3, inlined in Parquet files.

For the video object detection benchmark, we used the same dataset provided by Daft.

Data: 1,000 videos from Hollywood-2 Human Actions dataset

Path: s3://daft-public-data/videos/Hollywood2-actions-videos/Hollywood2/AVIClips/

Model: YOLO11n

Cluster:

g6.xlarge head

8 workers of various types:

g6.xlarge (4 CPUs/1 GPU)

g6.2xlarge (8 CPUs/1 GPU)

g6.4xlarge (16 CPUs/1 GPU)

g6.8xlarge (32 CPUs/1 GPU)

In this situation, we were able to reproduce Daft’s benchmark numbers for both Ray Data and Daft. Naturally, we were curious about the performance delta.

What we noticed was a hidden detail in the script -- PNG encoding.

Daft has a default image encoder expression, which uses the underlying Rust-native image encoding library. Ray Data, unlike Daft, doesn’t have a default encoding expression, so the implementation was using Pillow to do the compression.

Pillow supports 10 compression levels (0 to 9) when encoding to PNG, and its default of 6 yields a different compression ratio than the Rust default. The lower the compression level, the less CPU work is required and the larger the output file.

With a quick check across a sample of video files, we found that Pillow’s default compression level (6) produced output 7.8% smaller than the Rust image-rs library default, while compression level 2 produced output only 4.4% smaller. Going down to compression level 1 introduced a much larger deviation from the Rust default (28% larger). We decided to set our Pillow compression level to 2.

| | Rust Image PNG default | Pillow Compression Level = 6 | Pillow Compression Level = 2 | Pillow Compression Level = 1 |
|---|---|---|---|---|
| Output (MB) | 204 | 188 | 195 | 262 |
| Relative Difference | N/A | -7.8% | -4.4% | +28% |
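
PNG stores pixel data with DEFLATE, and Pillow's `compress_level` save option corresponds to the zlib compression level. The sketch below uses Python's `zlib` module directly on synthetic bytes to show how output size can be compared across levels, and it recomputes the relative differences in the table above. The synthetic gradient is a hypothetical stand-in for decoded frames, so the sizes it prints will not match the benchmark's numbers.

```python
import zlib

# ASSUMPTION: a synthetic 256x256 8-bit gradient stands in for real pixel data.
raw = bytes((x + y) % 256 for y in range(256) for x in range(256))


def compressed_sizes(data: bytes, levels=(1, 2, 6)) -> dict[int, int]:
    """Compressed size in bytes at each zlib level (higher level = more CPU work)."""
    return {level: len(zlib.compress(data, level)) for level in levels}


def relative_difference(size: float, reference: float) -> float:
    """Signed size difference versus a reference, in percent."""
    return (size - reference) / reference * 100.0


sizes = compressed_sizes(raw)
for level, size in sizes.items():
    print(f"zlib level {level}: {size} bytes (raw: {len(raw)} bytes)")

# Relative differences recomputed from the table (output size in MB vs. the Rust default of 204).
for label, size_mb in [("level 6", 188), ("level 2", 195), ("level 1", 262)]:
    print(f"Pillow {label}: {relative_difference(size_mb, 204):+.1f}%")
```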

Another change we made was in adjusting the batch size -- we noticed that the default batch size set for Ray Data was 64, but it caused quite a few OOM errors. We reduced it to 32 to improve the stability of the pipeline. On a g6.xlarge, which has 4 CPUs and 1 L4 GPU, we saw the following results, which were quite similar to the Daft reported numbers:

| | Daft | Ray Data (2.49.2, original script) | Ray Data (2.49.2, with our changes) |
|---|---|---|---|
| Video Object Detection | 758.8 sec | 1590.5 sec | 922.0 sec |

With these changes, Ray Data’s execution time dropped by about 40%, from 1590 to 922 seconds on average. Even so, Daft is still about 20% faster than Ray Data in this particular configuration.

Similar to the image classification benchmark, we noticed an opportunity to reduce CPU starvation, so we tested multiple CPU-to-GPU ratios for both Daft and Ray Data, up to g6.8xlarge, which has 32 CPUs / 1 GPU.

| | g6.xlarge | g6.2xlarge | g6.4xlarge | g6.8xlarge |
|---|---|---|---|---|
| Ray Data (2.49.2) | 922 +/- 13.8 | 704.8 +/- 25.0 | 629 +/- 1.8 | 623 +/- 1.4 |
| Daft | 758.8 +/- 10.4 | 735.3 +/- 7.6 | 747.5 +/- 13.4 | 771.3 +/- 25.6 |

Notably, Ray Data is able to outperform Daft across most instance types, achieving up to 24% speedups on g6.8xlarge instances.

Maximizing GPU Efficiency

In the image classification task, we found that in both Ray Data and Daft, the GPU utilization was very low, and both systems were fundamentally bottlenecked upstream.

From our metrics, it seemed like this was due to not having enough CPUs to complete upstream work, resulting in starvation in the downstream task.
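
A simple way to reason about this kind of starvation is a two-stage bottleneck model: the GPU can only be as busy as the CPU stage can feed it. The sketch below is a toy model with made-up rates, assuming perfect pipelining and linear scaling with CPU count. It is not derived from the benchmark measurements and is only meant to show why adding CPUs per GPU can raise utilization.

```python
def gpu_utilization(num_cpus: int, cpu_items_per_sec_per_core: float, gpu_items_per_sec: float) -> float:
    """Fraction of time the GPU is busy when fed by a CPU preprocessing stage.

    ASSUMPTION: CPU throughput scales linearly with cores and stages overlap perfectly.
    """
    cpu_throughput = num_cpus * cpu_items_per_sec_per_core
    return min(1.0, cpu_throughput / gpu_items_per_sec)


# HYPOTHETICAL rates, chosen only for illustration.
PER_CORE_RATE = 50.0  # images/sec one CPU core can download, decode, and transform
GPU_RATE = 2000.0     # images/sec the GPU could classify if never starved

for cpus in (4, 8, 16, 32):
    print(f"{cpus:2d} CPUs per GPU -> {gpu_utilization(cpus, PER_CORE_RATE, GPU_RATE):.0%} GPU utilization")
```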

Figure 2. Node GPU Utilization with g6.xlarge instances.

We then ran a couple experiments using the same code, but increasing the number of CPUs per node, going from the g6.xlarge, which only has 4 CPUs / 1 GPU to g6.8xlarge, which has 32 CPUs / 1 GPU, reporting the average over three runs for each configuration. We also ran 1 warmup run to download the model and remove any startup overheads that would affect the result.

Figure 3. Image classification performance across instance types.

Comparing Ray Data 2.50 release candidate (RC) vs Daft, we see that as we reduce CPU starvation, Ray Data starts to outperform Daft (by up to 60%). We see that below, comparing similar workloads, Ray Data is able to achieve and sustain higher peak GPU utilization than Daft.

Note that the utilization is still low in absolute terms, even though Ray Data achieves much higher GPU utilization than Daft. To drive utilization higher, we can adjust the batch size, use fractional GPUs, and better leverage host-to-device (H2D) bandwidth -- all of which we will cover in a future blog post :)

Figure 4. Node GPU Utilization with g6.8xlarge instances.

Naturally, one could call out that increasing the instance size would translate to a higher cost of ownership. However, in comparing costs across instance types, we see that both Ray Data and Daft achieve similar cost profiles at their cheapest configurations -- Daft coming in at $0.44 per run and Ray Data at $0.43.

| | | g6.xlarge | g6.2xlarge | g6.4xlarge | g6.8xlarge |
|---|---|---|---|---|---|
| Ray Data | Execution time (sec) | 456.2 | 195.5 | 144.8 | 111.24 (3x faster) |
| | Cost per run ($) | $0.82 | $0.43 | $0.43 (lowest) | $0.50 |
| Daft | Execution time (sec) | 315.0 | 202.0 | 195.0 | 195.27 |
| | Cost per run ($) | $0.56 | $0.44 (lowest) | $0.57 | $0.87 |

Even when comparing configurations with lowest cost, Ray Data is able to achieve a 28% faster execution time (145 seconds versus Daft’s 202 seconds).
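
The cost-per-run figures can be approximated as workers × hourly price × execution time. The sketch below does that arithmetic under two assumptions that are not stated in this post: that only the 8 worker nodes are billed (the head node is excluded), and that the hourly prices are the on-demand list prices hard-coded below. Under those assumptions the results land within about a cent of the table.

```python
WORKERS = 8  # ASSUMPTION: head node excluded from the cost

# ASSUMPTION: on-demand hourly prices in USD; not taken from the post.
HOURLY_PRICE_USD = {
    "g6.xlarge": 0.8048,
    "g6.2xlarge": 0.9776,
    "g6.4xlarge": 1.3232,
    "g6.8xlarge": 2.0144,
}

# Execution times in seconds, copied from the table above.
EXEC_SEC = {
    "Ray Data": {"g6.xlarge": 456.2, "g6.2xlarge": 195.5, "g6.4xlarge": 144.8, "g6.8xlarge": 111.24},
    "Daft": {"g6.xlarge": 315.0, "g6.2xlarge": 202.0, "g6.4xlarge": 195.0, "g6.8xlarge": 195.27},
}


def cost_per_run(instance_type: str, seconds: float, workers: int = WORKERS) -> float:
    """Cluster cost in USD for one run: workers x hourly price x hours."""
    return workers * HOURLY_PRICE_USD[instance_type] * seconds / 3600.0


costs = {
    system: {itype: cost_per_run(itype, sec) for itype, sec in by_type.items()}
    for system, by_type in EXEC_SEC.items()
}

for system, by_type in costs.items():
    for itype, usd in by_type.items():
        print(f"{system:8s} {itype:11s} ${usd:.2f}")
```

Because larger instances cost more per hour but finish sooner, cost per run does not grow in step with instance size. This is why the mid-sized shapes end up cheapest for both systems.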

Testing at large scale

We also note that the above benchmarks from Daft are fairly small compared to real world workloads that we see in production. Our customers are often pushing scale at dozens, hundreds, or even thousands of machines, processing terabytes of data, and working with much larger models.

To show this, we ran a new benchmark that increases the scale of the cluster, the model, and the data, aiming to be more representative of common use cases.

This benchmark reads base64-encoded images from Parquet files in S3, decodes and preprocesses them with a ViT (Vision Transformer) image processor, and then performs inference with the ViT model on GPU. The images are decoded, resized, normalized, and batched (size 1024) for inference, with results written back to S3. This is inspired by real-world production workloads we’ve seen in our community.
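
The decode-and-batch portion of this pipeline can be sketched with the standard library alone. The example below decodes base64 strings and groups the results into batches of 1024, mirroring the batch size mentioned above. It is an illustration rather than the benchmark code: the helper names are hypothetical, the input is synthetic, and the real image decoding, resizing, normalization, and ViT inference steps are omitted.

```python
import base64
from itertools import islice
from typing import Iterable, Iterator


def decode_images(encoded: Iterable[str]) -> Iterator[bytes]:
    """Lazily turn base64 strings back into raw image bytes."""
    for item in encoded:
        yield base64.b64decode(item)


def batched(items: Iterable[bytes], batch_size: int = 1024) -> Iterator[list[bytes]]:
    """Group a stream into lists of at most `batch_size` items."""
    iterator = iter(items)
    while batch := list(islice(iterator, batch_size)):
        yield batch


# ASSUMPTION: one-byte payloads stand in for real encoded images.
encoded_column = [base64.b64encode(bytes([i % 256])).decode("ascii") for i in range(2500)]

batches = list(batched(decode_images(encoded_column), batch_size=1024))
print([len(b) for b in batches])
```

Both helpers are generators, so decoding happens only as each batch is consumed. Streaming execution applies the same idea at cluster scale.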

Data: ~4 TiB of Parquet files (512 MiB to 1 GiB each) containing base64-encoded images

Path: s3://ray-example-data/image-datasets/10TiB-b64encoded-images-in-parquet-v3/

Model: google/vit-base-patch16-224 (87M parameters)

Cluster Environment:

1 m5.24xlarge (head)

40 g6e.xlarge (gpu workers)

64 r6i.8xlarge (cpu workers)

The numbers below are taken from an average across 4 runs. We also ran 1 warmup run to download the model and remove any startup overheads that would affect the result.

In this benchmark, we see that Ray Data 2.49.2 execution is around 2x faster than Daft, and Ray Data 2.50 RC is about 7x faster than Daft.

Figure 5. Daft vs. Ray Data - large embedding processing.

Takeaway

From the Ray Data perspective, here are our takeaways from doing this benchmarking exercise:

Compared to alternative systems, Ray Data is able to effectively utilize cluster resources at scale. The most expensive component in a pipeline is the GPU step, and users often care heavily about GPU resource utilization. While alternative solutions like Daft can be quite efficient in low-resource regimes, we see that with bigger instance types, Ray Data is able to better leverage the CPU and memory available on the machine to achieve higher GPU utilization, leading to lower execution times and lower costs for the user.

Ray Data is built for cluster heterogeneity, which is critical when GPU pipelines have heavy CPU steps. For these workloads, it is critical to have a higher CPU to GPU ratio than offered by default VM shapes (which are often 12 to 24 CPUs per GPU). For these setups, Ray Data can be up to 7x faster than alternative solutions.

The Ray Data team is actively improving its query optimizer, adding standard optimizations as well as some innovative advancements, like zero-data-transfer shuffles, to its suite. Major advancements are coming in 2.50 and beyond.

We applaud Daft for publishing their results and releasing new improvements to their system. At the same time, we encourage readers to evaluate both systems in their own environments and choose the systems based on their own needs and business requirements.

Try it: You can try both Daft and Ray Data on Anyscale today