Ray Summit 2022 Stories - Large Language Models

In the next few weeks, we are delighted to share “The Ray Summit Stories,” a blog post series highlighting some of the best talks, grouped by theme. In this post, we highlight a few companies that are using Ray to train large language models (LLMs) and other foundation models.

The Ray Summit 2022 large language model highlights include a fireside chat with Greg Brockman, CTO of OpenAI; a talk from Cohere.ai on how they trained their large language model using Ray on top of TPUs; and a talk on UC Berkeley’s Alpa project, which uses Ray to automate model-parallel training and serving of large language models like GPT-3.

Keynote - OpenAI

In this fireside chat, Greg Brockman, CTO of OpenAI, and Robert Nishihara, CEO of Anyscale, discussed how OpenAI came to life, OpenAI’s mission, the role artificial intelligence will play in tomorrow’s applications, and some of the tools and systems OpenAI uses today. Greg described how he evaluated many different tools for building ML systems at scale and how Ray fits into OpenAI’s architecture.

Some of the talk’s highlights include:

Greg explains the decision to select Ray as the foundation for the distributed systems layer of OpenAI’s platform rewrite.

Robert and Greg discuss how OpenAI used Ray to train its largest model.

Greg discusses the scalability of Ray.

Ray is used not just for training large models but also for generic distributed tasks.

Here is the full talk:

Cohere - Scalable training of language models using Ray, JAX, and TPUv4

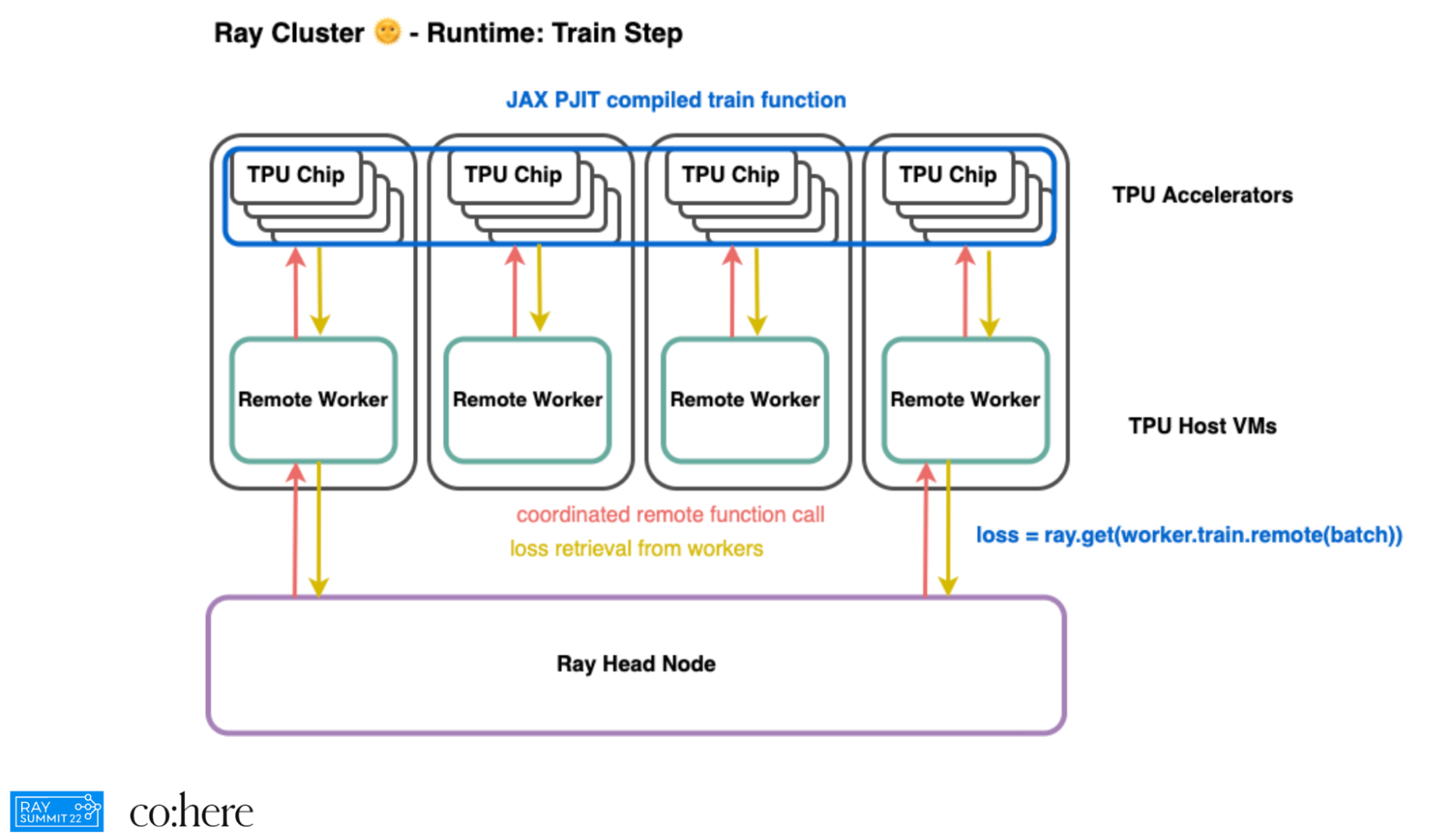

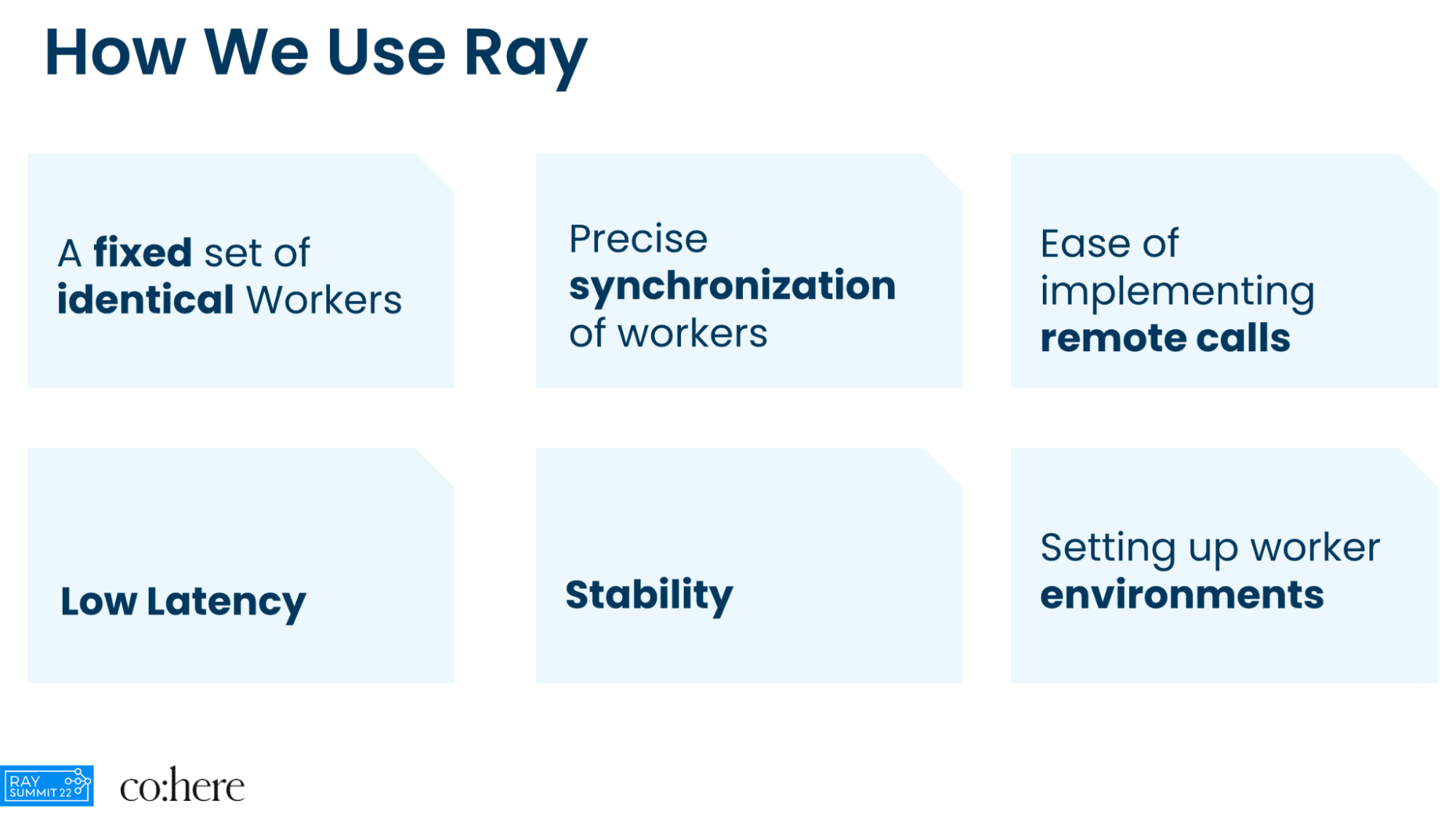

Cohere.ai’s mission is to make cutting-edge natural language processing (NLP) technology accessible to anyone in the world. The Cohere team uses Ray on top of TPU v4 to distribute and schedule tasks across many TPU hosts when training their LLMs. In this talk, the team shares their experience and introduces FAX, their framework built on JAX, Ray, pjit, and TPU v4.

From the technical report “Scalable Training of Language Models using JAX pjit and TPUv4”:

“Modern large language models require distributed training strategies due to their size. The challenges of efficiently and robustly training them are met with rapid developments on both software and hardware frontiers. In this technical report, we explore challenges and design decisions associated with developing a scalable training framework, and present a quantitative analysis of efficiency improvements coming from adopting new software and hardware solutions.”

Cohere uses Ray to manage environment and code dependencies across hosts. During a train step, the Ray head node prepares a batch, splits it according to the TPU topology, and schedules a remote function on each worker; the batch must be synchronized across all TPUs. Each worker then feeds its split of the batch into the train function, which runs in a distributed fashion and returns a loss. The loss is then passed back to the host VM. Ray keeps this simple: the whole operation is encapsulated in one line, `loss = ray.get(worker.train.remote(batch))`. (See the blue line in the figure above.)

Overall, the Cohere team found Ray very reliable and easy to use: it simplifies coordinating distributed tasks when training large language models on TPU VMs.

Check out the full talk below:

Alpa - Simple large model training and inference on Ray

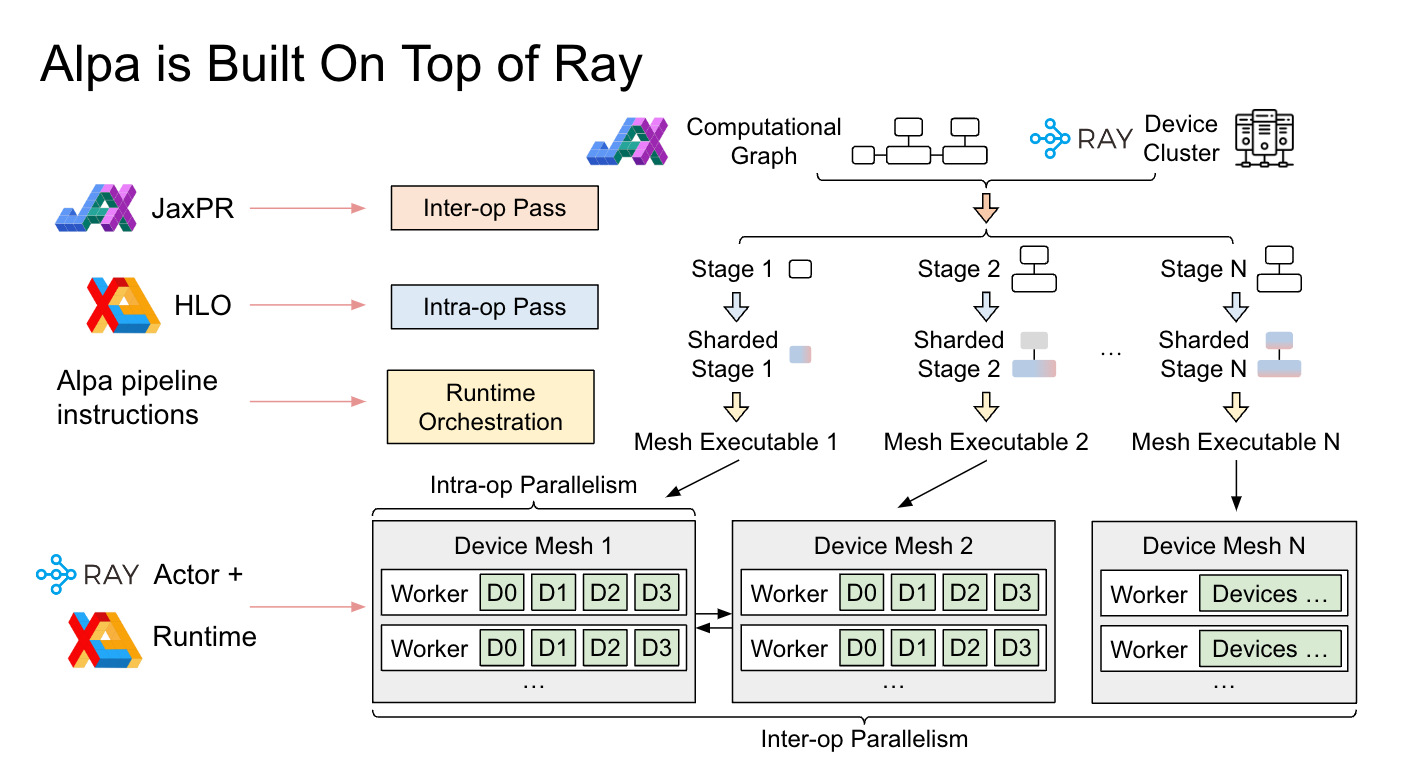

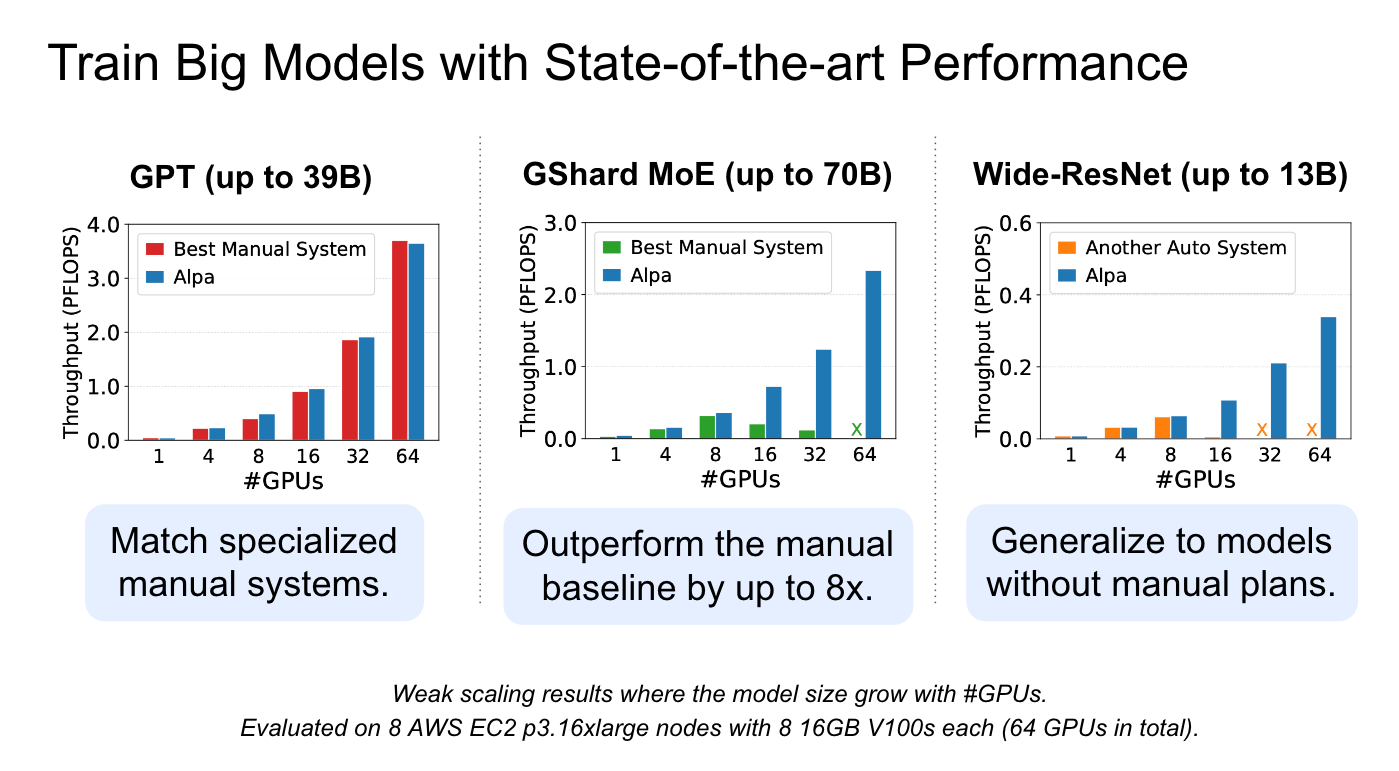

In this talk, Hao Zhang, a postdoctoral researcher at UC Berkeley, introduces Alpa, a new system that automates model-parallel training and serving of large language models like GPT-3. The project is primarily a collaboration between UC Berkeley and Anyscale. The talk covers the current challenges posed by large models and the three dimensions of parallelism (data, model, and pipeline), and discusses how Alpa, a unified compiler on Ray, uses JAX and XLA to automatically find and execute the best inter-operator and intra-operator parallelism for large deep learning models.
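To see why automating this choice helps, note that the three dimensions multiply: on N devices, every combination of data, model (operator), and pipeline degrees whose product is N is a candidate layout. The hypothetical `parallel_configs` helper below is only a toy enumeration of that space, not Alpa's API or algorithm; the Alpa paper describes searching intra-operator plans with integer linear programming and inter-operator (pipeline stage) plans with dynamic programming instead.

```python
def parallel_configs(num_devices: int) -> list:
    """Toy enumeration (not Alpa): all (data, model, pipeline) degree triples
    whose product equals num_devices."""
    configs = []
    for dp in range(1, num_devices + 1):
        if num_devices % dp:
            continue  # data-parallel degree must divide the device count
        rest = num_devices // dp
        for pp in range(1, rest + 1):
            if rest % pp:
                continue  # pipeline degree must divide what remains
            configs.append((dp, rest // pp, pp))  # remaining factor is model degree
    return configs


if __name__ == "__main__":
    for cfg in parallel_configs(8):
        print(cfg)
```

Even 8 devices yield 10 such triples, before deciding how layers map to pipeline stages or how each operator is sharded, which is the combinatorial search Alpa automates.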

The main Alpa use cases are training LLMs with state-of-the-art performance, serving pre-trained models cost-efficiently, and fine-tuning custom architectures with flexible parallelization strategies.

Watch the full talk:

Check out all of the Ray Summit 2022 talks.

If you are a Ray user and would like to submit a talk for Ray Summit 2023, you can do so here.