Join us for an informative evening of technical talks about Ray, LLMs, and retrieval augmented generation (RAG). Our focus will be on building scalable RAG-based LLM applications. Key topics include:

Optimal strategies for RAG applications

Criteria for evaluating successful LLM applications

Critical parameters to fine-tune and monitor in production

Scaling workloads (embedding, chunking, indexing, reranking, etc.)

Plus a multitude of additional insights …

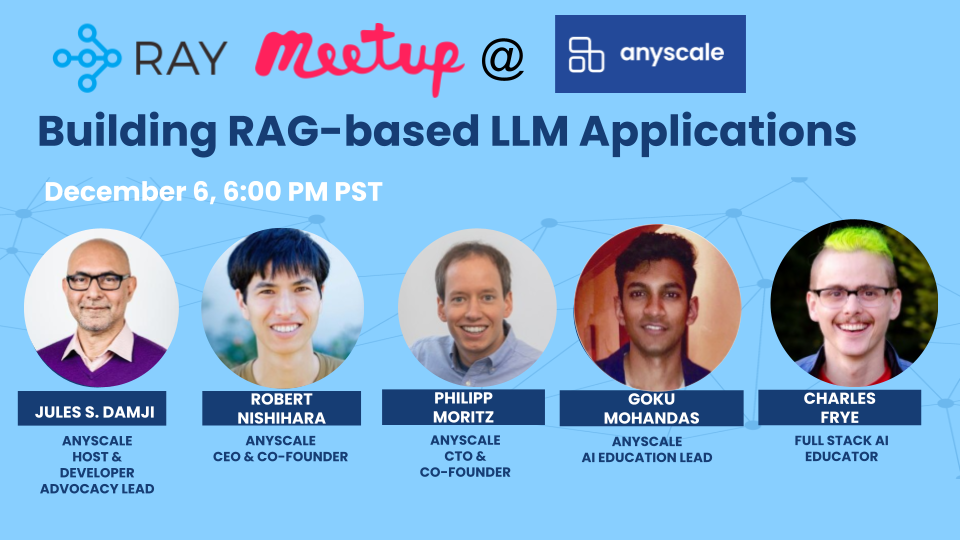

We have a notable lineup of speakers for this meetup.

Agenda

6:00 - 6:30 pm : Networking, Snacks & Drinks

Talk 1 : A year in reflection: Ray, Anyscale, LLMs, and GenAI

Talk 2 : Developing and serving RAG-Based LLM applications in production

Talk 3 : Evaluating LLM Applications Is Hard

Talk 1: A year in reflection: Ray, Anyscale, LLMs, and GenAI

Abstract: TBD

Bio:

Robert Nishihara is one of the creators of Ray, a distributed system for scaling AI/ML workloads and Python applications to clusters. He is one of the co-founders and CEO of Anyscale, which is the company behind Ray and Gen AI. He did his Ph.D. in machine learning and distributed systems in the computer science department at UC Berkeley. Before that, he majored in math at Harvard.

Talk 2: Developing and serving RAG-Based LLM applications in production

Abstract: There are a lot of different moving pieces when it comes to developing and serving LLM applications. This talk will provide a comprehensive guide for developing retrieval augmented generation (RAG) based LLM applications — with a focus on scale (embed, index, serve, etc.), evaluation (component-wise and overall) and production workflows. We’ll also explore more advanced topics such as hybrid routing to close the gap between OSS and closed LLMs.

Takeaways:

Evaluating RAG-based LLM applications is crucial for identifying and productionizing the best configuration.

Developing your LLM application with scalable workloads involves minimal changes to existing code.

Mixture of Experts (MoE) routing allows you to close the gap between open source and closed LLMs.

Bio(s):

Philipp Moritz is one of the creators of Ray, an open source system for scaling AI. He is also co-founder and CTO of Anyscale, the company behind Ray. He is passionate about machine learning, artificial intelligence and computing in general and strives to create the best open source tools for developers to build and scale their AI applications.

Goku Mohandas works on machine learning, education and product development at Anyscale Inc. He was the founder of Made With ML, a platform that educates data scientists and MLEs on MLOps first principles and production-grade implementation. He has worked as a machine learning engineer at Apple and was an ML lead at Citizen (a16z health) prior to that.

Talk 3: Evaluating LLM Applications Is Hard

Abstract: A specter is haunting generative AI: the specter of evaluation. In a rush of excitement at the new capabilities provided by open and proprietary foundation models, it seems everyone from homebrew hackers to engineering teams at NASDAQ companies has shipped products and features based on those capabilities. But how do we know whether those products and features are good? And how do we know whether our changes make them better? I will share some case studies and experiences on just how hard this problem is – from the engineering, product, and business perspectives – and a bit about what is to be done.

Bio: Charles Frye teaches people about building products that use artificial intelligence. He completed a PhD on neural network optimization at UC Berkeley in 2020 before working at MLOps startup Weights & Biases and on the popular online courses Full Stack Deep Learning and Full Stack LLM Bootcamp.

REGISTER HERE: https://lu.ma/ray-rag-llm