Anyscale Announces Lineage Tracking for Faster End-to-End Tracing and Debugging of Ray Workloads

Ray makes Python scalable and fast, but once workloads spread across a distributed environment, lineage becomes harder to see. Without a clear link between datasets, models, and compute, even simple debugging takes too long. Reproducing a past run turns into a manual scavenger hunt through logs, tags, and notebooks. Teams try to DIY lineage with run names and glue scripts, and many lose the built-in mapping they had on other platforms. Every hour spent stitching clues together is time not spent shipping mission-critical model improvements.

Today we are announcing the private beta of Anyscale Lineage Tracking – a new capability that brings end-to-end visibility to distributed AI development for clear pipeline transparency, fast experiment reproduction, and easy debugging. With Lineage Tracking, developers can trace datasets and models to the exact Anyscale workspaces, jobs and services that produced or consumed them, debug failures with logs and parameters in context, and reproduce runs with the captured environment configs. As part of this release teams get access to:

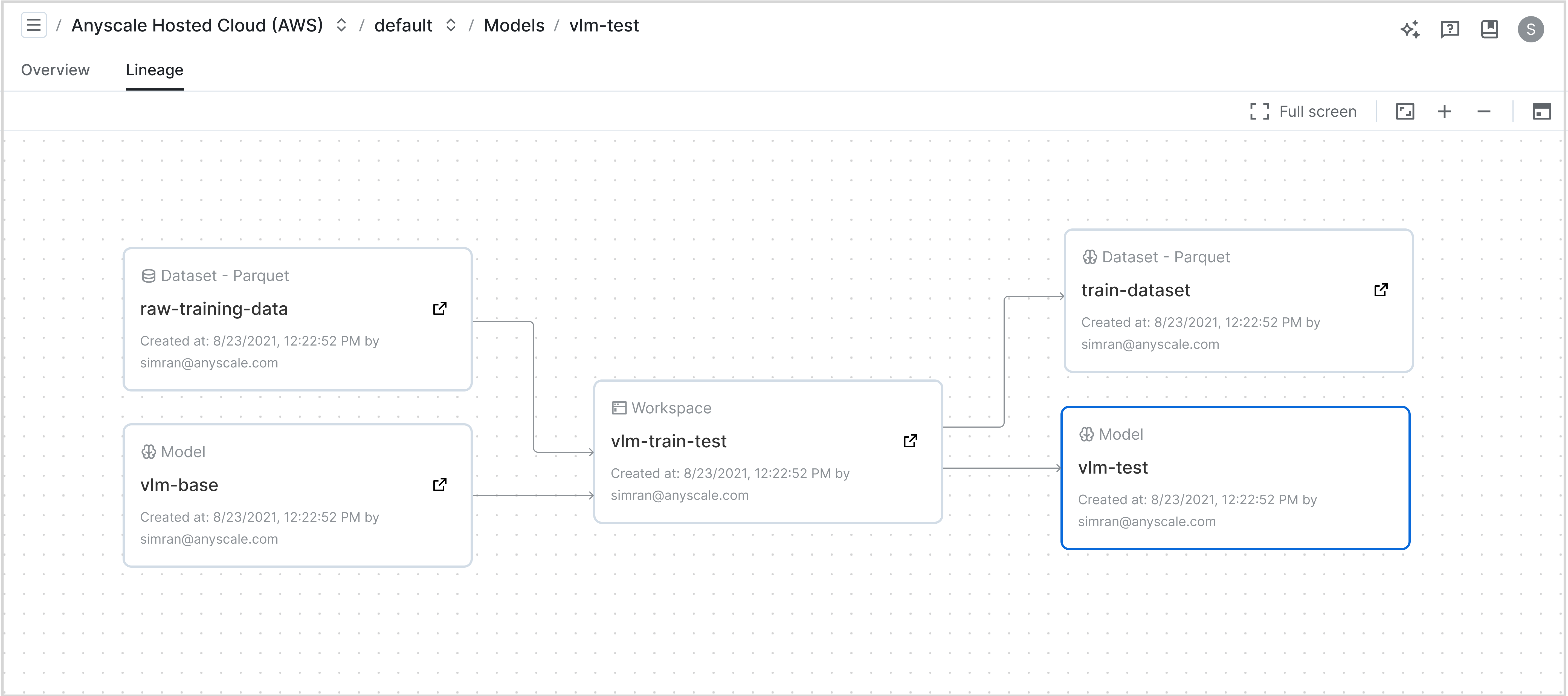

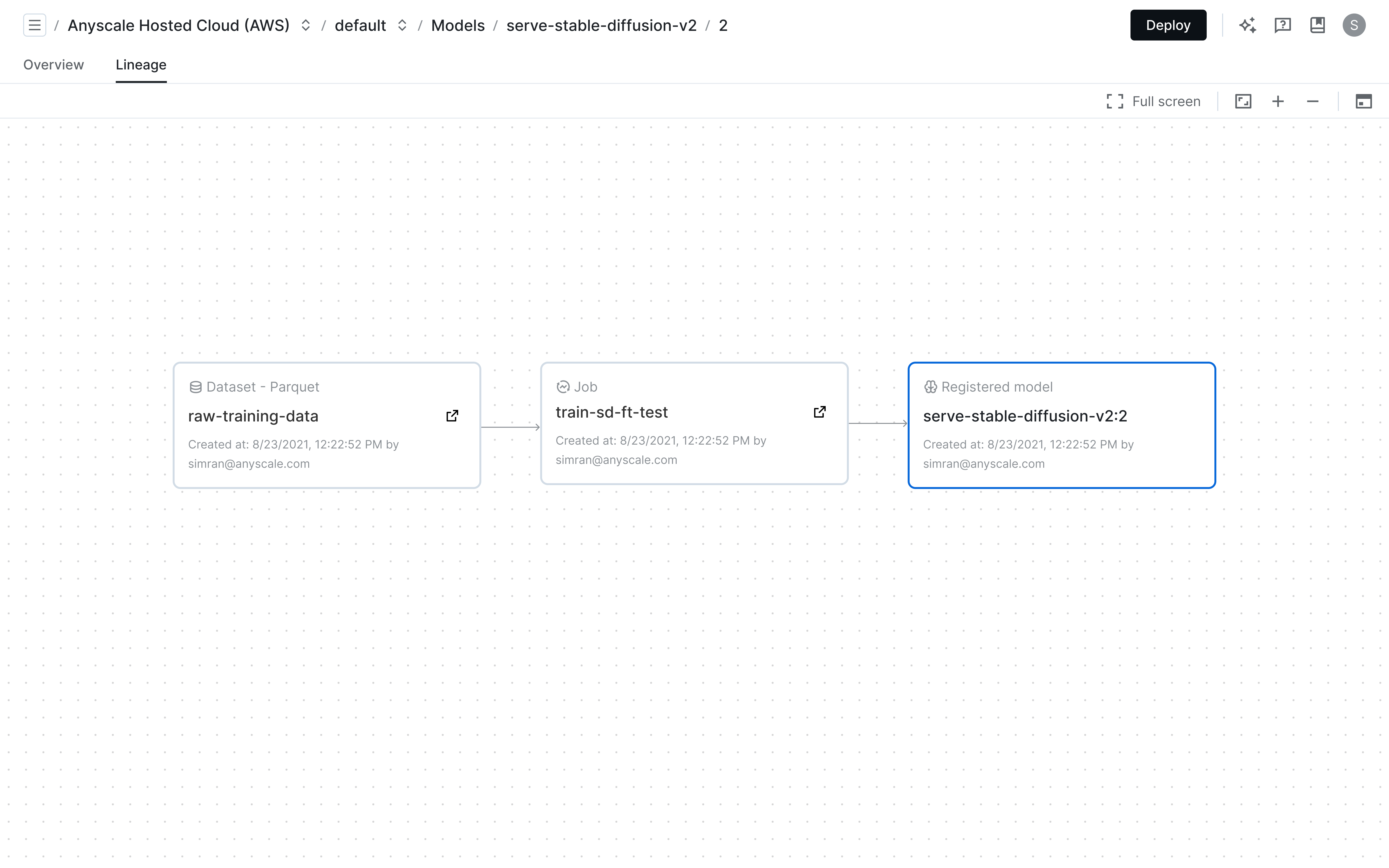

Lineage graphs that provide a graphical view of how datasets and models relate across Anyscale Workspaces, Jobs, and Services, so teams can quickly visualize the connection of all moving pieces in one place.

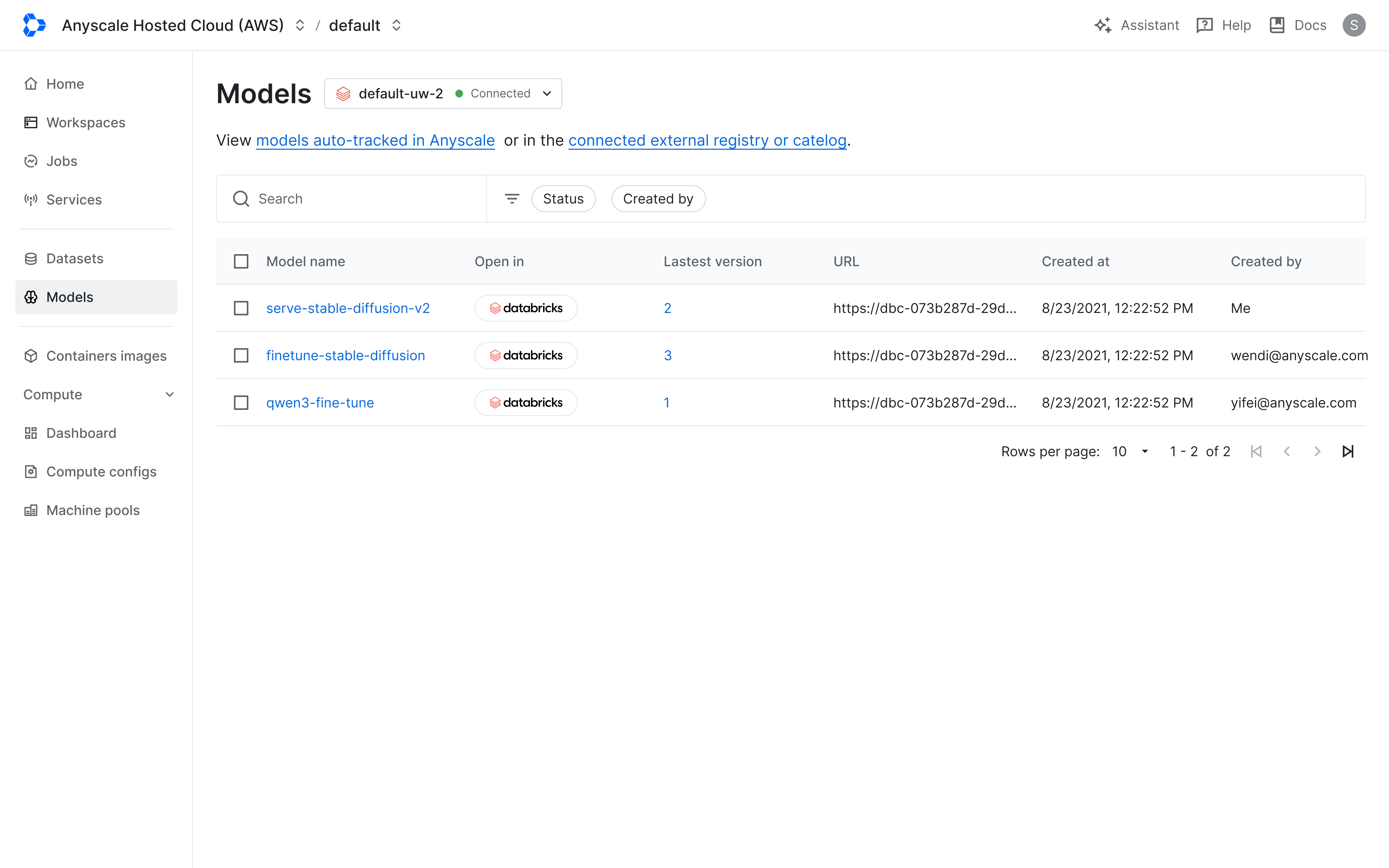

Native integrations with Unity Catalog, MLflow, and Weights & Biases to help teams establish relationships fast, using the catalogs and experiment trackers they already rely on.

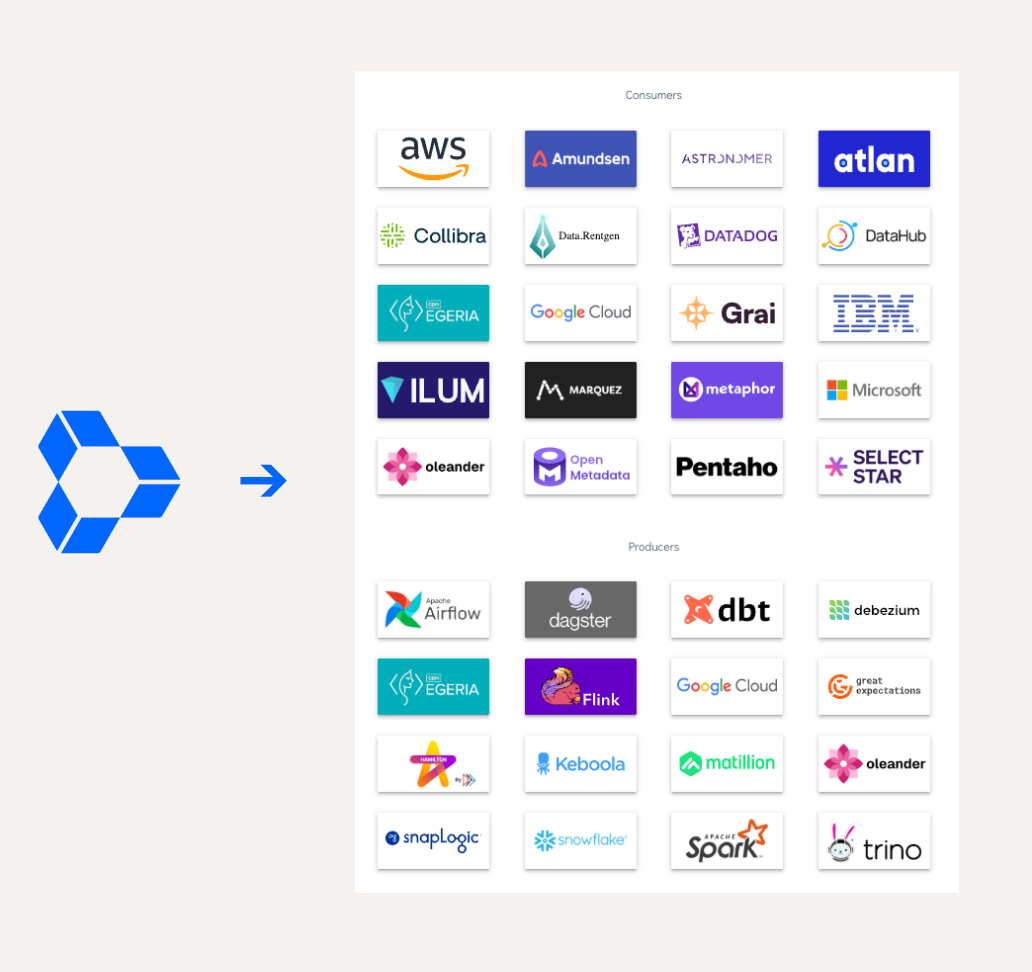

An OpenLineage foundation that keeps lineage portable and compatible with any lineage-aware catalog or registry.

With these new capabilities, developers get a clear map to the right run, platform and MLOps teams can reproduce pipelines from prototype to production with fewer handoffs, and security and compliance teams gain end-to-end auditability of the model lifecycle.

What teams did before lineage tracking

Inside Anyscale, many teams had to jump between workload dashboards, logs, and external registries to reconstruct how a pipeline actually ran. Consider an ML team that fine-tuned a model using a table from Unity Catalog and a base model from MLflow. The training job would log metrics to MLflow and register a new version. A few days later, strange behavior appeared in production. The team would open MLflow to inspect the model and try to locate the Anyscale job that produced it.

Unless someone had remembered to manually log the exact Job ID, they had to start guessing. They would jump into the Anyscale UI, dig through job histories and logs, and trace the data to another transformation that runs in a different workspace. The exact source job often remained unclear even though the assets were clearly connected. Valuable time was lost while people stitched clues together from run names and code comments. Nobody wanted to restart a job or clean up storage because deleting the wrong asset might break something still in use.

Lineage Tracking eliminates this guesswork by moving the full ML pipeline into view, with clear visibility into the Ray workloads that created it, making debugging, reproducing, and iterating far simpler.

Lineage graphs: the map that connects data, models, and compute

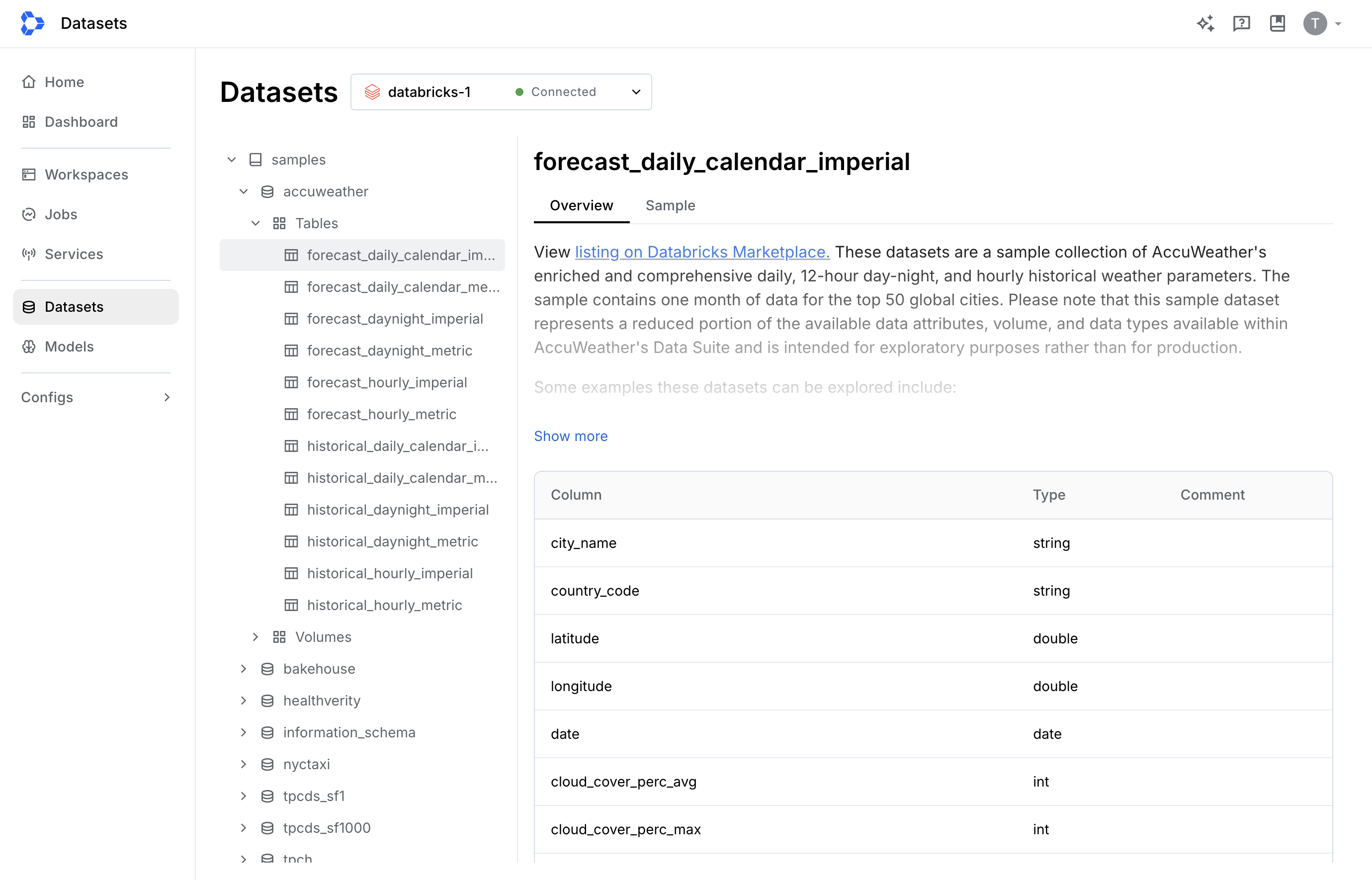

Anyscale Lineage Tracking is an OpenLineage-powered observability feature inside Anyscale that maps datasets and models across any Anyscale managed Ray cluster – including workspaces, jobs, and services – and renders an interactive graph of the pipeline. It captures lineage automatically from Ray Data reads and writes and from MLflow model log and load events. With native integrations to Unity Catalog, MLflow, and Weights & Biases (W&B), a dataset can be viewed in Anyscale with table name, schema, and sample rows. From there, teams can immediately start with Ray Data, or jump to the producing job with logs, parameters, and environment in context. Models link to the exact MLflow experiment and registry version, and runs link to W&B. The journey from data to model to compute stays visible and actionable all in one place.

When a score looks wrong or a model drifts, the difficult part is not just the fix. It is also the time lost finding the right job, the right inputs, and the exact code version that produced the output. The typical process is to open the model registry, search for related jobs by name, cross check dates and tags, ask who ran what and where, and hope the relevant Job ID was logged properly. Meanwhile GPUs sit idle, batch windows slip, and teams are split across tools and tabs with partial context, wasting valuable time and resources.

Lineage Tracking replaces that hunt with a single view. Its interactive graph shows how datasets and models move across Anyscale from data prep to training to serving. Every node links to the workload that produced it with logs, parameters, and environment in context. Teams can see producers and consumers for any dataset or model without leaving Anyscale, reproduce the exact run with recorded parameters and environment, and account for potential downstream impact prior to modification.

Built-in lineage graphs that visualize how datasets and models connect across Workspaces, showing inputs, outputs, and the compute jobs that link them.

From the graph, teams can start broad and then go deep. They can first see the pipeline end to end to understand what ran where and when across any Anyscale-managed clusters. From there, it is easy to open a model and review its full version history, including the exact job that produced each version. Developers can compare versions, inspect the inputs, and reproduce the run with the captured settings. They can then pivot to a dataset and see every job and service that reads or writes it, including upstream transforms and downstream consumers. Before making changes, they can verify dependents to avoid breaking a live pipeline and to clean up safely. As a result, ML teams go from confusion to certainty in one view, keeping pipelines on schedule and GPUs productive. Teams ship more experiments and promote models more safely.

Native integrations with Unity Catalog, MLflow, and Weights & Biases

When pipeline context lives across data catalogs, model registries, and experiment trackers, teams end up copying IDs into tags, pasting links into wikis, and writing glue scripts that drift. Switch tools once, and the trail breaks. The problem compounds when distributed compute with Ray is added into the mix.

Anyscale Lineage Tracking connects to these tools natively, so context is never lost and teams can quickly understand dependencies using the catalogs and experiment trackers they already rely on. From the models tab, you can dig into the details – like experiment and registry version – of any model tracked with MLflow or W&B with just a click. Jump from a dataset tab to confirm schema and ownership of a table in Unity Catalog.

Browse datasets in Anyscale with direct links to Unity Catalog and models with integrations to MLflow and W&B.

With this integrated experience out-of-the-box in Anyscale, developers keep the full context in the same platform where they do their development, and platform teams do not need to build or maintain brittle, custom scripts to keep systems in sync.

Built on open OpenLineage standards

Modern ML platforms are built using many specialized systems – catalogs, registries, and experiment trackers – that help govern data and models alongside a compute platform. Closed lineage systems create fragmentation, forcing teams to maintain custom integrations to stay in sync. The better approach is standardization — when tools capture and store lineage in a common format, everything stays connected with less manual work.

Built on the OpenLineage standard, Lineage Tracking plugs into the broader OpenLineage ecosystem, so events from Anyscale can flow to popular catalogs, registries, and observability tools.

Anyscale Lineage Tracking uses OpenLineage end to end, so events can be exported and analyzed in existing metadata management and tracking systems. The schema is consistent, identifiers are stable, and integrations are pluggable. Teams can extend the feature to any OpenLineage-compatible catalog or registry without changing how they build. In practice, a model fine-tuned on Anyscale carries full context in MLflow, a dataset curated in Unity Catalog can be traced through training and serving, and lineage remains portable as stacks evolve.
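For orientation, an OpenLineage run event is a JSON document that names a job, a run, and the input and output datasets. The sketch below builds one by hand with the Python standard library. The helper `make_run_event`, the namespaces, the job and dataset names, and the `producer` and `schemaURL` values are all hypothetical placeholders; the exact events Anyscale emits may carry more fields and different identifiers.

```python
import json
import uuid
from datetime import datetime, timezone


def make_run_event(job_name, inputs, outputs, event_type="COMPLETE"):
    """Build a minimal OpenLineage-style run event as a plain dict.

    Only core fields are included; real events usually also carry
    facets (schema, code version, and so on).
    """
    return {
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        # Placeholder values: a real producer points at the emitting
        # integration and schemaURL at a published OpenLineage spec version.
        "producer": "https://example.com/hypothetical-producer",
        "schemaURL": "https://example.com/placeholder-schema",
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": "example-namespace", "name": job_name},
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
    }


event = make_run_event(
    "finetune-job",
    inputs=[("example-catalog", "main.sales.orders")],
    outputs=[("example-registry", "my-model")],
)
print(json.dumps(event, indent=2))
```

Because the event is plain JSON, any system that understands the same fields can consume it, which is what keeps lineage portable between tools.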

See it in action

Here is how Lineage Tracking works in practice. The Ray Data plugin emits OpenLineage events whenever a job reads or writes data. The MLflow plugin emits events when a model is logged or loaded. Anyscale standardizes those events to OpenLineage and correlates them with the correct Workspace, Job, and Service. The Lineage tab then renders an interactive graph where teams can open logs, compare versions, and reproduce runs directly from the view.
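To make the correlation step concrete, here is a minimal sketch of how read and write events could be folded into a producer and consumer index. It is an illustration only, not Anyscale's implementation; the function `index_lineage`, the simplified event shape, and all job and asset names are assumptions made for the example.

```python
from collections import defaultdict


def index_lineage(events):
    """Map each dataset or model name to the jobs that wrote and read it."""
    producers = defaultdict(set)
    consumers = defaultdict(set)
    for event in events:
        job = event["job"]["name"]
        for asset in event.get("inputs", []):
            consumers[asset["name"]].add(job)
        for asset in event.get("outputs", []):
            producers[asset["name"]].add(job)
    return producers, consumers


# Hypothetical events: a data-prep job feeds a fine-tuning job.
events = [
    {
        "job": {"name": "prep-job"},
        "inputs": [{"name": "raw_table"}],
        "outputs": [{"name": "clean_table"}],
    },
    {
        "job": {"name": "finetune-job"},
        "inputs": [{"name": "clean_table"}, {"name": "base-model"}],
        "outputs": [{"name": "my-model"}],
    },
]

producers, consumers = index_lineage(events)
print(sorted(producers["my-model"]))     # ['finetune-job']
print(sorted(consumers["clean_table"]))  # ['finetune-job']
```

With an index like this, "which job produced this model?" becomes a lookup rather than a search through run names and logs.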

Show datasets and models

View tables in Unity Catalog and models with versions in MLflow. Unity Catalog is view-only at launch.

Run workloads as usual

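Use Ray Data read and write calls (for example, ray.data.read_parquet and Dataset.write_parquet) in data prep and training, or use mlflow.log_model and mlflow.pyfunc.load_model in training and serving.

```python
import mlflow

# Assumed context: code running in a Ray task or actor (ray.remote).
# `model` is the trained model object from the training step.
mlflow.log_model(model, "models:/my-model")       # logging a model emits a lineage event
m = mlflow.pyfunc.load_model("models:/my-model")  # loading a model emits a lineage event
```

Explore lineage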

Open the Datasets or Models page in the Anyscale UI. Select an asset and open the Lineage tab to see the full graph across Workspaces, Jobs, and Services. Click any asset to view logs, parameters, environment, and version info.

Iterate and govern

Reproduce a run with the correct artifacts and parameters. Identify dependent assets before any change or delete to avoid breaking live pipelines.
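The dependency check described here amounts to a graph traversal. The sketch below walks a small, hypothetical lineage graph breadth-first to list everything downstream of an asset before it is changed or deleted. The function `downstream_assets`, the edge format, and the asset and job names are assumptions for illustration; in Anyscale the same question is answered from the Lineage tab.

```python
from collections import deque


def downstream_assets(edges, start):
    """Return every asset reachable from `start`.

    `edges` is a list of (input_asset, job, output_asset) triples.
    """
    children = {}
    for src, _job, dst in edges:
        children.setdefault(src, set()).add(dst)

    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in children.get(node, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(start)  # ignore cycles that lead back to the start
    return seen


# Hypothetical pipeline: prep -> fine-tune -> serve.
edges = [
    ("raw_table", "prep-job", "clean_table"),
    ("clean_table", "finetune-job", "my-model"),
    ("my-model", "serve-svc", "predictions"),
]

print(sorted(downstream_assets(edges, "clean_table")))  # ['my-model', 'predictions']
```

An empty result suggests the asset has no recorded dependents, while a non-empty one lists what would be affected by a change.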

What is next

Ray today solves the hard parts of distributed AI across heterogeneous clusters. Anyscale helps teams move that power into production with developer velocity, production resilience, and cost efficiency. Lineage Tracking adds the missing map, so data and models are traceable, runs are reproducible, and governance is built in for modern AI compute. Lineage Tracking is available in beta with pre-built integrations for Unity Catalog, MLflow, and W&B, and we plan to expand support to other catalogs and registries.

Get started

Experience Lineage Tracking with our multimodal AI pipeline example. New Anyscale accounts include $100 in credits to get started.

Come join our launch webinar on November 20th for Lineage Tracking to learn from our experts.

Sign up for our monthly newsletter to stay updated on all things Anyscale, including new product updates.