Building an end-to-end ML pipeline using Mars and XGBoost on Ray

In the AI field, training and feature engineering have traditionally been developed independently. In a traditional AI pipeline, feature engineering often uses big data processing frameworks such as Hadoop, Spark, and Flink, while training depends on TensorFlow, PyTorch, XGBoost, LightGBM, and other frameworks. The differences in design philosophy and runtime environment between these two types of frameworks lead to the following two problems:

AI algorithm engineers need to understand and master multiple framework platforms even when developing a simple AI end-to-end pipeline.

Frequent data exchanges between different platforms result in heavy data serialization, format conversion, and other overhead. In some cases, data exchange accounts for the largest share of the overall overhead, resulting in serious resource waste and inefficient development.

Therefore, the Ray team at Ant Group developed the Mars On Ray scientific computing framework. Combined with XGBoost on Ray and other Ray machine learning libraries, we can implement an end-to-end AI pipeline in one job, and use one Python script for the whole large-scale AI pipeline.

Mars On Ray Introduction

Mars is a tensor-based unified framework for large-scale data computation which scales NumPy, pandas, scikit-learn and Python functions. You can replace NumPy/pandas/scikit-learn import statements with Mars to get distributed execution for those APIs.
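To make the import-swap idea concrete, here is a single-machine sketch of the same Monte Carlo estimate of π written against plain NumPy. The function name `estimate_pi`, the fixed seed, and the much smaller `n` are illustrative choices, not part of Mars; replacing `numpy` with `mars.tensor` and calling `.execute()` on the result gives the distributed version in the Mars snippet.

```python
import numpy as np


def estimate_pi(n: int, seed: int = 0) -> float:
    """Estimate pi from the share of random points in [-1, 1]^2 that fall inside the unit circle."""
    rng = np.random.RandomState(seed)          # seeded for reproducibility
    a = rng.uniform(-1, 1, size=(n, 2))        # n random points, shape (n, 2)
    inside = (np.linalg.norm(a, axis=1) < 1).sum()
    # circle area / square area = pi / 4
    return float(inside * 4 / n)


print(estimate_pi(1_000_000))
```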

```python
import mars.tensor as mt
import mars.dataframe as md

N = 200_000_000
a = mt.random.uniform(-1, 1, size=(N, 2))
print(((mt.linalg.norm(a, axis=1) < 1)
       .sum() * 4 / N).execute())

df = md.DataFrame(
    mt.random.rand(100000000, 4),
    columns=list('abcd'))
print(df.describe().execute())
```

Ray is an open source framework that provides a simple, universal API for building various distributed applications. In the past few years, the Ray project has rapidly formed a relatively complete ecosystem (Ray Tune, RLlib, Ray Serve, distributed scikit-learn, XGBoost on Ray, etc.) and is widely used for building various AI and big data systems.

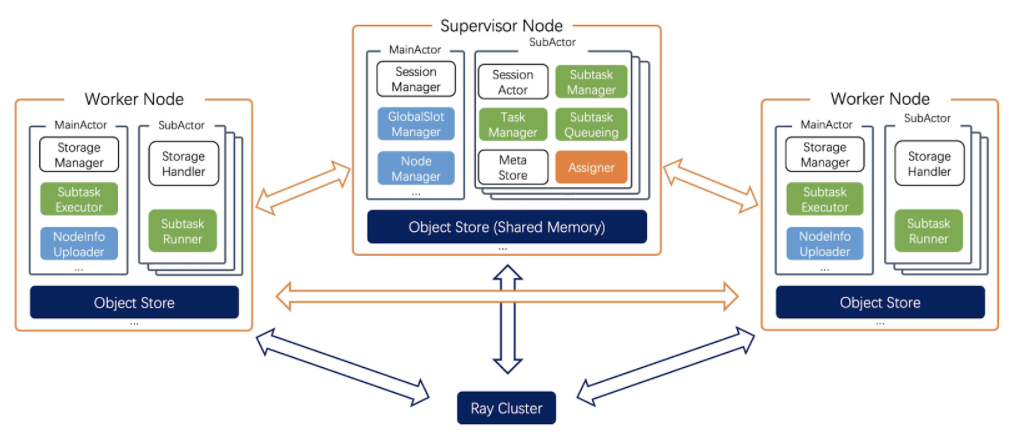

Based on Ray’s distributed primitives, a Ray backend was implemented for Mars. Mars combined with Ray’s large machine learning ecosystem makes it easy to build an end-to-end AI pipeline. The overall Mars On Ray architecture is as follows:

Ray actors implement Mars components such as the supervisor and the worker nodes, and use Ray remote calls for communication between distributed components of Mars. This allows Mars to focus only on the development of framework logic, instead of the complicated underlying details of distributed systems such as component communication, data serialization, and deployment.

All data plane communications are based on the Ray object store. Mars puts computation results in the Ray object store, and data is exchanged between nodes through the Ray object store. Ray’s object store provides shared memory, automatic data GC, and data swapping between memory and external storage to help Mars efficiently manage large amounts of data across a large cluster.

All Mars workers use Ray actor failover for automatic recovery. Mars only needs to add a configuration for fault recovery, without needing to care about the underlying monitoring, restart, and other issues.

Fast and adaptive scale-out. Thanks to Ray's actor model, there is no need to pull images first and then create a Mars cluster in a Ray cluster. As a result, Mars clusters can scale out in less than 5 seconds when the workload increases.

Fast and adaptive scale-in. Ray's object store is a global service (similar to the external shuffle service of a traditional batch computing engine). Therefore, a Mars worker does not need to migrate its data when it goes offline, and it can go offline in seconds.
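The failover behaviour described above can be pictured with a small pure-Python toy model. It is not Mars or Ray code: `Supervisor`, `make_flaky_factory`, and the supervisor's `max_restarts` argument are hypothetical names (Ray actors do expose a similarly named `max_restarts` option, but the real monitoring and restart logic lives inside Ray). The supervisor recreates a crashed worker from its factory until a restart budget is used up; for simplicity it also retries the failed call.

```python
from typing import Callable


class Supervisor:
    """Toy supervisor: recreate a worker after a crash, up to max_restarts times."""

    def __init__(self, factory: Callable[[], object], max_restarts: int):
        self.factory = factory
        self.max_restarts = max_restarts
        self.restarts = 0
        self.worker = factory()

    def call(self, method: str, *args):
        while True:
            try:
                return getattr(self.worker, method)(*args)
            except RuntimeError:  # a RuntimeError stands in for a crashed worker
                if self.restarts >= self.max_restarts:
                    raise  # restart budget exhausted: surface the failure
                self.restarts += 1
                self.worker = self.factory()  # a fresh worker replaces the dead one


def make_flaky_factory(failures: int) -> Callable[[], object]:
    """Build a hypothetical worker class whose first `failures` calls (across restarts) crash."""
    state = {"left": failures}

    class Worker:
        def square(self, x: int) -> int:
            if state["left"] > 0:
                state["left"] -= 1
                raise RuntimeError("worker died")
            return x * x

    return Worker


sup = Supervisor(make_flaky_factory(failures=2), max_restarts=3)
print(sup.call("square", 7), sup.restarts)  # 49 2
```

The point of the sketch is the division of labour: the component code (`Worker`) knows nothing about restarts, just as Mars only adds a fault-recovery configuration and leaves the restart mechanics to Ray.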

While implementing the Ray backend, we also contributed auto scaling, scheduling, failover, operators, and stability optimizations to Mars.

XGBoost On Ray

XGBoost on Ray is a Ray-based distributed backend for XGBoost. Compared with native XGBoost, it supports the following features:

Multi-node and multi-GPU parallel training. The XGBoost backend supports automatic cross-GPU communication using NCCL2, so you only need to start one actor on each GPU. For example, if you have two machines with four GPU cards each, you can create eight actors by setting gpus_per_actor = 1, so that each actor holds one GPU card.

Seamless integration with Ray Tune, the popular distributed hyperparameter tuning library. You only need to put the training code into a function and then pass that function, together with the hyperparameters to tune, to tune.run to perform parallel training and parameter search. XGBoost on Ray also detects whether Ray Tune is being used for the parameter search, adjusts its actor placement policy accordingly, and reports the results back to Ray Tune.

Advanced fault tolerance mechanisms. In non-elastic training, the entire training process is suspended when a worker fails, and it resumes after the failed worker recovers and reloads its data. Elastic training is also supported: after a worker fails, XGBoost on Ray continues training with the remaining workers, and the failed workers rejoin once they are back and have loaded their data. This greatly speeds up training when data loading takes up most of the time, at some cost to accuracy; with large-scale data, however, the impact is very small. You can learn more about this here.

Distributed data loading. XGBoost on Ray accepts distributed DataFrames and other distributed data as input, as well as NumPy, pandas, Ray Datasets, and other datasets. For non-distributed datasets, data is loaded and sharded centrally on the head node and then written to the Ray object store, from which the actor on each node reads it. For distributed datasets, each node reads its data directly into the Ray object store.
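The GPU example and the centralized loading path above both reduce to simple arithmetic: one actor per GPU card, and one shard of rows per actor. A minimal sketch, assuming contiguous, near-equal row ranges; `shard_bounds` is a hypothetical helper, and XGBoost on Ray's own sharding may split data differently.

```python
from typing import List, Tuple


def shard_bounds(n_rows: int, num_actors: int) -> List[Tuple[int, int]]:
    """Split rows [0, n_rows) into num_actors contiguous, near-equal [start, end) ranges."""
    base, extra = divmod(n_rows, num_actors)
    bounds, start = [], 0
    for i in range(num_actors):
        size = base + (1 if i < extra else 0)  # the first `extra` shards get one extra row
        bounds.append((start, start + size))
        start += size
    return bounds


machines, gpus_per_machine = 2, 4
num_actors = machines * gpus_per_machine  # gpus_per_actor = 1, so one actor per GPU card
print(num_actors)  # 8
print(shard_bounds(100, num_actors))
# [(0, 13), (13, 26), (26, 39), (39, 52), (52, 64), (64, 76), (76, 88), (88, 100)]
```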

Mars+XGBoost AI Pipeline

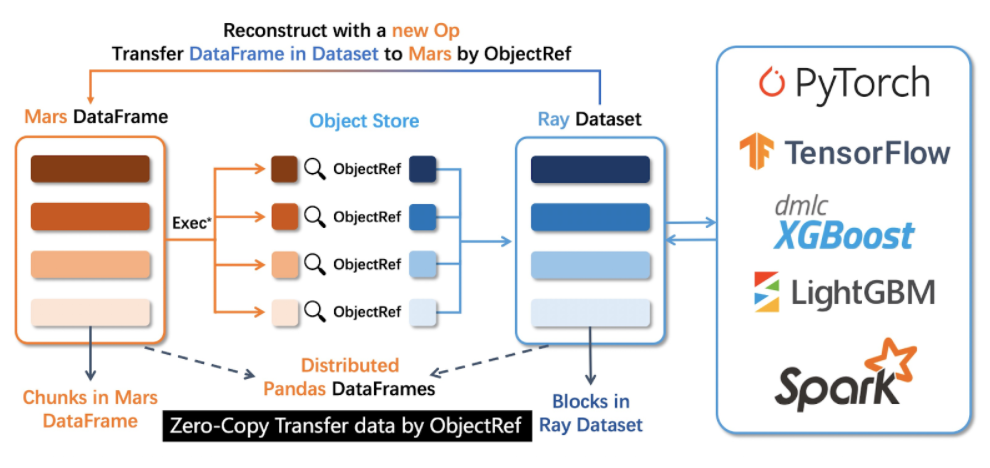

Generally, the most time-consuming parts of machine learning are data processing and training. Traditional solutions require external storage (usually a distributed file system) to exchange data between data processing and training, which is inefficient. With Mars and XGBoost On Ray, data is stored in the distributed object store provided by Ray and exchanged through its shared-memory object store (zero-copy), which avoids this extra overhead.
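The zero-copy idea can be illustrated with Python's standard `multiprocessing.shared_memory` module. This is only an analogy for Ray's object store, not its API: a producer writes a buffer once into a named shared-memory segment, and a consumer attaches to it by name and reads the values through a `memoryview` without copying them into a new buffer. The helpers `producer` and `consumer_sum` are hypothetical, and both sides run in one process here for brevity; in practice they would be separate processes on the same node.

```python
import struct
from multiprocessing import shared_memory
from typing import List


def producer(values: List[float]) -> shared_memory.SharedMemory:
    """Write float64 values once into a new named shared-memory segment."""
    shm = shared_memory.SharedMemory(create=True, size=8 * len(values))
    struct.pack_into(f"{len(values)}d", shm.buf, 0, *values)
    return shm


def consumer_sum(name: str, count: int) -> float:
    """Attach to the segment by name and sum the values in place (no copy of the buffer)."""
    shm = shared_memory.SharedMemory(name=name)  # attach to the existing segment
    view = shm.buf.cast("d")  # reinterpret the raw bytes as doubles, without copying
    try:
        return sum(view[i] for i in range(count))
    finally:
        view.release()  # exported views must be released before closing
        shm.close()


values = [1.5, 2.5, 3.0]
shm = producer(values)
try:
    print(consumer_sum(shm.name, len(values)))  # 7.0
finally:
    shm.close()
    shm.unlink()  # free the segment once every reader is done
```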

In order to achieve highly efficient data exchange between Mars and XGBoost, we use Ray’s distributed dataset abstraction, Ray Datasets. Ray Datasets is a distributed Arrow dataset on Ray, which provides a unified data abstraction on Ray and serves as a standard method for loading data into a Ray cluster in parallel, doing basic parallel data processing, and efficiently exchanging data between Ray-based frameworks, libraries, and applications.

We support conversions between Mars DataFrame and Ray Datasets. XGBoost on Ray can use Mars DataFrame as input data for training and prediction.

Currently, objects stored in Mars are in Pandas format, so converting to Arrow format may incur some overhead. To resolve this, we’ve added support for zero-copy loading of both Arrow and Pandas data formats, which will be available in the next Ray release.

Build an ML pipeline using Mars and XGBoost On Ray

This section of the post goes over an end-to-end code example based on generated data. The entire process consists of data preparation, model training, and model prediction. In a real scenario, data cleansing and feature engineering are also included.

Data Preparation

The code below uses make_classification from Mars learn, a distributed version of scikit-learn provided by Mars, to generate a classification dataset.

1import mars.dataframe as md

2from mars.learn.datasets import make_classification

3# generate data

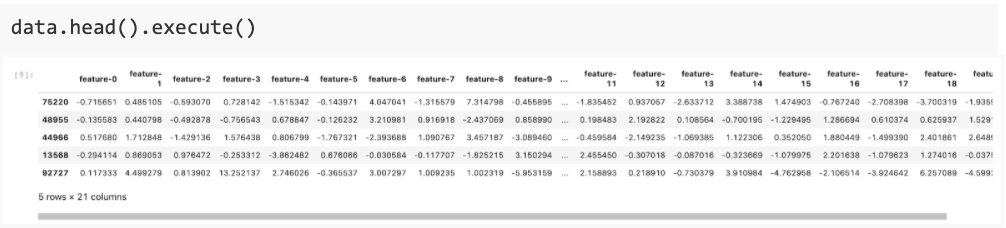

4X: md.DataFrame, y: md.DataFrame = make_classification(n_samples=n_samples, n_features=n_features, n_classes=n_classes, n_informative=n_informative, n_redundant=n_redundant, random_state=shuffle_seed)Like pandas, the head command can be used to explore the first five lines of data:

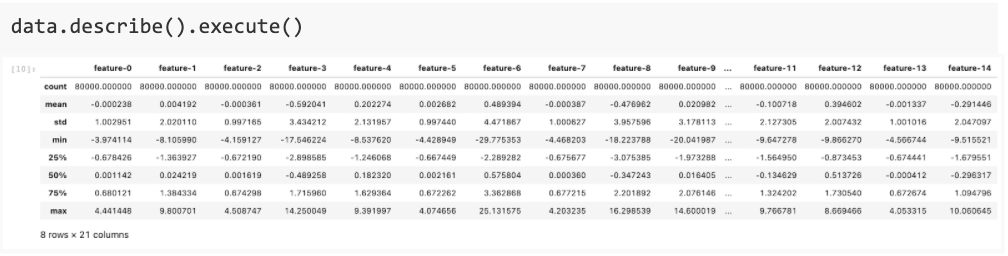

describe can be used to view the data distribution:

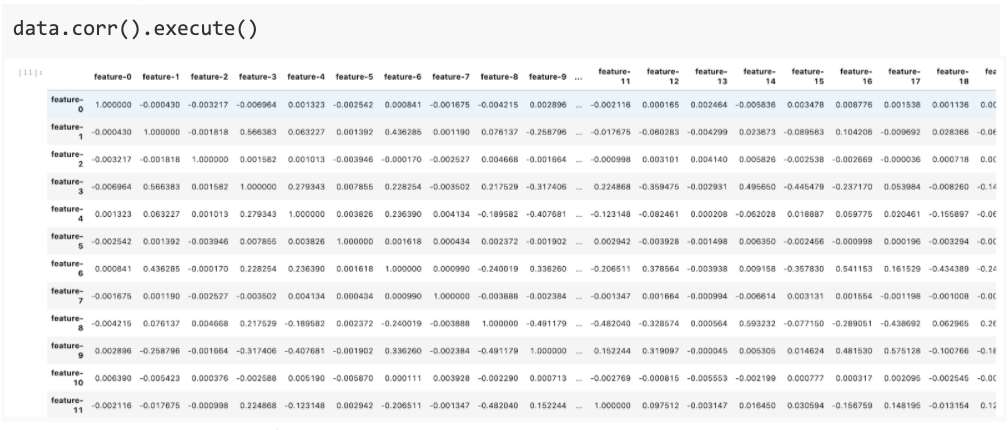

You can also explore feature correlation. If highly correlated features appear, dimension reduction might be required:

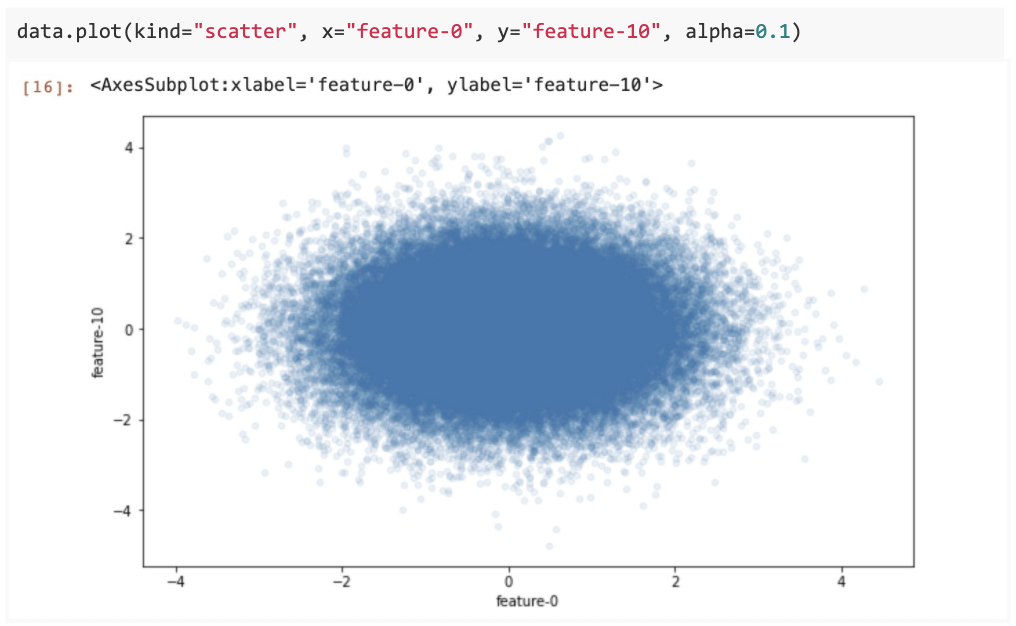
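A minimal pandas sketch of that check, run on a tiny hand-made frame: list every feature pair whose absolute Pearson correlation reaches a threshold. Since Mars DataFrame mirrors the pandas API, the same idea should carry over, but `highly_correlated_pairs`, the sample values, and the 0.9 threshold are illustrative assumptions rather than part of the original pipeline.

```python
import pandas as pd


def highly_correlated_pairs(df: pd.DataFrame, threshold: float = 0.9):
    """Return (col_a, col_b, |corr|) for each column pair with |Pearson r| >= threshold."""
    corr = df.corr().abs()
    cols = list(corr.columns)
    pairs = []
    for i, a in enumerate(cols):
        for b in cols[i + 1:]:  # upper triangle only: skip self-pairs and duplicates
            if corr.loc[a, b] >= threshold:
                pairs.append((a, b, round(float(corr.loc[a, b]), 3)))
    return pairs


df = pd.DataFrame({
    "feature-0": [1.0, 2.0, 3.0, 4.0, 5.0],
    "feature-1": [2.0, 4.1, 6.0, 8.2, 9.9],  # roughly 2 * feature-0
    "feature-2": [5.0, 3.0, 4.0, 1.0, 2.0],
})
print(highly_correlated_pairs(df))  # [('feature-0', 'feature-1', 0.999)]
```

A pair flagged this way is a candidate for dropping one of the two features or for dimension reduction.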

The correlation between features can also be analyzed more intuitively through a plot:

Next, split the data into a training set and a test set.

```python
from mars.learn.model_selection import train_test_split

test_size = 0.2  # fraction of rows held out for testing, as in the complete code
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=test_size, random_state=shuffle_seed)
```

The next step is to create XGBoost matrices (RayDMatrix) for training and testing:

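```python
import mars.dataframe as md
from xgboost_ray import RayDMatrix

# join features and labels into one DataFrame per split
df_train = md.concat([X_train, y_train], axis=1)
df_test = md.concat([X_test, y_test], axis=1)
num_shards = 10  # number of Ray Dataset shards, as in the complete code
# convert Mars DataFrames to Ray Datasets
ds_train = md.to_ray_dataset(df_train, num_shards=num_shards)
ds_test = md.to_ray_dataset(df_test, num_shards=num_shards)
# convert Ray Datasets to RayDMatrix; the "labels" column holds the target
train_set = RayDMatrix(data=ds_train, label="labels")
test_set = RayDMatrix(data=ds_test, label="labels")
```

Training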

The training process below uses the native XGBoost API for training. Note that the scikit-learn API provided by XGBoost-Ray could also be used for training. In a realistic training case, cross-validation or a separate validation set would be used to evaluate the model. In this case, these steps are omitted.
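The training parameters request two evaluation metrics, mlogloss and merror. As a reference, here is a pure-Python sketch of the standard definitions of these multi-class metrics: the mean negative log-probability assigned to the true class, and the fraction of rows whose most probable class is wrong (that is, 1 minus accuracy). It is not XGBoost's implementation, and the clipping constant `eps` is an assumption added to avoid log(0).

```python
import math
from typing import Sequence


def mlogloss(labels: Sequence[int], probs: Sequence[Sequence[float]], eps: float = 1e-15) -> float:
    """Mean negative log-probability of the true class (clipped to avoid log(0))."""
    total = 0.0
    for y, p in zip(labels, probs):
        total -= math.log(min(max(p[y], eps), 1 - eps))
    return total / len(labels)


def merror(labels: Sequence[int], probs: Sequence[Sequence[float]]) -> float:
    """Fraction of rows whose most probable class differs from the true class."""
    wrong = sum(1 for y, p in zip(labels, probs)
                if max(range(len(p)), key=lambda k: p[k]) != y)
    return wrong / len(labels)


labels = [0, 2, 1]
probs = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.5, 0.4, 0.1]]
print(round(mlogloss(labels, probs), 4), merror(labels, probs))  # 0.5946 0.3333333333333333
```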

```python
from xgboost_ray import RayParams, train

# 10 training actors with 8 CPUs each, as in the complete code
ray_params = RayParams(num_actors=10, cpus_per_actor=8)

evals_result = {}
params = {
    'nthread': 1,
    'objective': 'multi:softmax',
    'eval_metric': ['mlogloss', 'merror'],
    'num_class': n_classes,
    'eta': 0.1,
    'seed': 42
}
bst = train(
    params=params,
    dtrain=train_set,
    num_boost_round=200,
    evals=[(train_set, 'train')],
    evals_result=evals_result,
    verbose_eval=100,
    ray_params=ray_params
)
```

The scikit-learn style API could also have been used:

```python
from xgboost_ray import RayXGBClassifier

clf = RayXGBClassifier(
    n_jobs=4,  # In XGBoost-Ray, n_jobs sets the number of actors
    random_state=42
)
# The scikit-learn API will automatically convert the data
# to RayDMatrix format as needed.
# X can also be passed as a RayDMatrix, in which case y is ignored.
clf.fit(train_set, None)
```

Predict

The overall prediction process is similar to that of training.

```python
from xgboost_ray import predict

# predict on the test set; with 'multi:softmax' each prediction is a class id
pred = predict(bst, test_set, ray_params=ray_params)
# fraction of test rows whose predicted class matches the label
accuracy = (ds_test.dataframe['labels'].to_pandas() == pred).astype(int).sum() / ds_test.dataframe.shape[0]
print(f"Prediction Accuracy: {accuracy}")
```

If the model was trained with the scikit-learn style API, use the same API for prediction:

```python
pred_ray = clf.predict(test_set)
print(pred_ray)

pred_proba_ray = clf.predict_proba(test_set)
print(pred_proba_ray)
```

Complete Code

The code below shows that it is possible to build a complete end-to-end distributed data processing and training job in fewer than 100 lines.

```python
import logging

import mars
import mars.dataframe as md
import ray
from mars.learn.datasets import make_classification
from mars.learn.model_selection import train_test_split
from xgboost_ray import RayDMatrix, RayParams, predict, train

logger = logging.getLogger(__name__)
logging.basicConfig(format=ray.ray_constants.LOGGER_FORMAT, level=logging.INFO)


def _load_data(n_samples: int,
               n_features: int,
               n_classes: int,
               test_size: float = 0.1,
               shuffle_seed: int = 42):
    n_informative = int(n_features * 0.5)
    n_redundant = int(n_features * 0.2)
    # generate dataset
    X, y = make_classification(n_samples=n_samples, n_features=n_features,
                               n_classes=n_classes, n_informative=n_informative,
                               n_redundant=n_redundant, random_state=shuffle_seed)
    X, y = md.DataFrame(X), md.DataFrame({"labels": y})
    X.columns = ['feature-' + str(i) for i in range(n_features)]
    # split dataset
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, random_state=shuffle_seed)
    return md.concat([X_train, y_train], axis=1), md.concat([X_test, y_test], axis=1)


def main(*args):
    n_samples, n_features, worker_num, worker_cpu, num_shards = 10 ** 5, 20, 10, 8, 10
    ray_params = RayParams(
        num_actors=10,
        cpus_per_actor=8
    )

    # setup mars
    mars.new_ray_session(worker_num=worker_num, worker_cpu=worker_cpu, worker_mem=8 * 1024 ** 3)
    n_classes = 10
    df_train, df_test = _load_data(n_samples, n_features, n_classes, test_size=0.2)
    # convert mars DataFrame to Ray dataset
    ds_train = md.to_ray_dataset(df_train, num_shards=num_shards)
    ds_test = md.to_ray_dataset(df_test, num_shards=num_shards)
    train_set = RayDMatrix(data=ds_train, label="labels")
    test_set = RayDMatrix(data=ds_test, label="labels")

    evals_result = {}
    params = {
        'nthread': 1,
        'objective': 'multi:softmax',
        'eval_metric': ['mlogloss', 'merror'],
        'num_class': n_classes,
        'eta': 0.1,
        'seed': 42
    }
    bst = train(
        params=params,
        dtrain=train_set,
        num_boost_round=200,
        evals=[(train_set, 'train')],
        evals_result=evals_result,
        verbose_eval=100,
        ray_params=ray_params
    )
    # predict on the test set
    pred = predict(bst, test_set, ray_params=ray_params)
    accuracy = (ds_test.dataframe['labels'].to_pandas() == pred).astype(int).sum() / ds_test.dataframe.shape[0]
    logger.info("Prediction Accuracy: %.4f", accuracy)


if __name__ == "__main__":
    main()
```

Future work

Currently, Mars on Ray is widely used at Ant Group and in the open source Mars community. We plan to further optimize Mars and Ray in the following ways:

Mars & XGBoost worker colocation. Currently, Mars and XGBoost on Ray each use Ray placement groups separately for custom scheduling, so Mars and XGBoost workers are generally not located on the same nodes. When XGBoost reads Ray Datasets data and converts it to the internal DMatrix format, it needs to pull data from the object stores of other nodes, which may incur data transfer overhead. In the future, Mars and XGBoost workers will be colocated on the same nodes to reduce data transfer overhead and speed up training.

Task scheduling optimization. We are currently working on locality-aware scheduling, which will schedule computation tasks onto the nodes where their data lives and so reduce the overhead of internal data transfer. This work is partially done for some scenarios and needs further optimization.

DataFrame storage format optimization. When a Pandas DataFrame is stored in the object store, the entire Pandas DataFrame is serialized and then written into the object store. When manipulating partial columns of a DataFrame object, the entire DataFrame object needs to be rewritten into another Ray object, resulting in additional memory copy and memory usage overhead. We plan to store Pandas DataFrames and Arrow tables on a column-by-column basis to avoid this overhead.
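The locality-aware scheduling item above can be sketched as a greedy rule: place a task on the node that already holds the most bytes of its inputs, so that only the remainder has to cross the network. This is a simplified, hypothetical heuristic (`pick_node`, the node names, and the byte sizes are made up), not the scheduler Mars or Ray actually ships.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def pick_node(inputs: List[Tuple[str, int]]) -> Tuple[str, int]:
    """Given (node, size_in_bytes) for each input object, return the node holding
    the most input bytes and the number of bytes that would still be transferred."""
    local_bytes: Dict[str, int] = defaultdict(int)
    for node, size in inputs:
        local_bytes[node] += size
    best = max(local_bytes, key=local_bytes.__getitem__)  # first node seen wins ties
    transferred = sum(local_bytes.values()) - local_bytes[best]
    return best, transferred


task_inputs = [("node-a", 300), ("node-b", 500), ("node-a", 400)]
print(pick_node(task_inputs))  # ('node-a', 500)
```

Placing the task on node-a moves 500 bytes instead of the 700 bytes that placing it on node-b would require.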

Pandas originated in the financial industry, and a large number of businesses at Ant Group also use pandas for data analysis and processing. We believe that with these optimizations to Mars and Ray, we can broaden the use cases and further improve the productivity of data and algorithm engineers.

About us

We are in the Computing Intelligence Technology Department at Ant Group, which spans Silicon Valley, Beijing, Shanghai, Hangzhou, and Chengdu. The engineering culture we pursue values openness, simplicity, iteration, efficiency, and solving problems with technology! We warmly invite you to join us!

Please contact us at antcomputing@antgroup.com