Gang Scheduling Ray Clusters on Kubernetes with Multi-Cluster-App-Dispatcher (MCAD)

Introduction

Large-scale machine learning workloads on Kubernetes commonly suffer from the lack of a resource reservation system that allows gang scheduling. For example, a training job requiring 100 GPU pods can be stuck with only 80 of its pods provisioned while the remaining 20 sit in a pending state. This is common when other jobs are competing for resources in the same K8s cluster.

Using KubeRay with the Multi-Cluster-App-Dispatcher (MCAD) Kubernetes controller helps to avoid such situations and unblock your ML workload. Specifically, MCAD allows you to queue each of your Ray workloads until resource availability requirements are met. With MCAD, your Ray cluster’s pods will only be created once there is a guarantee that all of the pods can be scheduled.

Workload requirements

To train large models using Ray, users must submit Ray workloads that are long-running and run to completion. Such workloads benefit from the following capabilities:

1. Gang scheduling: Start one of the queued Ray clusters when aggregated resources (i.e., resources for all Ray worker pods) are available in the Kubernetes cluster.

2. Workload pre-emption: Pre-empt lower priority Ray workloads when high priority Ray workloads are requested.

Requirement #2, workload pre-emption, is in the future roadmap for MCAD development. This blog helps to address requirement #1, gang-scheduling.
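To make requirement #1 concrete, here is a minimal sketch of the difference between pod-by-pod placement and an all-or-nothing (gang) check. It is a toy model, not MCAD's implementation; the function names and the GPU-only accounting are assumptions made for illustration.

```python
def place_pod_by_pod(free_gpus: int, pods_needed: int, gpus_per_pod: int = 1) -> int:
    """Greedy placement: start as many pods as currently fit.

    Returns the number of pods started; the rest stay pending.
    """
    return min(pods_needed, free_gpus // gpus_per_pod)


def place_as_gang(free_gpus: int, pods_needed: int, gpus_per_pod: int = 1) -> int:
    """Gang placement: start every pod of the job, or none at all."""
    if pods_needed * gpus_per_pod <= free_gpus:
        return pods_needed
    return 0  # the whole job stays queued and ties up no GPUs


if __name__ == "__main__":
    # Scenario from the introduction: a 100-pod job, but only 80 GPUs are free.
    started = place_pod_by_pod(free_gpus=80, pods_needed=100)
    print(f"pod-by-pod: {started} running, {100 - started} pending")
    print(f"gang, 80 free:  {place_as_gang(free_gpus=80, pods_needed=100)} running")
    print(f"gang, 100 free: {place_as_gang(free_gpus=100, pods_needed=100)} running")
```

With pod-by-pod placement the job holds 80 GPUs without being able to make progress; with the gang check those GPUs remain free for other jobs until all 100 can be granted at once.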

Note that Ray supports internal gang-scheduling via placement groups. Placement groups allow you to queue a Ray workload until the Ray cluster can accommodate the workload’s tasks and actors. On the other hand, MCAD allows you to queue Ray cluster creation until your Kubernetes cluster can accommodate all of the Ray cluster’s pods.

Components for scaling workloads on Kubernetes

In this blog, we discuss how specific workloads can be scaled performantly with three components: Ray, KubeRay, and MCAD. Ray is the distributed Python runtime that executes your ML application. KubeRay provides K8s-native tooling to manage your Ray cluster on Kubernetes. Finally, MCAD ensures physical resource availability, so that your Ray cluster never gets stuck in a partially provisioned state. We elaborate on Ray, KubeRay, and MCAD below.

Ray

Ray is a unified way to scale Python and AI applications: the same code runs seamlessly on a laptop or on a cluster. Ray is designed to be a general-purpose library, meaning that it can run a broad array of distributed compute workloads performantly. If your application is written in Python, you can scale it with Ray; no other infrastructure is required.

KubeRay

KubeRay is an open-source toolkit to run Ray applications on Kubernetes. KubeRay provides several tools to simplify managing Ray clusters on Kubernetes. The key player is the KubeRay operator, which converts your Ray configuration into a Ray cluster consisting of one or more Ray nodes; each Ray node occupies its own Kubernetes pod.

Multi-Cluster-App-Dispatcher (MCAD)

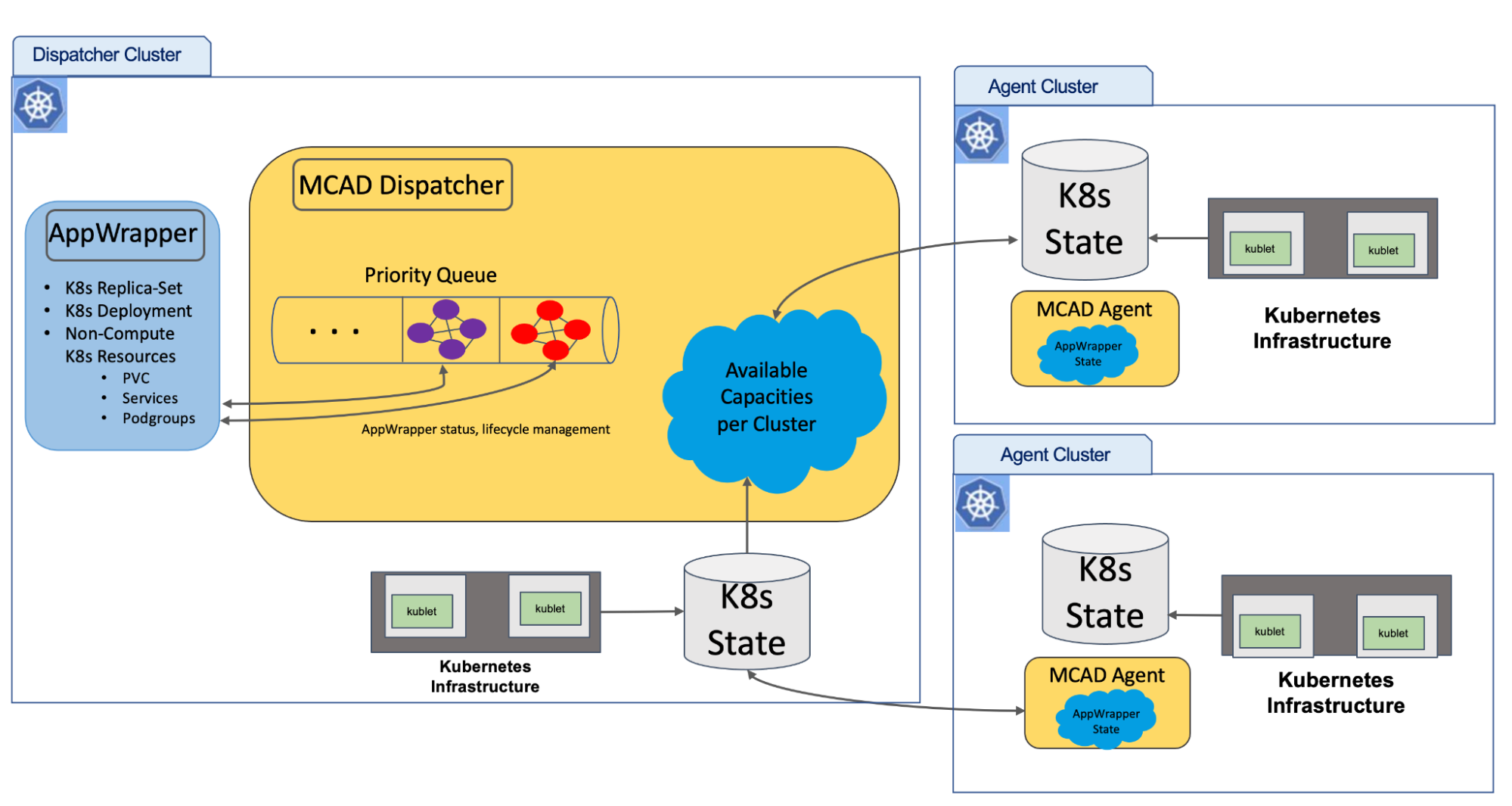

The Multi-Cluster-App-Dispatcher (MCAD) is a Kubernetes controller providing mechanisms for applications to manage batch jobs in a single Kubernetes cluster or multi-Kubernetes-cluster environment:

Figure 1. MCAD dispatch cluster

MCAD uses AppWrappers to wrap any Kubernetes object (Service, PodGroup, Job, Deployment, or any custom resource) the user provides. An AppWrapper is another object represented as a custom resource (CR) that wraps any Kubernetes object.

Wrapping objects means appending user YAML definitions at the .Spec.GenericItem level inside the AppWrapper.

User objects within an AppWrapper are queued until aggregated resources are available in one of the Kubernetes clusters.

A sample AppWrapper configuration can be found here.
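The queueing behavior described above can be illustrated with a small simulation. This is a sketch under stated assumptions, not MCAD's actual code: the `Wrapper` and `ToyDispatcher` classes, the state strings, and the cluster capacity are all hypothetical, and only aggregate resource totals are tracked (no per-node placement).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Wrapper:
    """Hypothetical stand-in for an AppWrapper: a name plus aggregate requests."""
    name: str
    requests: dict  # e.g. {"cpu": 6, "gpu": 2}
    state: str = "Queueing"


@dataclass
class ToyDispatcher:
    """FIFO queue that dispatches the head item only when it fits entirely."""
    free: dict
    queue: deque = field(default_factory=deque)
    running: dict = field(default_factory=dict)

    def submit(self, w: Wrapper) -> None:
        self.queue.append(w)
        self._dispatch()

    def delete(self, name: str) -> None:
        w = self.running.pop(name)
        for res, amount in w.requests.items():
            self.free[res] = self.free.get(res, 0) + amount  # return to the pool
        self._dispatch()

    def _fits(self, w: Wrapper) -> bool:
        return all(self.free.get(r, 0) >= a for r, a in w.requests.items())

    def _dispatch(self) -> None:
        # Strict FIFO: only the head of the queue is ever considered.
        while self.queue and self._fits(self.queue[0]):
            w = self.queue.popleft()
            for res, amount in w.requests.items():
                self.free[res] = self.free.get(res, 0) - amount
            w.state = "Dispatched"
            self.running[w.name] = w


if __name__ == "__main__":
    d = ToyDispatcher(free={"cpu": 8, "gpu": 2})  # assumed cluster capacity
    first = Wrapper("raycluster-glue", {"cpu": 6, "gpu": 2})
    second = Wrapper("raycluster-glue-1", {"cpu": 6, "gpu": 2})
    d.submit(first)
    d.submit(second)
    print(first.state, second.state)  # the second wrapper has to wait
    d.delete("raycluster-glue")
    print(second.state)  # resources were released, so it is dispatched
```

The point of the sketch is that nothing belonging to the second wrapper is created while it waits, which is the property that keeps a cluster from ending up partially provisioned.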

Running the workload

We will run the General Language Understanding Evaluation (GLUE) benchmark. The GLUE benchmark is a collection of resources for training, evaluating, and analyzing natural language understanding systems. For our example workload, we will fine-tune the RoBERTa model, with KubeRay and MCAD installed on OpenShift or Kubernetes.

Fine-tuning is a process that takes a model that has already been trained for one given task and then tunes or tweaks the model to make it perform another similar task. In this example, we will train 90 models on different natural language understanding (NLU) tasks. Running a GLUE workload on a single V100 GPU would take tens of GPU hours. We plan to run fine-tuning on 4 GPUs. With enough resources available in the cluster, we can run the job fairly quickly in a few hours.

Pre-requisites:

Please ensure KubeRay and MCAD are installed on your Kubernetes cluster using the steps outlined here. Configure a blob store with the GLUE dataset by following the instructions here.

This workload requires Kubernetes nodes with GPUs available. We recommend using at least 2 AWS p3.2xlarge nodes or the equivalent with your preferred cloud provider.

The following commands demonstrate how to run the GLUE workload described above. The commands will use the AppWrapper configuration aw-ray-glue.yaml. Below is a condensed view of the configuration. Observe that the AppWrapper reserves aggregate resources for the wrapped RayCluster. The wrapped RayCluster object is specified under generictemplate.

    kind: AppWrapper
    metadata: {"name": "raycluster-glue"}
    spec:
      resources:
        # Aggregate resources reserved for the wrapped RayCluster
        custompodresources:
        - replicas: 2
          requests: {"cpu": "3", "memory": "16G", "nvidia.com/gpu": "1"}
          limits: {"cpu": "3", "memory": "16G", "nvidia.com/gpu": "1"}
        # The wrapped RayCluster object
        generictemplate:
          kind: RayCluster
          spec:
            headGroupSpec:
              containers:
              - image: projectcodeflare/codeflare-glue:latest
                resources:
                  limits: {"cpu": "2", "memory": "16G", "nvidia.com/gpu": "0"}
                  requests: {"cpu": "2", "memory": "16G", "nvidia.com/gpu": "0"}
            workerGroupSpecs:
            - replicas: 1
              containers:
              - image: projectcodeflare/codeflare-glue:latest
                resources:
                  limits: {"cpu": "4", "memory": "16G", "nvidia.com/gpu": "2"}
                  requests: {"cpu": "4", "memory": "16G", "nvidia.com/gpu": "2"}

To schedule our Ray cluster, we need to place a head pod with 2 CPUs and 16GB memory. We also need to place a worker pod with 4 CPUs, 16GB memory, and 2 GPUs. To accommodate these requirements, the AppWrapper reserves aggregate resources of 2 * (3 CPUs + 16GB memory + 1 GPU).
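As a quick check of that arithmetic, the sketch below sums the per-pod requests from the condensed configuration and compares the total with the AppWrapper reservation. The dictionary keys and helper names are illustrative choices, and memory is handled as whole gigabytes rather than parsed from Kubernetes quantity strings.

```python
# Per-pod requests copied from the condensed RayCluster spec.
HEAD = {"cpu": 2, "memory_gb": 16, "gpu": 0}
WORKER = {"cpu": 4, "memory_gb": 16, "gpu": 2}
WORKER_REPLICAS = 1

# custompodresources: 2 replicas of (3 CPUs, 16G memory, 1 GPU).
RESERVED_PER_REPLICA = {"cpu": 3, "memory_gb": 16, "gpu": 1}
RESERVED_REPLICAS = 2


def cluster_demand(head: dict, worker: dict, worker_replicas: int) -> dict:
    """Total resources requested by one head pod plus all worker pods."""
    return {key: head[key] + worker[key] * worker_replicas for key in head}


def reservation(per_replica: dict, replicas: int) -> dict:
    """Aggregate resources reserved by the AppWrapper."""
    return {key: amount * replicas for key, amount in per_replica.items()}


if __name__ == "__main__":
    demand = cluster_demand(HEAD, WORKER, WORKER_REPLICAS)
    reserved = reservation(RESERVED_PER_REPLICA, RESERVED_REPLICAS)
    print("demand:  ", demand)
    print("reserved:", reserved)
    print("match:   ", demand == reserved)
```

Both sides come to 6 CPUs, 32GB of memory, and 2 GPUs, so the reservation covers the head and worker pods exactly.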

To demonstrate MCAD’s gang scheduling functionality, we create two AppWrappers. We create our first AppWrapper as follows:

    $ kubectl apply -f aw-ray-glue.yaml   # First Ray GLUE cluster

The status of the AppWrapper then reports the following conditions:

    Conditions:
      Last Transition Micro Time:  2022-10-03T18:14:16.693259Z
      Last Update Micro Time:      2022-10-03T18:14:16.693257Z
      Status:                      True
      Type:                        Init
      Last Transition Micro Time:  2022-10-03T18:14:16.693574Z
      Last Update Micro Time:      2022-10-03T18:14:16.693571Z
      Reason:                      AwaitingHeadOfLine
      Status:                      True
      Type:                        Queueing
      Last Transition Micro Time:  2022-10-03T18:14:16.711161Z
      Last Update Micro Time:      2022-10-03T18:14:16.711159Z
      Reason:                      FrontOfQueue.
      Status:                      True
      Type:                        HeadOfLine
      Last Transition Micro Time:  2022-10-03T18:14:18.363510Z
      Last Update Micro Time:      2022-10-03T18:14:18.363509Z
      Reason:                      AppWrapperRunnable
      Status:                      True
      Type:                        Dispatched
    Controllerfirsttimestamp:      2022-10-03T18:14:16.692755Z
    Filterignore:                  true
    Queuejobstate:                 Dispatched
    Sender:                        before manageQueueJob - afterEtcdDispatching
    State:                         Running
    Systempriority:                9

The above output shows the conditions that the Ray AppWrapper object passes through on its way to being dispatched.

Init: State is set when the Ray AppWrapper is submitted to MCAD.

Queueing: State is set when the Ray AppWrapper object is queued because aggregated resources are not available in the cluster at that point in time.

HeadOfLine: State is set after applying the FIFO policy to bring the Ray AppWrapper to the head of the queue.

Dispatched: State is set when the Ray AppWrapper is dispatched and aggregated resources are available in the Kubernetes cluster.

Running: State is set after all pods associated with the Ray AppWrapper are in phase Running.
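The transition timestamps in the output above also show how long the AppWrapper spent in each stage. The following sketch computes the elapsed time since Init for every condition; the timestamps are copied from the output, while the helper names are illustrative.

```python
from datetime import datetime

# (condition type, last transition time) pairs copied from the output above.
CONDITIONS = [
    ("Init", "2022-10-03T18:14:16.693259Z"),
    ("Queueing", "2022-10-03T18:14:16.693574Z"),
    ("HeadOfLine", "2022-10-03T18:14:16.711161Z"),
    ("Dispatched", "2022-10-03T18:14:18.363510Z"),
]


def parse_micro_time(timestamp: str) -> datetime:
    """Parse a UTC timestamp with microsecond precision and a trailing 'Z'."""
    return datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")


def elapsed_since_first(conditions: list) -> dict:
    """Seconds between the first condition and each subsequent one."""
    start = parse_micro_time(conditions[0][1])
    return {
        name: (parse_micro_time(ts) - start).total_seconds()
        for name, ts in conditions
    }


if __name__ == "__main__":
    for name, seconds in elapsed_since_first(CONDITIONS).items():
        print(f"{name:<12}{seconds:.6f} s after Init")
```

In this run the AppWrapper reached Dispatched about 1.67 seconds after Init, which is expected because the cluster had enough free resources for the first Ray cluster.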

Now follow these instructions to run the GLUE benchmark. Training is kicked off using the Ray driver script glue_benchmark.py.

Next, change the AppWrapper name under .metadata.name and the RayCluster name under .spec.GenericItems.generictemplate.metadata.name in aw-ray-glue.yaml, then re-apply the same YAML with the following command:

    $ kubectl apply -f aw-ray-glue.yaml   # Second Ray GLUE cluster
    $ kubectl get appwrappers
    NAME                AGE
    raycluster-glue     30m
    raycluster-glue-1   5s

We see that two AppWrappers have been created. However, only the pods corresponding to the first AppWrapper have been provisioned:

    $ kubectl get pods
    NAME                                    READY   STATUS    RESTARTS   AGE
    glue-cluster-head-8nnh9                 1/1     Running   0          31m
    glue-cluster-worker-small-group-ssk75   1/1     Running   0          31m

When the first GLUE cluster completes its work and is deleted, MCAD will dispatch the second GLUE cluster; the second cluster’s pods will be created only at dispatch time. You may optionally observe this by deleting the first AppWrapper:

    $ kubectl delete appwrapper raycluster-glue

After deleting the first AppWrapper, the second RayCluster’s pods should be created; you can confirm this by asking Kubernetes to list pods:

    $ kubectl get pods

Conclusion

In this blog, we configured a Kubernetes cluster that had KubeRay and MCAD installed. We submitted Ray clusters that ran a GLUE workload and used an MCAD AppWrapper to guarantee resource availability for the workload. In doing so, we learned about gang dispatching and queuing of Ray clusters. In summary, MCAD allowed us to do batch computing with Ray on Kubernetes.

Please feel free to open issues for any questions on the KubeRay+MCAD integration.