How Nixtla uses Ray to accurately predict more than a million time series in half an hour

Nixtla is an open-source time-series startup that seeks to democratize the use of state-of-the-art models in the field. Its founders are building the platform and tools they wanted to have while forecasting for the world's leading companies. Recently, Nixtla launched a forecasting API powered by transfer learning with deep learning models and by efficient implementations of statistical models. The API relies on Ray for distributed computation.

Today, businesses in every industry collect time-series data and want to predict the patterns in it. Notable examples include measuring and predicting the temperature and humidity readings of sensors to help manufacturers prevent failures, or predicting streaming metrics to identify popular music and artists. Time-series forecasting also has applications such as predicting sales of thousands of SKUs across different locations for supply chain optimization, or anticipating peaks in the electricity market for cost-reduction strategies. On the storage side, the growing need for efficient time-series tools has been addressed by specialized databases such as Timescale and QuestDB. Now, we push the limits of time-series forecasting efficiency.

In this post, we will train and forecast millions of series with Nixtla’s StatsForecast library using its Ray integration for distributed computing.

About StatsForecast and AutoARIMA

StatsForecast is the first library to efficiently address the challenge of predicting millions of time series with AutoARIMA. Before StatsForecast and its Ray integration, practitioners would have had to spend a lot of time parallelizing their tasks manually, setting up their clusters from scratch, and depending on slower ARIMA implementations. Now, Nixtla facilitates these tasks using the full power of Numba and Ray.

AutoARIMA is one of the most widely used models for time-series forecasting. It was developed by Hyndman and Khandakar and, since its conception, has been an industry standard thanks to its speed and accuracy. ARIMA models a time series through three parameters: the number of autoregressive terms p, the number of differences d, and the number of moving-average terms q. AutoARIMA searches for the best ARIMA model automatically: the hyperparameter tuning (choosing the right p, d, q) happens inside the model through the Hyndman and Khandakar algorithm, so the user does not have to do it. The model leverages autocorrelations to produce accurate predictions and is a well-proven choice for strong baselines.
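For reference, the non-seasonal ARIMA(p, d, q) model that AutoARIMA searches over can be written with the backshift operator $B$ (where $B y_t = y_{t-1}$), following the notation of Hyndman's forecasting textbook. Here $c$ is a constant and $\varepsilon_t$ is white noise; seasonal terms are omitted for brevity:

$$
\left(1 - \phi_1 B - \cdots - \phi_p B^p\right)(1 - B)^d \, y_t = c + \left(1 + \theta_1 B + \cdots + \theta_q B^q\right)\varepsilon_t
$$

The $p$ lagged values enter through the $\phi$ coefficients, the $q$ lagged errors through the $\theta$ coefficients, and $(1 - B)^d$ differences the series $d$ times. In the Hyndman and Khandakar procedure, $d$ is chosen with unit-root tests and $(p, q)$ with a stepwise search that keeps the candidate with the lowest information criterion (AICc by default in R's auto.arima).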

Before Nixtla's implementation, the reference AutoARIMA was the R version originally developed by Hyndman (auto.arima in the forecast package). The main Python alternative was pmdarima, which is built on statsmodels. As this experiment shows, pmdarima is considerably slower and less accurate, particularly for seasonal time series.

StatsForecast compiles AutoARIMA just in time (JIT) with Numba, which makes it exceptionally fast. With Ray, the computation can be distributed across many cores and machines, scaling horizontally. Here we show how this lets data scientists and developers fit a million series in less than an hour while spending less than US$30 on AWS.
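Forecasting many series scales horizontally because each series is fitted on its own, with no communication between series. The sketch below shows that per-series fan-out in plain Python, assuming long-format rows of (unique_id, ds, y) similar to StatsForecast's input. `naive_forecast` is a hypothetical stand-in for AutoARIMA, and `ThreadPoolExecutor` stands in for Ray; this illustrates how the work splits, not how StatsForecast schedules it internally.

```python
"""Per-series fan-out: the pattern that StatsForecast + Ray parallelize.

Sketch only. ThreadPoolExecutor stands in for Ray so the example runs anywhere;
threads show the partitioning pattern but give no real CPU parallelism for
pure-Python work. naive_forecast is a hypothetical stand-in for AutoARIMA.
"""
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def naive_forecast(values, h):
    """Hypothetical stand-in model: repeat the last observation h times."""
    return [values[-1]] * h


def split_by_series(rows):
    """Group long-format (unique_id, ds, y) rows into one time-ordered list of y per series."""
    groups = defaultdict(list)
    for uid, ds, y in sorted(rows, key=lambda r: (r[0], r[1])):
        groups[uid].append(y)
    return dict(groups)


def forecast_all(rows, h, max_workers=4):
    """Fit each series independently and collect the forecasts by unique_id."""
    groups = split_by_series(rows)
    uids = list(groups)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(lambda uid: naive_forecast(groups[uid], h), uids)
        return dict(zip(uids, results))


if __name__ == "__main__":
    rows = [("a", 1, 10.0), ("a", 2, 12.0), ("b", 1, 5.0), ("b", 2, 4.0)]
    print(forecast_all(rows, h=3))
```

On a real cluster, the pool is replaced by Ray workers spread over many machines, which is what lets the same pattern scale to millions of series.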

The rest of this post is structured as follows:

Installing StatsForecast

Setting up a Ray cluster on AWS

Experimental design

Using StatsForecast on a Ray cluster

Conclusion

LinkInstalling StatsForecast

The first thing you have to do is install StatsForecast and Ray.

You can do this easily using the following line:

```bash
pip install "statsforecast[ray]"
```
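To confirm the installation from the same Python environment, you can check that the modules are importable. This is a minimal sketch; it assumes the import names are `statsforecast`, `ray` and `numba` (Numba being the JIT compiler StatsForecast relies on), and it checks only that the modules can be found, not their versions.

```python
"""Check that the packages installed by pip install "statsforecast[ray]" can be imported.

Sketch only: it verifies importability, not versions.
"""
import importlib.util


def missing_packages(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["statsforecast", "ray", "numba"])
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("statsforecast, ray and numba are all importable")
```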

Setting up a Ray cluster

Setting up a Ray cluster is as simple as following the cluster launcher instructions in the Ray documentation. Before launching the cluster, you need to set up your AWS credentials. The easiest way to do this is with the AWS CLI: run aws configure and follow the prompts. In this experiment, we run on AWS with the following YAML file, saved as cluster.yaml:

```yaml
cluster_name: default
max_workers: 249  # global cap on worker nodes (the head node is not counted)
upscaling_speed: 1.0
docker:
  image: rayproject/ray:latest-cpu
  container_name: "ray_container"
  pull_before_run: True
  run_options:
    - --ulimit nofile=65536:65536
idle_timeout_minutes: 5
provider:
  type: aws
  region: us-east-1
  cache_stopped_nodes: True  # stop, rather than terminate, nodes on scale-down
  security_group:
    GroupName: ray_client_security_group
    IpPermissions:
      - FromPort: 10001  # Ray Client port
        ToPort: 10001
        IpProtocol: TCP
        IpRanges:
          - CidrIp: 0.0.0.0/0  # open to any IP; restrict this outside experiments
auth:
  ssh_user: ubuntu
available_node_types:
  ray.head.default:
    node_config:
      InstanceType: m5.2xlarge
      BlockDeviceMappings:
        - DeviceName: /dev/sda1
          Ebs:
            VolumeSize: 100
  ray.worker.default:
    min_workers: 249
    max_workers: 1000  # effectively capped at 249 by the global max_workers
    node_config:
      InstanceType: m5.2xlarge
      InstanceMarketOptions:
        MarketType: spot  # workers run on spot instances
head_node_type: ray.head.default
rsync_exclude:
  - "**/.git"
  - "**/.git/**"
rsync_filter:
  - ".gitignore"
initialization_commands: []
setup_commands:
  - pip install statsforecast
head_setup_commands: []
worker_setup_commands: []
head_start_ray_commands:
  - ray stop
  - ray start --head --port=6379 --object-manager-port=8076 --autoscaling-config=~/ray_bootstrap_config.yaml
worker_start_ray_commands:
  - ray stop
  - ray start --address=$RAY_HEAD_IP:6379 --object-manager-port=8076
head_node: {}
worker_nodes: {}
```

As can be seen, the head node is an m5.2xlarge instance (8 vCPUs, 32 GB RAM). The worker nodes use the same instance type, and 249 of them are used for the experiments. Counting the head node, the deployed cluster therefore has 250 nodes with 2,000 vCPUs and 8,000 GB of RAM in total. Every node installs StatsForecast through setup_commands. To launch the cluster, simply use:

```bash
ray up cluster.yaml
```
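Once the cluster is up, the script in the section "Using StatsForecast on a Ray cluster" needs the cluster address. The sketch below builds a Ray Client address under two assumptions that are not stated in the original post: that you connect from your own machine through Ray Client, whose default server port on the head node is 10001 (the port the security group in cluster.yaml opens), and that StatsForecast forwards ray_address to ray.init(). The head node IP can be printed with ray get-head-ip cluster.yaml.

```python
"""Build the address passed to the benchmark script's --ray-address flag.

Assumptions (not from the original post): the connection goes through Ray Client
on its default port 10001, and StatsForecast forwards ray_address to ray.init().
"""
import ipaddress

RAY_CLIENT_PORT = 10001  # default Ray Client server port on the head node


def ray_client_address(head_ip, port=RAY_CLIENT_PORT):
    """Return a Ray Client URI such as ray://203.0.113.7:10001."""
    ipaddress.ip_address(head_ip)  # raises ValueError for a malformed IP
    return f"ray://{head_ip}:{port}"


if __name__ == "__main__":
    # Replace with the output of: ray get-head-ip cluster.yaml
    print(ray_client_address("203.0.113.7"))
```

With a hypothetical script name such as benchmark.py, the run would then look like python benchmark.py --ray-address ray://<head-ip>:10001. Running the script on the head node itself (for example with ray submit) and passing auto instead avoids exposing the port.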

Don’t forget to shut down the cluster once the job is done:

```bash
ray down cluster.yaml
```
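The 2,000 CPUs and 8,000 GB of RAM quoted for this cluster only add up when the head node is counted alongside the 249 workers. This small sketch makes that arithmetic explicit; it assumes every node is an m5.2xlarge with 8 vCPUs and 32 GB RAM, as stated in the text.

```python
"""Recompute the cluster capacity for cluster.yaml.

Node counts come from the YAML (1 head node + min_workers: 249); per-node figures
are the m5.2xlarge specs quoted in the text (8 vCPUs, 32 GB RAM).
"""


def cluster_capacity(n_workers, vcpus_per_node, ram_gb_per_node, include_head=True):
    """Return (total vCPUs, total RAM in GB) for a cluster of identical nodes."""
    nodes = n_workers + (1 if include_head else 0)
    return nodes * vcpus_per_node, nodes * ram_gb_per_node


if __name__ == "__main__":
    cpus, ram = cluster_capacity(n_workers=249, vcpus_per_node=8, ram_gb_per_node=32)
    print(f"{cpus} vCPUs, {ram} GB RAM")  # 2000 vCPUs, 8000 GB RAM
```

Without the head node, the workers alone provide 1,992 vCPUs and 7,968 GB.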

Experimental design

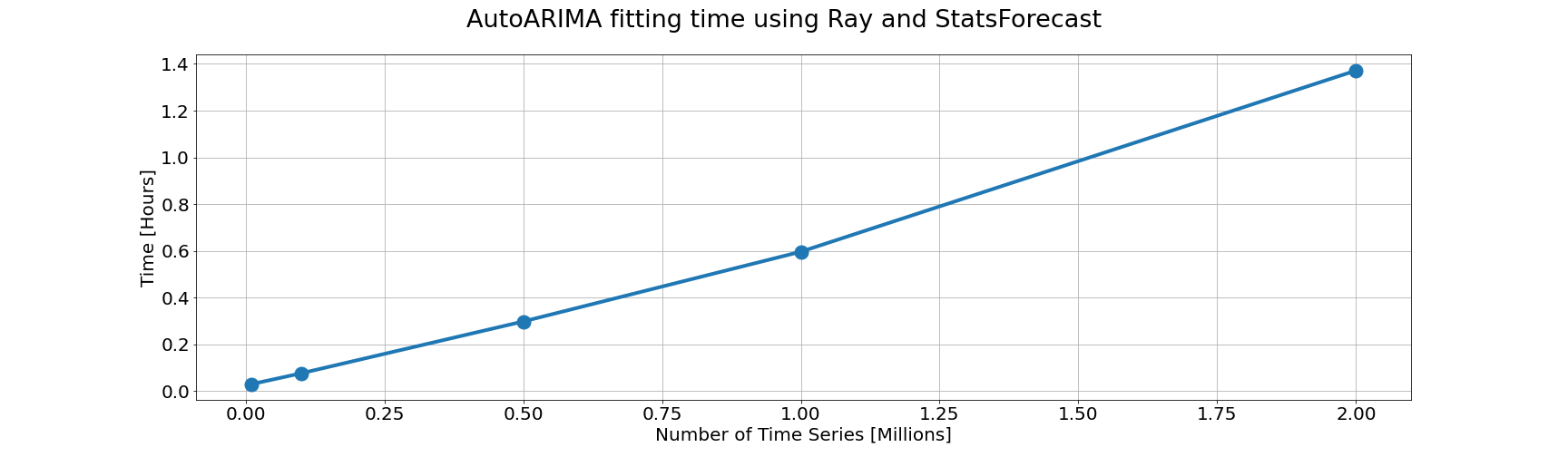

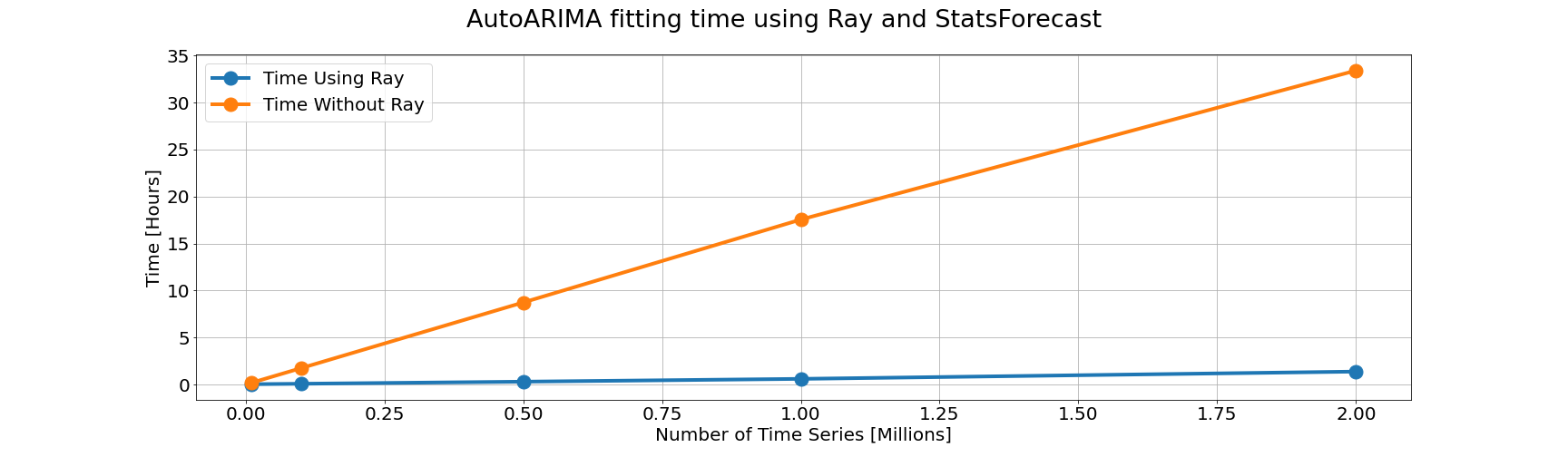

The experiment consists of fitting AutoARIMA on sets of time series of different sizes on the cluster. The chosen sizes are 10 thousand, 100 thousand, 500 thousand, 1 million, and 2 million series. The series were randomly generated with StatsForecast's generate_series function. Each series has between 50 and 500 observations, and all have daily frequency.
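To reproduce the shape of the benchmark data locally, without a cluster, the sketch below builds a small synthetic panel in long format (unique_id, ds, y). It is a stand-in, not StatsForecast's generate_series: the random-walk values and the start date are assumptions, and only the daily frequency and the 50 to 500 observation range come from the text.

```python
"""Generate a synthetic panel shaped like the experiment's data.

Sketch only: this is NOT StatsForecast's generate_series, whose data-generating
process may differ. It mirrors the shape described in the text: daily series
with 50 to 500 observations each, in long format (unique_id, ds, y).
"""
import random
from datetime import date, timedelta


def make_panel(n_series, min_len=50, max_len=500, start=date(2000, 1, 1), seed=1):
    """Return (unique_id, ds, y) rows; y is a random walk (an assumption)."""
    rng = random.Random(seed)  # seeded, so the panel is reproducible
    rows = []
    for uid in range(n_series):
        length = rng.randint(min_len, max_len)  # inclusive on both ends
        level = rng.uniform(0, 100)
        for t in range(length):
            level += rng.gauss(0, 1)
            rows.append((uid, start + timedelta(days=t), level))
    return rows


if __name__ == "__main__":
    panel = make_panel(n_series=3)
    print(len(panel), panel[0])
```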

Using StatsForecast on a Ray cluster

Using StatsForecast on a Ray cluster is as simple as passing the cluster address through the ray_address argument of the StatsForecast class, as shown below.

1import argparse

2import os

3from time import time

4

5import ray

6import pandas as pd

7from statsforecast.utils import generate_series

8from statsforecast.models import auto_arima

9from statsforecast.core import StatsForecast

10

11if __name__=="__main__":

12 parser = argparse.ArgumentParser(

13 description='Scale StatsForecast using ray'

14 )

15 parser.add_argument('--ray-address')

16 args = parser.parse_args()

17

18 for length in [10_000, 100_000, 500_000, 1_000_000, 2_000_000]:

19 print(f'length: {length}')

20 series = generate_series(n_series=length, seed=1)

21

22 model = StatsForecast(series,

23 models=[auto_arima], freq='D',

24 n_jobs=-1,

25 ray_address=args.ray_address)

26 init = time()

27 forecasts = model.forecast(7)

28 total_time = (time() - init) / 60

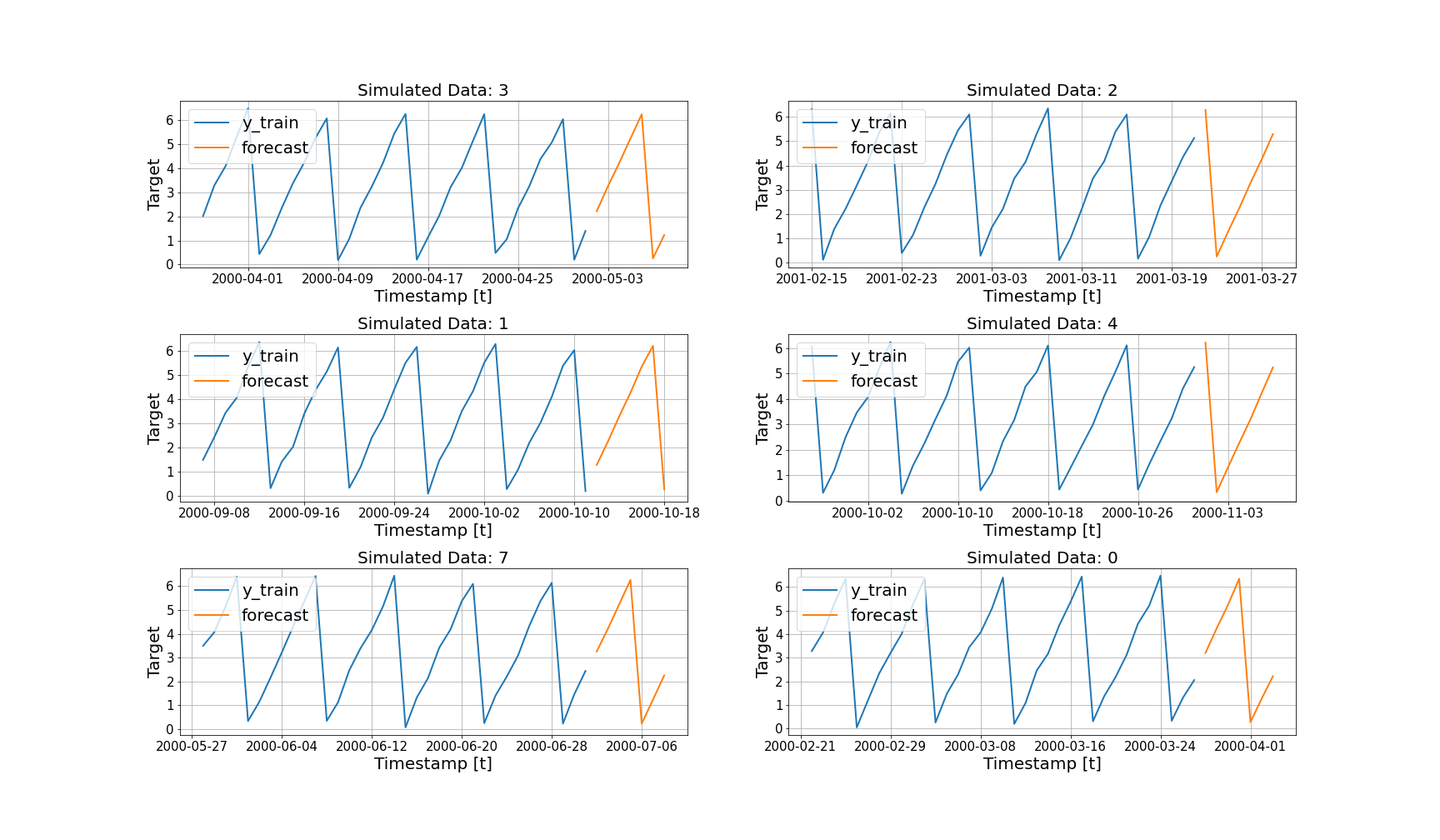

29 print(f'n_series: {length} total time: {total_time}')After training the model for all the series, forecasts like the ones shown below will be obtained.

The results of the experiment are shown below. As can be seen, with Ray and StatsForecast you can train AutoARIMA for 1 million time series in half an hour.

We also ran the experiment without Ray. As shown below, fitting is much faster with Ray than without it.
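To put the headline result in perspective, the sketch below turns "1 million series in half an hour on a 2,000-vCPU cluster" into a throughput and a per-series CPU budget. It takes the 30 minutes at face value and assumes every vCPU stays busy the whole time, so the CPU-seconds figure is an upper bound on what one AutoARIMA fit actually costs.

```python
"""Back-of-the-envelope throughput from the headline result.

Inputs are the figures quoted in the post (1 million series, about 30 minutes,
2,000 vCPUs). Assumes all vCPUs are busy for the whole run, so the per-series
CPU time is an upper bound.
"""


def throughput(n_series, wall_minutes, n_cpus):
    """Return (series fitted per second, CPU-seconds available per series)."""
    wall_seconds = wall_minutes * 60
    per_second = n_series / wall_seconds
    cpu_seconds_per_series = n_cpus * wall_seconds / n_series
    return per_second, cpu_seconds_per_series


if __name__ == "__main__":
    rate, budget = throughput(n_series=1_000_000, wall_minutes=30, n_cpus=2_000)
    print(f"{rate:.0f} series/s, {budget:.1f} CPU-seconds per series")
```

About 556 series per second overall means each AutoARIMA fit can take up to roughly 3.6 seconds of a single vCPU and the cluster still finishes in half an hour.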

StatsForecast also lets you train several models at once: just import them and add them to the models list, as follows:

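```python
from statsforecast.core import StatsForecast
from statsforecast.models import auto_arima, naive, seasonal_naive

model = StatsForecast(series,
                      # seasonal_naive needs a season length; 7 assumes weekly seasonality
                      models=[auto_arima, naive, (seasonal_naive, 7)],
                      freq='D',
                      n_jobs=-1,
                      ray_address='ray_address')  # replace with your cluster address
```

Conclusion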

In this blog post, we showed the power of StatsForecast in combination with Ray, forecasting millions of time series with AutoARIMA in less than one hour.

Please try out StatsForecast and its Ray integration and let us know about your experience. Feedback of any kind is always welcome. Here’s a link to our repo: https://github.com/Nixtla/statsforecast.