How ThirdAI uses Ray for Parallel Training of Billion-Parameter Neural Networks on Commodity CPUs

Introduction

This is a guest blog from the ThirdAI machine learning and AI engineering team. They share how to employ their BOLT framework along with Ray for distributed training of deep learning and foundation models using only CPUs. All of the code for their experiments, which were run on AWS, can be found here.

ThirdAI Corp is an early-stage startup dedicated to democratizing AI models through algorithmic and software innovations that enable training and deploying large neural networks on commodity CPU hardware.

The core component of ThirdAI’s efficient model training is our proprietary BOLT engine, a new deep learning framework built from scratch. In certain tasks, ThirdAI’s sparse deep learning models can even outperform the analogous dense architecture on GPUs in both training time and inference latency.

In this post, we introduce our new distributed data parallel engine powered by Ray to scale ThirdAI models to terabyte-scale datasets and billion-parameter models. We discuss how Ray enabled us to quickly build an industry-grade distributed training solution on top of BOLT with key features such as fault-tolerance, multiple modes of communication, and the seamless scalability provided by Ray. We also dive deep into our recent migration from Ray Core to Ray Trainer for distributed training and highlight the benefits of this upgrade. Finally, we present experimental results on a cluster of low-cost AWS CPU machines that demonstrate how Ray allowed us to achieve near-linear scaling for distributed training on a popular terabyte-scale benchmark dataset.

ThirdAI’s BOLT engine for standard CPU hardware

Today, all widely-used deep learning frameworks, such as TensorFlow and PyTorch, require access to a plethora of specialized hardware accelerators, such as GPUs or TPUs, to train large neural networks in a feasible amount of time. However, these specialized accelerators are extremely costly (roughly 3-10x more expensive than commodity CPUs in the cloud). Furthermore, these custom accelerators often consume a troubling amount of energy, raising concerns about the carbon footprint of deep learning. In addition, data scientists working in privacy-sensitive domains, such as healthcare, may have requirements to store data in on-premises environments as opposed to the cloud, which may hinder their ability to access such specialized computing devices for building models.

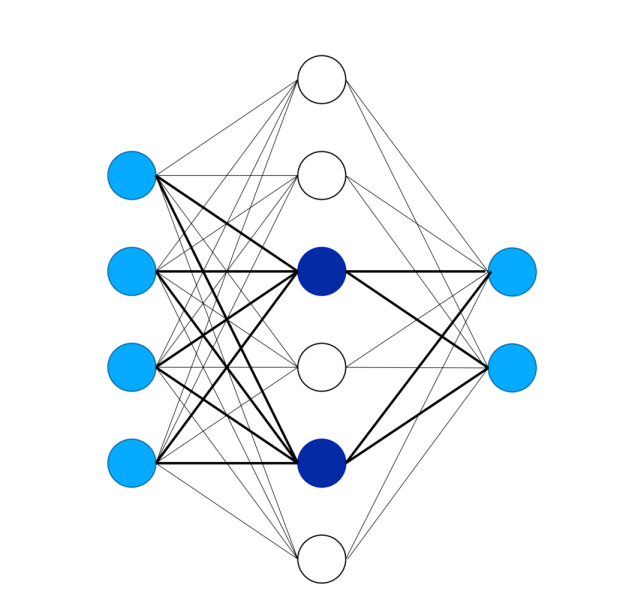

Motivated by these challenges for democratizing the power of deep learning in a sustainable manner, we at ThirdAI rejected the conventional wisdom that specialized hardware is essential for taking advantage of large-scale AI. To achieve this vision, we have built a new deep learning framework, called BOLT, for efficiently training large models on standard CPU hardware. The key innovation behind BOLT is making sparsity a first-class design principle. By selectively computing only a small fraction of the activations for a given input, we can dramatically reduce the overall number of operations required for training and inference and thereby achieve competitive efficiency on CPUs. Our custom algorithms for selecting a sparse subset of neurons are based on a decade of research in applying randomized algorithms for efficient machine learning.

For more details, please see our research paper describing our algorithmic innovations and summarizing the performance of BOLT on a variety of machine learning tasks.

Figure 1: Illustration of BOLT’s sparse neural network training process. Given an input (represented by the four light blue circles on the far left), BOLT only computes a sparse subset of activations most relevant to the input (represented by the two dark blue neurons in the center). BOLT then uses these activations to compute the final output prediction (light blue neurons on the right). By only computing a small subset of the activations, BOLT significantly reduces the amount of computation required to train a neural network.
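To make the idea in Figure 1 concrete, here is a minimal pure-Python sketch of a sparse forward pass through one layer. It is not BOLT's implementation. BOLT's neuron-selection algorithms are proprietary, so this sketch uses uniform random sampling as a stand-in for the selection step, and the names `select_active` and `sparse_forward` are hypothetical. The point is only that the cost scales with the number of active neurons rather than with the layer width.

```python
import random


def relu(z):
    return z if z > 0.0 else 0.0


def select_active(num_neurons, k, rng):
    """Stand-in selection step: sample k neuron indices uniformly at random.

    Assumption: a real system would pick the neurons most relevant to the input.
    """
    return sorted(rng.sample(range(num_neurons), k))


def sparse_forward(x, weights, biases, active):
    """Compute activations only for the neurons listed in `active`."""
    out = {}
    for j in active:
        z = sum(w * xi for w, xi in zip(weights[j], x)) + biases[j]
        out[j] = relu(z)
    return out


rng = random.Random(0)
input_dim, num_neurons, k = 4, 1000, 20
weights = [[rng.uniform(-1, 1) for _ in range(input_dim)] for _ in range(num_neurons)]
biases = [0.0] * num_neurons
x = [0.5, -1.0, 0.25, 2.0]

active = select_active(num_neurons, k, rng)
hidden = sparse_forward(x, weights, biases, active)

# Multiply-add counts for this layer: sparse versus fully dense.
sparse_ops = len(active) * input_dim
dense_ops = num_neurons * input_dim
print(f"computed {len(hidden)} of {num_neurons} activations")
print(f"multiply-adds: {sparse_ops} sparse vs {dense_ops} dense")
```

With 20 of 1,000 neurons active, the layer performs 2% of the multiply-adds of its dense counterpart. How much accuracy survives depends entirely on the quality of the selection step, which this sketch does not attempt to model.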

To take our training capabilities with BOLT to the next level, we knew that we would need to be able to handle terabyte-scale datasets and beyond, which necessitates distributed data parallel training. Ultimately, we decided to build our distributed training capabilities on Ray for ease of development, fault-tolerance, and strong performance.

Why Ray with BOLT?

In 2022, we initially adopted Ray Core for our distributed data-parallel training framework after evaluating multiple software libraries, including DeepSpeed, Horovod, and MPI. Ray Core's Actor model and its built-in features such as actor fault-tolerance, autoscaling, and integration with Gloo were indispensable to us for moving fast. Ray Core allowed us to build a full-fledged data-parallel training solution, which involves maintaining copies of a model on multiple machines, each training on separate shards of data and communicating gradients to update parameters. We were able to develop this solution in only a few weeks, and were delighted by the system’s performance.
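The data-parallel pattern described above (model replicas, separate data shards, gradient exchange) can be sketched in a few lines of plain Python. This is a single-process simulation under simplifying assumptions, not our Ray-based implementation: the "model" is a single weight fitting y = 3x, and `all_reduce_mean` is a hypothetical stand-in for a real collective such as a Gloo all-reduce.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for y ~ w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for the collective that averages gradients across workers."""
    return sum(values) / len(values)


# Synthetic data for y = 3x, split into equal shards, one per worker.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
num_workers = 4
shards = [data[i::num_workers] for i in range(num_workers)]

# Every worker holds its own replica, initialized to the same value.
replicas = [0.0] * num_workers
learning_rate = 0.01

for step in range(200):
    # 1. Each worker computes a gradient on its own shard.
    grads = [local_gradient(w, shard) for w, shard in zip(replicas, shards)]
    # 2. Workers exchange gradients and agree on the average.
    avg_grad = all_reduce_mean(grads)
    # 3. Each worker applies the same update, so replicas stay in sync.
    replicas = [w - learning_rate * avg_grad for w in replicas]

print(replicas)
```

Because every replica applies the identical averaged gradient, the replicas never diverge, and with equal-sized shards the result matches training on the full dataset on a single machine.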

However, as we aimed to optimize our processes, transitioning to Ray Trainer became the clear choice for a much simpler developer experience. In 2023, we thus decided to re-architect our system to take advantage of Ray Trainer.

Migrating from Ray Core to Ray Trainer

Ray Trainer, building upon the foundational strengths of Ray Core, is specifically tailored for data-parallel distributed machine learning training, providing a streamlined training pipeline adept at handling task scheduling, resource management, and data movement. These features considerably simplify the overall developer experience. Moreover, Ray Trainer offers enhanced fault tolerance and refined automatic scaling, ensuring our training operations are both efficient and resilient against potential interruptions. Finally, the integration capabilities that were pivotal in Ray Core have been broadened in Ray Trainer, allowing for greater compatibility with an expansive range of machine learning libraries and tools. These compelling features ultimately convinced us to take the plunge and reengineer our system with Ray Trainer.

After transitioning to using Ray Trainer, we introduced BoltTrainer, which functions similarly to Ray’s TorchTrainer and TensorflowTrainer, and is implemented on top of Ray's DataParallelTrainer. Using BoltTrainer is also a frictionless experience; developers simply provide Ray AI Runtime (AIR)* specifics, like scaling and runtime configs, with the training loop, and Ray executes the data-parallel training.

Communication within our framework now uses the Torch communication backend, which improves efficiency and reliability. Users can also pass Ray's TorchConfig to choose between communication backends such as MPI or Gloo. This migration has not only simplified our codebase but also made our API easier to pick up, especially for users familiar with Ray’s TorchTrainer. Ray Trainer’s comprehensive documentation further improves the user experience, and the migration also allowed us to unify our APIs.
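To give a feel for what "providing scaling and runtime configs with the training loop" looks like, here is a hedged sketch that uses Ray's generic `DataParallelTrainer` directly. It does not show BoltTrainer's actual interface, which is not reproduced in this post. The sketch assumes Ray 2.7 or later with `ray[train]` and `torch` installed. The helper `build_trainer`, the `SCALING` dictionary, the run name, and the placeholder training loop are all illustrative. Ray is imported lazily inside the functions so that the module loads even where Ray is not installed.

```python
# Worker counts match the cluster described in this post; the rest is illustrative.
SCALING = {"num_workers": 48, "cpus_per_worker": 4}


def train_loop_per_worker(config):
    """Runs once on every worker. A real loop would train a model replica here."""
    from ray import train

    rank = train.get_context().get_world_rank()
    for epoch in range(config["epochs"]):
        # Placeholder metrics; a real loop would report the training loss.
        train.report({"epoch": epoch, "rank": rank})


def build_trainer(backend="gloo"):
    """Assemble a CPU-only data-parallel trainer (assumes Ray >= 2.7 and torch)."""
    from ray.train import RunConfig, ScalingConfig
    from ray.train.data_parallel_trainer import DataParallelTrainer
    from ray.train.torch import TorchConfig

    return DataParallelTrainer(
        train_loop_per_worker=train_loop_per_worker,
        train_loop_config={"epochs": 1},
        # TorchConfig selects the torch.distributed backend used for communication.
        backend_config=TorchConfig(backend=backend),
        scaling_config=ScalingConfig(
            num_workers=SCALING["num_workers"],
            use_gpu=False,
            resources_per_worker={"CPU": SCALING["cpus_per_worker"]},
        ),
        run_config=RunConfig(name="data-parallel-sketch"),
    )
```

On a running Ray cluster with enough CPUs, `build_trainer().fit()` would launch the loop on all 48 workers and return a result object with the last reported metrics.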

From a performance perspective, moving from Ray Core to Ray Trainer has also reduced our memory usage by approximately a factor of 2. Previously, we had to make extensive use of the Ray Object Store, which is no longer necessary with Ray Trainer. This smaller memory footprint opens the door to using even cheaper CPU instances for training.

After moving from Ray Core to Ray Trainer, distributed training has become a painless experience, allowing us to focus our attention on our differentiating technology in providing efficient algorithms for building large-scale neural networks.

Experimental results

To empirically test the performance of our distributed training framework with Ray, we evaluated our models on the popular Criteo 1TB clickthrough prediction benchmark. We also provide a reference comparison to a study by Google benchmarking TensorFlow distributed training on CPUs with the same Criteo dataset. It is worth noting that, on this benchmark, a test ROC-AUC improvement of 0.02% is considered significant.
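For readers less familiar with the metric, ROC-AUC can be read as the probability that a randomly chosen positive example (a click) receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition on a tiny made-up example. The function name `roc_auc` and the sample labels and scores are illustrative only, and the quadratic loop is meant for clarity, not for a terabyte-scale dataset.

```python
def roc_auc(labels, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Made-up click labels (1 = click) and model scores, for illustration only.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.9, 0.3, 0.6, 0.7, 0.2, 0.8]
auc = roc_auc(labels, scores)
print(f"ROC-AUC = {auc:.4f}")
```

In this example, 8 of the 9 positive-negative pairs are ranked correctly; the only miss is the positive scored 0.6 against the negative scored 0.7.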

Evaluation of ThirdAI’s BOLT on Criteo

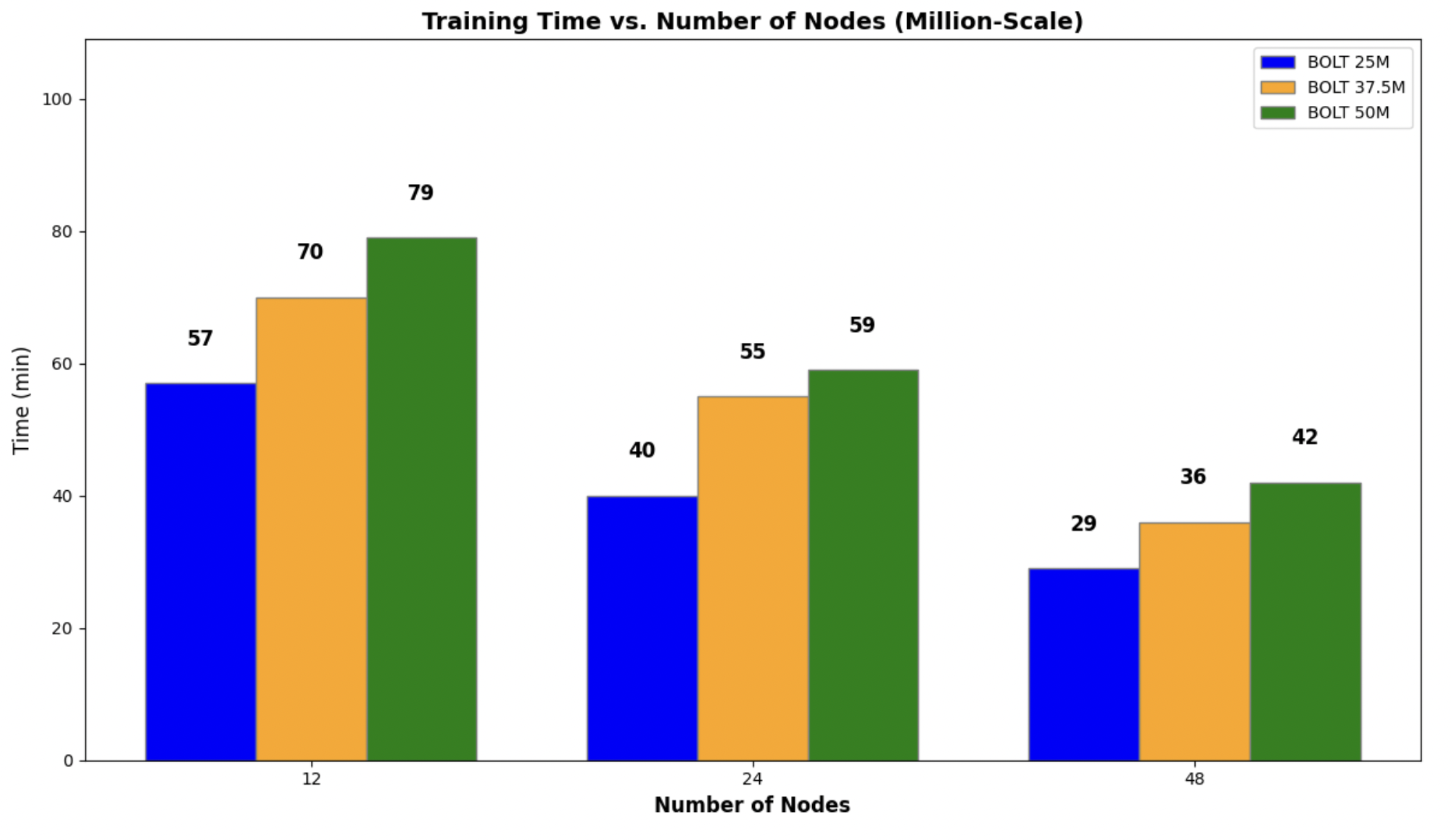

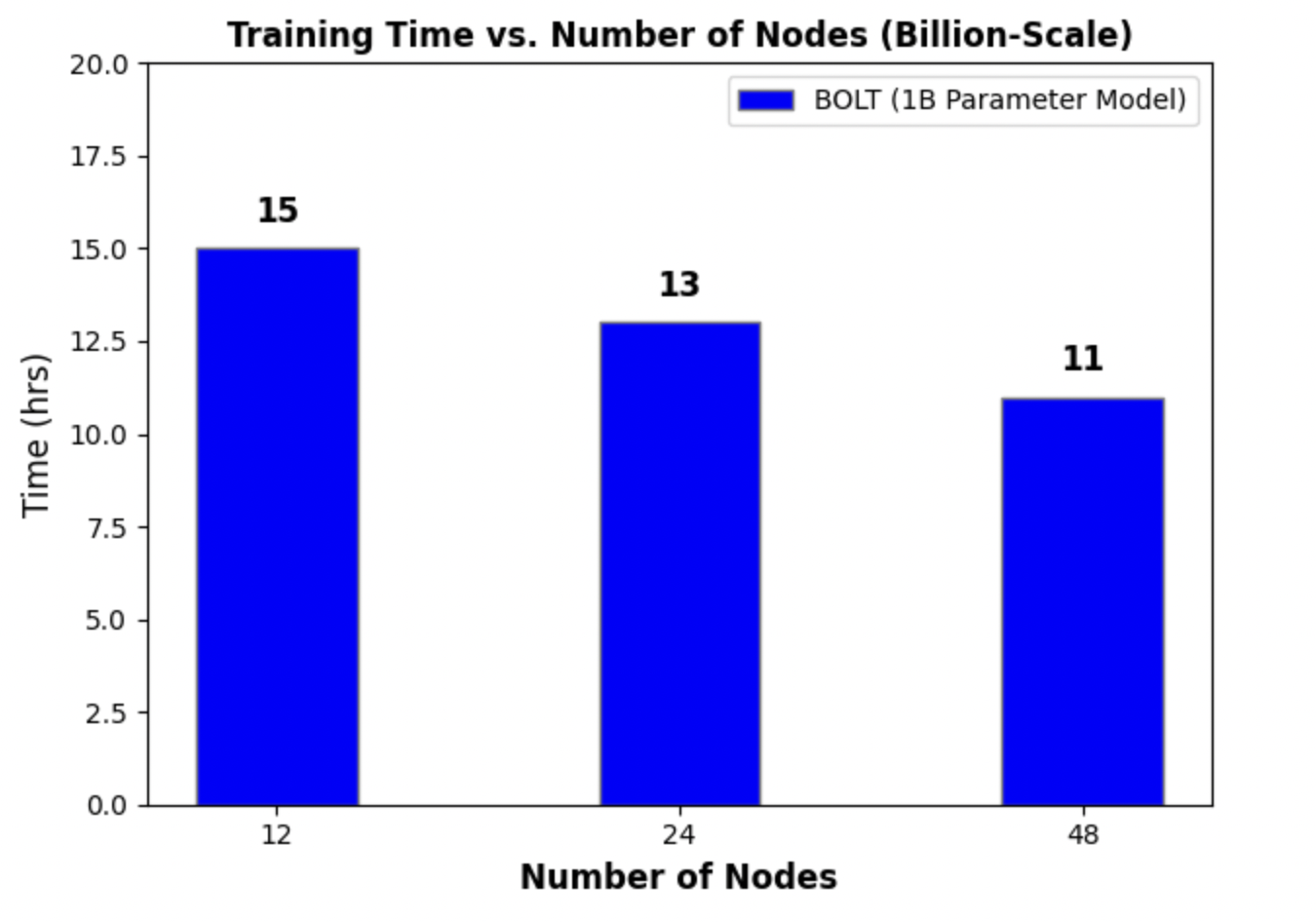

We evaluated Distributed BOLT on AWS EC2 instances across varying model sizes. We consider both relatively small million-parameter models that train in a few minutes as well as a large-scale billion-parameter model to demonstrate the scalability of BOLT.

For our training jobs on AWS, we used 1 `hpc7a.12xlarge` and 4 `hpc7a.24xlarge` instances, for a total of 216 vCPUs. Each of the 4 `hpc7a.24xlarge` worker machines runs 12 workers, and each worker runs on 4 vCPUs. You can peruse the cluster config here and run the job for yourself.

Training Resources:

Trainer Resources: 24 vCPUs

Worker Resources: 48 workers, 4 vCPUs each
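The resource figures above fit together as follows. This is just the arithmetic implied by the numbers in this section, written out as a quick sanity check; the variable names are illustrative.

```python
# Figures taken from the cluster description in this section.
trainer_vcpus = 24          # the single hpc7a.12xlarge hosting the trainer
worker_machines = 4         # the hpc7a.24xlarge instances
workers_per_machine = 12
vcpus_per_worker = 4

vcpus_per_worker_machine = workers_per_machine * vcpus_per_worker
total_workers = worker_machines * workers_per_machine
total_vcpus = trainer_vcpus + worker_machines * vcpus_per_worker_machine

print(f"workers: {total_workers}")
print(f"vCPUs per worker machine: {vcpus_per_worker_machine}")
print(f"total vCPUs: {total_vcpus}")
```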

| Model Size | Training Time | ROC-AUC |
|---|---|---|
| 25M | 29 min | 0.7920 |
| 37.5M | 36 min | 0.7942 |
| 50M | 42 min | 0.7951 |
| 1B | 11 hrs | 0.8001 |

This table presents the ROC-AUC (area under the receiver operating characteristic curve) values for several ThirdAI UDT models, along with their corresponding training times on AWS EC2 instances.

Evaluation of TensorFlow on Criteo from Google [1]

Machine Configuration:

Manager: 1 machine, n1-highmem-8

Workers: 60 machines, n1-highcpu-16

Parameter Server: 19 machines, n1-highmem-8

| Modeling Technique | Training Time | AUC |
|---|---|---|
| Linear Model | 70 min | 0.7721 |
| Linear Model with Crosses | 142 min | 0.7841 |
| Deep Neural Network (1 epoch) | 26 hours | 0.7963 |
| Deep Neural Network (3 epochs) | 78 hours | 0.8002 |

This table presents the ROC-AUC (area under the receiver operating characteristic curve) values for TensorFlow models, along with their corresponding training times on GCP.
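As a rough way to read the two tables side by side, the sketch below compares the two runs that reach a similar AUC: the 1B-parameter BOLT model and the 3-epoch TensorFlow deep neural network. The machine counts and training times come from the tables above. The per-machine vCPU counts for GCP are read off the machine-type names (an `n1-highcpu-16` has 16 vCPUs, an `n1-highmem-8` has 8). This is not an apples-to-apples benchmark: the clouds, CPU generations, model architectures, and software stacks all differ, so treat the ratios as orientation only.

```python
# Google's TensorFlow setup [1]: manager + workers + parameter servers.
gcp_vcpus = 1 * 8 + 60 * 16 + 19 * 8
gcp_hours = 78.0   # deep neural network, 3 epochs, AUC 0.8002

# ThirdAI's BOLT setup, as reported in this post.
aws_vcpus = 216
aws_hours = 11.0   # 1B-parameter model, ROC-AUC 0.8001

wall_clock_ratio = gcp_hours / aws_hours
vcpu_ratio = gcp_vcpus / aws_vcpus

print(f"GCP vCPUs: {gcp_vcpus}, AWS vCPUs: {aws_vcpus}")
print(f"wall-clock ratio: {wall_clock_ratio:.1f}x")
print(f"vCPU-count ratio: {vcpu_ratio:.1f}x")
```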

Figure 2. AWS training time (in minutes) of the three million-parameter-scale BOLT models of varying size across clusters of 12, 24, and 48 workers. We observe a near-linear reduction in training time as we scale the number of workers, thanks to Ray’s ability to parallelize computations with minimal overhead.

Figure 3. AWS training time (in hours) for a 1B parameter BOLT model across clusters of 12, 24, and 48 workers.
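"Near-linear" scaling can be made precise with a scaling-efficiency ratio: the speedup actually observed, divided by the ideal speedup from adding workers. A value of 1.0 means perfectly linear scaling. The sketch below defines that ratio. The timings in it are hypothetical placeholders chosen only to exercise the function; they are not measurements from Figures 2 or 3.

```python
def scaling_efficiency(base_workers, base_time, workers, time):
    """Fraction of the ideal speedup achieved when growing from base_workers to workers."""
    ideal_speedup = workers / base_workers
    actual_speedup = base_time / time
    return actual_speedup / ideal_speedup


# Hypothetical timings in minutes, NOT measurements from this post.
hypothetical_timings = {12: 100.0, 24: 52.0, 48: 27.0}

base_workers = 12
base_time = hypothetical_timings[base_workers]
for workers, minutes in hypothetical_timings.items():
    eff = scaling_efficiency(base_workers, base_time, workers, minutes)
    print(f"{workers} workers: efficiency {eff:.2f}")
```

To apply this to real runs, replace the placeholder dictionary with measured training times for each cluster size.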

Try Out ThirdAI’s Distributed Training on CPUs

To help our readers understand and reproduce the results discussed in this blog post, we have made the code available on our public GitHub repository. Please refer to the README file for setup instructions and further details. For more information about ThirdAI, including how to get started with our software, please visit our website.

Also, we are speaking at the Ray Summit 2023. If you want to meet us, come to our talk, “Scaling up Terascale Deep Learning on Commodity CPUs with ThirdAI and Ray,” to discover more about BOLT and Ray.

References

1. cloud.google.com. (2017). Using Google Cloud Machine Learning to predict clicks at scale [online]. Available: https://cloud.google.com/blog/products/gcp/using-google-cloud-machine-learning-to-predict-clicks-at-scale. [Accessed 23 Jan. 2023].

*Update 9/15/2023: We are sunsetting the "Ray AIR" concept and namespace starting with Ray 2.7. The changes follow the proposal outlined in this REP.