Low-latency Generative AI Model Serving with Ray, NVIDIA Triton Inference Server, and NVIDIA TensorRT-LLM

In a previous blog post, we covered how the flexibility, scalability, and simplicity of Ray Serve can help you improve hardware utilization and deploy AI applications to production faster. As AI models grow larger and more complex, optimizing model inference has become crucial for reducing GPU costs. Now, Anyscale is teaming up with NVIDIA to combine the developer productivity of Ray Serve and RayLLM with the cutting-edge optimizations of NVIDIA Triton Inference Server software and the NVIDIA TensorRT-LLM library.

Model Serving with Ray Serve and RayLLM

Ray Serve is a scalable model-serving library, built on top of Ray, for building end-to-end AI applications. It provides a simple Python API for serving everything from deep neural networks built with frameworks like PyTorch to arbitrary business logic. Ray Serve is particularly well suited for model composition and many-model serving: you can build a complex inference service from multiple ML models, each of which auto-scales independently for optimal hardware utilization. In 2023, Anyscale made significant investments in both user experience and price-performance to support Ray Serve users like LinkedIn, Samsara, and DoorDash. Developers took notice: adoption grew 10x last year as both startups and enterprises looked for faster and cheaper ways to serve AI models.
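To make "auto-scale independently" concrete, here is a minimal, framework-free sketch of the decision each model in a composed pipeline could make on its own. It is similar in spirit to Ray Serve's per-replica target for ongoing requests, but the `StageConfig` class, the `desired_replicas` and `plan_pipeline` functions, and the plain ceiling rule are hypothetical simplifications, not Ray Serve's API; the real autoscaler also smooths its metrics and waits before scaling up or down.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class StageConfig:
    """Hypothetical per-stage settings for one model in a composed pipeline."""
    name: str
    min_replicas: int
    max_replicas: int
    target_ongoing_requests: float  # load a single replica is sized to carry


def desired_replicas(cfg: StageConfig, ongoing_requests: int) -> int:
    """Replicas needed so each carries roughly the target load, clamped to bounds."""
    needed = math.ceil(ongoing_requests / cfg.target_ongoing_requests)
    return max(cfg.min_replicas, min(cfg.max_replicas, needed))


def plan_pipeline(stages: List[StageConfig], load: Dict[str, int]) -> Dict[str, int]:
    """Each stage scales on its own observed load, independently of the others."""
    return {s.name: desired_replicas(s, load.get(s.name, 0)) for s in stages}


if __name__ == "__main__":
    stages = [
        StageConfig("tokenizer", min_replicas=1, max_replicas=4, target_ongoing_requests=10),
        StageConfig("llm", min_replicas=1, max_replicas=8, target_ongoing_requests=2),
    ]
    print(plan_pipeline(stages, {"tokenizer": 12, "llm": 12}))  # {'tokenizer': 2, 'llm': 6}
```

With the same traffic reaching both stages, the lightweight tokenizer stays at two replicas while the heavier LLM stage grows to six, which is the hardware-utilization benefit of scaling each model separately instead of replicating the whole pipeline.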

RayLLM is an LLM-serving solution, built on Ray Serve, that makes it easy to deploy and manage a variety of open-source LLMs. It ships with an extensive suite of open-source LLMs whose optimized configurations work out of the box, and it also lets you bring your own models. Its fully OpenAI-compatible API makes it easy to migrate existing applications and to integrate with LLM tooling like LangChain and LlamaIndex. Because it is built on Ray Serve, it comes with auto-scaling, multi-GPU support, and multi-node inference.
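OpenAI compatibility means a client sends the same request shape it would send to OpenAI, just to a different base URL. The sketch below builds (but does not send) such a Chat Completions request using only the standard library. The base URL, port, and model id are hypothetical placeholders for your own deployment; the `/v1/chat/completions` path and the JSON body follow OpenAI's Chat Completions format.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # only attach credentials if the endpoint expects them
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder endpoint and model id; substitute your own deployment's values.
    req = build_chat_request("http://localhost:8000", "meta-llama/Llama-2-7b-chat-hf",
                             "What is Ray Serve?")
    print(req.get_method(), req.full_url)
    # To actually send it against a running server:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the payload is identical to what OpenAI's own endpoint accepts, tools like LangChain and LlamaIndex can typically be redirected by changing only the base URL and model name.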

AI Deployment with NVIDIA Triton Inference Server and TensorRT-LLM

Triton Inference Server is an open-source software platform developed by NVIDIA for deploying and serving AI models in production environments. It helps enterprises reduce the complexity of their model-serving infrastructure, shorten the time needed to deploy new AI models to production, and increase AI inference and prediction capacity. Triton Inference Server supports a variety of deep learning frameworks, such as TensorFlow, PyTorch, and ONNX. It provides optimizations that accelerate inference on both GPUs and CPUs, and enterprises such as Amazon, Microsoft, Oracle, Siemens, and American Express use it to deploy efficient deep learning models at scale.
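Triton serves models from a model repository: one directory per model, a `config.pbtxt` describing it, and numbered version subdirectories holding the model file. The framework is selected per model through the `platform` (or `backend`) field, for example `onnxruntime_onnx`, `pytorch_libtorch`, or `tensorflow_savedmodel`. The sketch below writes a minimal repository entry for an ONNX model; the model name `classifier`, the tensor names `INPUT0`/`OUTPUT0`, and their shapes are hypothetical placeholders that must be changed to match your actual exported model.

```python
import tempfile
from pathlib import Path


def write_onnx_model_config(repo: Path, name: str, input_dim: int = 16,
                            output_dim: int = 4, max_batch_size: int = 8) -> Path:
    """Create <repo>/<name>/config.pbtxt plus version directory "1".

    Returns the path where the exported ONNX file should be copied.
    Tensor names and shapes are hypothetical placeholders.
    """
    version_dir = repo / name / "1"
    version_dir.mkdir(parents=True, exist_ok=True)
    config_lines = [
        f'name: "{name}"',
        'platform: "onnxruntime_onnx"',  # selects the ONNX Runtime backend
        f"max_batch_size: {max_batch_size}",
        # With max_batch_size > 0, dims omit the leading batch dimension.
        "input [",
        "  {",
        '    name: "INPUT0"',
        "    data_type: TYPE_FP32",
        f"    dims: [ {input_dim} ]",
        "  }",
        "]",
        "output [",
        "  {",
        '    name: "OUTPUT0"',
        "    data_type: TYPE_FP32",
        f"    dims: [ {output_dim} ]",
        "  }",
        "]",
        # Let Triton group individual requests into server-side batches.
        "dynamic_batching {",
        "  max_queue_delay_microseconds: 100",
        "}",
    ]
    (repo / name / "config.pbtxt").write_text("\n".join(config_lines) + "\n")
    return version_dir / "model.onnx"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = write_onnx_model_config(Path(tmp), "classifier")
        print(target.relative_to(tmp))
        print((Path(tmp) / "classifier" / "config.pbtxt").read_text())
```

You would then copy the exported ONNX file to the returned path and point Triton at the repository directory, for example with `tritonserver --model-repository=<path>`.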

TensorRT-LLM is an open-source library for defining, optimizing, and executing LLMs for inference. It retains the core functionality of FasterTransformer and pairs it with TensorRT's deep learning compiler, exposing an open-source Python API that makes it quick to support new models and customizations. Its features, including quantization, in-flight batching, and attention optimizations, sit behind this simplified Python API and are designed to improve LLM latency and throughput.
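In-flight batching (also called continuous batching) is easiest to see in a toy simulation. The sketch below counts decoding steps under a simplifying, hypothetical cost model in which every active sequence emits exactly one token per step; it ignores prefill, memory limits, and kernel costs, and it is an illustration of the technique, not TensorRT-LLM's scheduler.

```python
from collections import deque
from typing import List, Sequence


def _validate(lengths: Sequence[int], max_batch: int) -> None:
    if max_batch < 1 or any(n < 1 for n in lengths):
        raise ValueError("max_batch and every output length must be >= 1")


def static_batching_steps(lengths: Sequence[int], max_batch: int) -> int:
    """Fixed batches: a batch holds its slots until its longest sequence finishes."""
    _validate(lengths, max_batch)
    return sum(max(lengths[i:i + max_batch]) for i in range(0, len(lengths), max_batch))


def inflight_batching_steps(lengths: Sequence[int], max_batch: int) -> int:
    """In-flight batching: a finished sequence's slot is refilled on the next step."""
    _validate(lengths, max_batch)
    waiting = deque(lengths)
    active: List[int] = []  # tokens still to generate for each running request
    steps = 0
    while waiting or active:
        while waiting and len(active) < max_batch:  # fill any free slots
            active.append(waiting.popleft())
        active = [n - 1 for n in active]  # one decoding step for the whole batch
        steps += 1
        active = [n for n in active if n > 0]  # retire finished requests immediately
    return steps


if __name__ == "__main__":
    output_lengths = [8, 2, 2, 2]  # tokens each request will generate
    print(static_batching_steps(output_lengths, max_batch=2))    # 10
    print(inflight_batching_steps(output_lengths, max_batch=2))  # 8
```

The gain comes from short requests no longer waiting for the longest request in their batch; when all requests have the same output length, the two strategies take the same number of steps.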

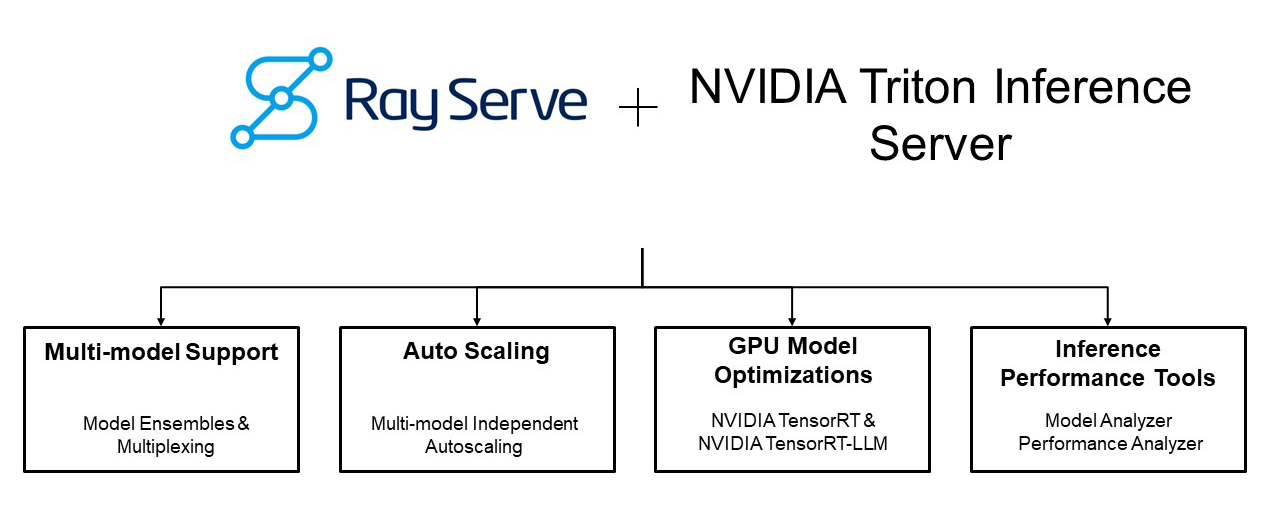

Maximizing Potential: Leveraging Ray Serve and RayLLM with Triton Inference Server and TensorRT-LLM

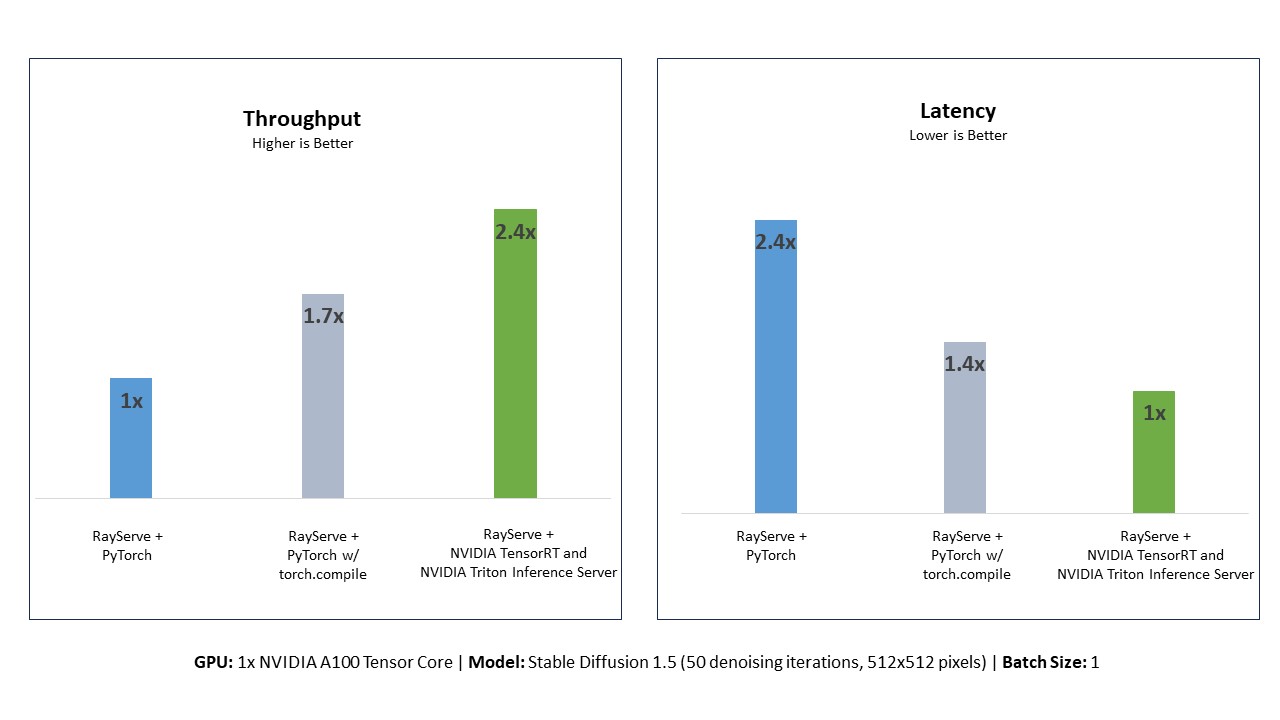

With the 23.12 release, Triton Inference Server includes a Python API that lets developers embed Triton Inference Server optimizations directly inside Python applications running on Ray Serve. Ray Serve users can use it to improve the performance of their ML models, as our benchmark shows. It also gives them access to advanced inference-serving capabilities of NVIDIA Triton Inference Server, such as Model Analyzer, which recommends optimal model configurations based on a specified application service level agreement (SLA). Ray Serve users also benefit from the high reliability of Triton Inference Server, which has achieved 99.999% uptime at customers like Wealthsimple. Conversely, Triton Inference Server users can now use Ray Serve to build complex applications that combine business logic with many auto-scaling models, and simplify their AI development with Python.
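Model Analyzer works by profiling many candidate configurations (for example, different batch sizes and instance counts) and reporting which ones satisfy your constraints. The final selection step, reduced to its essentials, is sketched below: discard configurations that miss a latency budget, then take the one with the highest throughput. The `Measurement` fields and the `pick_config` function are hypothetical names for illustration, not Model Analyzer's interface, and the real tool supports multiple objectives and constraints.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Measurement:
    """One profiled model configuration (hypothetical fields)."""
    config_name: str
    p99_latency_ms: float
    throughput_per_s: float


def pick_config(measurements: Sequence[Measurement],
                max_p99_latency_ms: float) -> Optional[Measurement]:
    """Return the highest-throughput configuration that meets the latency SLA, if any."""
    eligible = [m for m in measurements if m.p99_latency_ms <= max_p99_latency_ms]
    if not eligible:
        return None  # no configuration satisfies the SLA
    return max(eligible, key=lambda m: m.throughput_per_s)
```

Feed it measurements from your own profiling runs: tightening the latency budget typically pushes the choice toward smaller batches, while loosening it lets higher-throughput configurations win.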

With version 0.5, RayLLM supports both TensorRT-LLM and vLLM, letting developers choose the best backend for their LLM deployment.

See our guide here to try out Triton Inference Server on Ray Serve, and get started with RayLLM here to deploy TensorRT-LLM-optimized models.

Authors:

Neelay Shah, NVIDIA

Akshay Malik, Anyscale