Ray & MLflow: Taking Distributed Machine Learning Applications to Production

In this blog post, we're announcing two new integrations with Ray and MLflow: Ray Tune+MLflow Tracking and Ray Serve+MLflow Models, which together make it much easier to build ML models and take them to production.

These integrations are available in the latest Ray nightly wheels. You can follow the installation instructions to pip install the nightly version of Ray and take a look at the documentation to get started. They will also be in the next Ray release, version 1.2.

Our goal is to leverage the strengths of the two projects: Ray's distributed libraries for scaling training and serving and MLflow's end-to-end model lifecycle management.

What problem are these tools solving?

Let's first take a brief look at what these libraries can do before diving into the new integrations.

Ray Tune scales Hyperparameter Tuning

With ML models increasing in size and training times, running large-scale ML experiments on a single machine is no longer feasible. It’s now a necessity to distribute your experiment across many machines.

Ray Tune is a library for executing hyperparameter tuning experiments at any scale and can save you tens of hours in training time.

With Ray Tune you can:

Launch a multi-node hyperparameter sweep in <10 lines of code

Use any ML framework such as PyTorch, TensorFlow, MXNet, or Keras

Leverage state-of-the-art hyperparameter optimization algorithms such as Population Based Training, HyperBand, or Asynchronous Successive Halving (ASHA)
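To give a feel for what the successive-halving family of schedulers does, here is a minimal pure-Python sketch of the synchronous variant. It is not Ray Tune's implementation, and it is not ASHA itself, which promotes trials asynchronously instead of waiting for a whole rung to finish. The function names, the toy loss, and the candidate learning rates are all made up for illustration.

```python
import math
import random


def successive_halving(configs, evaluate, min_budget=1, reduction_factor=2):
    """Synchronous successive halving.

    Evaluate every surviving config at the current budget, keep the best
    1/reduction_factor of them, then multiply the budget and repeat.
    """
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Lower score is better (for example, a validation loss).
        scored = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(scored) // reduction_factor)
        survivors = scored[:keep]
        budget *= reduction_factor
    return survivors[0]


def toy_loss(config, budget):
    # Hypothetical objective: lowest near lr=0.1, and shrinking as the
    # training budget grows.
    return (math.log10(config["lr"]) + 1) ** 2 + 1.0 / budget


rng = random.Random(0)
candidates = [{"lr": 10 ** rng.uniform(-4, 0)} for _ in range(8)]
best = successive_halving(candidates, toy_loss)
print(best)
```

With eight candidates and a reduction factor of 2, the sketch runs three rungs (8, 4, then 2 configs), so most of the budget goes to the configurations that looked promising early on.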

Ray Serve scales Model Serving

After developing your model, you will often need to deploy your model to actually serve prediction requests. However, ML models are often compute intensive and require scaling out in real deployments.

Ray Serve is an easy-to-use scalable model serving library that:

Simplifies serving models on GPUs across many machines, so you can meet production uptime and performance requirements.

Works with any machine learning framework, such as PyTorch, TensorFlow, MXNet, or Keras.

Provides a programmatic configuration interface (no more YAML or JSON!).

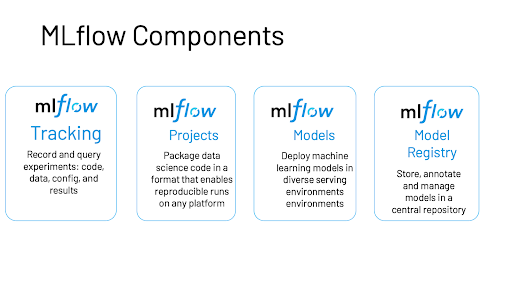

MLflow tames end-to-end Model Lifecycle Management

Ray Tune and Ray Serve make it easy to distribute your ML development and deployment, but how do you manage this process? This is where MLflow comes in.

During experiment execution, you can leverage MLflow’s Tracking API to keep track of the hyperparameters, results, and model checkpoints of all your experiments, visualize them, and easily share them with other team members. And when it comes to deployment, MLflow Models provides standardized packaging to support deployment in a variety of different environments.
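As a rough illustration of what recording one experiment with the Tracking API involves, the sketch below logs a set of hyperparameters and final metrics by hand. The helper names, the example config, and the "/" separator for nested keys are assumptions made for this example; only the mlflow calls themselves (set_experiment, start_run, log_params, log_metrics) come from MLflow.

```python
def flatten_config(config, prefix="", sep="/"):
    """Flatten a nested hyperparameter dict into single-level keys,
    since MLflow params are flat key/value pairs."""
    flat = {}
    for key, value in config.items():
        name = f"{prefix}{sep}{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten_config(value, prefix=name, sep=sep))
        else:
            flat[name] = value
    return flat


def log_experiment(config, metrics, experiment_name="experiment1"):
    """Record one run's hyperparameters and final metrics with MLflow."""
    # Imported here so flatten_config can be used without MLflow installed.
    import mlflow

    mlflow.set_experiment(experiment_name)
    with mlflow.start_run():
        mlflow.log_params(flatten_config(config))
        mlflow.log_metrics(metrics)


# Hypothetical nested config, as a tuning run might produce.
example_config = {"lr": 0.01, "model": {"layers": 2, "dropout": 0.1}}
print(flatten_config(example_config))
```

Calling log_experiment(example_config, {"val_loss": 0.42}) would then create one run under the named experiment in whichever tracking location MLflow is configured to use.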

Key Takeaways

Used together, Ray Tune, Ray Serve, and MLflow take the burden of scaling and managing off ML engineers, allowing them to focus on the main task: building ML models and algorithms.

Let’s see how we can leverage these libraries together.

Ray Tune + MLflow Tracking

Ray Tune integrates with the MLflow Tracking API to easily record information from your distributed tuning run to an MLflow server. There are two APIs for this integration: an MLFlowLoggerCallback and an mlflow_mixin. With the MLFlowLoggerCallback, Ray Tune will automatically log the hyperparameter configuration, results, and model checkpoints from each run in your experiment to MLflow.

    from ray import tune
    from ray.tune.integration.mlflow import MLFlowLoggerCallback

    # train_fn is your training function, defined elsewhere
    tune.run(
        train_fn,
        config={
            # define search space here
            "parameter_1": tune.choice([1, 2, 3]),
            "parameter_2": tune.choice([4, 5, 6]),
        },
        callbacks=[MLFlowLoggerCallback(
            experiment_name="experiment1",
            save_artifact=True)])

Ray Tune runs many different training runs, each with a different hyperparameter configuration, all in parallel. These runs can all be seen in the MLflow UI, where you can visualize any of your logged metrics. When the MLflow tracking server is remote, others can also access the results and artifacts of your experiments.

If you want to manage what information gets logged yourself rather than letting Ray Tune handle it for you, you can use the mlflow_mixin API.

Add a decorator to your training function, and you can call any MLflow methods inside the function:

    import mlflow
    from ray.tune.integration.mlflow import mlflow_mixin

    @mlflow_mixin
    def train_fn(config):
        ...
        mlflow.log_metric(...)

The mixin API also allows you to leverage MLflow automatic logging if you are using a supported framework such as XGBoost, PyTorch Lightning, Spark, Keras, and many more.

    import mlflow
    import xgboost as xgb
    from ray.tune.integration.mlflow import mlflow_mixin

    @mlflow_mixin
    def train_fn(config):
        # enable MLflow automatic logging for the supported framework
        mlflow.autolog()
        xgboost_results = xgb.train(config, ...)

You can check out the documentation for full runnable examples and more information.

Ray Serve + MLflow Models

MLflow models can be conveniently loaded as Python functions, which means that they can be served easily using Ray Serve. The desired version of your model can be loaded from a model checkpoint or from the MLflow Model Registry by specifying its Model URI. Here’s how this looks:

    import ray
    from ray import serve

    import mlflow.pyfunc

    class MLFlowBackend:
        def __init__(self, model_uri):
            # load the MLflow model as a generic Python function
            self.model = mlflow.pyfunc.load_model(model_uri=model_uri)

        async def __call__(self, request):
            return self.model.predict(request.data)

    ray.init()
    client = serve.start()

    # This can be the same checkpoint that was saved by MLflow Tracking
    model_uri = "/Users/ray_user/my_mlflow_model"

    # Or you can load a model from the MLflow Model Registry
    model_uri = "models:/my_registered_model/Production"
    client.create_backend("mlflow_backend", MLFlowBackend, model_uri)

While the strategy above lets you use the full features of Ray Serve for scaling and training, an upcoming Ray Serve MLflow deployment plugin, which implements the MLflow deployments API (including a command-line interface), will make integrating Ray Serve into your MLflow workflow even more seamless. Stay tuned for this feature.
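For reference, the two non-path forms a model URI usually takes can be sketched with a pair of small helpers. The helper names and the example run ID are hypothetical; the "models:/" and "runs:/" schemes are the ones MLflow uses for the Model Registry and for artifacts of a tracking run.

```python
def registry_model_uri(name, version_or_stage):
    """URI for a model in the MLflow Model Registry, addressed by a stage
    such as "Production" or by a version number such as 3."""
    return f"models:/{name}/{version_or_stage}"


def run_model_uri(run_id, artifact_path="model"):
    """URI for a model logged as an artifact of an MLflow tracking run."""
    return f"runs:/{run_id}/{artifact_path}"


print(registry_model_uri("my_registered_model", "Production"))
print(run_model_uri("abc123"))  # "abc123" is a placeholder run ID
```

Either string can be passed as model_uri to mlflow.pyfunc.load_model, so the backend class above does not need to know where the model came from.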

Conclusion and Outlook

Using Ray with MLflow makes it much easier to build distributed ML applications and take them to production. Ray Tune+MLflow Tracking makes development and experimentation much faster and more manageable, and Ray Serve+MLflow Models simplifies deploying your models at scale.

What’s Next

Give this integration a try by installing the latest Ray nightly wheels and running pip install mlflow. Also stay tuned for a future deployment plugin that further integrates Ray Serve and MLflow Models.

For now you can:

Check out the documentation for the Ray Tune + MLflow Tracking integration and the runnable example

See how you can use this integration to tune and autolog a PyTorch Lightning model in the example.

Please feel free to share your experiences on the Ray Discourse or join the Ray community Slack for further discussion!

Credits

Thanks to the respective Ray and MLflow team members from Anyscale and Databricks: Richard Liaw, Kai Fricke, Eric Liang, Simon Mo, Edward Oakes, Michael Galarnyk, Jules Damji, Sid Murching and Ankit Mathur.

Additional Resources

For more information about Ray Tune, check out the following links:

Ray Summit Connect, June 2020: Introduction and Richard Liaw

What's New with Ray Libraries: Tune - Richard Liaw, Anyscale

For more information on Ray Serve, check out the following links: