Ray breaks the $1/TB barrier as the world’s most cost-efficient sorting system

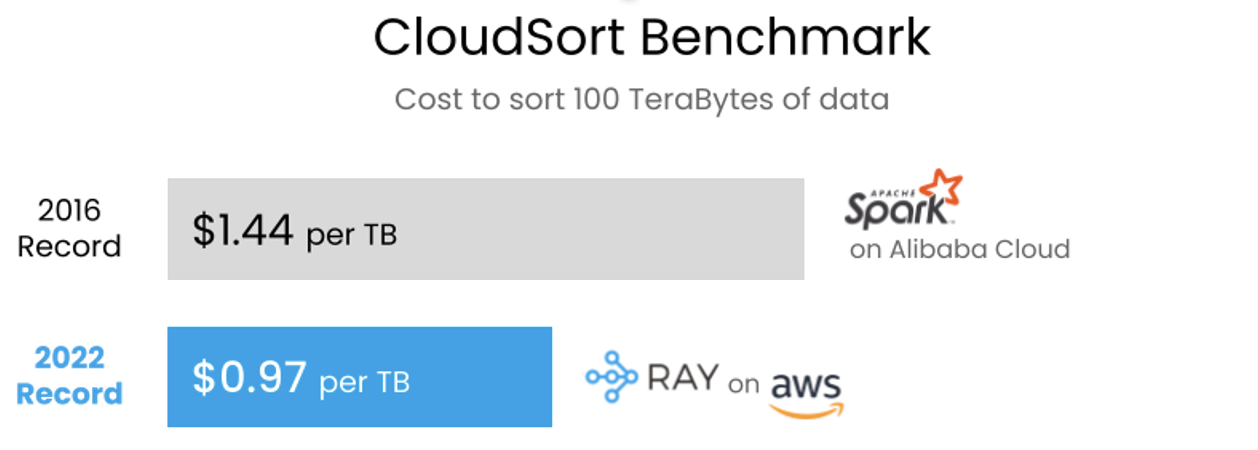

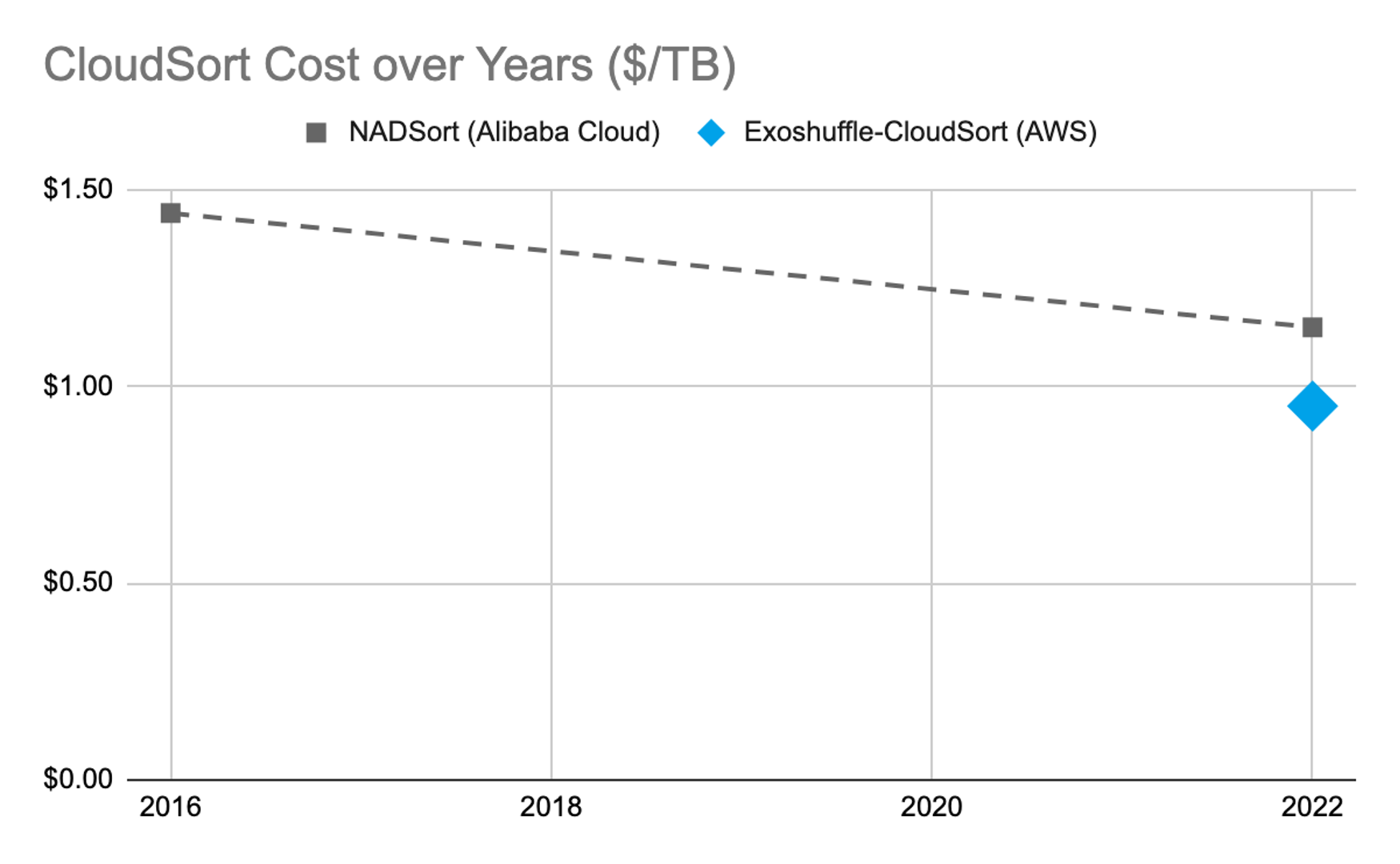

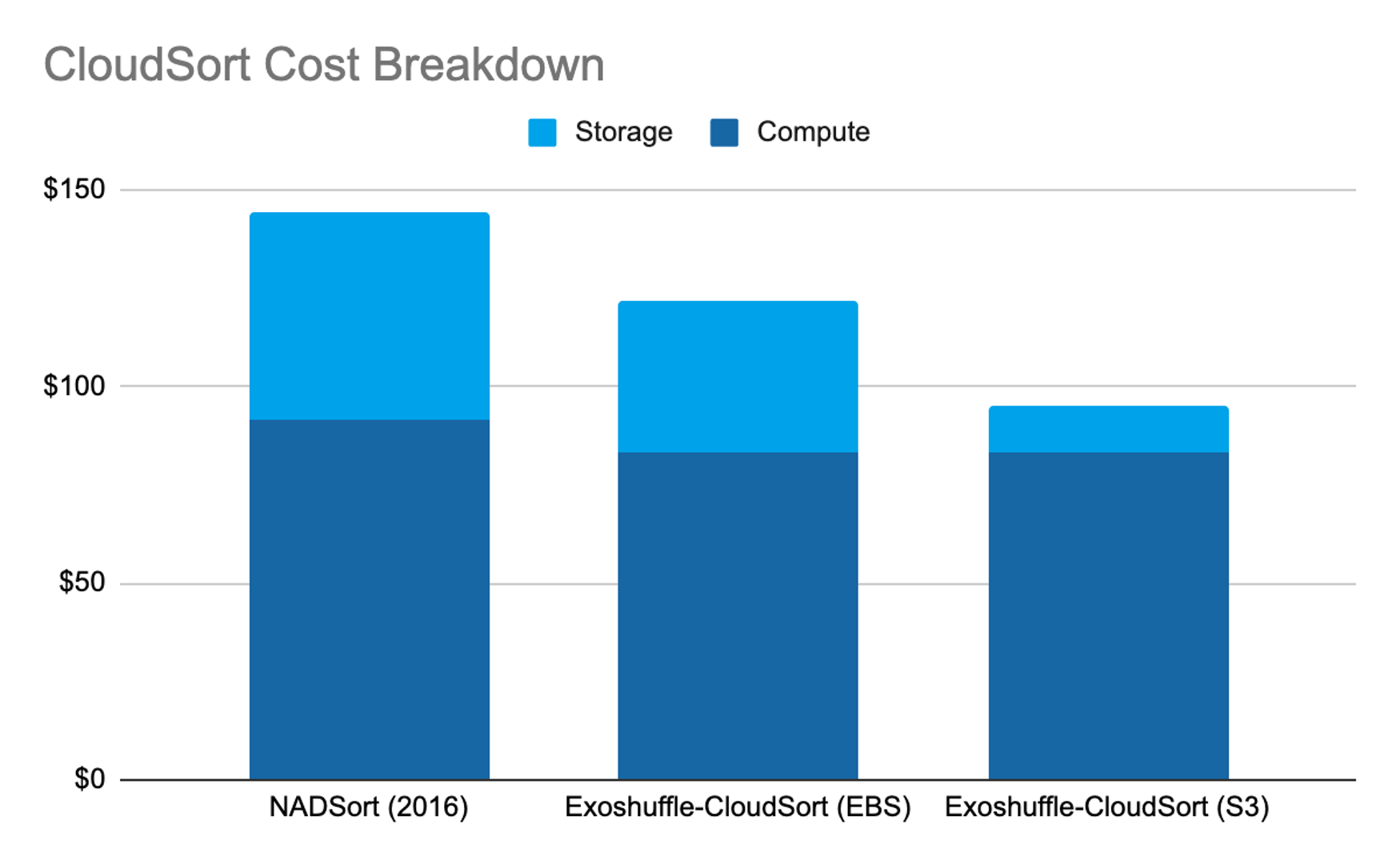

We are excited to announce a new world record on the CloudSort benchmark using Ray. We at the Sky Computing Lab at UC Berkeley developed Exoshuffle, a new architecture for building distributed shuffle that is simple, flexible, and achieves high performance. Building upon this architecture and Ray, Exoshuffle-CloudSort is now the most cost-efficient way to sort 100TB of data on the public cloud, using only $97 worth of cloud resources, or $0.97 per terabyte. This is 33% more cost-efficient than the previous world record, set by Apache Spark in 2016, and 15% cheaper when factoring in decreasing hardware costs.

Ray sets the new CloudSort world record, 33% more cost-efficient than previous state-of-the-art and 15% cheaper when factoring in decreasing hardware costs.

Sorting is one of the most challenging system performance benchmarks because it stress-tests all aspects of the system. To reach high performance on the sorting benchmark, the system must eliminate bottlenecks in both the hardware stack (CPU, memory, disk, network) and the software stack (OS, filesystem, runtime libraries). The new Exoshuffle-CloudSort record shows that Ray has achieved the scalability and performance required to run the most challenging distributed data processing jobs.

What makes the new world record possible? We attribute this success to innovations in both open-source software and in cloud infrastructure. In the rest of this blog, we will look at how Ray simplifies high-performance distributed programming and how recent advancements in cloud infrastructure improve cost efficiency.

Ray: A new way of building distributed applications

The Exoshuffle-CloudSort program comprises fewer than 1,000 lines of application-level Python code, yet it reaches world-class performance and scalability. This is unlike previous high-performance distributed systems, which were built from the ground up. For example, the previous record for CloudSort used a heavily optimized shuffle system that required changes to the internals of the Apache Spark framework.

In contrast, Exoshuffle-CloudSort runs as an application on unmodified Ray, a general-purpose distributed execution framework that supports not only distributed sorting but also a diverse range of applications and workloads, such as distributed ML training, tuning and serving, and reinforcement learning.

So what is the Ray magic that makes all of this possible?

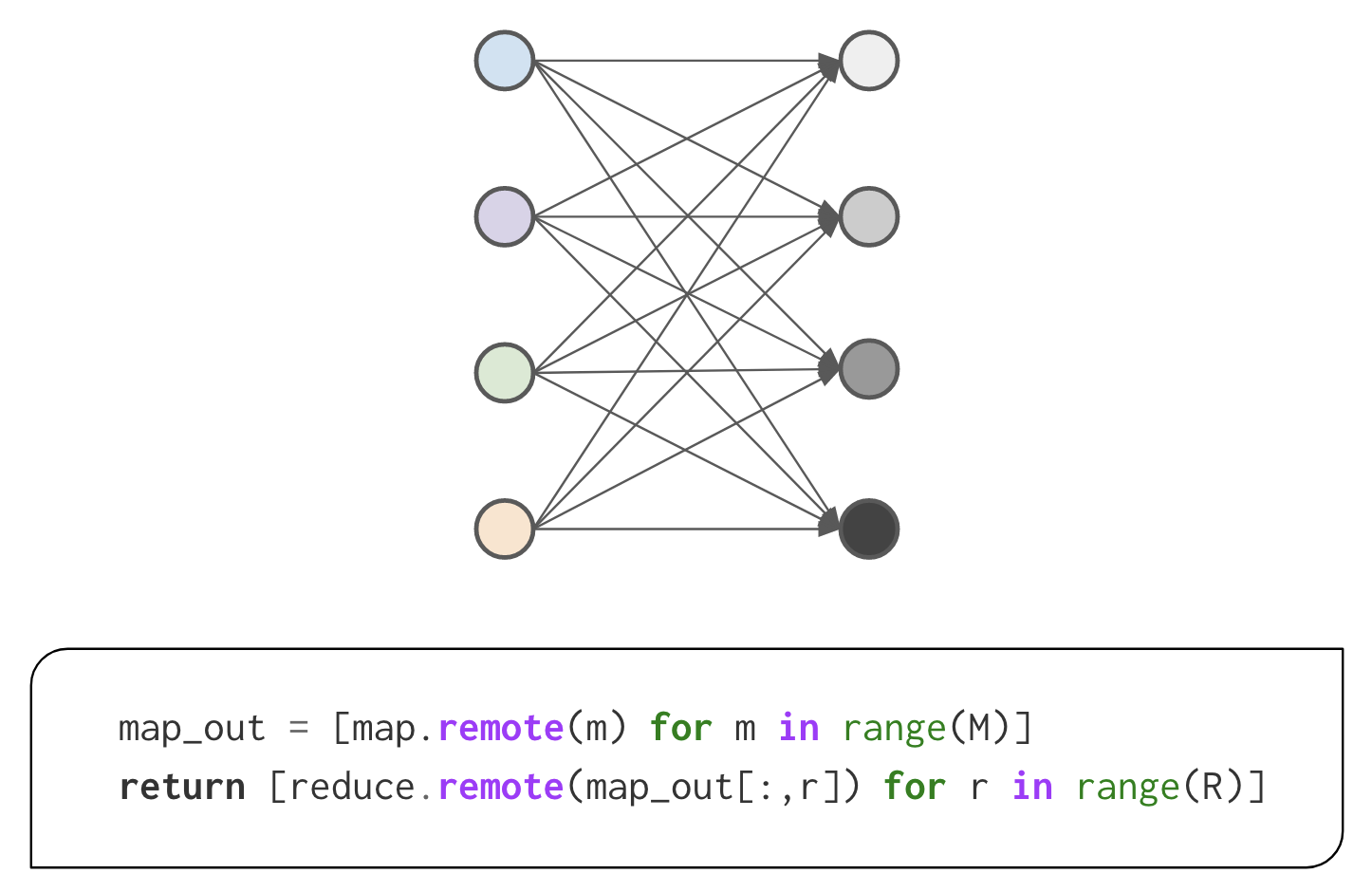

First, Ray uses distributed futures as a general and flexible abstraction to enable building complex distributed applications with fine-grained control. A distributed future is a reference to an object that can exist anywhere in a cluster (“distributed”), and whose value may not yet be computed (“future”). You can pass distributed futures as arguments to remote tasks, and immediately get back another distributed future that represents the result of the task. This allows programmers to specify dynamic computation graphs in a simple and intuitive way. For example, the quintessential MapReduce compute paradigm can be specified in the following two lines of code:

These two lines of code create a simple MapReduce task graph. Details on how Ray executes this are in Executing a distributed shuffle without a MapReduce system.
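The shape of that task graph can be sketched locally with Python's standard-library `concurrent.futures`, used here as a stand-in for distributed futures so that the example runs without a cluster. This is not Ray's API: the names `map_task`, `reduce_task` and `run_shuffle`, the modulo partitioning, and the tiny inputs are all illustrative assumptions. One difference is that Ray resolves futures passed as arguments before a task starts, whereas this sketch has to call `.result()` inside the reduce task.

```python
from concurrent.futures import ThreadPoolExecutor


def map_task(values, num_reducers):
    """Split one input partition into one bucket per reducer (hypothetical rule: v % R)."""
    buckets = [[] for _ in range(num_reducers)]
    for v in values:
        buckets[v % num_reducers].append(v)
    return buckets


def reduce_task(map_futures, r):
    """Gather bucket r from every map output and sort it."""
    merged = []
    for fut in map_futures:
        merged.extend(fut.result()[r])  # blocks until that map task has finished
    return sorted(merged)


def run_shuffle(inputs, num_reducers):
    with ThreadPoolExecutor(max_workers=4) as pool:
        # These two lines build the whole task graph; both return immediately.
        map_out = [pool.submit(map_task, part, num_reducers) for part in inputs]
        reduce_out = [pool.submit(reduce_task, map_out, r) for r in range(num_reducers)]
        return [f.result() for f in reduce_out]


inputs = [[9, 4, 7], [1, 8, 3], [6, 2, 5], [0, 11, 10]]
print(run_shuffle(inputs, 3))  # [[0, 3, 6, 9], [1, 4, 7, 10], [2, 5, 8, 11]]
```

Every reduce task depends on every map task, which is the all-to-all dependency pattern that defines a shuffle.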

Second, Ray implements system-level functionalities required for efficiently executing user programs on a distributed cluster, including:

Scheduling: Ray handles asynchronous task scheduling and resource allocation automatically. Advanced programs like Exoshuffle-CloudSort can also customize when and where to run tasks.

Distributed memory: The user program manipulates object references in a virtual, infinite address space; Ray automatically transfers objects across the network to fulfill dependencies, spills objects to persistent storage when memory is full, and uses reference counting to clean up dead objects.

I/O Pipelining: The network transfer, spilling and recovery of objects are transparent to the application and are performed asynchronously.

Fault Tolerance: Ray uses lineage reconstruction to transparently re-execute tasks when a worker process fails.
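To make the idea of lineage reconstruction concrete, here is a toy, single-process sketch. It is not how Ray implements the feature: the class `ToyLineageStore`, its methods, and the assumption that every task is deterministic are all hypothetical. The store remembers which function and which arguments produced each object, so a lost value can be recomputed on demand.

```python
class ToyLineageStore:
    """Toy object store that records how each object was produced."""

    def __init__(self):
        self._values = {}    # object id -> value (can be lost)
        self._lineage = {}   # object id -> (function, ids of argument objects)
        self.executions = 0  # total task executions, for illustration only

    def submit(self, fn, *arg_ids):
        """Run fn on the referenced objects and return the id of its result."""
        obj_id = len(self._lineage)
        self._lineage[obj_id] = (fn, arg_ids)
        self._values[obj_id] = self._run(obj_id)
        return obj_id

    def _run(self, obj_id):
        fn, arg_ids = self._lineage[obj_id]
        args = [self.get(a) for a in arg_ids]  # may recursively rebuild inputs
        self.executions += 1
        return fn(*args)

    def lose(self, obj_id):
        """Simulate losing an object, e.g. because its worker died."""
        self._values.pop(obj_id, None)

    def get(self, obj_id):
        """Return the value, re-executing its task if the value was lost."""
        if obj_id not in self._values:
            self._values[obj_id] = self._run(obj_id)
        return self._values[obj_id]


store = ToyLineageStore()
raw = store.submit(lambda: [5, 2, 9, 1])  # a task with no inputs
ordered = store.submit(sorted, raw)       # a task that depends on raw
store.lose(raw)
store.lose(ordered)
print(store.get(ordered), store.executions)  # [1, 2, 5, 9] 4
```

The caller never sees the failure: `get` returns the same value as before, at the price of two extra task executions.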

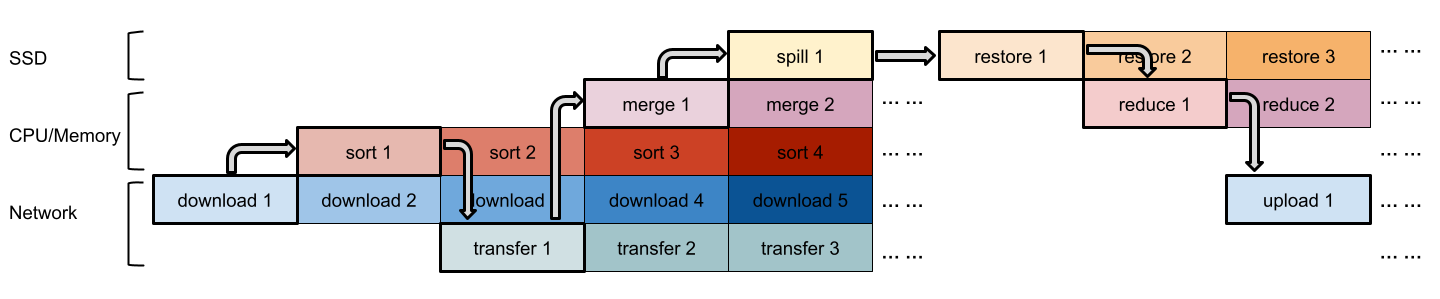

By providing a high-performance data plane, Ray allows programmers to build complex distributed applications with ease. We were able to quickly experiment with different shuffle strategies employed by previous specialized shuffle systems (details in the Exoshuffle technical paper). In Exoshuffle-CloudSort, we use a push-based shuffle algorithm that focuses on improving pipelining efficiency. A simplified pipeline is shown below.

In push-based shuffle, CPU and I/O tasks are pipelined to increase resource utilization. Many parts of the pipelining are provided by the Ray system “for free”.
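A heavily simplified sketch of the pipelining idea follows. It assumes a map, merge, reduce structure processed in rounds, and uses threads in one process in place of a cluster. The function names, the `round_size` parameter and the range boundaries are illustrative assumptions, not the Exoshuffle-CloudSort code.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def map_task(values, bounds):
    """Sort one input partition and cut it into one sorted block per reducer."""
    blocks = [[] for _ in range(len(bounds) + 1)]
    for v in sorted(values):
        r = sum(v >= b for b in bounds)  # index of the key range v falls into
        blocks[r].append(v)
    return blocks


def merge_task(round_blocks):
    """Merge the sorted blocks of one map round, separately for each reducer."""
    num_reducers = len(round_blocks[0])
    return [list(heapq.merge(*(b[r] for b in round_blocks)))
            for r in range(num_reducers)]


def push_based_sort(partitions, bounds, round_size=2):
    merge_futs = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        for i in range(0, len(partitions), round_size):
            round_parts = partitions[i:i + round_size]
            map_futs = [pool.submit(map_task, p, bounds) for p in round_parts]
            round_blocks = [f.result() for f in map_futs]
            # The merge is submitted without waiting for it, so it overlaps
            # with the next round of map tasks.
            merge_futs.append(pool.submit(merge_task, round_blocks))
        runs = [f.result() for f in merge_futs]
    # Final reduce: each reducer merges its pre-merged run from every round.
    return [list(heapq.merge(*(run[r] for run in runs)))
            for r in range(len(bounds) + 1)]


partitions = [[7, 1, 12], [3, 9, 5], [10, 2, 8], [6, 11, 4]]
print(push_based_sort(partitions, bounds=[5, 9]))
# [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
```

Merging early means each reducer reads a few larger sorted runs instead of one small block per map task.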

A new disaggregated cloud-native architecture

With Ray making distributed programming simpler, developers can focus more on maximizing resource efficiency on today’s cloud infrastructure.

Constant technological advancements are lowering the cost of compute and storage. However, this alone would not have made this new world record possible. As a baseline, we took the setup from the previous world record from 2016, and looked up its cost on today’s Alibaba Cloud. It turned out that the same amount of resources that cost $144 six years ago would cost $115 today. Therefore, although the cloud is getting cheaper, the cost reduction alone doesn’t fully explain the new record.
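The relationship between these figures can be checked with a few lines of arithmetic. The sketch below uses only the rounded dollar amounts quoted in this post, so its percentages are approximate; the helper name `saving` is an illustrative choice.

```python
def saving(old_cost, new_cost):
    """Fractional cost reduction when going from old_cost to new_cost."""
    return (old_cost - new_cost) / old_cost


RECORD_2016 = 144.0        # USD, previous record as reported in 2016
RECORD_2016_TODAY = 115.0  # USD, the same resources at today's prices
EXOSHUFFLE = 97.0          # USD, Exoshuffle-CloudSort sorting 100 TB

print(f"cost per TB: ${EXOSHUFFLE / 100:.2f}")
print(f"vs. 2016 record: {saving(RECORD_2016, EXOSHUFFLE):.1%}")
print(f"vs. 2016 setup at today's prices: {saving(RECORD_2016_TODAY, EXOSHUFFLE):.1%}")
print(f"price decline alone: {saving(RECORD_2016, RECORD_2016_TODAY):.1%}")
```

With these rounded inputs, the price decline by itself accounts for roughly a 20% reduction, while the new record is roughly a third cheaper than the 2016 one. The remaining gap is what the software and architecture changes have to explain.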

Disaggregated storage, the separation of compute and storage, turns out to be critical for breaking the CloudSort record. Disaggregated storage allows for scaling compute and storage independently and elastically. This architecture is employed by recent data lake and lakehouse designs. We store the input and output data on the storage service Amazon S3, instead of block storage devices mounted to individual VMs. This architecture has several advantages:

S3 only charges for IOPS whereas EBS charges for both IOPS and throughput. For throughput-oriented applications such as sorting, S3 is therefore very cost-efficient. The S3 cost was $12 for CloudSort. If we used EBS instead, its cost would have been $38.74.

S3 storage does not require provisioning in advance, whereas EBS must be provisioned and mounted ahead of time. This makes S3 more elastic to varying data sizes and free of data locality issues.

Storing data on S3 means the data is preserved under worker failure. We are working to take advantage of this property, plus Ray’s fault tolerance capabilities, to make CloudSort work on auto-scaling clusters of spot instances.
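As a rough what-if using the storage figures above, the snippet below swaps the S3 cost for the EBS estimate. It assumes every other cost component of the run stays the same, which is a simplification made only for illustration.

```python
TOTAL_COST = 97.00  # USD, full Exoshuffle-CloudSort run
S3_COST = 12.00     # USD, storage cost with Amazon S3
EBS_COST = 38.74    # USD, estimated storage cost with EBS instead

s3_share = S3_COST / TOTAL_COST
total_with_ebs = TOTAL_COST - S3_COST + EBS_COST  # assumes all else is unchanged

print(f"S3 share of total cost: {s3_share:.1%}")
print(f"hypothetical total with EBS: ${total_with_ebs:.2f}")
```

Under that assumption the run would cost more than $1 per terabyte, which illustrates why the storage choice matters for the record.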

Cost breakdown of different CloudSort runs. Amazon S3 provides the best I/O performance with the lowest cost.

The significance of Exoshuffle-CloudSort

What is the significance of the sorting benchmark?

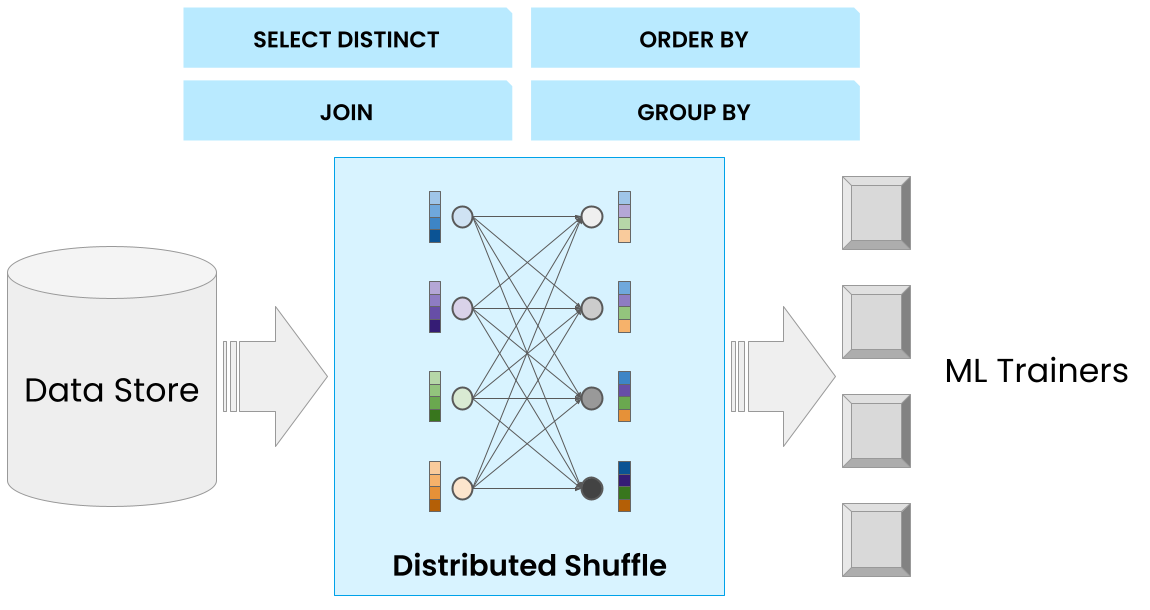

The sorting benchmark is effectively a shuffle benchmark. Shuffle refers to the all-to-all data transfer in a distributed cluster, and is a key operation that underpins many distributed data processing applications. It is difficult to execute efficiently because of the need to transfer a large number of small data blocks across memory, disk and network. Shuffle’s applications include many common data processing operations such as joins and group-bys; another example is data loading for distributed ML training, which needs to randomly shuffle data partitions between training epochs.
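A quick back-of-the-envelope calculation shows why the blocks get small. In an all-to-all shuffle, each of the M map tasks produces one block for each of the R reduce tasks, so the number of blocks grows as M × R. The partition counts below are hypothetical round numbers chosen for illustration, not the configuration used in the record run.

```python
TB = 10**12  # bytes, decimal terabyte


def shuffle_block_stats(total_bytes, num_map, num_reduce):
    """Return (number of shuffle blocks, average block size in bytes)."""
    num_blocks = num_map * num_reduce
    return num_blocks, total_bytes / num_blocks


blocks, avg_bytes = shuffle_block_stats(100 * TB, num_map=10_000, num_reduce=10_000)
print(f"{blocks:,} blocks of about {avg_bytes / 10**6:.0f} MB each")
# 100,000,000 blocks of about 1 MB each
```

Doubling both partition counts quadruples the number of blocks and shrinks each one to a quarter of its size, so per-block overheads quickly come to dominate.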

The popular belief is that specialized shuffle systems are necessary for achieving high performance. Our new world record shows that this need not be the case: shuffle can run as an application program on a generic distributed execution system, such as Ray, with state-of-the-art performance and scalability. This opens up the possibility of running a rich set of data processing workloads on Ray.

We are working to bring the Exoshuffle capabilities into Ray Datasets, a distributed data preprocessing library, to support use cases such as shuffling data during loading for ML training. This milestone is another step towards Ray as a common dataplane for distributed applications including data processing, ML training, tuning and serving.

We are happy to open-source the code for Exoshuffle-CloudSort at https://github.com/exoshuffle/cloudsort. Anyone is welcome to run the code to reproduce our results and to help improve it.

Acknowledgements

I would like to thank my teammates at UC Berkeley for making this achievement possible: Stephanie Wang, Samyu Yagati, Sean Kim, Kenneth Lien, Isaac Ong, Tony Hong, Ion Stoica, and our collaborators at Anyscale, SangBin Cho, Chen Shen and Eric Liang for their support. We also thank the benchmark committee Mehul Shah, Chris Nyberg and George Porter for validating our records and for pushing the boundaries of computing together.

Note: Our 2022 CloudSort Benchmark submission is in the Indy category. We are working on a Daytona category submission, which involves sorting datasets with skewed distribution, and the result will be reviewed by the Sort Benchmark committee as a 2023 entry.

About the Author

Frank Sifei Luan is a PhD student at the Sky Computing Lab at UC Berkeley, advised by Ion Stoica. His research interest is in data, AI systems and cloud computing.

(Link to bio: https://people.eecs.berkeley.edu/~lsf/)