Ray Forward 2022

This year’s Ray Forward conference highlighted exciting and revealing stories of Ray’s adoption in China, including hyper-scale Ray applications at Ant Group, production deep learning on Ray at DJI, a next-generation Ray-based ML platform at Qihoo, and much more!

Since 2020, the Ray community in China has organized a “Ray Forward” conference as a companion and prelude to Ray Summit. This year’s Ray Forward, held on August 5, showcased how organizations across China are adopting Ray.

Let’s start with the key themes that arose in Ray user journeys before diving into in-depth technical discussions about each organization using Ray.

Key Ray observations and highlights:

A common observation is that the number of “vertical” computing systems (built to fulfill different business needs such as search, recommendation, and risk) is growing rapidly. Collectively, these systems are becoming overwhelmingly complex, and Ray is emerging as an ideal abstraction layer to “push down” the complexities shared by multiple verticals.

Ant Group more than doubled their Ray usage this year, to over 400k CPU cores – this includes expanded usage of online serving with Ray, and new use cases such as audio / video processing.

DJI uses Ray for distributed deep learning in production because of the flexibility and low overhead of adding and removing GPUs, and because of Ray’s high-performance object store.

Huawei Cloud is launching a new framework called Fathom (powered by Ray). Ray’s simple distributed programming model allows Fathom to connect previously disjoint data and AI systems and to reduce the complexity of data movement.

Qihoo 360 introduced an ML toolkit Veloce (built on Ray), which provides more flexible heterogeneous hardware support, more rapid development iterations, and stronger cross-stack optimizations than their existing Kubeflow-based system.

Baihai Tech is using Ray to build their machine learning (ML) platform (a SaaS offering). Ray’s greatest value propositions for them are the Python-native scalable computing APIs and the KubeRay integration, which allows for easy autoscaling on K8s.

Byzer is an open source programming language for analytics and AI. Using Ray (together with Spark), Byzer integrates SQL data processing (on Spark), model training (on Ray), and serving (on Ray) in a flexible, efficient, and scalable way.

Now, let’s dive deeper into how each organization is using Ray as its foundational compute layer at scale, and explore their use cases.

Ant Group

Ruoyi Ruan (who leads the Next-Generation Compute and Real-Time Compute teams) introduced:

Why Ant Group decided to bet on Ray

Current state of Ray’s adoption

Ant Group’s contributions to open source Ray

Why Ray?

A major pain point at Ant Group is the complexity of compute systems [ref]. Over the past several years, the variety of data- and compute-intensive business applications at Ant Group has grown very quickly, and now includes financial data processing, search, AI, recommendations, audio/video, and more. As a result, many “vertical” compute systems were built that overlap architecturally and repeatedly solve the same technical problems, such as autoscaling.

Ray provides the right level of abstraction and serves as the common foundation for these applications. Many common needs can be pushed down to the Ray layer, including communication, fault tolerance, high frequency/performance scheduling, and more.

State of Ray’s adoption at Ant

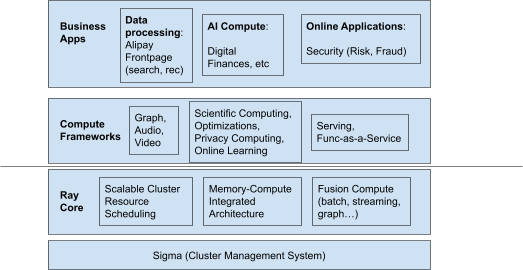

As shown in the above figure, many different compute frameworks and business applications at Ant Group (and Alibaba Group) are powered by Ray:

Data Processing

Ray is the foundation for Ant’s real-time graph computing system, Geaflow

Support of audio/video processing for the Ant frontpage

AI compute

Scientific computing via the open source Mars project from Alibaba Group

AntOpt: a cutting edge, large scale optimization system (see blog)

SecretFlow: a privacy computing framework

Mobius: an online learning system that supports Alipay’s search and recommendation functionalities

Ant Ray Serving: supports the unified inference service “Maya” at Ant

Ant Group runs multiple Ray clusters totaling over 400k CPU cores, more than double the 2021 scale of around 200k cores.

Open source contributions

Ant Group has been the second largest contributor to the Ray project, with eight committers and about 25% of the lines of code (especially in Ray Core). The Ant Ray team has either driven or made significant contributions to features such as the Java/C++ APIs, Actor fault tolerance, Ray Serve, and runtime_env.

DJI

DJI is the world’s largest producer of consumer drones. Shuang Qiu from DJI shared their experience of using Ray in distributed deep learning training.

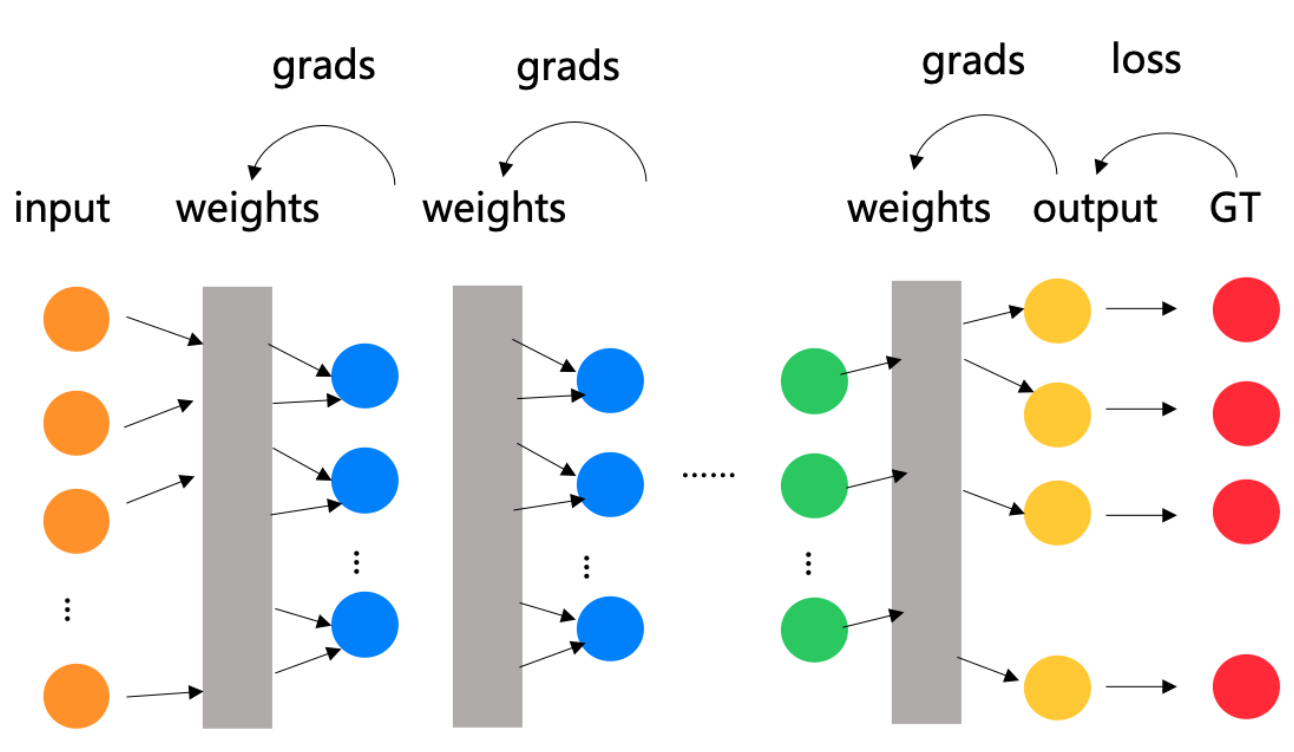

Deep learning training usually has a large number of iterations, with each iteration being short and the total elapsed time being long. These characteristics lead to two key requirements for the underlying infrastructure: strong tolerance for single node failures (avoid failing the entire job); and elasticity and scalability of compute resources (which determine the training efficiency and resource utilization).

Why Ray?

There are a few important reasons why the DJI engineering team considers Ray the ideal backend for distributed deep learning. First, there are strong case studies of deep learning on Ray (e.g., Horovod on Ray at Uber). Second, Ray’s actors and tasks carry lower overhead than containers, and its object store meets DJI’s performance needs. Third, Ray supports flexible computation.

DJI’s innovation in deep learning on Ray

In using Ray to support distributed deep learning training, the DJI engineering team made two main innovations:

Dynamically add / remove GPUs during training

This is done by setting up special “monitor” and “manager” Ray Actors to manage the status of GPU devices, and checkpointing-restarting when appropriate.

Take over PyTorch’s launcher module and configure each Ray Actor’s rank in the collective group

Re-tune hyperparameters when the number of GPUs changes for model quality and consistency

Reduce the network I/O overhead from frequent data accesses

Data import is usually the bottleneck as each node requires a full copy of the data.

DJI uses the Ray object store to share training data among workers in the same Ray cluster, so that each node downloads only part of the data from remote storage rather than the cluster pulling (N-1) additional full copies. This also reduces disk spilling to SSD and minimizes its impact on the performance of the training job.

In epoch 0, all data is loaded into the Ray object store. In subsequent epochs the data is only shuffled: workers fetch data from each other and delete the shuffled data after each epoch. Each worker therefore always holds 1/N of the data, and in each epoch dynamically loads 1/N of the data from other nodes. This offers performance parity with PyTorch’s native data loader.

If a node fails, the data owned by the node will be distributed to the remaining healthy nodes
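The sharing scheme described above can be illustrated with a small, pure-Python simulation. This is only a sketch under stated assumptions, not DJI's implementation: it tracks sample ids in plain dictionaries instead of placing data in the Ray object store, and the function names (`initial_shards`, `reshuffle`, `drop_worker`) and the round-robin redistribution policy are hypothetical choices made for illustration.

```python
import random
from typing import Dict, List

# Maps a worker name to the sample ids it currently holds (hypothetical structure).
Shards = Dict[str, List[int]]


def initial_shards(num_samples: int, workers: List[str]) -> Shards:
    """Epoch 0: each worker loads only its own ~1/N slice of the sample ids."""
    n = len(workers)
    return {w: list(range(k, num_samples, n)) for k, w in enumerate(workers)}


def reshuffle(shards: Shards, seed: int) -> Shards:
    """Later epochs: shuffle ids across workers so each again holds ~1/N."""
    workers = sorted(shards)
    pool = sorted(i for ids in shards.values() for i in ids)
    random.Random(seed).shuffle(pool)
    n = len(workers)
    return {w: pool[k::n] for k, w in enumerate(workers)}


def drop_worker(shards: Shards, failed: str) -> Shards:
    """Node failure: hand the failed worker's samples to the survivors round-robin."""
    survivors = sorted(w for w in shards if w != failed)
    if not survivors:
        raise ValueError("no healthy workers left")
    result = {w: list(shards[w]) for w in survivors}
    for k, sample in enumerate(shards[failed]):
        result[survivors[k % len(survivors)]].append(sample)
    return result


if __name__ == "__main__":
    shards = initial_shards(12, ["w0", "w1", "w2", "w3"])
    shards = reshuffle(shards, seed=1)   # a new seed per epoch gives a new shuffle
    shards = drop_worker(shards, "w1")   # simulate losing one node
    print({w: len(ids) for w, ids in shards.items()})
```

The property to notice is that every sample id stays assigned to exactly one healthy worker after each shuffle and after a failure, so no worker ever needs a full copy of the dataset.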

Huawei Cloud

Huawei Cloud is the second largest public cloud vendor in China. Yu Cao from the ModelArts team shared their experience using Ray to power a new framework called Fathom (to be released at this year’s Huawei Cloud Developers Conference). Fathom’s mission is to provide a seamless development experience for large-scale distributed Data + AI problems.

Why Ray?

In helping their customers adopt AI, the Huawei Cloud team identified a few common but major pain points. First, compute systems are highly complex, and moving data between them is inefficient. Second, data and AI systems are very disconnected, making it difficult to develop applications that require both kinds of computation. Third, in the fast-evolving AI ecosystem, staying up to date with new technologies (especially open source) is difficult.

After searching for the right technology for the AI + Data problem, the Huawei Cloud team chose Ray as the distributed compute backend to tackle those challenges. Ray (together with its ecosystem) provides:

Simplified distributed programming experience with a high-performance object store to eliminate expensive data movements

Native integration between data processing tools such as Modin, Mars, and Dask and AI frameworks including PyTorch and TensorFlow

Future proofing as more and more cutting edge AI systems adopt Ray, making it easy to extend Fathom to support additional open source models and frameworks

Qihoo 360

Qihoo 360 is a large computer security company in China. Xiaoyu Zhai from Qihoo’s ML infra team introduced Veloce, a heterogeneous, low-code ML toolkit based on Ray.

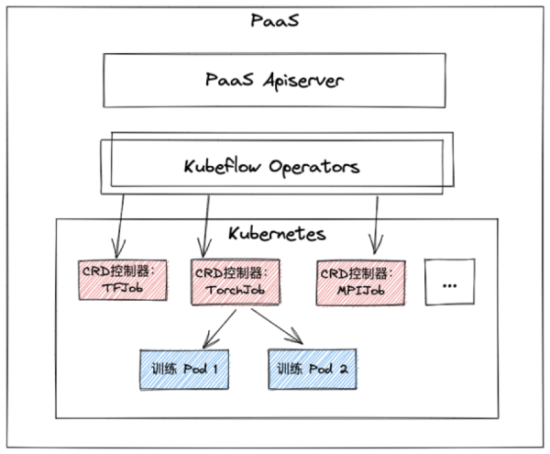

Qihoo’s current ML infra architecture based on Kubeflow

Why Ray?

Qihoo’s current ML infrastructure is based on Kubeflow (as shown in the figure above). When trying to use this architecture to support an increasingly heterogeneous and stateful portfolio of ML workloads, the ML infrastructure team realized it was unable to resolve uncertain heterogeneity on the server side, provide rapid experimentation and development iteration, or achieve holistic, cross-stack optimizations from loosely coupled Kubeflow components.

Kubeflow vs. Ray

Heterogeneity

Kubeflow: A basic principle of K8s Operators is that the roles and responsibilities of individual workloads need to be defined on the server side. In order to experiment with a new heterogeneous architecture, the user needs to develop it continuously inside Operators and bring it online.

Ray: KubeRay only defines cluster resources and performs Ray cluster orchestration. Users can define complex topologies and data structures locally and submit them through clients, so new architectures on top of KubeRay and Ray are introduced entirely on the client side.

Development iterations

Kubeflow: In Kubernetes/Docker mode, users can't run their locally-developed code seamlessly. Oftentimes, this requires rebuilding and redeploying a Docker image.

Ray: With a one-line code change, users can seamlessly scale locally developed complex compute logic to a remote GPU cluster.

Cross-stack optimizations

Kubeflow: Most of Kubeflow's components are independent and disjoint. Interoperability needs to be worked around via external storage and orchestrators.

Ray: Users can rely on Ray Data to address feature processing and sparsity definition without introducing new tools.

Baihai

Baihai Tech is a startup based in Beijing aiming to provide a cloud-native AI development and production platform that is easy, fast, and efficient. Their flagship product IDP (Intelligent Development Platform) is built on Ray as the compute layer.

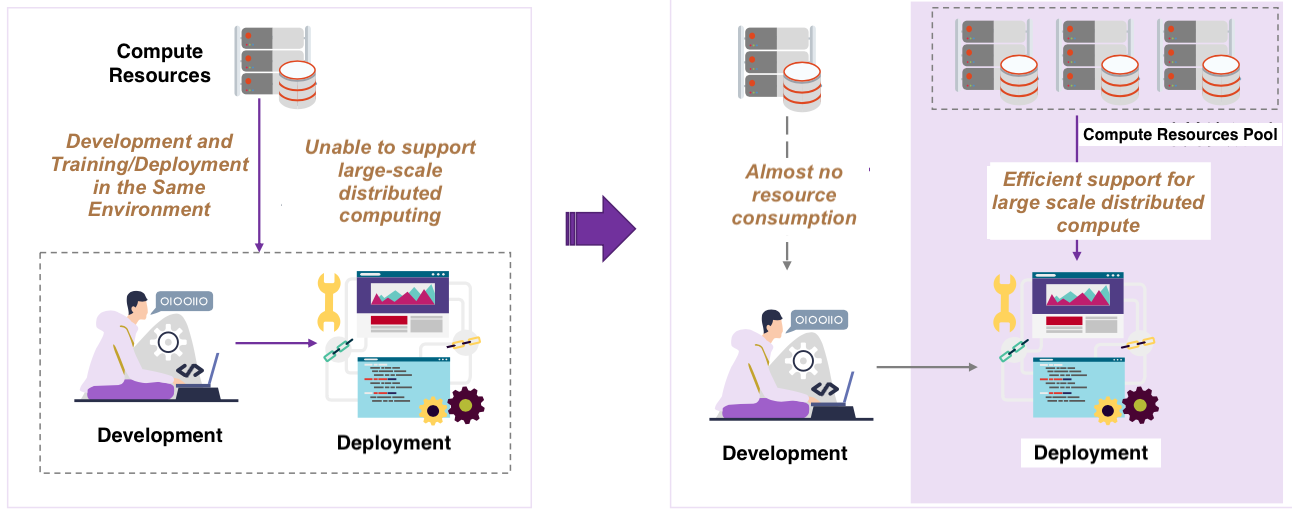

Optimized development to deployment flow with Baihai IDP

Why Ray?

Baihai Tech chose Ray to achieve the above vision because its abstractions and APIs are open, simple, and easily customizable. Ray offers a rich ecosystem of integrations with data and AI libraries, while being Python native and easy for data scientists to use. It complements the IDP orchestration layer well, and KubeRay has great support for autoscaling on K8s.

Byzer

Byzer (formerly MLSQL) is a low-code and cloud-native distributed programming language for data pipeline, analytics and AI. Byzer was created to help data scientists and big data practitioners adopt and benefit from AI — by providing a unified platform and a SQL-like programming language.

A unique feature in Byzer is its use of both Spark and Ray in a hybrid architecture, with the purpose of enabling the scalable execution of Python and seamless inter-op with SQL. The user queries are usually expressed in Byzer’s SQL-like language, which supports standard SQL and Python syntax.

Why Ray (together with Spark)?

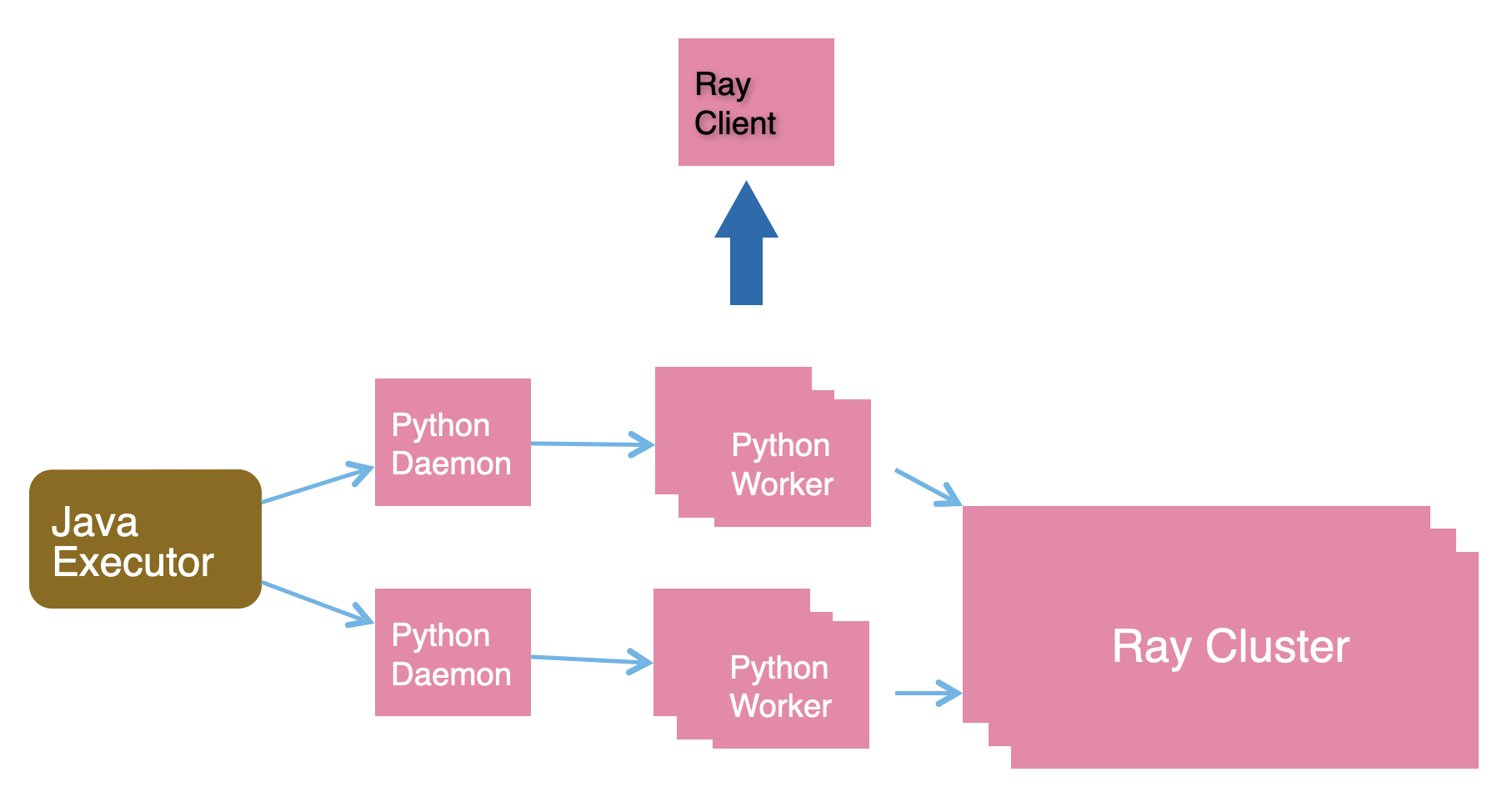

To support scalable execution of Python, Byzer developers evaluated both PySpark and Ray, and chose Ray. Unlike Ray, PySpark requires a JVM executor process to launch Python workers, which brings several fundamental limitations. Python workers cannot communicate with each other, which is an essential requirement for ML workloads. Resource management across Java and Python processes is complicated and adds overhead. Finally, the supported operations are restricted by the PySpark DataFrame API, and distributed programming in Python lacks first-class support.

Head to Ray Summit to Learn More!

If you are interested in learning more about how Ray is used across the industry, please register for Ray Summit with this 50% off code! Ray Summit will be held next week (August 22~24) in San Francisco.