Understanding the Ray Ecosystem and Community

Ray is both a general-purpose distributed computing platform and a collection of libraries targeted at machine learning and other workloads.

Update: Ben has created a short video covering the highlights of this post.

Ray is usually described as a distributed computing platform that can be used to scale Python applications with minimal effort. While this is true, in recent conversations we’ve been highlighting the fact that Ray also draws users with very specific needs. There are now several popular open source libraries built on top of Ray that are attracting users on their own. In this post we describe some early observations about the Ray community.

A framework for building frameworks

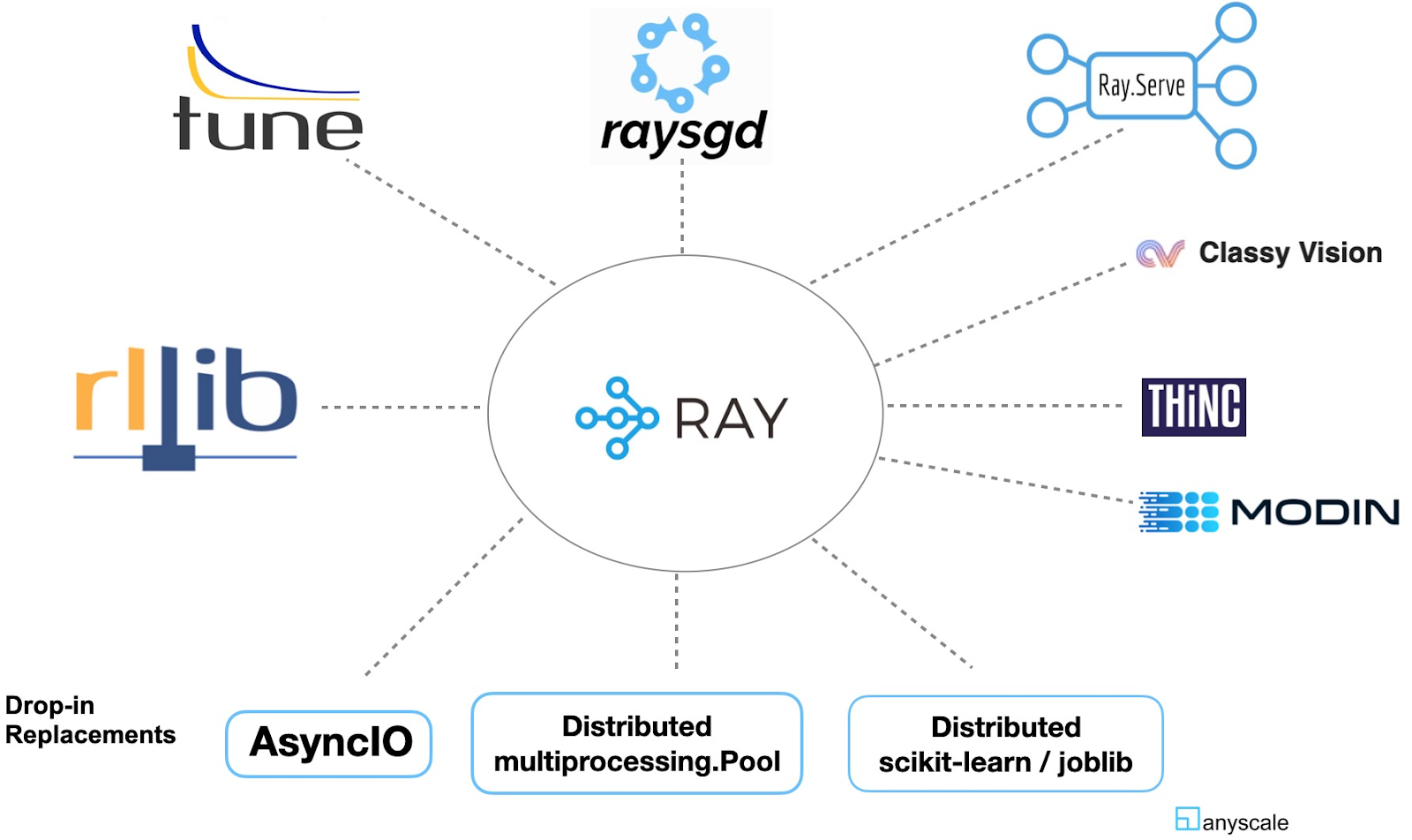

To understand its user base, let’s start by briefly describing some popular libraries and tools built on top of Ray. These examples show that Ray is general enough that companies and users are building flexible libraries and other frameworks on top of it.

There are two types of libraries in the Ray ecosystem: (1) libraries for new workloads and (2) drop-in replacements for parallelizing existing libraries.

Having a variety of libraries appeals to Python developers

Unlike other “end-to-end machine learning platforms”, each of these libraries and tools can be used on its own (a simple pip install and off you go). For example, if you are happy with your model training tools but want to replace your model serving framework, you can use Ray Serve without introducing the other Ray libraries. This flexibility explains why Ray’s libraries have attracted many developers who need to build machine learning applications. Developers and machine learning engineers are accustomed to building applications that combine many libraries written by different parties (a long list of “import” statements in a Python program is quite common).

Libraries are helping grow Ray’s user base

Another important point to emphasize is that each of these libraries helps grow awareness and usage of Ray. In recent conversations with users we found that many of them began using Ray through one of these libraries and tools. Communities are even starting to sprout around each library (there’s an active Slack channel for each). Some of the libraries (Tune, RLlib) now boast a number of production use cases, while the newer libraries (RaySGD, Serve) are attracting many early users.

These libraries also serve as entry points through which many users learn about the broader Ray ecosystem. While Ray users can pick and choose which libraries and tools to use, we have long believed that most of them will end up using more than one of these libraries. Recent conversations with over forty companies have borne this out – as users become comfortable with one of these tools they end up using the other Ray libraries as well.

There are also many users of the core API

So far we’ve focused on users of libraries and tools built on top of Ray. But we’d like to stress that there are also many users of “Ray core”. They tend to fall into two main categories: those who need to scale a Python program or application, and those who need to create scalable, high-performance libraries. For example, we recently spoke with a researcher from Oak Ridge National Laboratory who needed Ray for several large-scale machine learning and natural language applications. Another example comes from one of the largest financial services companies in China: Ant Financial has written libraries on top of Ray to support its massive-scale fraud detection and personalization systems.

We plan to conduct a formal survey of Ray users in the very near future. But for now, think of Ray as both a general-purpose distributed computing platform and a collection of libraries targeted at machine learning and other workloads. Unlike users of monolithic platforms, Ray users are free to use one or more of the existing libraries or to use Ray to build their own.