What is distributed training?

If you’re new to distributed training, or you’re looking for a refresher on what distributed training is and how it works, you’ve come to the right place.

In this article, we’ll start with a quick definition of distributed training and the problem it solves. Then, we’ll explore different things you should take into consideration when you’re selecting a distributed training tool, and introduce you to Ray Train, a one-stop distributed training toolkit.

Let’s jump in.

Introducing distributed training

Training machine learning models is a slow process. To compound this problem, successful models — those that make accurate predictions — are created by running many experiments, where engineers play with different options for model creation.

These options include feature engineering, choosing an algorithm, selecting a network topology, using different loss functions, trying various optimization functions, and experimenting with varying batch sizes and epochs. A handful of best practices help narrow these options based on your data and the prediction you hope to make. Ultimately, though, you still have to run many experiments. The faster your experiments execute, the more experiments you can run, and the better your models will be.

Distributed machine learning addresses this problem by taking advantage of recent advances in distributed computing. The goal is to use low-cost infrastructure in a clustered environment to parallelize model training.

Kubernetes is a popular example of such a cluster. Deploying machine learning models on Kubernetes in a cloud machine learning environment (AWS, Azure, or GCP) can give us access to hundreds of instances of a model-training service. When we divide and distribute the training workload within this cluster, model-training time can drop from hours to minutes compared with training on a local workstation.

Data parallelism and model parallelism

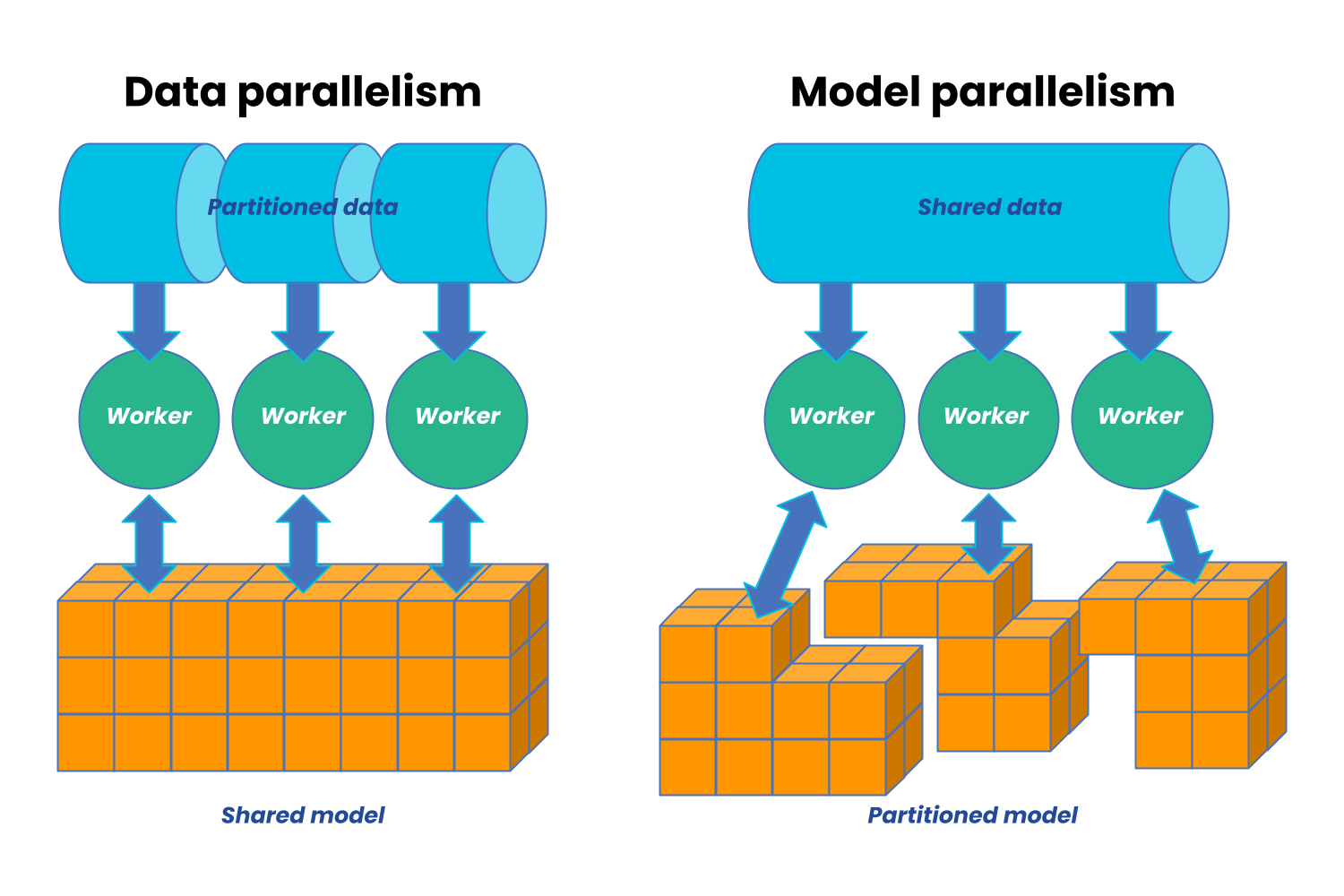

When we parallelize model training, we’re dividing our workload across multiple processors, or workers, in order to speed up the training process. There are two main types of parallelization. Data parallelism is when we divide our training data across our available workers and run a copy of the model on each worker. Each worker then processes a different fragment of the data with the same model, and the workers’ results are combined to update that model. In contrast, model (or network) parallelism is when we divide the model itself across different workers, with each worker running the same data through a different segment of the model.
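To make the data-parallel idea concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how any particular framework implements it: the "workers" are simulated one after another in a single process, the model is a one-parameter linear model `y = w * x`, and the helper names (`shard`, `local_gradient`, `data_parallel_step`) are hypothetical.

```python
# Data parallelism, simulated in one process (illustrative sketch only).
# Every "worker" uses the same weight w but sees only its own data fragment.

def shard(data, num_workers):
    """Split the dataset into contiguous, near-equal fragments."""
    size = (len(data) + num_workers - 1) // num_workers
    return [data[i:i + size] for i in range(0, len(data), size)]

def local_gradient(w, fragment):
    """Summed squared-error gradient on one fragment, plus its sample count."""
    grad_sum = sum(2 * (w * x - y) * x for x, y in fragment)
    return grad_sum, len(fragment)

def data_parallel_step(w, data, num_workers, lr):
    """One training step: compute per-fragment gradients, combine, update w."""
    # In a real cluster these calls would run at the same time on separate workers.
    partials = [local_gradient(w, frag) for frag in shard(data, num_workers)]
    total_grad = sum(g for g, _ in partials)
    total_count = sum(n for _, n in partials)
    # Averaging over all samples gives the same gradient as a single worker would.
    return w - lr * total_grad / total_count

data = [(float(x), 3.0 * x) for x in range(1, 9)]  # generated with true weight 3.0
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, data, num_workers=4, lr=0.01)
```

Because the combined gradient matches the full-batch gradient, splitting the data changes how the work is scheduled, not what the model learns in each step.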

The intricacies of data and model parallelism are beyond the scope of this article, but know that the approach should be based on the size of the model — model parallelism comes in handy in situations where the model is too big for any single worker, such as natural language processing and large-scale deep learning.
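For contrast, model parallelism can be sketched the same way. This is a toy example under assumptions of our own: the model is two small dense layers with ReLU activations, each layer is owned by a hypothetical worker (`worker-0`, `worker-1`), and the hand-off between workers is an ordinary function call rather than a network transfer.

```python
# Model parallelism, simulated in one process (illustrative sketch only).
# Each Stage holds one segment of the model; no stage has all the parameters.

class Stage:
    """One segment of the model, owned by a single hypothetical worker."""

    def __init__(self, name, weights, bias):
        self.name = name
        self.weights = weights  # one row of weights per output unit
        self.bias = bias

    def forward(self, inputs):
        # Dense layer followed by ReLU, using only this stage's parameters.
        outputs = []
        for row, b in zip(self.weights, self.bias):
            z = sum(w * x for w, x in zip(row, inputs)) + b
            outputs.append(max(0.0, z))
        return outputs

def pipeline_forward(stages, inputs):
    """Pass the same input through each model segment in order."""
    activations = inputs
    for stage in stages:
        # In a real cluster the activations would be sent to the next worker here.
        activations = stage.forward(activations)
    return activations

stage_a = Stage("worker-0",
                weights=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                bias=[0.0, 0.0, -1.0])
stage_b = Stage("worker-1", weights=[[1.0, 1.0, 1.0]], bias=[0.5])

prediction = pipeline_forward([stage_a, stage_b], [2.0, 3.0])
```

Each worker only needs enough memory for its own segment, which is why this approach suits models that do not fit on a single worker.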

The gist is that distributed training tools spread the training workload across the available workers, whether those are instances in a cluster or multiple CPUs on a local workstation. Let’s review the distributed training landscape to get a feel for the options available in the marketplace.

Choosing a distributed machine learning training tool

There are many distributed machine learning toolkits. Some are extensions of existing products, a few are APIs that come with existing frameworks, and some are frameworks themselves with their own object models. Each has its pros and cons to consider.

If you want to get started quickly with an effective distributed machine learning toolkit, consider these requirements when shopping:

Easy installation procedures that don’t require infrastructure expertise.

Workstation friendly. Engineers like to play around with their toys locally before deciding if a tool is worth incurring additional costs on a cloud machine learning cluster. A distributed training tool should efficiently run on an engineer’s workstation and take advantage of multiple CPUs.

Notebook support. The tool should work with environments like Jupyter Notebooks for efficient experimentation.

Easy to use. The tool should shield engineers from the complexities of distributed computing so they can focus their attention on training and fine-tuning models.

Fault-tolerant. If an instance of your cluster fails, the framework should be smart enough to reassign the work. You shouldn’t have to start your training over.

Ray Train is designed with these concerns in mind. This framework promises to be easy to install: a single pip install gets you to the point of tinkering with your model. You don’t have to set up a cluster to play with Ray Train, because it uses multiple processes on your workstation to emulate a clustered environment. Ray Train can also run on low-cost infrastructure, since it’s fault-tolerant and can recover from the failure of individual instances.
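The fault-tolerance requirement, reassigning work when an instance fails instead of starting over, can be illustrated with a small sketch. This is not Ray Train’s actual recovery mechanism; it is a simplified simulation in which workers are plain functions, one of them is rigged to fail on a specific shard, and all names (`WorkerFailure`, `make_worker`, `run_with_reassignment`) are hypothetical.

```python
# Reassigning work after a worker failure (illustrative simulation only).

class WorkerFailure(Exception):
    """Raised when a simulated worker is lost mid-task."""

def make_worker(name, fail_on=()):
    """Build a worker function that fails on the given shard ids."""
    def run(shard_id, shard):
        if shard_id in fail_on:
            raise WorkerFailure(f"{name} lost while processing shard {shard_id}")
        return sum(shard)  # stand-in for the real training work on this shard
    run.worker_name = name
    return run

def run_with_reassignment(shards, workers):
    """Process every shard, moving a shard to another worker if one fails."""
    results, assignments = {}, {}
    for shard_id, shard in enumerate(shards):
        for attempt in range(len(workers)):
            # Start with the preferred worker, then try the others in turn.
            worker = workers[(shard_id + attempt) % len(workers)]
            try:
                results[shard_id] = worker(shard_id, shard)
                assignments[shard_id] = worker.worker_name
                break
            except WorkerFailure:
                continue  # completed shards are kept; only this one is retried
        else:
            raise RuntimeError(f"no healthy worker for shard {shard_id}")
    return results, assignments

shards = [[1, 2], [3, 4], [5, 6]]
workers = [
    make_worker("worker-0"),
    make_worker("worker-1", fail_on={1}),  # simulated instance failure
    make_worker("worker-2"),
]
results, assignments = run_with_reassignment(shards, workers)
```

The key property is that the failure of `worker-1` costs only the retry of one shard: results already produced by other workers are kept, and the job still completes.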

Learn more

If you’re ready to learn more about Ray Train, check out the following resources: