Machine learning (ML) has become a cornerstone of innovation across industries. But behind the scenes of every successful ML initiative lies a critical component: ML infrastructure.

What is ML Infrastructure?

ML infrastructure encompasses the underlying systems, tools, and platforms that support the entire machine learning lifecycle. It's the robust foundation that enables data scientists and ML engineers to develop, test, and deploy models efficiently and at scale.

Think of ML infrastructure as the engine room of a large ship. While passengers may not see it, this complex system of machinery keeps the vessel moving forward, adapting to changing conditions, and reaching its destination safely and efficiently.

Key components of ML infrastructure typically include:

Data storage and processing systems

Model training and experimentation platforms

Version control for both data and models

Deployment and serving mechanisms

Monitoring and logging tools

Why ML Infrastructure Matters

Investing in robust ML infrastructure is not just beneficial; it is essential for organizations serious about leveraging machine learning. Here's why:

Scalability: As your ML projects grow, you need an infrastructure that can handle increased data volumes and computational demands. Solid ML infrastructure allows your projects to scale seamlessly from proof-of-concept to production-level applications.

Reproducibility: In ML, reproducibility is paramount. A well-designed infrastructure platform ensures that experiments and model training can be easily reproduced, which is critical for debugging and iterating on models.

Collaboration: ML projects often involve multiple stakeholders, from data scientists to ML engineers to business analysts. A good ML infrastructure facilitates teamwork by providing shared resources, standardized workflows, and clear communication channels.

Efficiency: By automating repetitive tasks and providing streamlined workflows, robust ML infrastructure allows your team to focus on high-value activities like model design and feature engineering, significantly boosting productivity.

Governance and Compliance: In an era of increasing data privacy regulations, ML infrastructure plays a crucial role in maintaining version control, tracking experiments, and ensuring compliance with data handling and privacy requirements.
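To make the reproducibility and versioning points more concrete, here is a minimal, framework-free Python sketch. It assumes a toy training function and records, for each run, content hashes of the input data, the configuration (including the random seed), and the resulting model. The names `train`, `run_record`, and `sha256_of` are hypothetical and not part of any particular platform. Rerunning with the same data and configuration yields the same model hash, which is the property that reproducible experiments and audit trails depend on.

```python
import hashlib
import json
import random


def sha256_of(data: bytes) -> str:
    """Content address: identical bytes always map to the same ID."""
    return hashlib.sha256(data).hexdigest()


def train(dataset: bytes, config: dict) -> list[float]:
    """Hypothetical toy 'training' whose randomness is fully controlled by the seed."""
    rng = random.Random(config["seed"])  # private RNG, unaffected by global random state
    weights = [rng.gauss(0.0, 1.0) for _ in range(config["num_weights"])]
    # Stand-in for optimization that depends on both the data and a hyperparameter.
    scale = len(dataset) * config["lr"]
    return [w * scale for w in weights]


def run_record(dataset: bytes, config: dict, weights: list[float]) -> dict:
    """Audit entry linking a model version to the exact data and settings behind it."""
    return {
        "data_sha256": sha256_of(dataset),
        # sort_keys makes the fingerprint independent of dict insertion order
        "config_sha256": sha256_of(json.dumps(config, sort_keys=True).encode()),
        "model_sha256": sha256_of(json.dumps(weights).encode()),
        "config": config,
    }


dataset = b"user_id,label\n1,0\n2,1\n"
config = {"seed": 42, "lr": 0.1, "num_weights": 3}

first = run_record(dataset, config, train(dataset, config))
rerun = run_record(dataset, config, train(dataset, config))
print(json.dumps(first, sort_keys=True))  # e.g. one line per run in an append-only log
print(first == rerun)  # same data + same config => same model hash
```

Production systems layer more on top (pinned library versions, immutable data snapshots, handling for nondeterministic GPU kernels), but content-addressed records like this are a common building block for experiment tracking and compliance audits.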

ML Infrastructure: Build vs Buy

When it comes to ML infrastructure, organizations often face a critical decision: whether to build a custom solution or invest in an existing platform. Both approaches have their merits and challenges.

Building Your Own ML Infrastructure

Developing a custom ML infrastructure gives you maximum flexibility and control over how your machine learning models are built, trained, and deployed. However, building your own infrastructure requires significant expertise and resources. It's a complex undertaking that demands a dedicated team and ongoing maintenance.

Key considerations for building your own infrastructure:

Platform Team Size: Building your own ML infrastructure requires a substantial investment in human resources. You need a large, dedicated platform team to design, implement, and maintain the infrastructure. This team should be prepared to handle ongoing development, troubleshooting, and scaling as your ML needs evolve.

Developer Knowledge and Experience: Your platform team must also possess specialized expertise in ML infrastructure. This goes beyond general software development skills. Developers need a deep understanding of ML workflows, distributed systems, data engineering, and the intricacies of various ML frameworks. They should be familiar with tools like Kubernetes, Docker, and cloud platforms, as well as ML-specific technologies like TensorFlow Extended (TFX) or MLflow.

Time Investment: Building robust ML infrastructure is a time-intensive process. It requires careful planning, iterative development, and continuous refinement. Be prepared for a significant upfront time investment before seeing tangible benefits, and factor in ongoing time for maintenance and upgrades.

Integration: Your developers will need to establish connections and integrations with other data systems within your organization. This includes setting up data pipelines, ensuring compatibility with existing data storage solutions, and creating interfaces with other business systems. The complexity of these integrations can vary greatly depending on your existing IT landscape.

Control: Building your own infrastructure allows you to maintain full oversight of its development and evolution. You can tailor every aspect to your specific needs, from the choice of technologies to the implementation of security measures. This level of control can be particularly valuable for organizations with unique requirements or those in highly regulated industries.

While building your own ML infrastructure offers maximum customization and control, it's crucial to carefully assess whether your organization has the necessary resources, expertise, and long-term commitment to successfully undertake this approach. The benefits of a custom-built solution must be weighed against the substantial investment required in terms of time, money, and specialized talent.

Buying an ML Infrastructure Solution

Purchasing an existing ML platform can significantly accelerate your time-to-value and often comes with professional support for complex deep learning applications. While it may lack some of the flexibility of a custom-built solution, it offers numerous advantages, especially for organizations looking to quickly scale their ML capabilities.

Key considerations for buying a solution:

Speed of Deployment: Get up and running quickly with pre-built components and workflows. Established platforms offer out-of-the-box solutions that can be implemented in a fraction of the time it would take to build from scratch. This rapid deployment can be crucial for organizations looking to gain a competitive edge or respond quickly to market demands.

Access to Expert Knowledge: Leverage the knowledge and best practices built into established platforms. These solutions encapsulate years of experience and refinement, offering optimized workflows and architectures that have been battle-tested across various use cases and industries.

Pre-Built Integrations: Many existing platforms come with partnerships and pre-established integrations. This ecosystem of connections can dramatically reduce the time and effort required to connect your ML infrastructure with other tools, data sources, and dependencies. From connections to cloud storage services such as Amazon S3 and Google Cloud Storage to popular data visualization tools, these ready-made integrations can streamline your entire ML pipeline.

Professional Services and Support: Access professional assistance for troubleshooting and optimization. Vendor support can be invaluable, especially when dealing with complex issues or when you need to scale rapidly. This support often extends beyond mere troubleshooting to include guidance on best practices and optimization strategies.

Constantly Updated Technology: Benefit from ongoing improvements and new features developed by the vendor. As the field of machine learning evolves, vendors continually update their platforms to incorporate the latest advancements (for example, in deep learning models, large language models, and generative AI), keeping your infrastructure cutting-edge without requiring internal development effort.

Maintain Developer Focus: By outsourcing platform creation and maintenance, you free your machine learning engineers to focus on what matters most: building and refining ML models that drive business value. This shift in focus can lead to faster innovation and more impactful ML projects.

Cost Predictability: While costs can increase with usage, they are often more predictable than the ongoing expenses of maintaining a custom-built solution. Many vendors offer scalable pricing models that allow you to start small and grow as your needs evolve.

Built-in Observability Metrics: A platform you invest in will likely come with out-of-the-box observability features like dashboards and log viewing.

Compliance and Security: Established platforms often come with built-in features to address common compliance requirements and security concerns, which can be particularly valuable in regulated industries.

The trade-offs of buying a solution should be carefully considered:

Vendor Lock-in: Depending on the platform, you may face challenges if you decide to switch vendors or move to a custom solution in the future. Consider the ease of data and model portability when evaluating platforms.

Customization Limitations: While many platforms offer extensive customization options, you may encounter limitations for highly specialized or unique requirements.

By carefully weighing these factors against your organization's specific needs, resources, and long-term ML strategy, you can make an informed decision on whether buying an ML infrastructure solution is the right choice for your team.

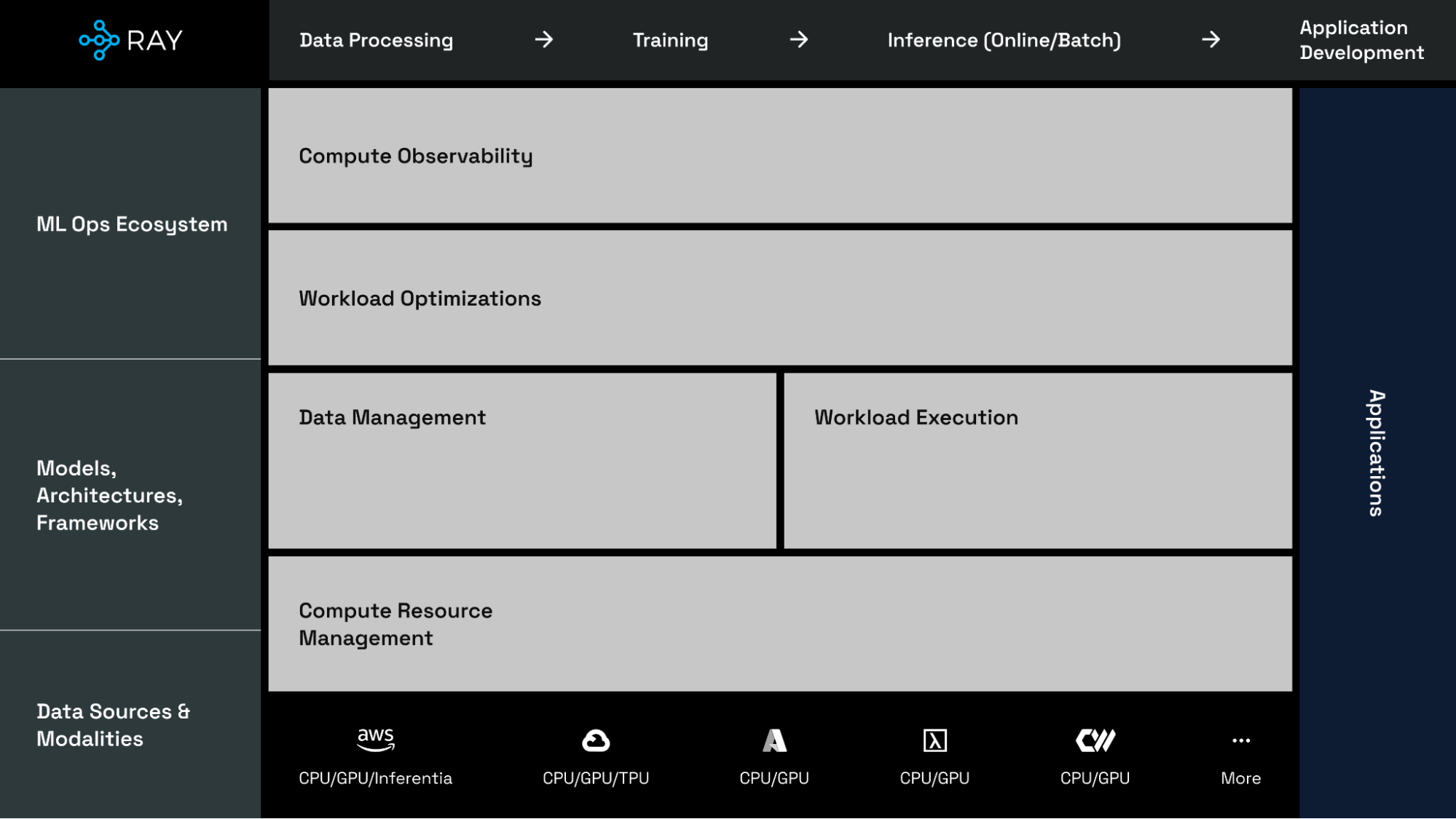

Ray: A Flexible Foundation for ML Infrastructure

Whether you choose to build or buy, Ray is the best foundation for ML infrastructure. Ray is the AI Compute Engine, providing a unified compute runtime that simplifies the ML ecosystem. Take a look at how you might use Ray in your infrastructure, whether you deploy it as your platform or integrate it into your existing framework. With Ray, you get:

Scalability: The same Python code scales seamlessly from a laptop to a large cluster, enabling smooth transitions from development to large-scale production.

Unified ML API: Easily switch between popular frameworks like XGBoost, PyTorch, and Hugging Face, reducing the complexity of managing multiple tools.

Flexibility: Run on any cluster, cloud, or Kubernetes environment, providing freedom in deployment choices.

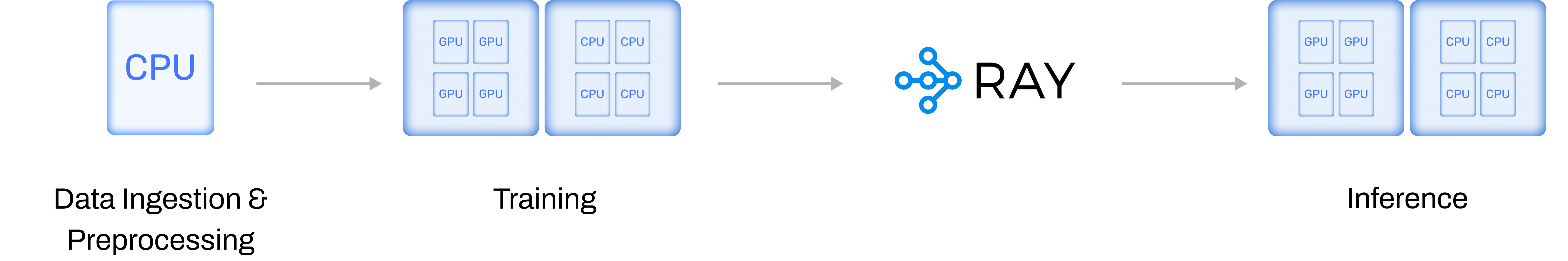

Heterogeneous Compute: Ray makes it easy to deploy your machine learning models across heterogeneous clusters that mix CPUs and GPU accelerators.

Ray Design Principles

Whether you're looking to optimize a specific part of your ML workflow or build a comprehensive, distributed ML platform, Ray's design principles ensure it can meet your needs effectively.

Focused on Compute-Intensive Tasks

Ray and its libraries are specifically designed to handle the heavyweight compute aspects of AI applications and services. This focus means Ray excels in areas where computational efficiency is crucial, such as unstructured data processing, distributed training of large models, hyperparameter tuning, and serving complex model pipelines. By optimizing for these compute-intensive tasks, Ray enables organizations to scale their ML workloads efficiently and effectively.

Integration-Friendly Architecture

Ray is built with the understanding that ML workflows often involve multiple tools and platforms. Rather than trying to be an all-in-one solution, Ray relies on external integrations for certain functionalities:

Storage: Ray integrates with your existing data storage solutions, so you can use your preferred data lakes or warehouses like Databricks or Snowflake.

Tracking: For experiment tracking and model versioning, Ray seamlessly integrates with popular tools like MLflow and Weights & Biases (W&B). This approach allows teams to maintain their existing tracking workflows while leveraging Ray's computational power.

Flexible Workflow Orchestration

Workflow orchestration needs can vary significantly between organizations, but regardless of your needs, Ray fits in seamlessly:

Optional External Orchestrators: Ray can work alongside workflow orchestrators like Apache Airflow. These tools can be used for scheduling recurring jobs, launching new Ray clusters for specific tasks, and managing non-Ray compute steps in a larger pipeline.

Built-in Task Orchestration: For lighter orchestration needs within a single Ray application, Ray tasks provide a built-in mechanism for managing task graphs. This feature allows for efficient scheduling and execution of interdependent tasks without the need for an external orchestrator.

Modular Library Structure

Ray's libraries are designed to be used flexibly, catering to different adoption strategies:

Independent Usage: Each Ray library (e.g., Ray Tune for hyperparameter tuning, Ray Serve for model serving) can be used independently. This means you can adopt Ray incrementally to address your specific needs without necessarily overhauling your entire ML infrastructure.

Integration with Existing Platforms: Ray libraries can be seamlessly integrated into existing ML platforms, augmenting their capabilities with Ray's distributed computing power.

Foundation for Ray-Native Platforms: For organizations looking to build a comprehensive ML platform, Ray's libraries provide a solid foundation. They can be combined to create a full-fledged, Ray-native ML platform tailored to specific organizational needs.

Scalability and Performance

Underlying all these principles is Ray's commitment to scalability and performance. Whether used for a single component of an ML workflow or as the basis for an entire platform, Ray is designed to efficiently scale from a laptop to a large cluster, ensuring that computational resources are used effectively.

By adhering to these design principles, Ray positions itself as a versatile and powerful tool in the ML infrastructure landscape. It offers the flexibility to enhance existing setups, the power to handle compute-intensive tasks, and the scalability to grow with an organization's ML needs.

Using Ray as your ML Platform

Ray’s AI libraries simplify the ecosystem of machine learning frameworks, platforms, and tools by providing a seamless, unified, and open experience for scalable ML:

Seamless Dev to Prod: Ray’s AI libraries reduce friction going from development to production. With Ray and its libraries, the same Python code scales seamlessly from a laptop to a large cluster.

Unified ML API and Runtime: Ray’s APIs enable swapping between popular frameworks, such as XGBoost, PyTorch, and Hugging Face, with minimal code changes. Everything from training to serving runs on a single runtime (Ray + KubeRay).

Open and Extensible: Ray is fully open-source and can run on any cluster, cloud, or Kubernetes. Build custom components and integrations on top of scalable developer APIs.

This is enhanced by the fact that you can pick and choose which Ray AI libraries you want to use.

Example ML Platforms built on Ray

Several companies have successfully built their ML platforms on Ray:

Shopify's Merlin: Enables rapid iteration and scaling of distributed applications for product categorization and recommendations.

Spotify: Uses Ray for advanced applications such as personalizing content recommendations and optimizing radio track sequencing.

Instacart's Griffin: Leveraged Ray to triple their ML platform capabilities in just one year.

These examples demonstrate Ray's versatility and scalability across different industries, systems, and use cases.

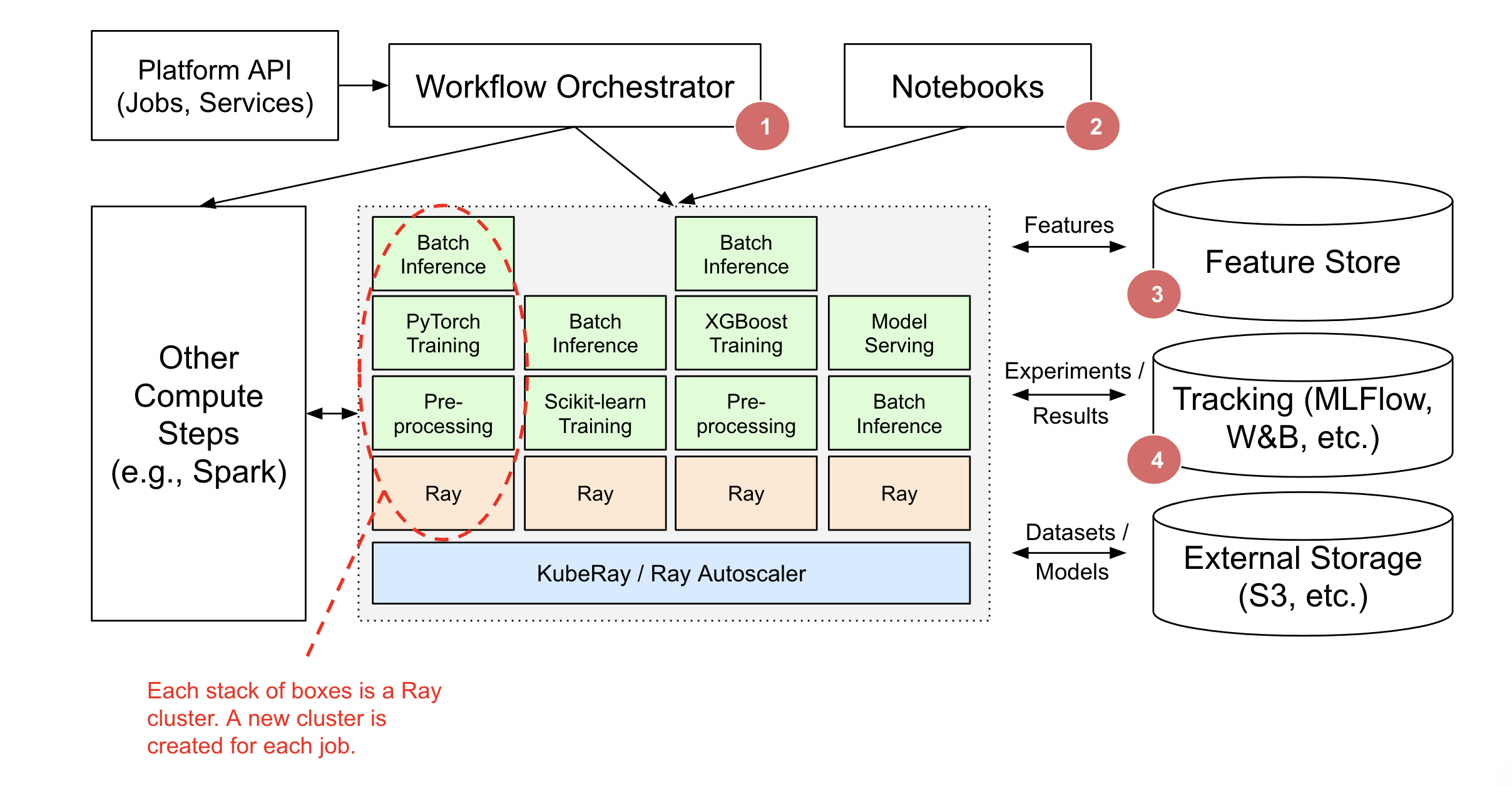

Integrating Ray into Your Existing ML Platform

You may already have an existing machine learning platform but want to use some subset of Ray’s ML libraries. For example, an ML engineer may want to use Ray within an ML platform their organization has purchased (e.g., SageMaker, Vertex AI).

Ray can complement existing machine learning platforms by integrating with existing pipeline/workflow orchestrators, storage, and tracking services, without requiring a replacement of your entire ML platform.

In the above diagram:

A workflow orchestrator such as Airflow, Oozie, SageMaker Pipelines, etc. is responsible for scheduling and creating Ray clusters and running Ray apps and services. The Ray application may be part of a larger orchestrated workflow (e.g., Spark ETL, then training on Ray).

Lightweight orchestration of task graphs can be handled entirely within Ray. External workflow orchestrators will integrate nicely but are only needed if running non-Ray steps.

Ray clusters can also be created for interactive use (e.g., Jupyter notebooks, Google Colab, Databricks Notebooks, etc.).

Ray Train, Data, and Serve provide integration with feature stores like Feast for training and serving.

Ray Train and Tune provide integration with tracking services such as MLflow and Weights & Biases.

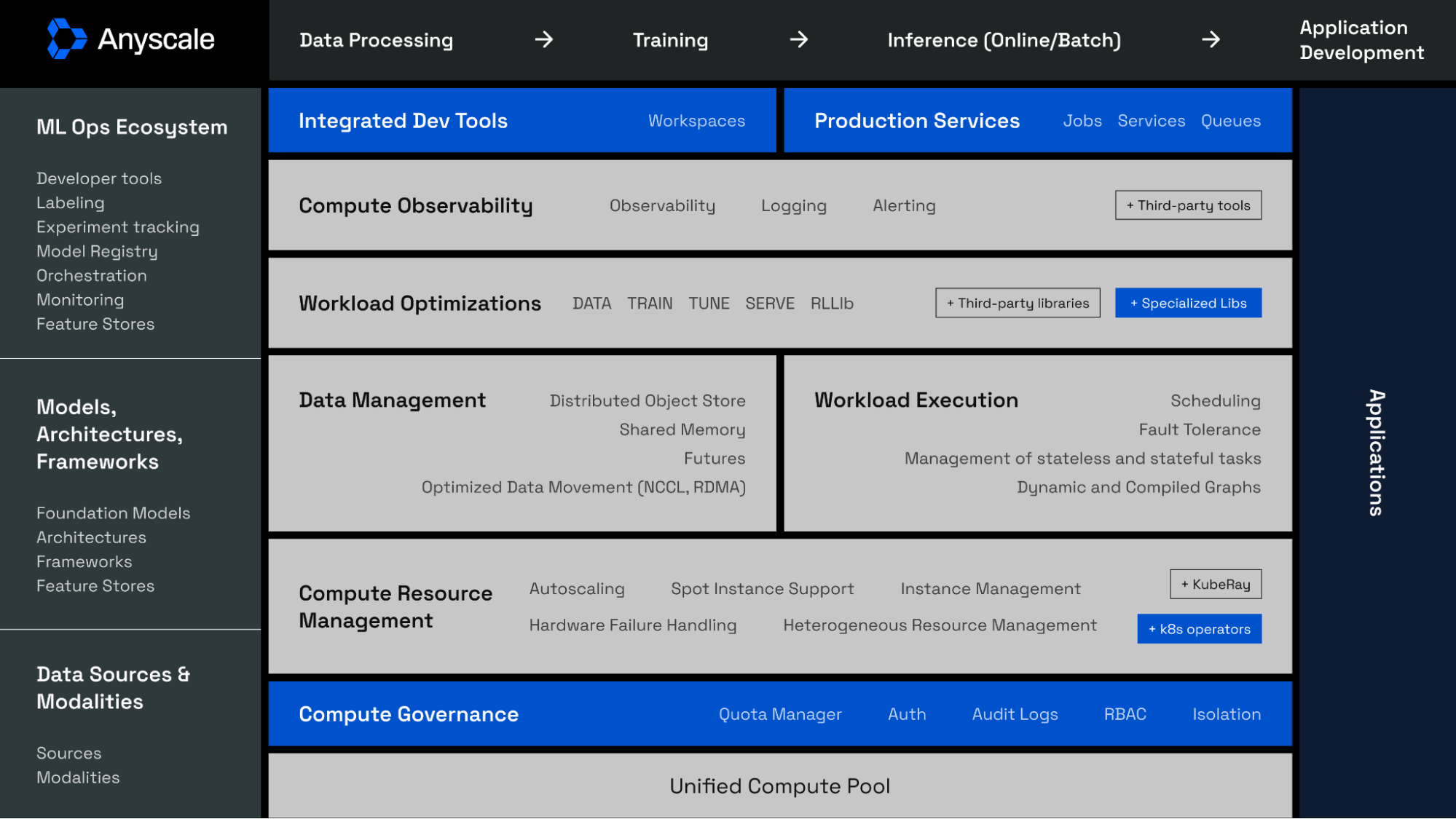

Ray vs Anyscale

Ray and its AI libraries provide a unified compute runtime for teams looking to simplify their ML platform. Ray’s libraries such as Ray Train, Ray Data, and Ray Serve can be used to compose end-to-end ML workflows, providing features and APIs for data preprocessing as part of training, and for transitioning from training to serving.

Anyscale, created by the minds behind Ray, is the best place to run Ray. With Anyscale, you get a configurable AI platform whose components work together to give your AI/ML builders unbeatable tooling to get to production with speed and cost efficiency.

Best Practices for ML Infrastructure

Regardless of whether you build or buy, consider these best practices when approaching ML infrastructure:

Start with Clear Objectives: Understand your organization's ML goals and align your infrastructure accordingly.

Plan for Scale: Design your infrastructure to handle growth in data volume, model complexity, and user demands.

Prioritize Data Quality: Implement robust data pipelines and validation processes to ensure high-quality inputs for your models.

Embrace Automation: Automate repetitive tasks like data preprocessing, model training, and deployment to improve efficiency.

Monitor Performance: Implement comprehensive monitoring to track both infrastructure and model performance.

Ensure Security and Compliance: Build in security measures and compliance checks from the ground up.
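As one small, hedged illustration of the "Prioritize Data Quality" practice, the framework-free Python sketch below checks a batch of records for missing and out-of-range values before it reaches training. It assumes records arrive as dicts; `validate` and `ValidationReport` are hypothetical names, and the age bounds are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class ValidationReport:
    total: int
    missing: int
    out_of_range: int

    @property
    def ok(self) -> bool:
        # A batch passes only if every value is present and within bounds.
        return self.missing == 0 and self.out_of_range == 0


def validate(rows: list[dict], column: str, low: float, high: float) -> ValidationReport:
    """Count missing and out-of-range values in one numeric column."""
    missing = sum(1 for r in rows if r.get(column) is None)
    out_of_range = sum(
        1
        for r in rows
        if r.get(column) is not None and not (low <= r[column] <= high)
    )
    return ValidationReport(total=len(rows), missing=missing, out_of_range=out_of_range)


batch = [{"age": 34}, {"age": None}, {"age": 212}, {"age": 51}]
report = validate(batch, "age", low=0, high=120)
print(report, report.ok)
```

In practice a pipeline would halt or raise an alert when `report.ok` is false; dedicated validation libraries add schemas, type checks, and distribution-drift tests on top of simple checks like these.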

Conclusion

ML infrastructure forms the backbone of successful machine learning initiatives. Whether you choose to build a custom solution or opt for an existing platform, the key is to create a foundation that supports your team's productivity, ensures scalability, and enables rapid iteration on ML projects.

Having a flexible and robust ML infrastructure will be crucial for staying competitive and delivering value through AI-powered solutions. Remember, the right ML infrastructure isn't just about technology—it's about empowering your team to innovate, experiment, and deliver impactful ML solutions efficiently and at scale.
