17x faster than AWS SageMaker for data preprocessing

2x faster than Apache Spark for unstructured data preprocessing

Up to 4.5x faster than open source Ray for read-intensive data workloads

Up to 6x cheaper than AWS Bedrock and OpenAI for LLM batch inference

What is Ray Data?

Ray Data is a scalable data processing library for ML and AI workloads.

With flexible and performant APIs for distributed data processing, Ray Data enables offline batch inference as well as data preprocessing and ingest for ML training. Built on top of Ray Core, it scales effectively to large clusters and supports scheduling on both CPU and GPU resources. Ray Data also uses streaming execution to process large datasets efficiently and keep GPU utilization high.
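To make the idea of streaming execution concrete, here is a minimal plain-Python sketch. It is not Ray Data's API or implementation: data is read in fixed-size blocks, and each block flows through the transform as soon as it is produced, so only a bounded number of rows is held in memory at once. The names `read_blocks`, `map_batches`, `double`, and `BLOCK_SIZE` are hypothetical, loosely modeled on Ray Data's `Dataset.map_batches`, and everything runs in a single process.

```python
from typing import Callable, Iterable, Iterator, List

# Hypothetical block size for this sketch; Ray Data chooses its own block sizes.
BLOCK_SIZE = 4


def read_blocks(source: Iterable[int], block_size: int = BLOCK_SIZE) -> Iterator[List[int]]:
    """Yield the source in fixed-size blocks instead of loading it all at once."""
    block: List[int] = []
    for row in source:
        block.append(row)
        if len(block) == block_size:
            yield block
            block = []
    if block:  # flush the final, partial block
        yield block


def map_batches(blocks: Iterable[List[int]], fn: Callable[[List[int]], List[int]]) -> Iterator[List[int]]:
    """Apply fn to each block lazily; nothing runs until a consumer pulls a batch."""
    for block in blocks:
        yield fn(block)


def double(batch: List[int]) -> List[int]:
    """Hypothetical per-batch transform."""
    return [x * 2 for x in batch]


# range() is lazy too, so the full dataset is never materialized in this pipeline.
pipeline = map_batches(read_blocks(range(10)), double)
for batch in pipeline:
    print(batch)
```

Because every stage is a generator, memory use is governed by the block size rather than the dataset size, which is the property that lets a streaming engine handle datasets larger than memory.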

Use Cases

Offline Batch Inference

Ray Data offers an efficient and scalable solution for batch inference. Benchmark results include:

- 17x faster than SageMaker Batch Transform

- 2x faster than Spark for offline image classification
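A common batch inference pattern, which Ray Data supports by passing a callable class to `map_batches`, is to load the model once per worker and reuse it for every batch it processes. The sketch below shows only that pattern in plain Python, serially and on one "worker"; `ToyModel`, `Predictor`, and `batches` are hypothetical placeholders for a real model, worker, and data source.

```python
from typing import Dict, Iterator, List


class ToyModel:
    """Hypothetical stand-in for an expensive-to-load ML model."""

    load_count = 0  # counts how many times a model has been loaded

    def __init__(self) -> None:
        ToyModel.load_count += 1
        self.threshold = 0.5

    def predict(self, scores: List[float]) -> List[str]:
        return ["positive" if s >= self.threshold else "negative" for s in scores]


class Predictor:
    """Loads the model once in __init__, then reuses it for every batch."""

    def __init__(self) -> None:
        self.model = ToyModel()

    def __call__(self, batch: Dict[str, list]) -> Dict[str, list]:
        batch["label"] = self.model.predict(batch["score"])
        return batch


def batches(scores: List[float], size: int) -> Iterator[Dict[str, list]]:
    """Split the input into dict-of-lists batches of at most `size` rows."""
    for i in range(0, len(scores), size):
        yield {"score": scores[i : i + size]}


predictor = Predictor()  # one "worker": the model is loaded exactly once
results = [predictor(b) for b in batches([0.1, 0.7, 0.4, 0.9, 0.5], size=2)]
for r in results:
    print(r)
```

Loading in the constructor rather than inside `__call__` is the design choice that matters: model setup is paid once per worker instead of once per batch.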

Benefits

Maximize GPU and CPU Utilization

Leverage CPUs and GPUs in the same pipeline to increase GPU utilization and decrease costs.
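Mixing CPUs and GPUs in one pipeline pays off when the stages overlap: CPU workers prepare upcoming batches while the accelerator processes the current one. The plain-Python sketch below imitates this with two threads joined by a bounded queue, which also provides backpressure so the preprocessing stage cannot run arbitrarily far ahead. `cpu_preprocess` and `gpu_infer` are hypothetical stand-ins, no real GPU is involved, and the sketch does not reflect how Ray Data's scheduler actually works.

```python
import queue
import threading
from typing import List

SENTINEL = None  # marks the end of the stream


def cpu_preprocess(raw: List[int]) -> List[float]:
    """Hypothetical CPU-bound step, e.g. decoding and normalizing inputs."""
    return [x / 10 for x in raw]


def gpu_infer(batch: List[float]) -> float:
    """Hypothetical accelerator step; here it just sums the batch."""
    return sum(batch)


def producer(raw_batches: List[List[int]], q: queue.Queue) -> None:
    for raw in raw_batches:
        q.put(cpu_preprocess(raw))  # blocks while the queue is full (backpressure)
    q.put(SENTINEL)


def consumer(q: queue.Queue, out: List[float]) -> None:
    while True:
        batch = q.get()
        if batch is SENTINEL:
            break
        out.append(gpu_infer(batch))


raw_batches = [[1, 2], [3, 4], [5, 6]]
q: queue.Queue = queue.Queue(maxsize=2)  # at most 2 preprocessed batches buffered
results: List[float] = []

cpu = threading.Thread(target=producer, args=(raw_batches, q))
gpu = threading.Thread(target=consumer, args=(q, results))
cpu.start()
gpu.start()
cpu.join()
gpu.join()
print(results)
```

The queue bound is the knob that trades memory for overlap: a larger buffer hides more preprocessing jitter but holds more batches in memory.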

Built-in Fault Tolerance

Ray Data automatically recovers from out-of-memory failures and spot instance preemption.
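One common way to recover from transient failures such as a preempted worker is to re-run the failed unit of work. The sketch below shows that idea in plain Python: each batch is retried up to a fixed budget before the error is surfaced. It is a simplified illustration, not Ray Data's recovery mechanism (Ray Core exposes a related `max_retries` option on remote tasks); `TransientError`, `flaky_task`, and `MAX_RETRIES` are hypothetical.

```python
from typing import Callable, Dict, List

MAX_RETRIES = 3  # hypothetical retry budget per batch


class TransientError(Exception):
    """Stands in for a recoverable failure such as a preempted worker."""


def run_with_retries(task: Callable[[List[int]], List[int]], batch: List[int],
                     max_retries: int = MAX_RETRIES) -> List[int]:
    """Run task on batch, re-running it after a TransientError up to max_retries times."""
    attempt = 0
    while True:
        try:
            return task(batch)
        except TransientError:
            attempt += 1
            if attempt > max_retries:
                raise  # retry budget exhausted; surface the failure


# Hypothetical flaky task: fails the first time it sees each batch, then succeeds.
seen: Dict[int, int] = {}


def flaky_task(batch: List[int]) -> List[int]:
    key = batch[0]
    seen[key] = seen.get(key, 0) + 1
    if seen[key] == 1:
        raise TransientError(f"worker lost while processing batch starting at {key}")
    return [x + 1 for x in batch]


output = [run_with_retries(flaky_task, b) for b in ([1, 2], [3, 4])]
print(output)  # [[2, 3], [4, 5]]
```

Retrying is only safe when the task is idempotent, as `flaky_task` is for its output here; side effects would need deduplication.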

One Unified API

Work with your favorite ML frameworks and libraries, at scale. Ray Data supports the ML framework of your choice, from PyTorch to Hugging Face to TensorFlow and more.

Any Format, Any Data Type

Ray Data supports a wide variety of formats, including Parquet, images, JSON, text, CSV, and more, as well as data platforms such as Databricks and Snowflake.
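Supporting many formats usually comes down to decoding each one into a common row representation, after which the rest of the pipeline no longer cares where the data came from. The plain-Python sketch below does this for CSV and JSON Lines using only the standard library. Ray Data itself provides dedicated readers such as `ray.data.read_csv`, `ray.data.read_json`, and `ray.data.read_parquet`; the `read_rows` helper here is hypothetical.

```python
import csv
import io
import json
from typing import Dict, Iterator, TextIO


def read_rows(fmt: str, stream: TextIO) -> Iterator[Dict[str, str]]:
    """Decode CSV or JSON Lines into dict rows that share one schema."""
    if fmt == "csv":
        yield from csv.DictReader(stream)
    elif fmt == "jsonl":
        for line in stream:
            if line.strip():  # skip blank lines
                yield json.loads(line)
    else:
        raise ValueError(f"unsupported format: {fmt}")


csv_data = io.StringIO("id,text\n1,hello\n2,world\n")
# ids are strings here so both sources produce the same column types
jsonl_data = io.StringIO('{"id": "3", "text": "ray"}\n{"id": "4", "text": "data"}\n')

rows = list(read_rows("csv", csv_data)) + list(read_rows("jsonl", jsonl_data))
for row in rows:
    print(row["id"], row["text"])
```

Normalizing types at read time, as done here by keeping `id` a string in both sources, avoids schema mismatches when rows from different files are combined.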

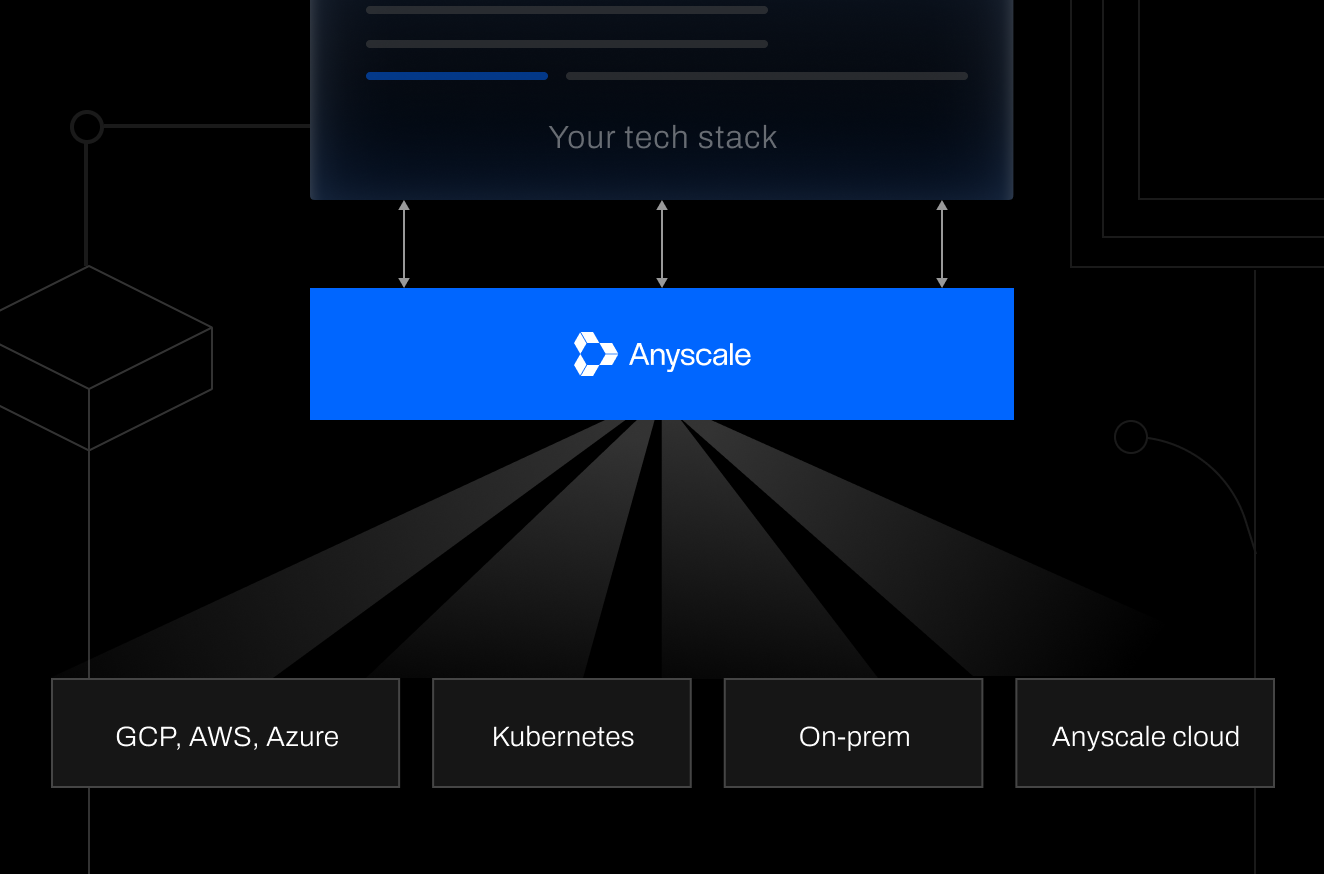

Supercharge Ray Data with Anyscale

Best-In-Class Data Processing for ML/AI


| Feature |  |  |  |  |
|---|---|---|---|---|
| Text Support |  |  |  |  |
| Image Support |  | - |  |  |
| Audio Support | Manual | - | Manual |  |
| Video Support | Manual | - | Manual |  |
| 3D Data Support | - | - | Binary | Binary |
| Task-Specific CPU & GPU Allocation | - | - |  |  |
| Stateful Tasks | - | - |  |  |
| Native NumPy Support | - | - |  |  |
| Native Pandas Support |  | - |  |  |
| Model Parallelism Support | - | - |  |  |
| Nested Task Parallelism | - | - |  |  |
| Fast Node Launching and Autoscaling | - | - | - | 60 sec |
| Fractional GPU Support | - | Limited |  |  |
| Load Datasets Larger Than Cluster Memory | - | - |  |  |
| Improved Observability | - | - | - |  |
| Autoscale Workers to Zero | - | Limited |  |  |
| Job Queues | - | - | - |  |
| Priority Scheduling | - | - | - |  |
| Accelerated Execution | - | - | - |  |
| Data Loading / Data Ingest / Last Mile Preprocessing |  |  |  |  |

Out-of-the-Box Templates & App Accelerators

Jumpstart your development process with custom-made templates, only available on Anyscale.

Batch Inference with LLMs

Run offline LLM inference on large-scale input data with Ray Data.

Computing Text Embeddings

Compute text embeddings with Ray Data and Hugging Face models.

Pre-Train Stable Diffusion

Pre-train a Stable Diffusion v2 model with Ray Train and Ray Data.


The Best Option for Data Processing At Scale

Get up to 90% cost reduction on unstructured data processing with Anyscale, the smartest place to run Ray.