RAG at Scale: 10x Cheaper Embedding Computations with Anyscale and Pinecone

Check out our updated RAG blog

For the most up-to-date content on how to run the best RAG pipelines with Ray, read our updated blog.

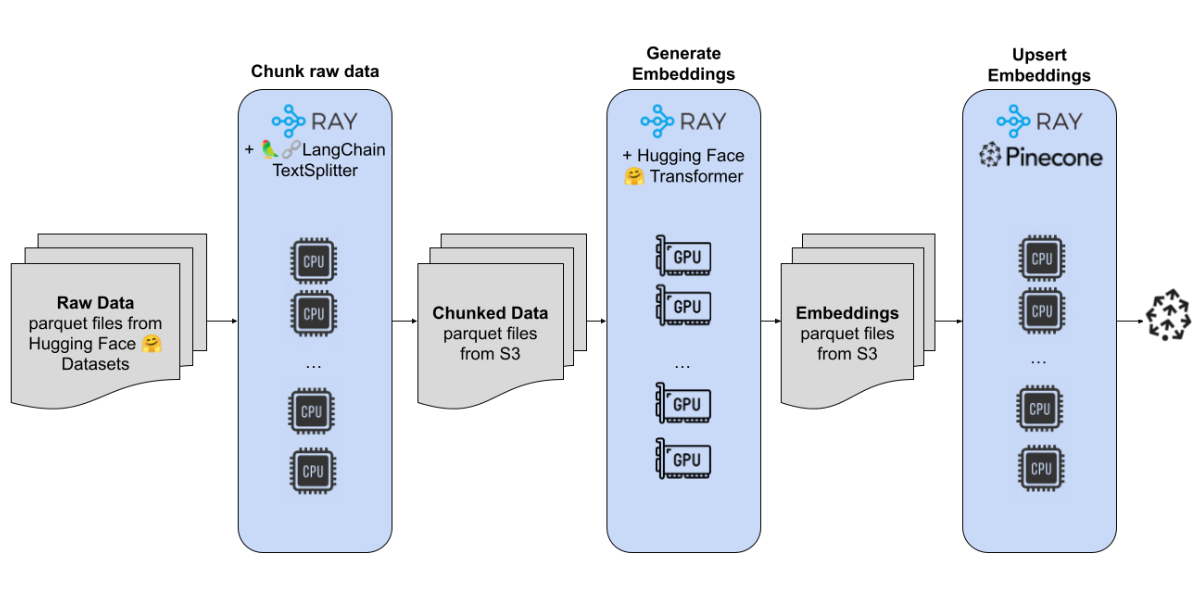

Previously, we showed that Ray Data is highly efficient for batch inference compared to other solutions (see the blog post for more details). In this post, we demonstrate that efficiency on a large-scale production workload with Pinecone.

The first step in building a Retrieval-Augmented Generation (RAG) application is to create embeddings of your data. Embeddings are vector representations of meaning and context that vector databases use for search and retrieval. Creating embeddings presents several challenges.

Scale: Terabyte-sized datasets are common, and the workloads are memory-intensive. With scale comes cost.

Heterogeneous compute: Embedding computations involve data ingest, preprocessing, chunking, and inference. This requires a mix of CPU and GPU compute. Adding to the challenge, a mixture of GPU types may be needed.

Multimodal data: RAG applications work with documents, images, and an increasing variety of data modalities.
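To put the scale challenge in numbers, here is a back-of-envelope sketch of the raw storage needed for the output vectors alone. It assumes 1 billion embeddings (the volume used in the cost comparison later in this post), 1,024 dimensions per vector (the output size of `thenlper/gte-large`, the default model in the example code), and 4-byte float32 values. It ignores IDs, metadata, source text, and index overhead, so treat it as a lower bound.

```python
def raw_embedding_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw size of a dense float matrix: one value per (vector, dimension) pair."""
    return num_vectors * dims * bytes_per_value


# Assumed workload: 1 billion float32 vectors of dimension 1024.
total = raw_embedding_bytes(num_vectors=1_000_000_000, dims=1024)
print(f"{total / 1e12:.2f} TB")  # 4.10 TB (decimal terabytes)
```

Even before counting the input text and intermediate chunks, the output alone runs to several terabytes. This is one reason streaming ingest, chunking, and inference across a cluster helps, rather than holding everything in one machine's memory.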

We are excited to announce that with Anyscale and Pinecone, users can now generate embeddings for use in a distributed vector database at about 10% of the cost of other popular offerings!

Breakthrough vector database delivers up to 50x cost savings

With the launch of Pinecone serverless, Pinecone is extending its lead as the technology and market leader by completely reinventing the vector database. This groundbreaking serverless vector database lets companies add unlimited knowledge to their GenAI apps at 2% of the prior cost. With Pinecone serverless, you can store billions of vectors and pay only for what you search.

Key innovations behind the cost savings and high performance include the following:

The separation of reads, writes, and storage significantly reduces costs for all types and sizes of workloads.

A pioneering architecture, with vector clustering on top of blob storage, provides low latency and always-fresh vector search over practically unlimited data sizes at a low cost.

Novel indexing and retrieval algorithms enable fast and memory-efficient vector search from blob storage without sacrificing retrieval quality.

A multi-tenant compute layer provides powerful and efficient retrieval for thousands of users, on demand. This enables a serverless experience in which developers don’t need to provision, manage, or even think about infrastructure, as well as usage-based billing that lets companies pay only for what they use.
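The general idea behind clustering vectors, instead of scanning every vector on every query, can be illustrated with a toy inverted-file (IVF) style index: each vector is stored in the partition of its nearest centroid, and a query scans only the few partitions whose centroids are closest to it. This is a generic textbook sketch with hand-picked centroids and made-up 2-D vectors, kept entirely in memory; it is not Pinecone's implementation, and the class and method names are hypothetical.

```python
import math
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class ToyClusteredIndex:
    """IVF-style index: each vector lives in the partition of its nearest centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.partitions = defaultdict(list)  # centroid index -> [(id, vector)]

    def _nearest_centroids(self, vector, n):
        ranked = sorted(
            range(len(self.centroids)),
            key=lambda i: cosine(vector, self.centroids[i]),
            reverse=True,
        )
        return ranked[:n]

    def add(self, vec_id, vector):
        # Route the vector to its single closest partition.
        self.partitions[self._nearest_centroids(vector, 1)[0]].append((vec_id, vector))

    def query(self, vector, top_k=1, n_probe=1):
        # Scan only the n_probe closest partitions instead of the whole index.
        candidates = [
            item
            for c in self._nearest_centroids(vector, n_probe)
            for item in self.partitions[c]
        ]
        candidates.sort(key=lambda item: cosine(vector, item[1]), reverse=True)
        return [vec_id for vec_id, _ in candidates[:top_k]]


index = ToyClusteredIndex(centroids=[[1.0, 0.0], [0.0, 1.0]])
index.add("a", [0.9, 0.1])
index.add("b", [0.8, 0.3])
index.add("c", [0.1, 0.9])
print(index.query([1.0, 0.2], top_k=2))             # ['a', 'b']
print(index.query([0.0, 1.0], top_k=2))             # ['c'] (only one partition probed)
print(index.query([0.0, 1.0], top_k=2, n_probe=2))  # ['c', 'b']
```

The trade-off is recall for speed: a true nearest neighbor that sits in an unprobed partition is missed, which is why cluster-based indexes typically probe more than one partition.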

Comparison with other embedding computation approaches

There are a handful of common approaches to computing embeddings:

OpenAI: API providers like OpenAI can handle embeddings computation, but require a separate solution for scalable data ingest and preprocessing. Flexibility in model choice is limited.

Spark: Distributed frameworks like Spark can scale data ingest and preprocessing, but need a separate solution for efficient embeddings computation.

Vertex AI: Google Cloud can handle data ingest and preprocessing, and Vertex AI can handle embedding computations. Like OpenAI, flexibility in model choice is limited.

None of the approaches above is an all-in-one solution that handles both data ingest and embedding computation at scale while allowing flexibility in the preprocessing step and the embedding model.

The Overall RAG Stack

Zooming out, embedding computation is just one part of the picture. Building a RAG application involves many pieces:

A high-performance, distributed vector database such as Pinecone is necessary to support efficient inserts and queries with the computed embeddings.

Hugging Face and LangChain provide open source models and utilities used for computing embeddings.

Finally, a distributed computation engine such as Ray ties all of these elements together and seamlessly manages the end-to-end workflow.
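Chunking is one of the utility steps in this stack. The example code in this post uses LangChain's `RecursiveCharacterTextSplitter`, which prefers to split on natural boundaries such as paragraph breaks, newlines, and spaces. As a simpler stand-in that shows only the basic idea, here is a fixed-size character window with overlap; the `sliding_chunks` helper and its parameters are hypothetical, not LangChain's API.

```python
def sliding_chunks(text: str, size: int, overlap: int) -> list[str]:
    """Split text into windows of at most `size` characters.

    Consecutive windows share `overlap` characters, so context near a
    window boundary appears in both neighboring chunks.
    """
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(sliding_chunks("abcdefghij", size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```

Fixed windows are cheap and predictable but can cut sentences mid-word; boundary-aware splitters trade a little complexity for chunks that read more naturally, which usually helps retrieval.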

Using Ray Data and the Pinecone API

The following example outlines how to use Ray Data and the Pinecone API to compute and upsert embeddings to Pinecone:

```python
import uuid

import numpy as np
import ray
import torch
import torch.nn.functional as F
from langchain.text_splitter import RecursiveCharacterTextSplitter
from pinecone import Pinecone
from transformers import AutoModel, AutoTokenizer

index_name = ...  # name of your existing Pinecone index


def chunk_row(row):
    # Split one input row into chunks, each with its own unique ID
    splitter = RecursiveCharacterTextSplitter(...)
    chunks = splitter.split_text(row["text"])
    return [
        {
            "id": str(uuid.uuid4()),
            "text": chunk,
        }
        for chunk in chunks
    ]


class ComputeEmbeddings:
    def __init__(self, model_name="thenlper/gte-large"):
        # To use a different embedding model, update the default name
        # above, or pass the new model name in the map_batches() call:
        # chunked_ds.map_batches(
        #     ComputeEmbeddings, fn_constructor_args=(new_model_name,)
        # )
        self.device = "cuda" if torch.cuda.is_available() else "cpu"
        self.model = AutoModel.from_pretrained(model_name).to(self.device).eval()
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)

    def __call__(self, batch):
        # Ray Data passes the text column as a NumPy array; the tokenizer
        # expects a list and must pad/truncate to build rectangular tensors
        model_input = self.tokenizer(
            batch["text"].tolist(),
            padding=True,
            truncation=True,
            return_tensors="pt",
        ).to(self.device)

        with torch.no_grad():
            outputs = self.model(**model_input)
        embeddings = self._average_pool(
            outputs.last_hidden_state, model_input["attention_mask"]
        )
        embeddings = F.normalize(embeddings, p=2, dim=1)

        batch["values"] = embeddings.cpu().numpy().astype(np.float32)
        return batch

    def _average_pool(self, last_hidden_states, attention_mask):
        # Mean over real tokens only: zero out padding, then divide by token count
        last_hidden = last_hidden_states.masked_fill(~attention_mask[..., None].bool(), 0.0)
        return last_hidden.sum(dim=1) / attention_mask.sum(dim=1)[..., None]


def pinecone_upsert(batch):
    client = Pinecone(api_key=...)
    index = client.Index(index_name)
    # Pinecone expects one record per vector, not Ray's column-oriented batch
    vectors = [
        {"id": str(vec_id), "values": values.tolist()}
        for vec_id, values in zip(batch["id"], batch["values"])
    ]
    result = index.upsert(vectors=vectors)
    return {"num_success": np.array([result.upserted_count])}


# replace with read API corresponding to your input file type
ds = ray.data.read_parquet("s3://bucket-raw-text")

# chunk the input text
chunked_ds = ds.flat_map(chunk_row)

# compute embeddings with a pool of model actors, one GPU each
# (concurrency and batch_size are example values; tune them for your cluster)
embeddings_ds = chunked_ds.map_batches(
    ComputeEmbeddings, concurrency=4, num_gpus=1, batch_size=64
)

# replace with write API corresponding to your desired output file type
embeddings_ds.write_parquet("s3://output-embeddings")

# upsert embeddings to Pinecone with the Pinecone API; reading back the written
# files avoids recomputing embeddings (and regenerating chunk IDs), and small
# batches keep each upsert request within Pinecone's request-size limits
upsert_results = (
    ray.data.read_parquet("s3://output-embeddings")
    .map_batches(pinecone_upsert, batch_size=100)
    .materialize()
)
```

For more details and additional examples on how to best leverage the Ray Data API, refer to the Ray Data documentation.
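The `_average_pool` helper in the example computes a masked mean: it averages each sequence's token vectors while skipping padding positions. The result is then L2-normalized, so that the dot product of two embeddings equals their cosine similarity. Here is a dependency-free sketch of the same arithmetic on plain Python lists, using toy numbers rather than real model output; the function names are hypothetical.

```python
import math


def masked_mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose mask entry is 1 (padding has mask 0)."""
    kept = [vec for vec, m in zip(token_vectors, attention_mask) if m]
    dims = len(token_vectors[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dims)]


def l2_normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


# One sequence of three tokens; the last position is padding.
tokens = [[1.0, 2.0], [3.0, 2.0], [99.0, 99.0]]
mask = [1, 1, 0]
pooled = masked_mean_pool(tokens, mask)
print(pooled)                # [2.0, 2.0] (the padding row is ignored)
unit = l2_normalize(pooled)  # both components are about 0.7071
```

Without the mask, the padding row would drag the average toward `[34.3, 34.3]`, which is why the PyTorch version zeroes padded positions before summing and divides by the count of real tokens.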

Cost comparison

To compare the price-performance of Anyscale with other solutions, we generated embeddings for the falcon-refinedweb dataset, which contains over 600 billion tokens. Embeddings were computed on Amazon Web Services (AWS) EC2 g5.xlarge instances, and preprocessing ran on CPU-only instances. The following table compares cost estimates for Anyscale and several popular alternatives to generate 1 billion embeddings (prices as of January 2024):

| Solution | Total cost for generating embeddings |
|---|---|
| OpenAI | $60,000 ($0.0001 per 1,000 tokens) |
| Vertex AI | $48,000 ($0.00002 per 1,000 characters) |
| Anyscale | $6,000 |

Note that the costs for the alternatives do not include data ingest and preprocessing, so Anyscale's cost advantage is even larger than these estimates suggest!
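The OpenAI and Vertex AI figures follow directly from their list prices. This short sketch reproduces them, assuming 600 billion input tokens and roughly 4 characters per token. The characters-per-token ratio is an assumption inferred here from the Vertex AI figure; the post does not state it.

```python
from decimal import Decimal

TOKENS = 600_000_000_000   # falcon-refinedweb token count, per this post
CHARS_PER_TOKEN = 4        # assumption: rough average characters per token

OPENAI_PER_1K_TOKENS = Decimal("0.0001")   # list price from the table
VERTEX_PER_1K_CHARS = Decimal("0.00002")   # list price from the table
ANYSCALE_TOTAL = Decimal("6000")           # Anyscale estimate from the table

openai_cost = OPENAI_PER_1K_TOKENS * TOKENS / 1000
vertex_cost = VERTEX_PER_1K_CHARS * TOKENS * CHARS_PER_TOKEN / 1000

print(f"OpenAI:    ${openai_cost:,.0f}")  # OpenAI:    $60,000
print(f"Vertex AI: ${vertex_cost:,.0f}")  # Vertex AI: $48,000
print(f"Anyscale is {openai_cost / ANYSCALE_TOTAL:.1f}x cheaper than OpenAI")     # 10.0x
print(f"Anyscale is {vertex_cost / ANYSCALE_TOTAL:.1f}x cheaper than Vertex AI")  # 8.0x
```

`Decimal` keeps the currency arithmetic exact. For this workload, the Anyscale estimate comes out about 10x cheaper than OpenAI and 8x cheaper than Vertex AI, before counting ingest and preprocessing for the alternatives.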

Conclusion

Generating embeddings is a critical task for developing successful RAG applications, and among the solutions compared here, Anyscale is the most cost-efficient for this workflow. To take full advantage of the scale that Anyscale enables, check out Pinecone’s distributed vector database service and try out the Anyscale Platform.