An informal introduction to reinforcement learning

This series on reinforcement learning was guest-authored by Misha Laskin while he was at UC Berkeley. Misha's focus areas are unsupervised learning and reinforcement learning.

Reinforcement learning (RL) has played a critical role in the rapid pace of AI advances over the last decade. In this post, we will explain in simple terms what RL is and why it is important, not only as a research subject but also for a diverse set of practical applications.

What is reinforcement learning?

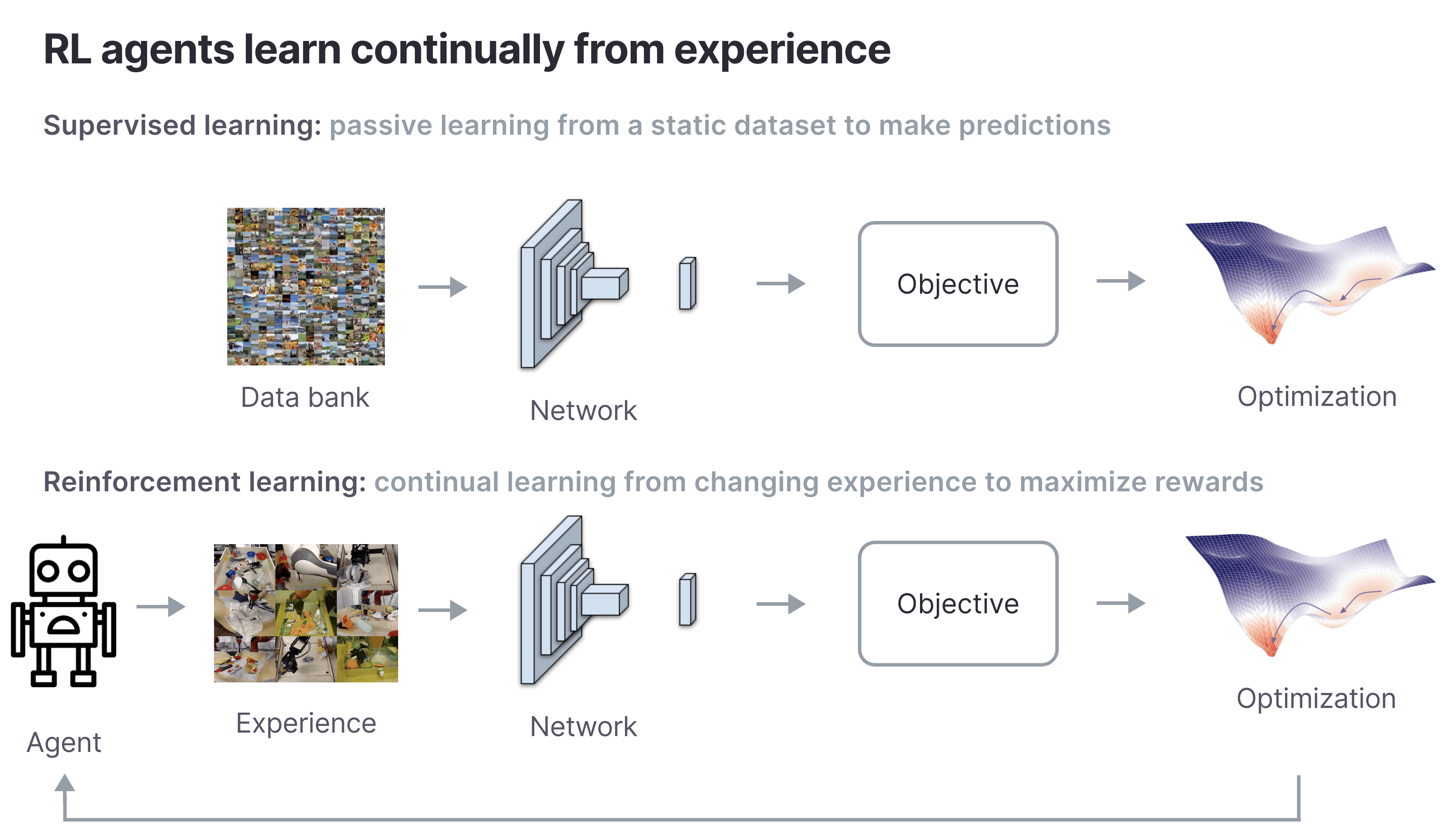

To understand what RL is, it’s easier to first understand what it is not. Most well-known machine learning algorithms make predictions but do not need to reason over a long time period or interact with the world. For example, given an image of an object, an image classifier will make a prediction about the identity of the object (e.g., an apple or an orange). This image classifier is passive. It doesn’t interact with the world and it doesn’t need to reason about how previous images influence its current prediction. Image classification is categorized as supervised learning but it is not RL.

Unlike passive predictive models like image classifiers, RL algorithms both interact with the world and need to reason over periods of time to achieve their goals. An RL algorithm’s goal is to maximize rewards over a given time period. Intuitively, it works similarly to operant conditioning in psychology, where behavior is shaped by rewards. For example, a common way to train a dog to learn a new trick is by rewarding it with a treat when it does something right. The dog wants to maximize the number of treats it gets and will learn new skills to maximize its reward.

RL agents continually learn from experience, whereas supervised learning agents learn to make predictions based on a static dataset.

Apple-picking robot

RL algorithms work in much the same way as training dogs to learn new tricks. Instead of food treats, RL agents receive numerical rewards. For example, suppose we drop a robot into a maze and want it to maximize the number of apples it picks up. We can assign a +1 reward each time the robot picks up an apple. At the end of a fixed time period, we count all the apples the robot picked up to determine its total reward. RL provides a mathematical framework for maximizing this total reward. Under suitable assumptions about the environment, some RL algorithms are guaranteed to converge to behavior that picks up the maximal possible number of apples.
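The reward bookkeeping described above can be sketched in a few lines of Python. This is a minimal illustration rather than a learning algorithm: the robot moves at random, and the one-dimensional corridor, the function name `run_episode`, and all of its parameters are assumptions made for the example.

```python
import random

def run_episode(apple_cells, num_cells=10, max_steps=20, seed=0):
    """Random-walk robot on a line of cells; +1 reward per apple picked up."""
    rng = random.Random(seed)
    apples = set(apple_cells)      # cells that still hold an apple
    position = 0                   # the robot starts at the left end
    total_reward = 0
    for _ in range(max_steps):     # fixed time period
        step = rng.choice([-1, 1])                       # action: left or right
        position = min(max(position + step, 0), num_cells - 1)
        if position in apples:     # picking up an apple yields +1
            apples.remove(position)
            total_reward += 1
    return total_reward            # total apples collected in the episode

print(run_episode(apple_cells=[2, 5, 7]))
```

An RL algorithm would replace the random choice of `step` with a policy that is improved over many episodes so that `total_reward` grows.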

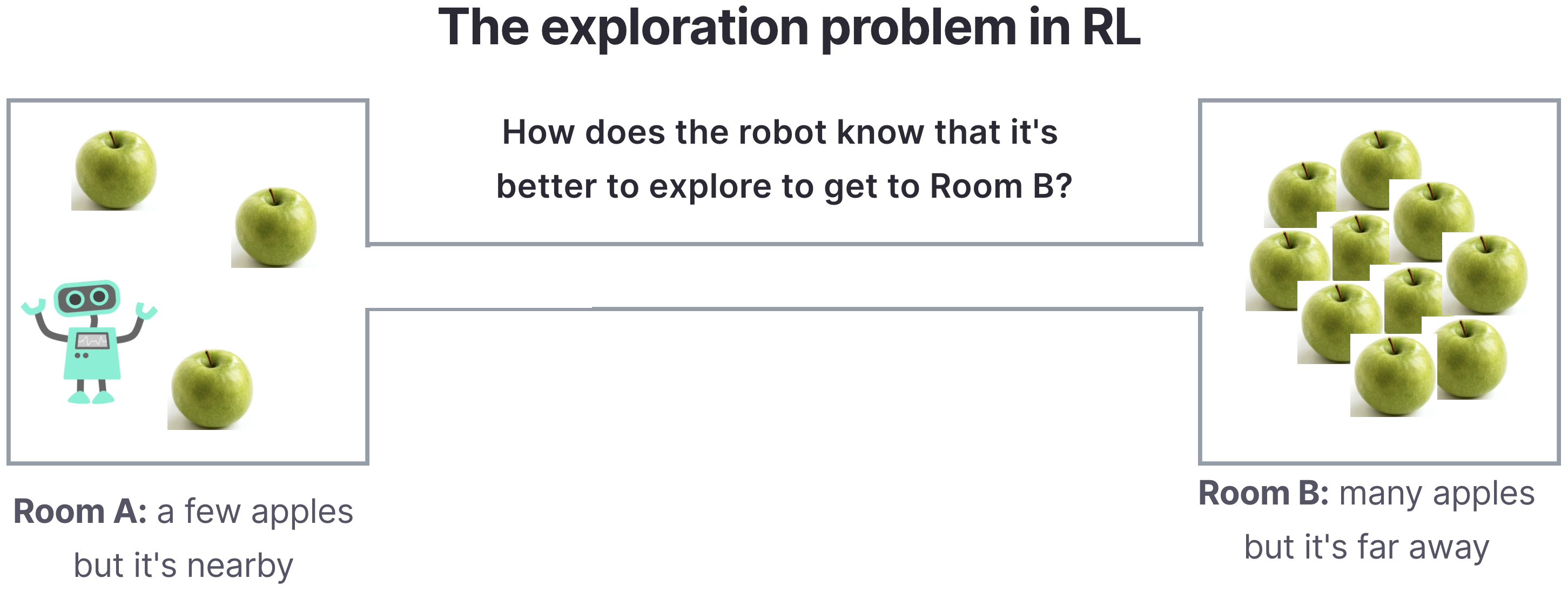

With this apple-picking robot example we can also illustrate a fundamental challenge with RL algorithms: the exploration problem. Suppose the robot is in a room with a moderate number of apples, but there exists another room down a long corridor with an entire apple tree. The best place for the robot to be is in the room with the apple tree, but it first has to traverse a long corridor with no rewards to get there. Given enough exploration, RL algorithms can discover the optimal solution on their own, but they can take a long time to train if exploration is hard.

The exploration problem in RL: Room A has few apples, but it's nearby. Room B has many more apples, but it's far away. How does the robot know that it's better to explore to get to Room B?
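The two-room scenario can be reproduced in a toy setting. The sketch below uses tabular Q-learning with epsilon-greedy exploration, a standard RL technique chosen here purely for illustration. The five-cell corridor, the reward values (+1 for Room A, +10 for Room B), the hyperparameters, and the function names are all assumptions for the example.

```python
import random

N_CELLS = 5                              # cell 0 is Room A, cell 4 is Room B
START = 1                                # the robot starts next to Room A
REWARDS = {0: 1.0, N_CELLS - 1: 10.0}    # few apples nearby, many far away
LEFT, RIGHT = 0, 1

def greedy_action(q, state):
    # Ties go to LEFT, so an untrained robot heads for the nearby room.
    return RIGHT if q[state][RIGHT] > q[state][LEFT] else LEFT

def train(epsilon, episodes=2000, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning; epsilon is the probability of a random action."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]   # q[state][action]
    for _ in range(episodes):
        state = START
        for _ in range(100):                   # cap on episode length
            if rng.random() < epsilon:
                action = rng.choice([LEFT, RIGHT])   # explore
            else:
                action = greedy_action(q, state)     # exploit
            next_state = state + (1 if action == RIGHT else -1)
            reward = REWARDS.get(next_state, 0.0)
            done = next_state in REWARDS       # entering a room ends the episode
            target = reward if done else gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            if done:
                break
            state = next_state
    return q

for eps in (0.0, 0.5):
    choice = greedy_action(train(epsilon=eps), START)
    print(f"epsilon={eps}: heads", "right to Room B" if choice == RIGHT else "left to Room A")
```

With `epsilon=0.0` the robot never tries the corridor, so it keeps returning to Room A. With `epsilon=0.5` it occasionally wanders far enough to find Room B, and the larger reward then propagates back to the start cell.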

A brief history of reinforcement learning

While parts of RL theory started to be developed as early as the mid-1950s, the ideas that have shaped modern RL weren’t brought together until 1989, when Chris Watkins formalized Q-learning, an algorithm that became a cornerstone for training RL agents. But it wasn’t until after the breakthrough AlexNet paper in 2012 that RL took off in the modern era. That result, often called the ImageNet moment, marked the first time a deep neural network substantially outperformed prior approaches to image classification on complex real-world images.

Shortly after, in 2013, Vlad Mnih and collaborators at DeepMind released the Deep Q Network (DQN), which was the first system to learn to play Atari video games directly from pixel inputs. This groundbreaking achievement paved the way for modern RL, called deep RL, because it used deep neural networks to estimate the expected future reward of each available action. The combination of deep neural networks and RL that DQN demonstrated laid the groundwork for subsequent advances like AlphaGo and AlphaStar.

Practical applications of reinforcement learning

While most RL research today focuses on algorithms for developing reward-maximizing agents in simulated settings, the RL framework is quite general and is already being applied to a variety of practical problems.

Recommender systems: Recommender systems aim to recommend products or content that a user is more likely to buy or engage with. The number of possible products a system could recommend is quite large, and efficient algorithms are needed not only to select the products a user will like most, but also to make sure these selections increase the company’s desired long-term metrics. Examples of RL being used to power recommender systems include product recommendation at Alibaba and video recommendations at YouTube.

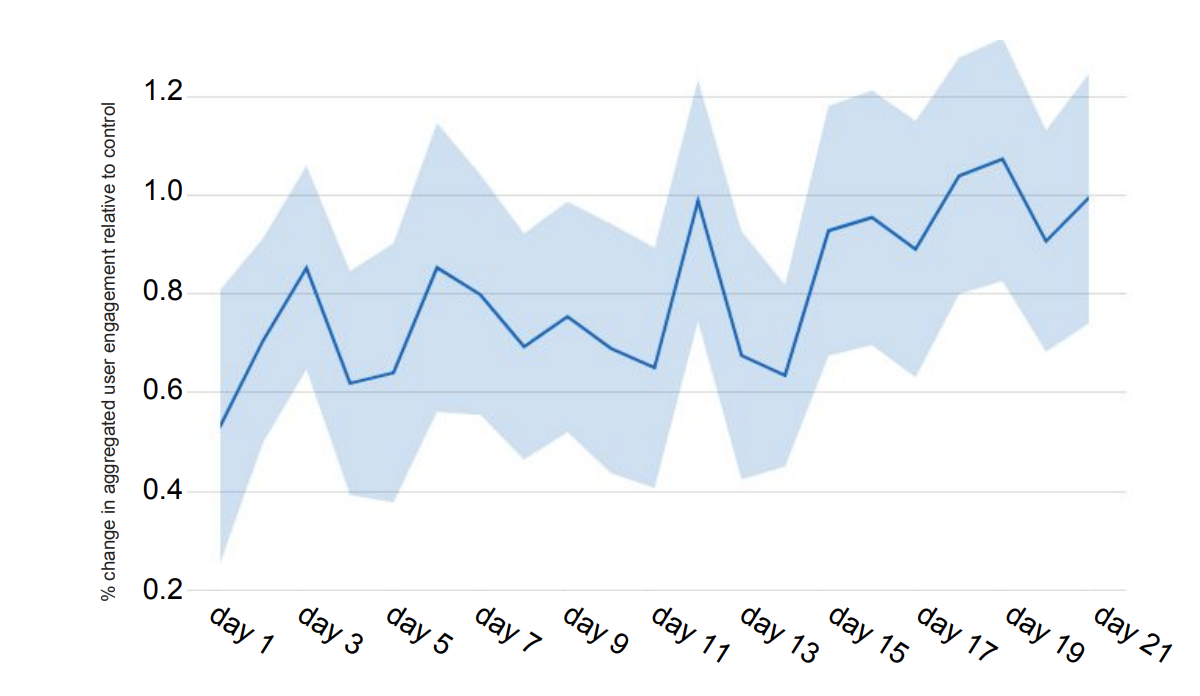

Over the last few years, new RL algorithms have been developed specifically tailored for recommender systems. One example is SlateQ, which uses RL to present a slate of items to the user. SlateQ is designed to deal with the combinatorially large space of potential product recommendations to drive overall user satisfaction.

SlateQ increases user engagement over time. Source: https://arxiv.org/abs/1905.12767

One important aspect to keep in mind when deploying RL algorithms is to make sure that the reward function is set up to maximize the company’s long-term metrics, such as satisfaction with the company’s product. For example, in the mid-2010s YouTube learned that while their recommendations were increasing user engagement, they were not increasing long-term user satisfaction. This was due to implicit bias: it is unclear whether a user clicks on a video because they intended to watch it or simply because the recommendation was present on the screen. Since then, Google has released a new RL algorithm that addresses implicit bias.

Autonomous vehicle navigation: RL is being used today to plan paths and control autonomous vehicles (AVs) at Tesla. One example of RL usage for AVs is planning a path to autonomously park a car. The number of possible paths a car could explore grows exponentially with the length of the plan, which makes path planning with traditional methods slow and inefficient. At AI Day, Tesla showcased an algorithm based on AlphaGo that finds plans faster and more efficiently.

Cooling data centers: Another useful application of RL has been cooling data centers at Google. Data centers consume a lot of energy, and the computers they house dissipate it as heat. Maintaining an operational data center requires sophisticated cooling systems, which are expensive to run. With RL, Google was able to deploy cooling on demand and eliminate wasteful periods where cooling was not needed.

In this post, we gave an informal introduction to what RL is and why it’s useful. The three examples above are just a few of the many practical applications of RL. It’s exciting that one algorithmic framework is general enough to apply to such a broad range of problems.

Next up, we'll explore how the mathematical framework for RL actually works. Or, check out our other resources below for more on RL:

Register for the upcoming Production RL Summit, a free virtual event that brings together ML engineers, data scientists, and researchers pioneering the use of RL to solve real-world business problems

Learn more about RLlib: industry-grade reinforcement learning

Check out our introduction to reinforcement learning with OpenAI Gym, RLlib, and Google Colab

Get an overview of some of the best reinforcement learning talks presented at Ray Summit 2021