Build and Scale a Powerful Query Engine with LlamaIndex and Ray

By Jerry Liu and Amog Kamsetty | June 26, 2023

In this blog, we showcase how you can use LlamaIndex and Ray to build a query engine to answer questions and generate insights about Ray itself, given its documentation and blog posts.

We’ll give a quick introduction to LlamaIndex + Ray, and then walk through a step-by-step tutorial on building and deploying this query engine. We use both Ray Datasets to parallelize building indices and Ray Serve to build deployments.

Introduction

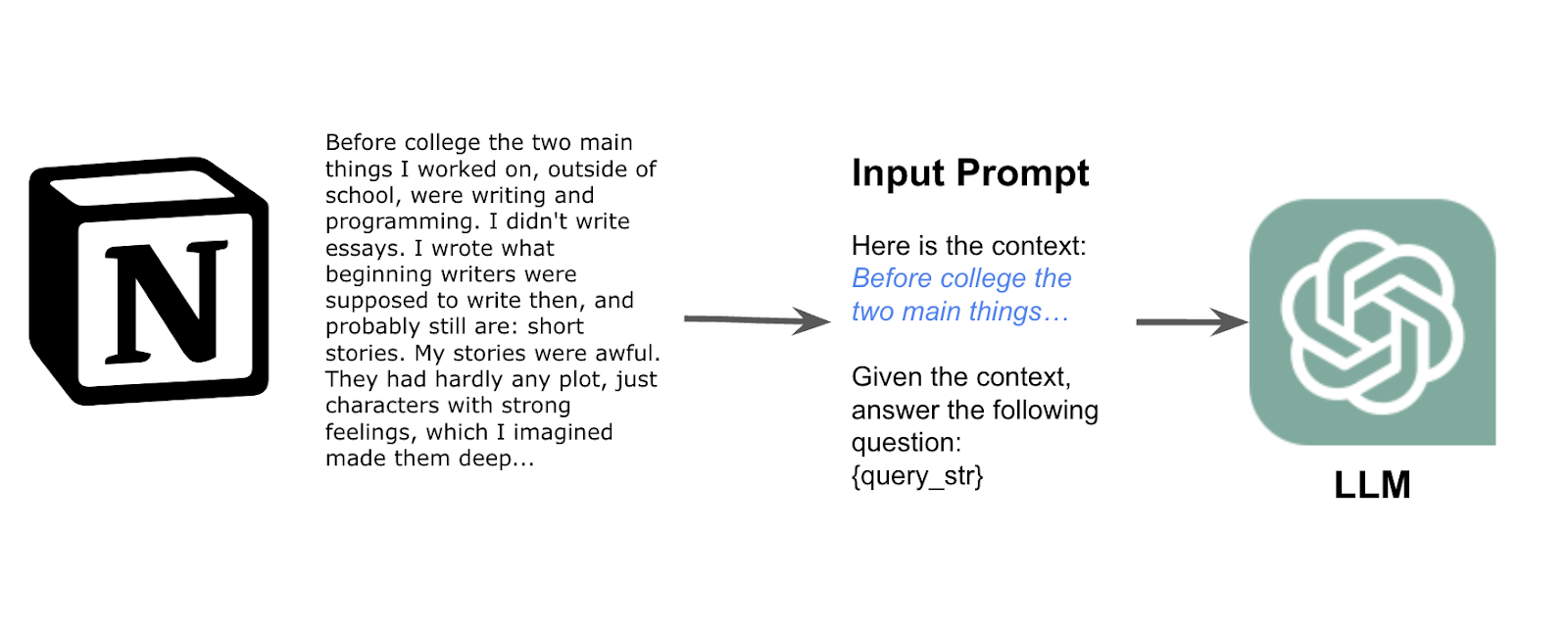

Large Language Models (LLMs) offer the promise of allowing users to extract complex insights from their unstructured text data. Retrieval-augmented generation pipelines have emerged as a common pattern for developing LLM applications, allowing users to effectively perform semantic search over a collection of documents.

Example of retrieval-augmented generation. Relevant context is pulled from a set of documents and included in the LLM input prompt.
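To make the pattern concrete, here is a minimal, dependency-free sketch of the retrieval step and prompt assembly. It is an illustration, not how LlamaIndex retrieves: relevance is scored by simple word overlap as a stand-in for embedding similarity, and `retrieve`, `build_prompt`, and the prompt template are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: List[str]) -> str:
    """Insert the retrieved context into the LLM input prompt (hypothetical template)."""
    context_str = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_str}\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    "Ray Serve is a library for serving models on a Ray cluster.",
    "Ray Data loads and transforms datasets in parallel.",
    "LlamaIndex is a data framework for LLM applications.",
]
question = "What is Ray Serve?"
prompt = build_prompt(question, retrieve(question, docs, top_k=1))
print(prompt)
```

The resulting prompt string is what would be sent to the LLM; swapping word overlap for embedding similarity is exactly what the vector index later in this post does.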

However, when productionizing these applications over many different data sources, there are a few challenges:

1. Tooling for indexing data from many different data sources
2. Handling complex queries over different data sources
3. Scaling indexing to thousands or millions of documents
4. Deploying a scalable LLM application into production

Here, we showcase how LlamaIndex and Ray are the perfect setup for this task.

LlamaIndex is a data framework for building LLM applications, and it solves Challenges #1 and #2. It provides a comprehensive toolkit that lets users connect their private data to a language model: tools to first ingest and index their data (converting unstructured and structured data in many formats into a form the language model can use), and then to query it.

Ray is a powerful framework for scalable AI that solves Challenges #3 and #4. We can use it to dramatically accelerate ingestion, inference, and pretraining, and to effortlessly deploy and scale LlamaIndex's query capabilities in the cloud.

More specifically, we showcase a very relevant use case: highlighting Ray features that are present in both the Ray documentation and the Ray blog posts!

Data Ingestion and Embedding Pipeline

We use LlamaIndex + Ray to ingest, parse, embed, and store Ray docs and blog posts in parallel. These steps are largely the same for both data sources, so below we show them for the documentation only.

Code for this part of the blog is available here.

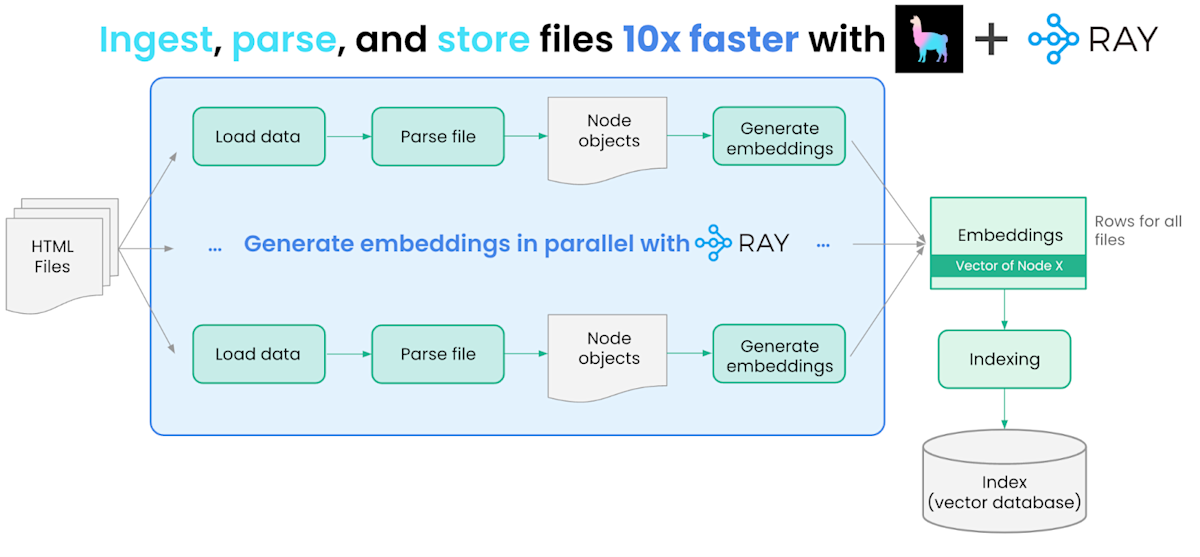

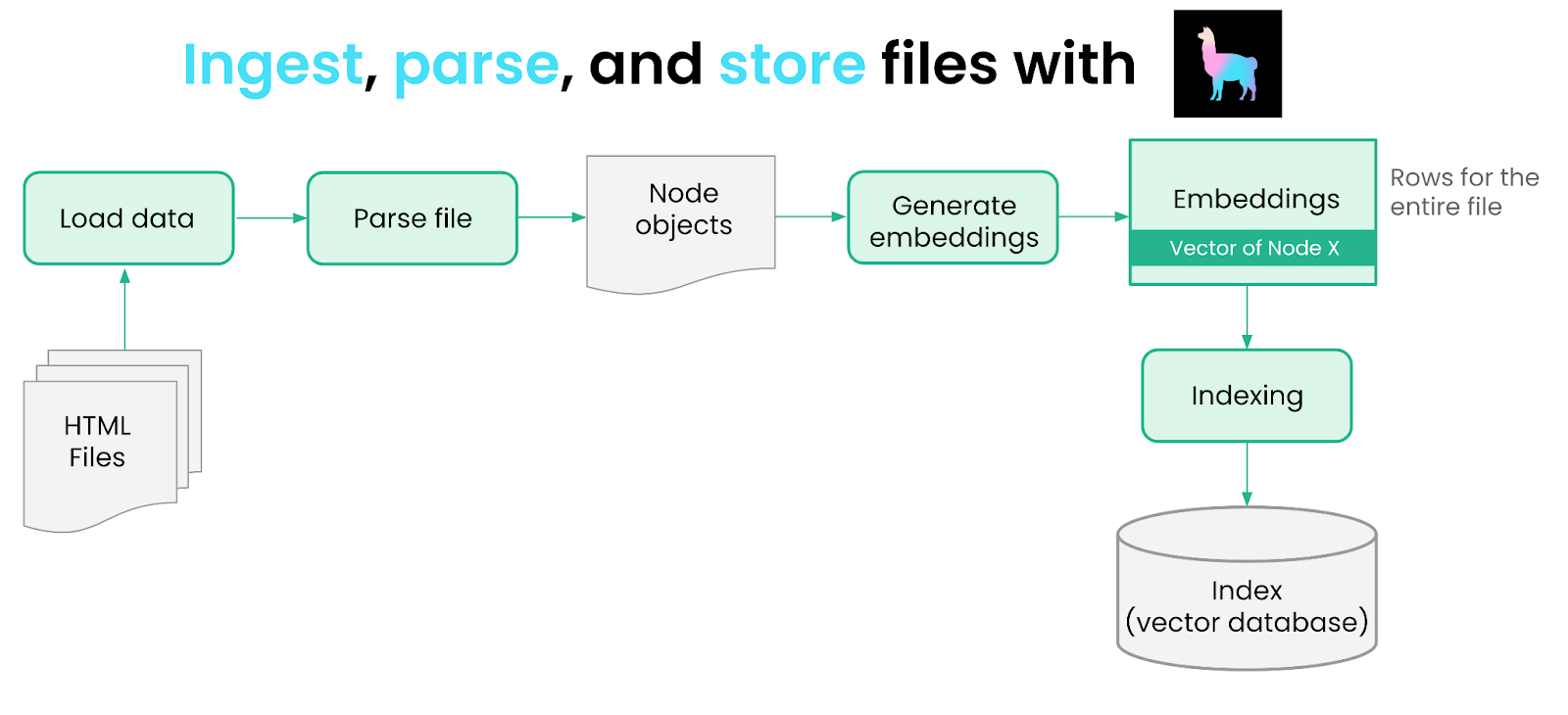

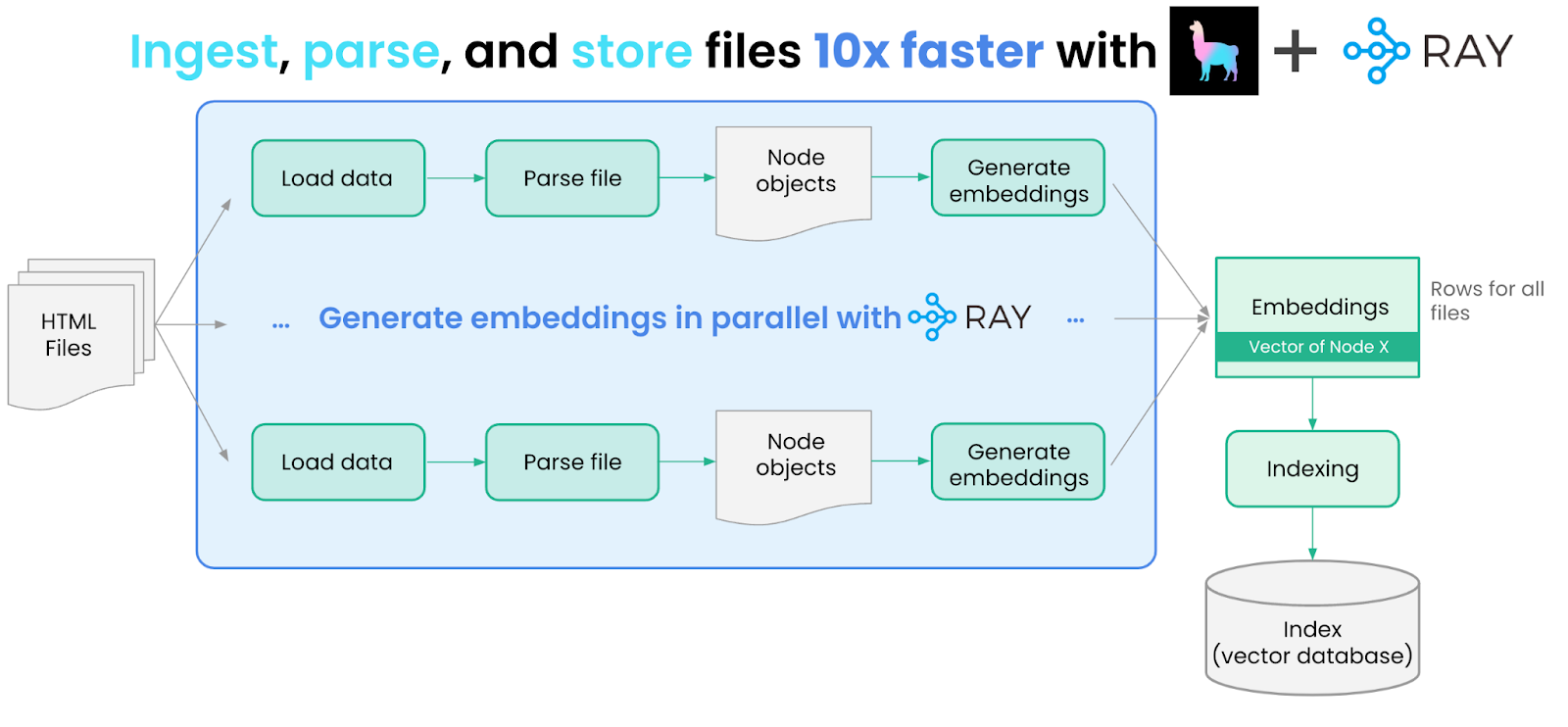

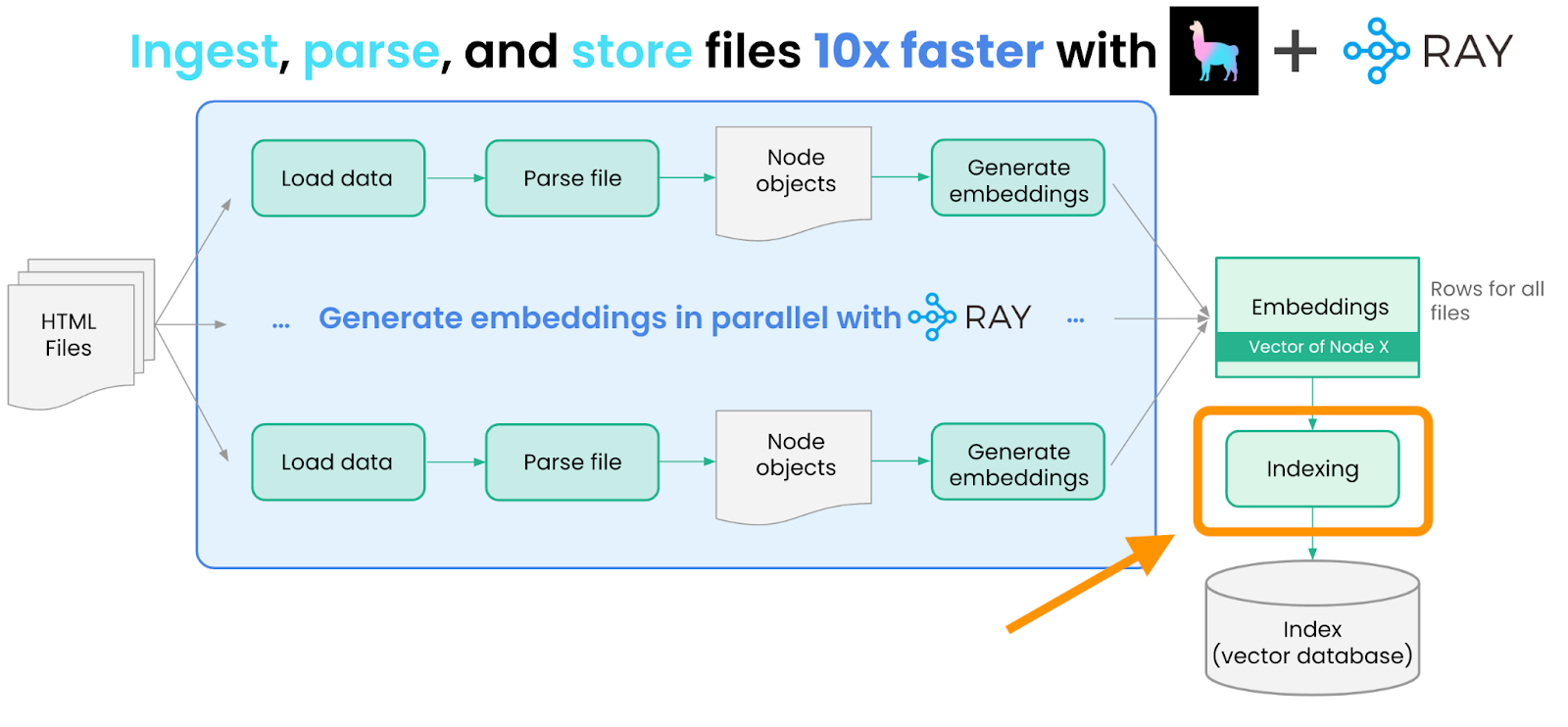

Sequential pipeline with “ingest”, “parse” and “embed” stages. Files are processed sequentially, resulting in poor hardware utilization and long computation time.

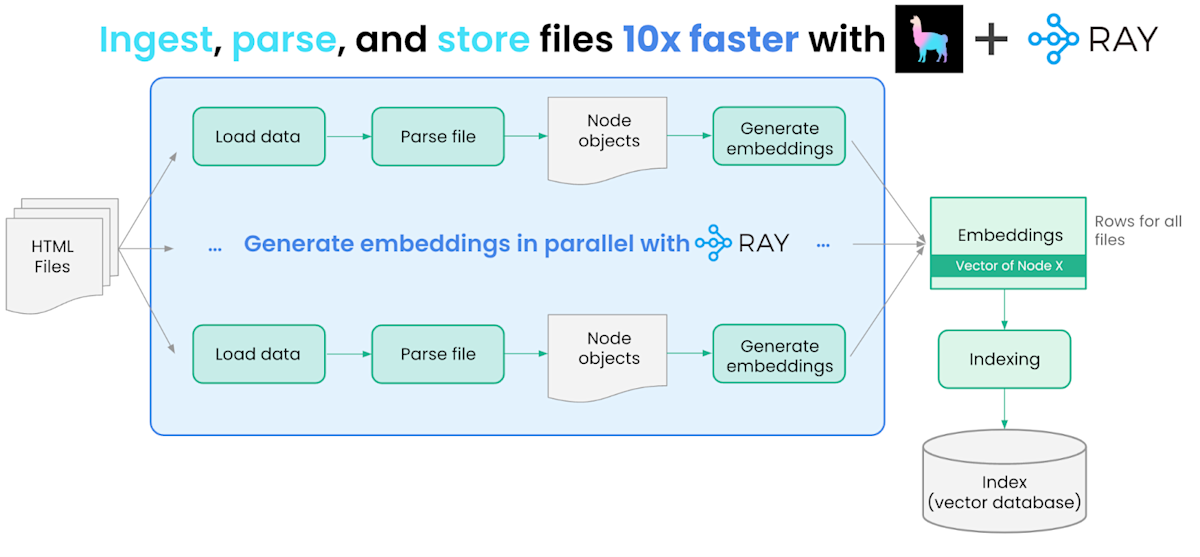

Parallel pipeline. Thanks to Ray, we can process multiple input files simultaneously. Parallel processing performs much better because the hardware is better utilized.
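The difference between the two pipelines can be sketched with the standard library alone. This is an analogy, not Ray: a `ThreadPoolExecutor` overlaps I/O-bound work such as downloads and file reads on one machine (CPU-bound stages would need processes), whereas Ray schedules the same kind of per-file work across all the CPUs and GPUs of a cluster. The `ingest`, `parse`, and `embed` functions are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def ingest(path: str) -> str:
    # Placeholder for reading or downloading the file at `path`.
    return f"<html>contents of {path}</html>"


def parse(raw: str) -> str:
    # Placeholder for HTML parsing: strip the surrounding markup.
    return raw.removeprefix("<html>").removesuffix("</html>")


def embed(text: str) -> List[float]:
    # Placeholder "embedding": a tiny feature vector (length, number of spaces).
    return [float(len(text)), float(text.count(" "))]


def process_file(path: str) -> List[float]:
    # Each file flows through all three stages independently of the others.
    return embed(parse(ingest(path)))


def run_sequential(paths: List[str]) -> List[List[float]]:
    # One file at a time: the next file waits until the previous one is done.
    return [process_file(p) for p in paths]


def run_parallel(paths: List[str], workers: int = 4) -> List[List[float]]:
    # Several files in flight at once; `map` returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_file, paths))


paths = [f"docs/page_{i}.html" for i in range(8)]
results = run_parallel(paths)
```

Because every file is processed independently, the parallel version produces the same results as the sequential one; only the scheduling changes.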

Data Loading Process

We start by ingesting these two sources of data. We first fetch both data sources and download the HTML files.

We then need to load and parse these files. We can do this with the help of LlamaHub, our community-driven repository of 100+ data loaders for various APIs, file formats (.pdf, .html, .docx), and databases. We use an HTML data loader offered by Unstructured.

```python
from typing import Dict, List
from pathlib import Path

from llama_index import download_loader
from llama_index import Document

# Step 1: Logic for loading and parsing the files into llama_index documents.
UnstructuredReader = download_loader("UnstructuredReader")
loader = UnstructuredReader()

def load_and_parse_files(file_row: Dict[str, Path]) -> List[Dict[str, Document]]:
    documents = []
    file = file_row["path"]
    if file.is_dir():
        return []
    # Skip all non-html files like png, jpg, etc.
    if file.suffix.lower() == ".html":
        loaded_doc = loader.load_data(file=file, split_documents=False)
        loaded_doc[0].extra_info = {"path": str(file)}
        documents.extend(loaded_doc)
    return [{"doc": doc} for doc in documents]
```

Unstructured offers a robust suite of parsing tools for various file types. It helps sanitize HTML documents by stripping out information like tags and formatting the text accordingly.
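As a rough illustration of what this sanitization involves, and not of Unstructured's actual implementation, the sketch below uses Python's built-in `html.parser` to drop tags, skip `<script>`/`<style>` contents, and keep the visible text with one line per block element. The names `TextExtractor` and `html_to_text` are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class TextExtractor(HTMLParser):
    """Collects visible text, dropping tags and <script>/<style> contents."""

    SKIP = {"script", "style"}
    # Block-level tags that should start a new line of text.
    BLOCK = {"p", "div", "li", "br", "tr", "h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True turns entities like &amp; into characters
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag in self.BLOCK:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in self.BLOCK:
            self.parts.append("\n")

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse whitespace inside each line and drop empty lines.
    lines = (" ".join(line.split()) for line in "".join(parser.parts).split("\n"))
    return "\n".join(line for line in lines if line)


page = (
    "<html><head><style>p {color: red}</style></head>\n"
    "<body><h1>Ray Serve</h1><p>Scalable <b>model</b> serving.</p>\n"
    "<script>console.log('hi')</script></body></html>"
)
print(html_to_text(page))
```

Here the heading and the paragraph each become one clean line of text, while the inline `<b>` tag, the stylesheet, and the script disappear.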

Scaling Data Ingest

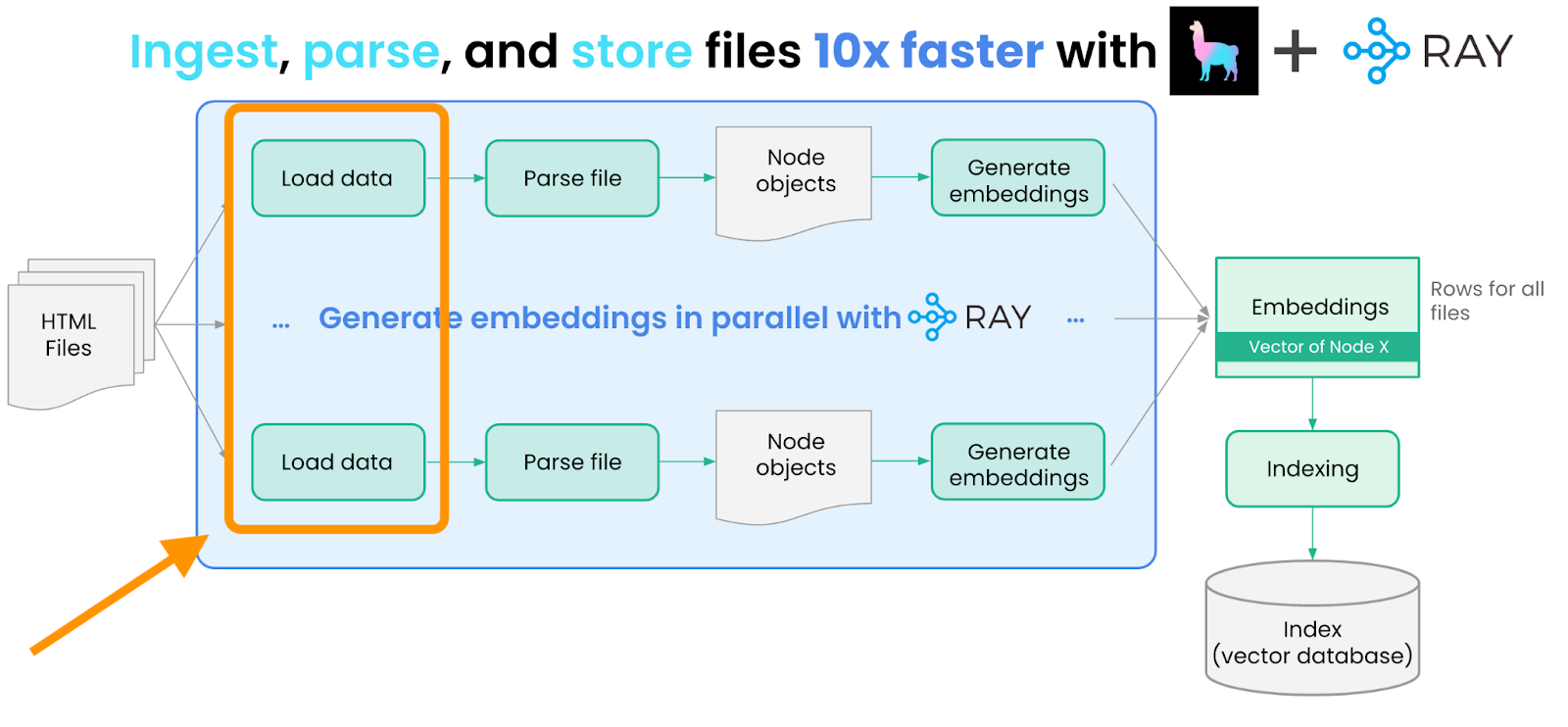

Since we have many HTML documents to process, loading/processing each one serially is inefficient and slow. This is an opportunity to use Ray and distribute execution of the `load_and_parse_files` function across multiple CPUs or GPUs.

```python
import ray
from pathlib import Path

# Get the paths for the locally downloaded documentation.
all_docs_gen = Path("./docs.ray.io/").rglob("*")
all_docs = [{"path": doc.resolve()} for doc in all_docs_gen]

# Create the Ray Dataset pipeline
ds = ray.data.from_items(all_docs)

# Use `flat_map` since there is a 1:N relationship.
# Each file path can return zero or more documents
# (directories and non-HTML files return none).
loaded_docs = ds.flat_map(load_and_parse_files)
```

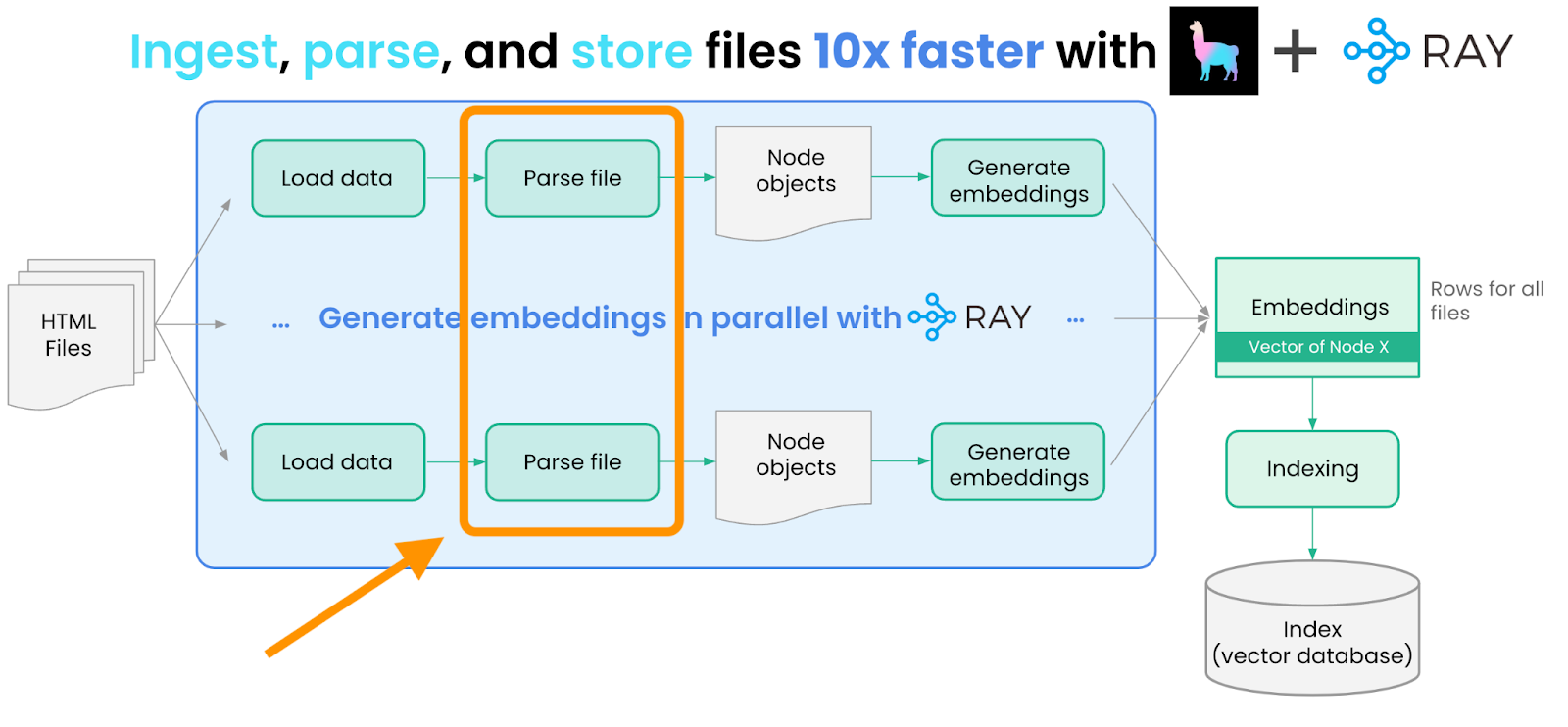
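`flat_map` applies a function that returns a list of rows to every input row and concatenates the results, so one file path can turn into zero, one, or many output rows. The pure-Python sketch below shows only these semantics on a single machine; Ray Data additionally splits the dataset into blocks and runs the function on them in parallel across the cluster. `flat_map_rows` and `fake_load` are hypothetical names.

```python
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]


def flat_map_rows(rows: Iterable[Row], fn: Callable[[Row], List[Row]]) -> List[Row]:
    """Apply `fn` to each row and concatenate the returned lists (1:N mapping)."""
    out: List[Row] = []
    for row in rows:
        out.extend(fn(row))
    return out


def fake_load(row: Row) -> List[Row]:
    # Non-HTML paths (directories, images) yield no rows and HTML files yield
    # one document each, mirroring the filtering in `load_and_parse_files`.
    path: str = row["path"]
    if not path.endswith(".html"):
        return []
    return [{"doc": f"text of {path}"}]


rows = [{"path": "docs/index.html"}, {"path": "docs/_images"}, {"path": "docs/serve.html"}]
docs = flat_map_rows(rows, fake_load)
```

Three input rows become two output rows here; a plain `map` could not express this, because it must return exactly one row per input.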

Parse Files

Now that we’ve loaded the documents, the next step is to parse them into Node objects. A “Node” represents a more granular chunk of text derived from a source document. Nodes can be inserted into the input prompt as context; by setting a small enough chunk size, we can make sure that inserting them does not overflow the model’s context limit.

We define a function called `convert_documents_into_nodes` which converts documents into nodes using a simple text splitting strategy.

```python
# Step 2: Convert the loaded documents into llama_index Nodes. This will split the documents into chunks.
from typing import Dict, List

from llama_index import Document
from llama_index.node_parser import SimpleNodeParser
from llama_index.data_structs import Node

def convert_documents_into_nodes(documents: Dict[str, Document]) -> List[Dict[str, Node]]:
    parser = SimpleNodeParser()
    document = documents["doc"]
    nodes = parser.get_nodes_from_documents([document])
    return [{"node": node} for node in nodes]
```
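`SimpleNodeParser` hides the splitting details. As an illustration of the general idea only (the real parser measures chunks in tokens rather than words and has its own defaults), the sketch below cuts a document into chunks of at most `chunk_size` words, repeating `chunk_overlap` words between neighbors so text near a boundary keeps some surrounding context. `split_into_chunks` and both parameter values are hypothetical.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 8, chunk_overlap: int = 2) -> List[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `chunk_overlap` words of context.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the last word has been included in a chunk.
        if start + chunk_size >= len(words):
            break
    return chunks


text = " ".join(f"w{i}" for i in range(20))
for chunk in split_into_chunks(text):
    print(chunk)
```

With 20 words, a chunk size of 8, and an overlap of 2, each new chunk starts 6 words after the previous one, giving three chunks that each fit comfortably within a small context budget.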

Run Parsing in Parallel

Since we have many documents, processing each document into nodes serially is inefficient and slow. We use Ray’s `flat_map` method to process documents into nodes in parallel:

1# Use `flat_map` since there is a 1:N relationship. Each document returns multiple nodes.

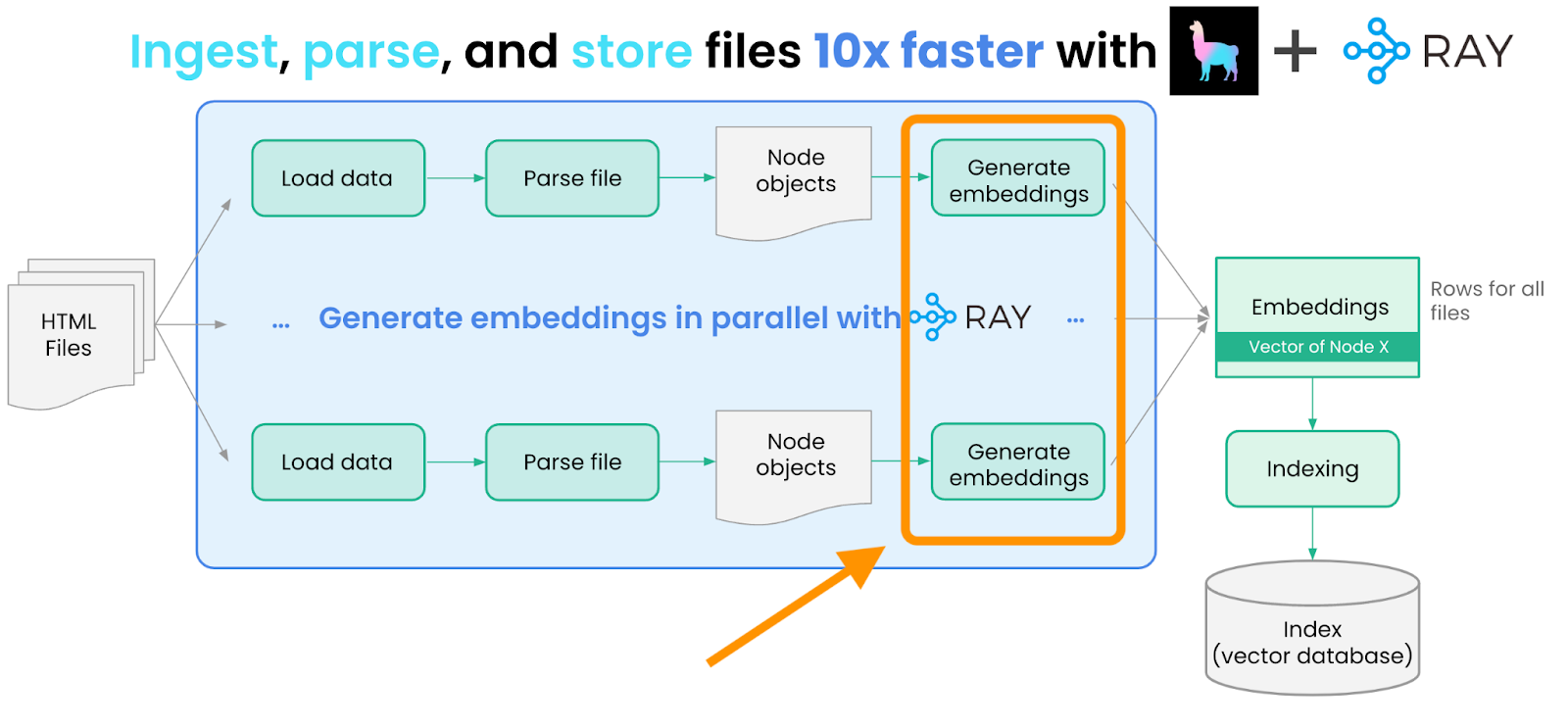

2nodes = loaded_docs.flat_map(convert_documents_into_nodes)LinkGenerate Embeddings

We then generate embeddings for each Node using a Hugging Face Sentence Transformers model. We can do this with the help of LangChain’s embedding abstraction.

Like document loading and parsing, embedding generation can be parallelized with Ray. We wrap these embedding operations into a helper class, called `EmbedNodes`, to take advantage of Ray abstractions.

```python
# Step 3: Embed each node using a local embedding model.
from typing import Dict, List

from langchain.embeddings.huggingface import HuggingFaceEmbeddings
from llama_index.data_structs import Node

class EmbedNodes:
    def __init__(self):
        self.embedding_model = HuggingFaceEmbeddings(
            # Use the all-mpnet-base-v2 Sentence Transformer.
            # This is the default model for LangChain's HuggingFaceEmbeddings.
            model_name="sentence-transformers/all-mpnet-base-v2",
            # Use GPU for embedding and specify a large enough batch size to maximize GPU utilization.
            # Remove both "device": "cuda" entries to use CPU instead.
            model_kwargs={"device": "cuda"},
            encode_kwargs={"device": "cuda", "batch_size": 100}
        )

    def __call__(self, node_batch: Dict[str, List[Node]]) -> Dict[str, List[Node]]:
        nodes = node_batch["node"]
        text = [node.text for node in nodes]
        embeddings = self.embedding_model.embed_documents(text)
        assert len(nodes) == len(embeddings)

        for node, embedding in zip(nodes, embeddings):
            node.embedding = embedding
        return {"embedded_nodes": nodes}
```

Afterwards, generating an embedding for each node is as simple as calling the following operation in Ray:

```python
from ray.data import ActorPoolStrategy

# Step 4: Embed the nodes in parallel.
# Use `map_batches` to specify a batch size to maximize GPU utilization.
# We define `EmbedNodes` as a class instead of a function so we only initialize the embedding model once.
# This state can be reused for multiple batches.
embedded_nodes = nodes.map_batches(
    EmbedNodes,
    batch_size=100,
    # Use 1 GPU per actor.
    num_gpus=1,
    # There are 4 GPUs in the cluster. Each actor uses 1 GPU. So we want 4 total actors.
    compute=ActorPoolStrategy(size=4))

# Step 5: Trigger execution and collect all the embedded nodes.
ray_docs_nodes = []
for row in embedded_nodes.iter_rows():
    node = row["embedded_nodes"]
    assert node.embedding is not None
    ray_docs_nodes.append(node)
```
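The reason `EmbedNodes` is a class rather than a function is that the expensive setup (loading the model) should happen once per actor and then be reused for every batch. The stdlib sketch below imitates that pattern on one machine: a hypothetical `ToyEmbedder` with a load counter stands in for the Sentence Transformers model, and a plain loop over fixed-size batches stands in for `map_batches`. It does not reproduce Ray's actor scheduling or GPU placement.

```python
from typing import Dict, Iterator, List


class ToyEmbedder:
    """Stand-in for EmbedNodes: expensive setup in __init__, cheap per-batch __call__."""

    loads = 0  # counts how many times the "model" has been loaded

    def __init__(self) -> None:
        ToyEmbedder.loads += 1
        self.dim = 4  # a real implementation would load the model onto the GPU here

    def __call__(self, batch: Dict[str, List[str]]) -> Dict[str, List[List[float]]]:
        # Toy embedding: character codes summed into `dim` buckets by position.
        vectors = []
        for text in batch["text"]:
            vec = [0.0] * self.dim
            for i, ch in enumerate(text):
                vec[i % self.dim] += ord(ch)
            vectors.append(vec)
        return {"embedding": vectors}


def batches(items: List[str], batch_size: int) -> Iterator[Dict[str, List[str]]]:
    # Yield column-style batches, like the dict batches passed to EmbedNodes.
    for start in range(0, len(items), batch_size):
        yield {"text": items[start:start + batch_size]}


texts = [f"chunk {i}" for i in range(250)]
embedder = ToyEmbedder()  # the "actor" is created once...
embeddings = []
for batch in batches(texts, batch_size=100):  # ...and reused for batches of 100, 100, and 50
    embeddings.extend(embedder(batch)["embedding"])
```

In the Ray version, each of the four actors in the pool plays the role of `embedder`, so the model is loaded four times in total rather than once per batch.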

Data Indexing

The next step is to store these nodes within an “index” in LlamaIndex. An index is a core LlamaIndex abstraction that “structures” your data in a certain way; this structure is then used for downstream LLM retrieval and querying. An index can interface with a storage or vector store abstraction.

The most commonly used index abstraction within LlamaIndex is our vector index, where each node is stored along with an embedding. In this example, we use a simple in-memory vector store, but you can also choose to specify any one of LlamaIndex’s 10+ vector store integrations as the storage provider (e.g. Pinecone, Weaviate, Chroma).
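Conceptually, a simple in-memory vector store keeps each node's text next to its embedding and answers a query by ranking nodes on cosine similarity to the query embedding. The sketch below illustrates that idea with hand-written 3-dimensional vectors; it is not LlamaIndex's vector store code, and the class name `InMemoryVectorStore` is hypothetical. In a real pipeline, the query must be embedded with the same model that embedded the nodes.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    def __init__(self) -> None:
        self.entries: List[Tuple[str, List[float]]] = []

    def add(self, text: str, embedding: List[float]) -> None:
        self.entries.append((text, embedding))

    def query(self, query_embedding: List[float], top_k: int = 2) -> List[Tuple[str, float]]:
        """Return the top_k (text, score) pairs, most similar first."""
        scored = [(text, cosine(query_embedding, emb)) for text, emb in self.entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.add("Ray Serve deploys models.", [0.9, 0.1, 0.0])
store.add("Ray Data processes datasets.", [0.1, 0.9, 0.0])
store.add("Ray Tune tunes hyperparameters.", [0.0, 0.2, 0.9])
results = store.query([1.0, 0.0, 0.0], top_k=2)
```

This brute-force scan is fine for small collections; hosted vector stores such as the integrations mentioned above exist largely to make this top-k search fast at scale.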

We build two vector indices, one over the documentation nodes and another over the blog post nodes, and persist them to disk. Code is available here.

```python
from llama_index import GPTVectorStoreIndex

# Store Ray Documentation embeddings
ray_docs_index = GPTVectorStoreIndex(nodes=ray_docs_nodes)
ray_docs_index.storage_context.persist(persist_dir="/tmp/ray_docs_index")

# Store Anyscale blog post embeddings.
# `ray_blogs_nodes` comes from running the same pipeline over the blog posts.
ray_blogs_index = GPTVectorStoreIndex(nodes=ray_blogs_nodes)
ray_blogs_index.storage_context.persist(persist_dir="/tmp/ray_blogs_index")
```

That’s it in terms of building a data pipeline using LlamaIndex + Ray Data!

Your data is now ready to be used within your LLM application. Check out our next section for how to use advanced LlamaIndex query capabilities on top of your data.

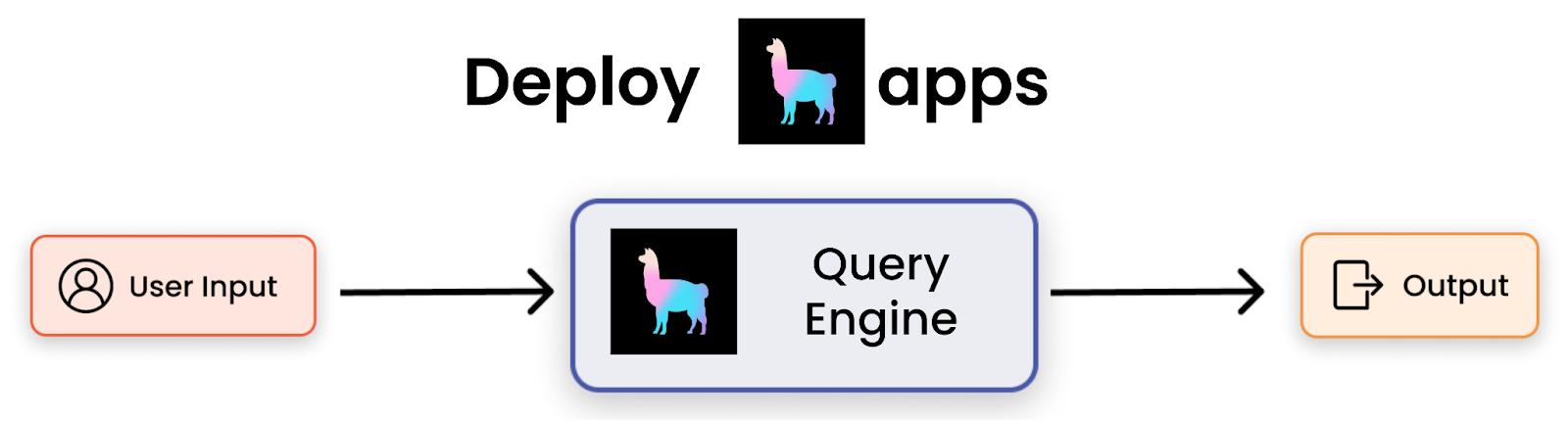

Data Querying

LlamaIndex provides both simple and advanced query capabilities on top of your data and indices. The central abstraction within LlamaIndex is called a “query engine.” A query engine takes in a natural language query and returns a natural language output. Each index has a corresponding “default” query engine. For instance, the default query engine for a vector index first performs top-k retrieval over the vector store to fetch the most relevant nodes, and then has the LLM synthesize a response from them.

These query engines can be easily derived from each index:

```python
# `service_context` (LLM and embedding model settings) is assumed to be configured
# as in the full implementation in deploy_app.py.
ray_docs_engine = ray_docs_index.as_query_engine(similarity_top_k=5, service_context=service_context)

ray_blogs_engine = ray_blogs_index.as_query_engine(similarity_top_k=5, service_context=service_context)
```

LlamaIndex also provides more advanced query engines for multi-document use cases. For instance, we may want to ask how a given Ray feature is presented in both the documentation and the blog posts. `SubQuestionQueryEngine` takes other query engines as input. Given a question, it can break it down into simpler sub-questions over any subset of those query engines, execute the sub-questions, and combine the results into a top-level answer.

This abstraction is quite powerful; it can perform semantic search over one document, or combine results across multiple documents.

For instance, given the question “What is Ray?”, we can break it into the sub-questions “What is Ray according to the documentation?” and “What is Ray according to the blog posts?” over the documentation query engine and the blog query engine, respectively.

```python
from llama_index.query_engine import SubQuestionQueryEngine
from llama_index.tools import QueryEngineTool, ToolMetadata

# Define a sub-question query engine that can use the individual query engines as tools.
query_engine_tools = [
    QueryEngineTool(
        query_engine=ray_docs_engine,
        metadata=ToolMetadata(name="ray_docs_engine", description="Provides information about the Ray documentation")
    ),
    QueryEngineTool(
        query_engine=ray_blogs_engine,
        metadata=ToolMetadata(name="ray_blogs_engine", description="Provides information about Ray blog posts")
    ),
]

sub_query_engine = SubQuestionQueryEngine.from_defaults(query_engine_tools=query_engine_tools, service_context=service_context, use_async=False)
```

Have a look at deploy_app.py to review the full implementation.
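The control flow of a sub-question engine can be sketched without an LLM: split the question into one sub-question per tool, send each sub-question to its tool, and combine the answers. In `SubQuestionQueryEngine`, an LLM generates the sub-questions, picks the tools, and synthesizes the final answer; here those steps are replaced by a fixed template and string concatenation, and the tools are hypothetical lambdas, so treat this purely as an illustration of the data flow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    query: Callable[[str], str]  # stands in for a query engine


def decompose(question: str, tools: List[Tool]) -> Dict[str, str]:
    """Hypothetical decomposition: one sub-question per tool (an LLM does this for real)."""
    return {tool.name: f"{question} (according to {tool.description})" for tool in tools}


def answer(question: str, tools: List[Tool]) -> str:
    by_name = {tool.name: tool for tool in tools}
    parts = []
    for name, sub_question in decompose(question, tools).items():
        sub_answer = by_name[name].query(sub_question)
        parts.append(f"[{name}] {sub_question} -> {sub_answer}")
    # A real engine would ask the LLM to synthesize these into one response.
    return "\n".join(parts)


tools = [
    Tool("ray_docs_engine", "the Ray documentation", lambda q: f"docs answer to '{q}'"),
    Tool("ray_blogs_engine", "the Ray blog posts", lambda q: f"blog answer to '{q}'"),
]
print(answer("What is Ray?", tools))
```

Each sub-answer keeps track of which tool produced it, which is what lets the deployed app below print the individual sub-questions alongside the combined response.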

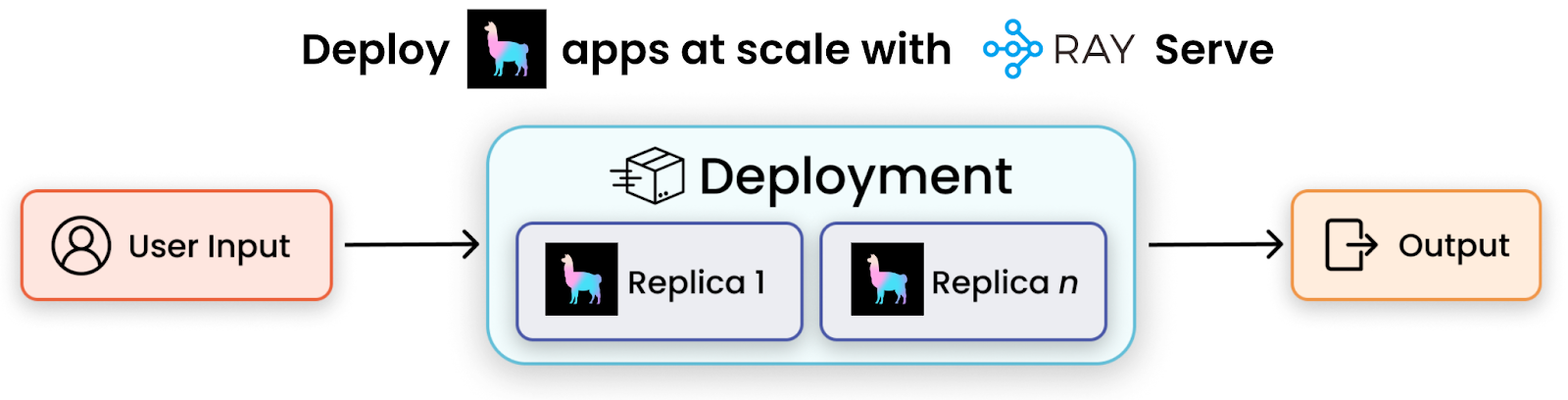

Deploying with Ray Serve

We’ve now created a powerful query module over our data. As a next step, what if we could seamlessly deploy it to production and serve users? Ray Serve makes this easy. Ray Serve is a scalable compute layer for serving ML models and LLMs. It can serve individual models or build composite model pipelines in which you independently deploy, update, and scale each component.

To do this, you just need to do the following steps:

1. Define an outer class that can “wrap” a query engine and expose a “query” endpoint.
2. Add a `@ray.serve.deployment` decorator on this class.
3. Deploy the Ray Serve application.

It will look something like the following:

```python
from ray import serve
from starlette.requests import Request


@serve.deployment
class QADeployment:
    def __init__(self):
        # Build or load the query engine here, e.g. the sub-question query engine
        # from the previous section (see deploy_app.py for the full setup).
        self.query_engine = ...

    def query(self, query: str) -> str:
        response = self.query_engine.query(query)
        # With the sub-question query engine, each source node holds one
        # sub-question and its answer.
        source_str = ""
        for i, node in enumerate(response.source_nodes):
            source_str += f"Sub-question {i + 1}:\n"
            source_str += node.node.text
            source_str += "\n\n"
        return f"Response: {str(response)} \n\n\n {source_str}\n"

    async def __call__(self, request: Request) -> str:
        # Read the question from the `query` URL parameter.
        query = request.query_params["query"]
        return self.query(query)


# Bind the deployment into a Ray Serve application. Start it with
# `serve.run(deployment)` from Python or with the `serve run` CLI.
deployment = QADeployment.bind()
```

Have a look at deploy_app.py for the full implementation.

Example Queries

Once we’ve deployed the application, we can query it with questions about Ray.
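A client only needs to send an HTTP GET request with the question in the `query` URL parameter, which is what `QADeployment.__call__` reads. The sketch below uses only the standard library. The address `http://localhost:8000/` is an assumption (8000 is Ray Serve's default HTTP port, but the host and route depend on how you deploy), and `build_query_url` and `ask` are hypothetical helpers.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

SERVE_URL = "http://localhost:8000/"  # assumed address of the Ray Serve HTTP proxy


def build_query_url(question: str, base_url: str = SERVE_URL) -> str:
    # urlencode escapes spaces and punctuation, e.g. "?" becomes %3F.
    return f"{base_url}?{urlencode({'query': question})}"


def ask(question: str, base_url: str = SERVE_URL, timeout: float = 120.0) -> str:
    """Send the question to the running deployment and return its text response."""
    with urlopen(build_query_url(question, base_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

With the Serve application running, `ask("What is Ray Serve?")` returns the response text; the generous timeout leaves room for the LLM calls behind each query.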

We can query just one of the data sources:

```text
Q: "What is Ray Serve?"

Ray Serve is a system for deploying and managing applications on a Ray
cluster. It provides APIs for deploying applications, managing replicas, and
making requests to applications. It also provides a command line interface
(CLI) for managing applications and a dashboard for monitoring applications.
```

But we can also ask complex queries that require synthesis across both the documentation and the blog posts. The sub-question query engine we defined handles these easily.

```text
Q: "Compare and contrast how the Ray docs and the Ray blogs present Ray Serve"

Response:
The Ray docs and the Ray blogs both present Ray Serve as a web interface
that provides metrics, charts, and other features to help Ray users
understand and debug Ray applications. However, the Ray docs provide more
detailed information, such as a Quick Start guide, user guide, production
guide, performance tuning guide, development workflow guide, API reference,
experimental Java API, and experimental gRPC support. Additionally, the Ray
docs provide a guide for migrating from 1.x to 2.x. On the other hand, the
Ray blogs provide a Quick Start guide, a User Guide, and Advanced Guides to
help users get started and understand the features of Ray Serve.
Additionally, the Ray blogs provide examples and use cases to help users
understand how to use Ray Serve in their own projects.

---

Sub-question 1

Sub question: How does the Ray docs present Ray Serve

Response:
The Ray docs present Ray Serve as a web interface that provides metrics,
charts, and other features to help Ray users understand and debug Ray
applications. It provides a Quick Start guide, user guide, production guide,
performance tuning guide, and development workflow guide. It also provides
an API reference, experimental Java API, and experimental gRPC support.
Finally, it provides a guide for migrating from 1.x to 2.x.

---

Sub-question 2

Sub question: How does the Ray blogs present Ray Serve

Response:
The Ray blog presents Ray Serve as a framework for distributed applications
that enables users to handle HTTP requests, scale and allocate resources,
compose models, and more. It provides a Quick Start guide, a User Guide, and
Advanced Guides to help users get started and understand the features of Ray
Serve. Additionally, it provides examples and use cases to help users
understand how to use Ray Serve in their own projects.
```

Conclusion

In this example, we showed how you can build a scalable data pipeline and a powerful query engine using LlamaIndex + Ray. We also demonstrated how to deploy LlamaIndex applications using Ray Serve. This allows you to effortlessly ask questions and synthesize insights about Ray across disparate data sources!

We used LlamaIndex - a data framework for building LLM applications - to load, parse, embed and index the data. We ensured efficient and fast parallel execution by using Ray. Then, we used LlamaIndex querying capabilities to perform semantic search over a single document, or combine results across multiple documents. Finally, we used Ray Serve to package the application for production use.

The implementation is open source, and the code is available on GitHub: LlamaIndex-Ray-app

What’s next?

Visit the LlamaIndex site and docs to learn more about this data framework for building LLM applications.

Visit the Ray docs to learn more about how to build and deploy scalable LLM apps.

Join our communities!

Join the Ray community on Slack and the Ray #LLM channel.

You can also join the LlamaIndex community on Discord.

Ray Summit 2023 early-bird registration is open until 6/30. Secure your spot, save some money, and savor the community camaraderie at the summit.