Challenges of deploying ML models in production

“AI is the new electricity.” Enterprises are running a growing number of ML projects and initiatives, which suggests that we have passed the “peak of inflated expectations” on the Gartner hype cycle. However, many enterprises are still struggling to infuse ML into their products and services.

According to McKinsey’s 2021 State of AI report, AI adoption has been on a steady rise, with 56% of respondents reporting AI adoption in at least one function, up from 50% in 2020. But O'Reilly's 2022 survey on AI adoption in the enterprise found that only 26% of respondents currently have models deployed in production. It is apparent that MLOps remains challenging for many enterprises, and many companies have yet to see a return on their AI investment.

In this blog post, we will dive deeper into some of these challenges and uncover some of the potential reasons behind them.

What is MLOps (ML for DevOps)?

It might be worthwhile to define the term MLOps and provide some context.

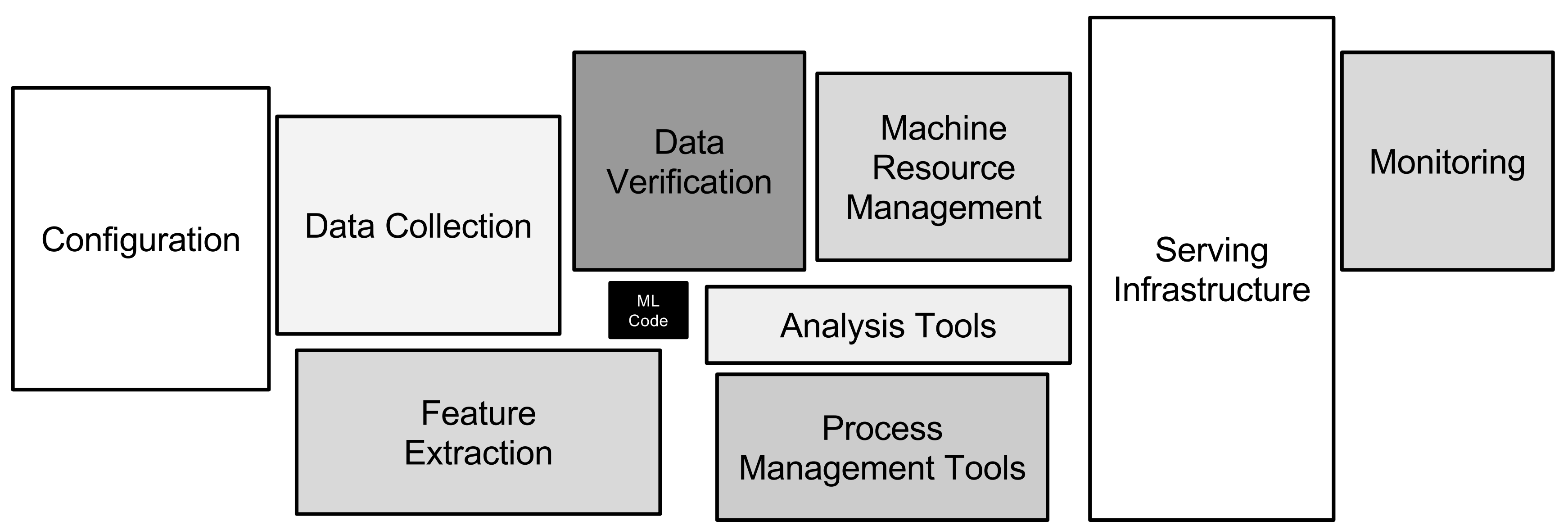

Only a small fraction of real-world ML systems is composed of the ML code, as shown by the small black box in the middle. The required surrounding infrastructure is vast and complex. Source: Sculley, D. et al. “Hidden Technical Debt in Machine Learning Systems.” NIPS (2015).

The seminal 2015 paper from Google, “Hidden Technical Debt in Machine Learning Systems,” is widely considered to be the first paper that describes what MLOps entails. The paper says that most people and media focus on model accuracy (black box in the middle) but very few people speak about the different systems that are needed to deploy and maintain a model in production.

The fundamental difference between machine learning (ML) and traditional software development is that ML is probabilistic in nature, while traditional software is deterministic. Phrased another way, software behaves as it is programmed to behave, while in ML, there is a possibility for random or less predetermined events to occur. Because a model's behavior depends on the data it was trained on as well as on its code, you also need different data stores, observability, and monitoring tools so that you can refresh your data and/or your model at a different rate than your software release cadence.
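To make the contrast concrete, here is a minimal sketch in plain Python. Everything in it is a hypothetical illustration rather than a real system: `apply_discount` stands in for hand-written business logic, and `fit_threshold` is a toy "model" that simply learns the mean of its training data. The point is only that the learned behavior changes when the data changes, even though no code was redeployed.

```python
import random
import statistics


def apply_discount(price: float) -> float:
    """Traditional software: the rule is written by hand and only changes
    when someone ships new code."""
    return round(price * 0.9, 2)


def fit_threshold(samples: list[float]) -> float:
    """Toy 'training' (hypothetical): learn a threshold as the mean of the data."""
    return statistics.fmean(samples)


def is_above_typical(threshold: float, value: float) -> bool:
    """Toy 'inference': flag values above the learned threshold."""
    return value > threshold


rng = random.Random(0)  # seeded so the sketch is reproducible
january_data = [rng.gauss(100, 10) for _ in range(1000)]
june_data = [rng.gauss(120, 10) for _ in range(1000)]  # the data has drifted

model_january = fit_threshold(january_data)
model_june = fit_threshold(june_data)

# Same code and same input, but the answer depends on which data the model saw.
print(apply_discount(100.0))
print(is_above_typical(model_january, 110.0))
print(is_above_typical(model_june, 110.0))
```

In this toy setup the discount function never changes its answer, while the prediction for the same input flips once the model is refit on newer data. That is why model refreshes tend to need their own cadence and their own monitoring.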

MLOps also requires an organizational change that combines teams, process, and technology to deploy ML solutions in a robust, scalable, reliable, and automated way that minimizes friction between teams.

Industry regulations, coupled with the fact that some algorithms (especially deep learning) can be black boxes, make it very challenging to define what can be audited and explained. Establishing and defining an ML governance framework can be bureaucratic and can hinder ML adoption in some sectors or use cases.

With the exponential growth of data and model sizes, distributed computing is becoming the norm in many ML tech stacks. The challenge is that the distributed ML libraries are not well integrated with one another and operate in silos. For example, you can use Spark or Dask to scale your data preprocessing, then Horovod or PyTorch to scale your training. You may experience an impedance mismatch (Java vs. Python) or a lack of cohesion between those libraries, which requires you to materialize intermediate results on disk or in cloud storage and necessitates a workflow orchestrator to tie all of those steps together.
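The hand-off pattern described above can be sketched with the standard library alone. This is an illustration under stated assumptions, not how any particular tool works: `preprocess` stands in for a Spark or Dask job, `train` stands in for a Horovod or PyTorch job, and `run_pipeline` plays the role of a workflow orchestrator. All three names, and the `features.json` file, are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def preprocess(raw_rows: list[dict], out_path: Path) -> Path:
    """Stand-in for a preprocessing job: drop incomplete rows, then
    materialize the result so a different framework can read it."""
    cleaned = [
        {"x": float(r["x"]), "y": float(r["y"])}
        for r in raw_rows
        if r.get("x") is not None and r.get("y") is not None
    ]
    out_path.write_text(json.dumps(cleaned))
    return out_path


def train(features_path: Path) -> dict:
    """Stand-in for a training job: fit y = slope * x by least squares."""
    rows = json.loads(features_path.read_text())
    numerator = sum(r["x"] * r["y"] for r in rows)
    denominator = sum(r["x"] ** 2 for r in rows)
    return {"slope": numerator / denominator}


def run_pipeline(raw_rows: list[dict], workdir: Path) -> dict:
    """Minimal 'orchestrator': runs the steps in order and passes file paths,
    because the two steps share no in-memory state."""
    features_path = preprocess(raw_rows, workdir / "features.json")
    return train(features_path)


raw = [{"x": 1, "y": 2}, {"x": 2, "y": 4}, {"x": None, "y": 1}]
with tempfile.TemporaryDirectory() as tmp:
    model = run_pipeline(raw, Path(tmp))
print(model)
```

Note that the only contract between the two steps is a file on disk. Every extra stage adds another serialization format, another storage location, and another failure point for the orchestrator to handle.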

These are all challenges facing enterprises deploying ML models today.

ML tools are maturing rapidly

We have come a long way since Google’s paper was published. ML tooling and solutions such as feature stores, ML monitoring, and workflow orchestration have emerged, and common practices and processes such as responsible AI have been established. But many companies still need to build bespoke ML CI/CD pipelines in order to ship their models to production.

We are also seeing some progress with specialized ML serving solutions (Ray Serve, KServe, Triton, BentoML, SageMaker) that provide ML serving-specific features as well as first-class support for AI accelerators.

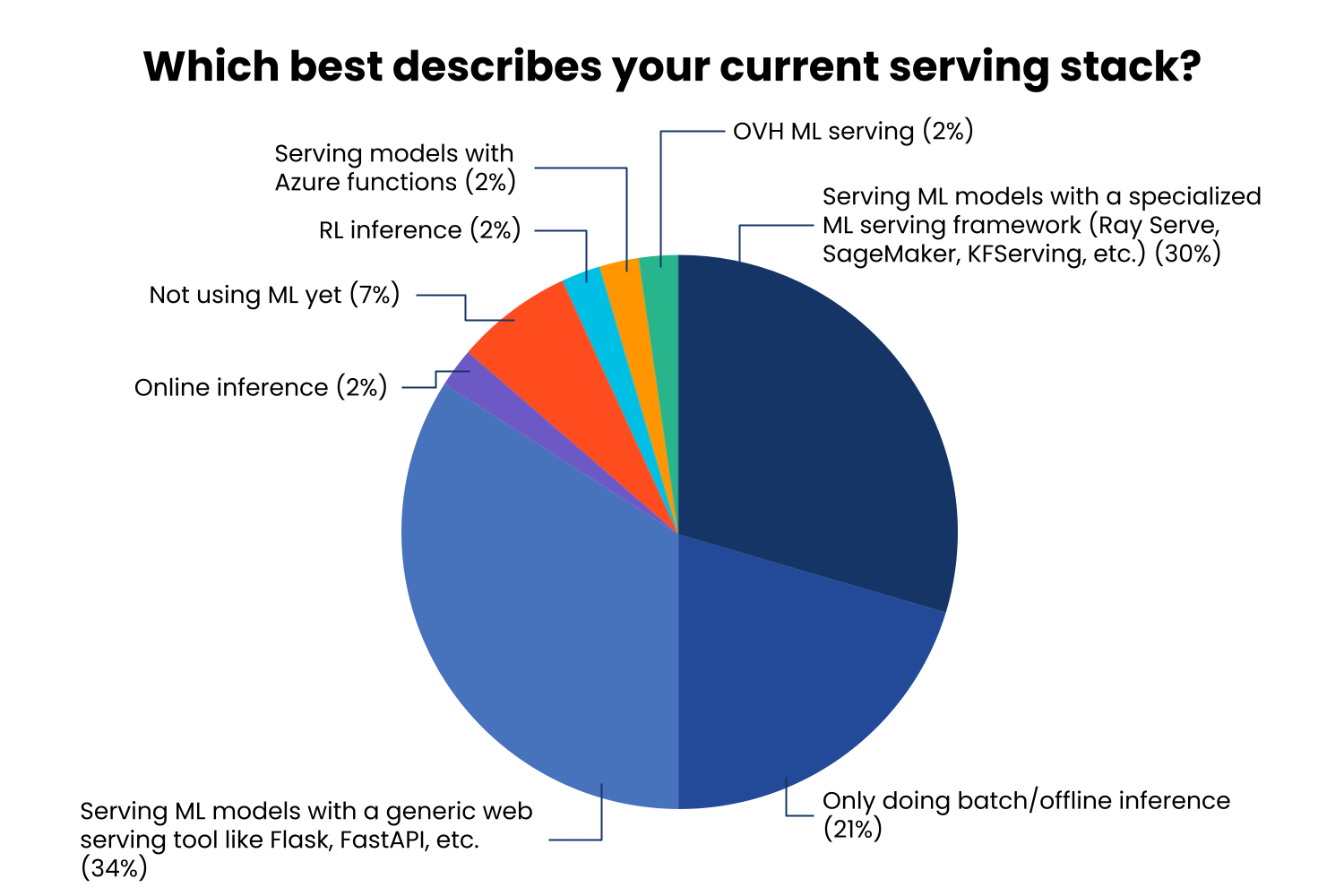

Our Ray Serve user survey and the O’Reilly survey cited above both show an almost even split between users of a Python-based web server such as FastAPI and users of a specialized ML serving solution.

Results of Ray Serve user survey.

A generic web server such as FastAPI provides a proven foundation for building APIs and microservices and is likely already entrenched in the last-mile deployment technical stack, something that an infrastructure or backend engineer is already familiar with.

On the other hand, an ML engineer may prefer a specialized model serving solution, which comes with many features that improve efficiency, but this may introduce more challenges in packaging it as one component of a larger, standardized microservices architecture.

If you have a simple setup (one model behind an endpoint), a generic web server allows you to containerize your ML model fairly easily. However, I would argue that this is rarely the case as you mature your ML practice. As discussed in the common patterns of serving ML models in production blog post, you often require many models mixed with business logic in order to cover more ground and provide a robust solution for the use cases you are serving.
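As a rough illustration of "many models mixed with business logic," consider the sketch below. It is framework-agnostic plain Python, and every name in it is hypothetical: `amount_model` and `velocity_model` are stand-ins for trained models, the transaction fields are invented for the example, and the allowlist represents a business rule that overrides the ensemble.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A "model" here is any callable that maps a request to a risk score in [0, 1].
Model = Callable[[dict], float]


def amount_model(tx: dict) -> float:
    """Hypothetical stand-in for a trained model scoring transaction size."""
    return min(tx["amount"] / 10_000, 1.0)


def velocity_model(tx: dict) -> float:
    """Hypothetical stand-in for a trained model scoring recent activity."""
    return min(tx["tx_last_hour"] / 20, 1.0)


@dataclass
class Decision:
    approved: bool
    score: float
    reason: str


def decide(
    tx: dict,
    models: Sequence[Model],
    threshold: float = 0.5,
    allowlist: frozenset = frozenset(),
) -> Decision:
    # Business rule first: allowlisted customers bypass the models entirely.
    if tx["customer_id"] in allowlist:
        return Decision(True, 0.0, "allowlisted")
    # Ensemble step: average the scores of all models.
    score = sum(m(tx) for m in models) / len(models)
    if score >= threshold:
        return Decision(False, score, "ensemble score at or above threshold")
    return Decision(True, score, "ensemble score below threshold")


tx = {"customer_id": "c1", "amount": 9000, "tx_last_hour": 10}
models = [amount_model, velocity_model]
print(decide(tx, models))
print(decide(tx, models, allowlist=frozenset({"c1"})))
```

Even in this small sketch, a single endpoint has to coordinate two models and a rule that can short-circuit both. Once each model needs its own hardware, scaling policy, or release cycle, wrapping everything in one containerized web server becomes much less straightforward.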

Teams, roles, and organizations

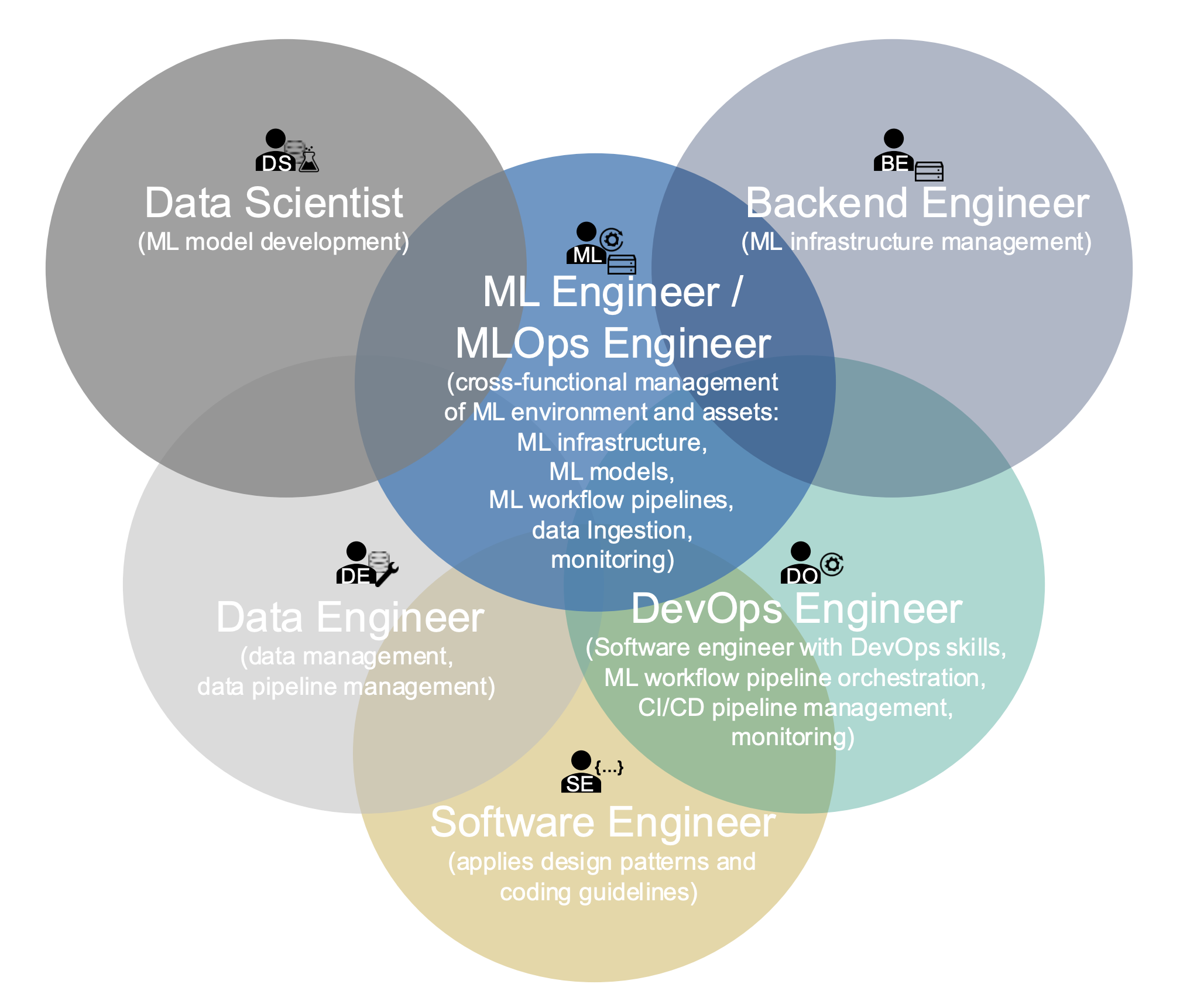

Roles and their intersections contributing to the MLOps paradigm. Source: arXiv:2205.02302

Note that I assume infra/backend engineers will want to use a generic web server framework while ML engineers will lean towards a specialized ML serving framework. One possible explanation for this divide in serving tools of choice lies in the question “Who owns the last-mile deployment?”

Is it a good use of ML practitioners' time to ask them to master Dockerfiles and Kubernetes clusters?

Is it outside a backend engineer's comfort zone to understand the nuances of optimizing ML model serving in production?

Do we need a full-stack ML engineer rockstar in order to deploy models in production?

How do you translate a multi-model ensemble into microservices?

Do you have the ML engineer and the infra engineer pair program on top of YAML files?

In our next blog post, we will look into how Ray can simplify your MLOps and how Ray Serve provides a clear boundary between developing a real-time multi-model pipeline and deploying it. We will also go over how Ray Serve and FastAPI bridge both worlds: generic web servers and specialized ML serving solutions.