Simplify your MLOps with Ray & Ray Serve

In the first blog post in this series, we covered how AI is becoming increasingly strategic for many enterprises, but MLOps and deploying models in production are still very difficult.

We also mentioned that although machine learning (ML) tooling is maturing, deploying a model in production is still a high-friction process.

In this blog post, we’ll explore how you can simplify your MLOps with Ray. Ray provides a scalable, unified ML framework and a flexible backbone to build your experiments quickly by unifying data preprocessing, training, and tuning in a single script. Ray Serve lets you compose complex inference pipelines using a simple Python API and deploy them in real time using YAML.

Containerizing an ML application

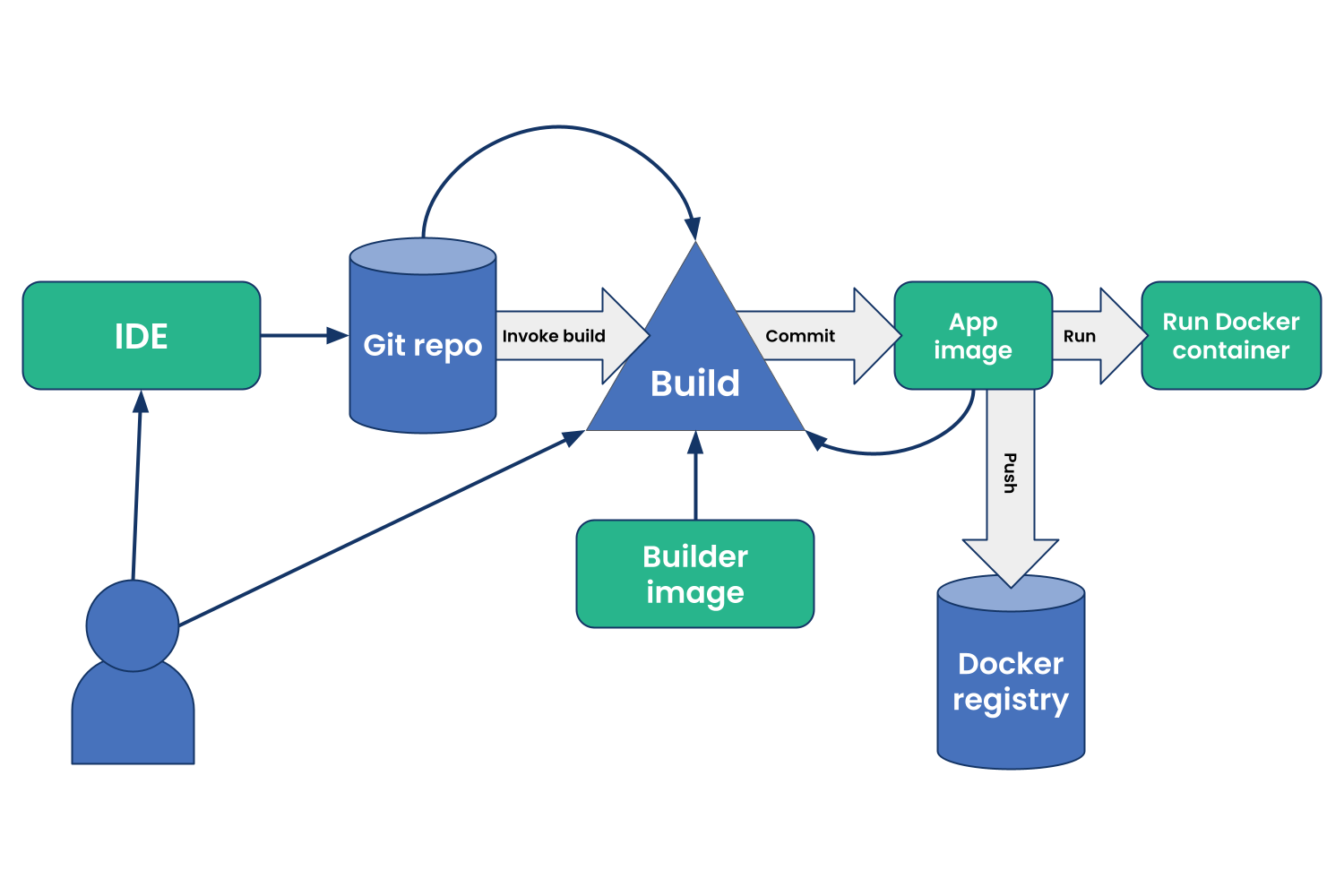

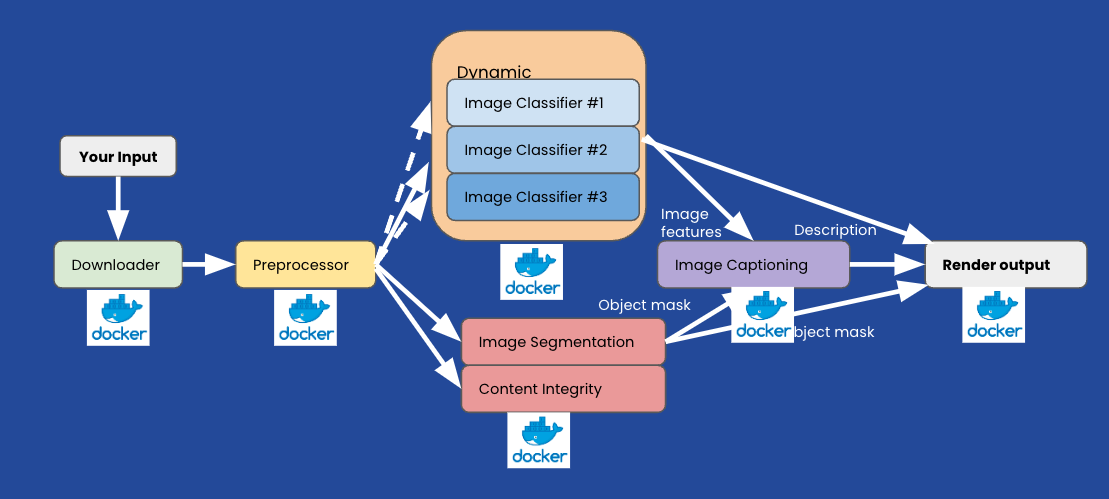

As an example, let’s see what the step-by-step workflow for containerizing an ML application looks like.

Step-by-step workflow for developing Docker containerized apps

If you have a simple model (one model behind an endpoint), the standard workflow above can be applied to easily containerize your ML application.

However, ML applications need their own data stores, logging, and observability, because ML models depend on different sets of metrics and data inputs that have to be integrated. All of this ML-specific tooling also needs to be accounted for when coding, packaging, and deploying your ML application as part of a larger software component.

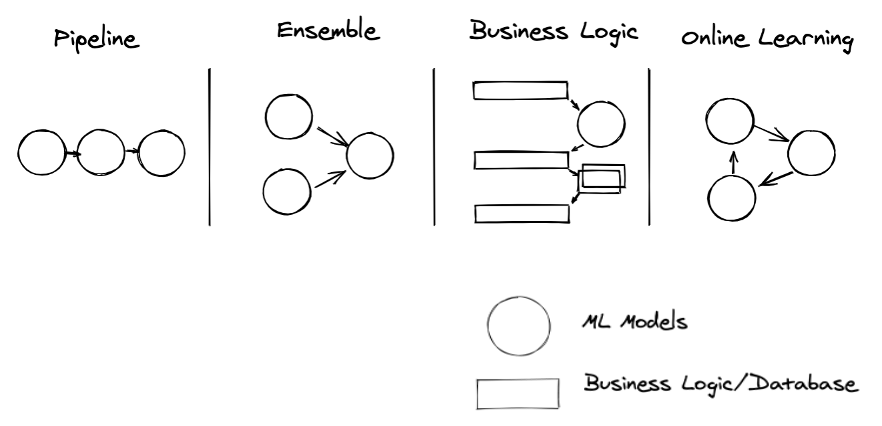

In the previous blog post, we mentioned that as enterprise ML capabilities mature, we often need more than one step and/or model in order to serve a robust and safe ML application (also explained in the blog post on common patterns of serving ML models in production).

This means that you may need to stack your models, create ensembles, incorporate business logic, or interact with a feature store to fetch fresh data or log data before you can serve such an application to your customers.

Multi-model composition

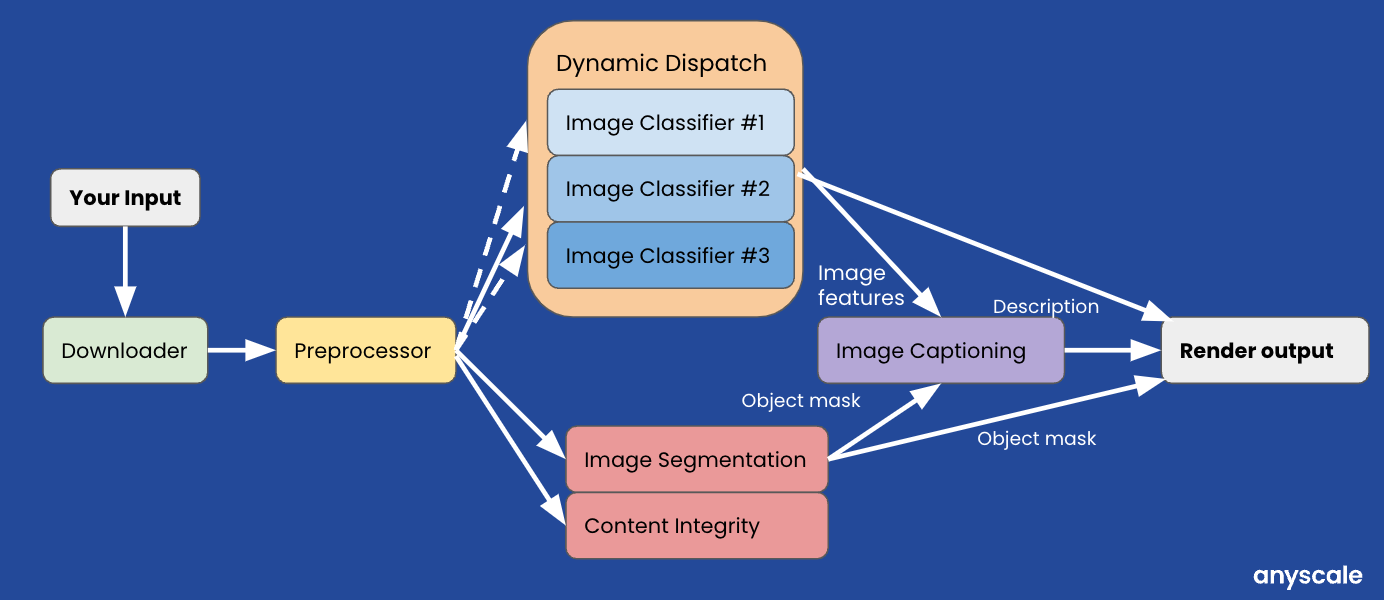

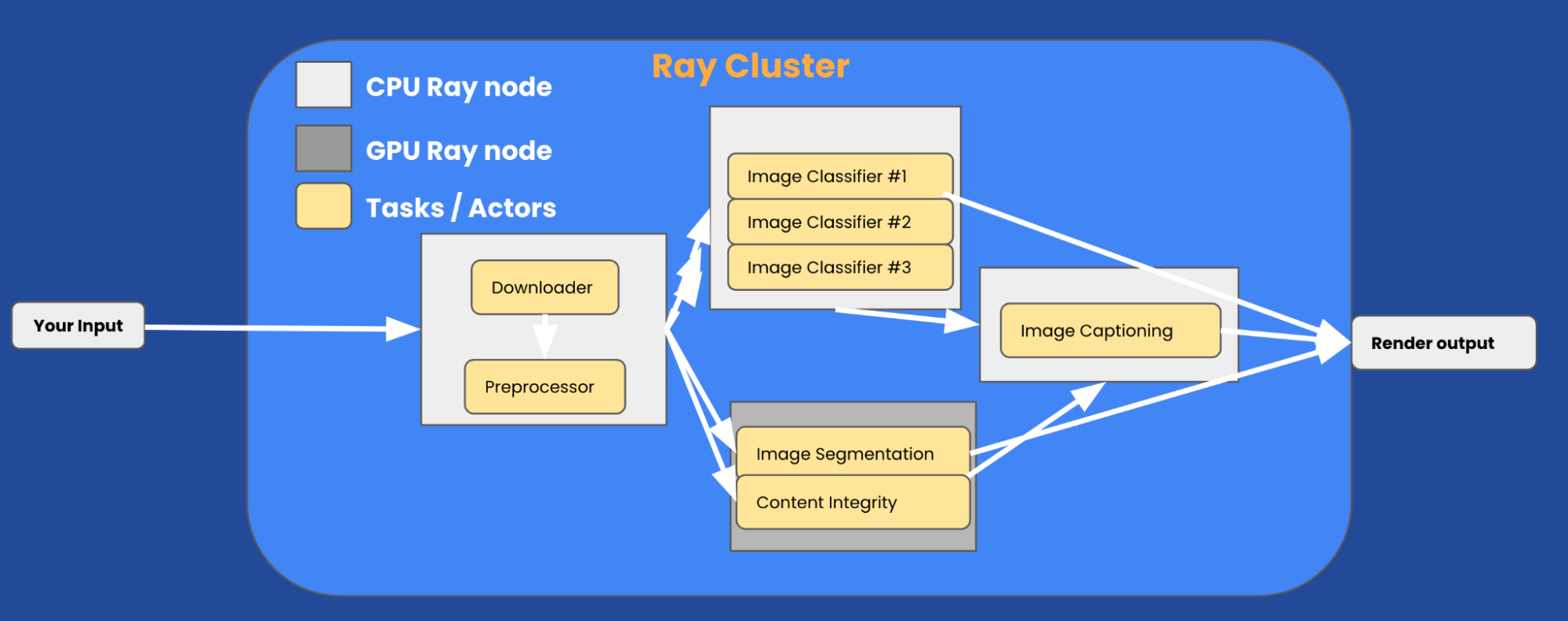

Let’s take a concrete example of a real-time pipeline for content understanding and tagging of an image uploaded by a user:

The example above requires preprocessing steps followed by many models that can run on CPU and/or GPU, as well as other post-processing tasks. This is a common use case if, for example, you need to categorize products on an e-commerce site.
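To make the shape of such a pipeline concrete, here is a minimal sketch in plain Python (standard library only, not Ray Serve's API). The functions `preprocess`, `classify`, `detect_objects`, and `postprocess` are hypothetical stand-ins for real steps and models; the point is only the structure: one preprocessing step, a fan-out to several models that run concurrently, and a post-processing step that merges their outputs.

```python
import asyncio


def preprocess(image_bytes: bytes) -> list:
    """Stand-in for decoding/resizing: scale raw bytes to the range [0, 1]."""
    return [b / 255 for b in image_bytes]


async def classify(pixels: list) -> dict:
    """Hypothetical classifier: labels the image by its mean brightness."""
    await asyncio.sleep(0)  # yield control, as a real model call would
    mean = sum(pixels) / len(pixels)
    return {"label": "bright" if mean > 0.5 else "dark"}


async def detect_objects(pixels: list) -> dict:
    """Hypothetical detector: counts pixels above a fixed threshold."""
    await asyncio.sleep(0)
    return {"objects": sum(1 for p in pixels if p > 0.9)}


def postprocess(results: list) -> dict:
    """Merge the per-model outputs into a single dictionary of tags."""
    tags = {}
    for result in results:
        tags.update(result)
    return tags


async def tag_image(image_bytes: bytes) -> dict:
    pixels = preprocess(image_bytes)
    # Fan out: both models receive the same preprocessed input concurrently.
    results = await asyncio.gather(classify(pixels), detect_objects(pixels))
    # Combine: merge the model outputs into the final response.
    return postprocess(list(results))


tags = asyncio.run(tag_image(bytes([255, 250, 10, 240])))
print(tags)  # {'label': 'bright', 'objects': 3}
```

In a real deployment each of these steps could have very different hardware needs, which is what makes the packaging question below non-trivial.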

This brings us to the following question:

How do you translate a multi-model ensemble into microservices?

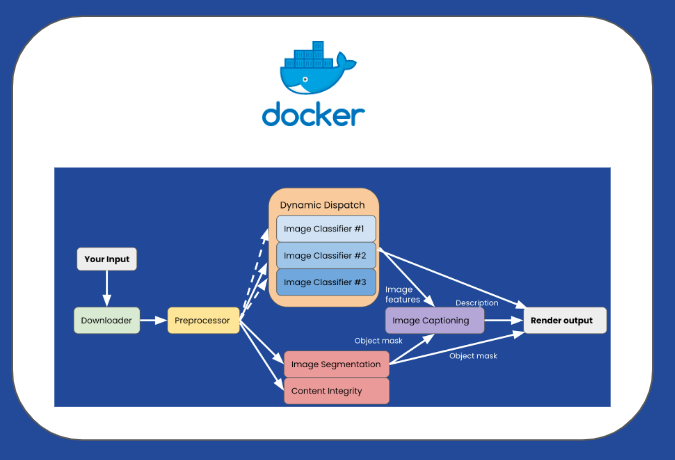

The first naive approach is to containerize the whole pipeline as one application:

While this would work, it would not be adequate for many reasons:

It is a monolithic application! You have to redeploy the whole pipeline every time any single step changes.

You have to size your application for all the compute of your pipeline (load all the models in memory), which would be highly inefficient as you would not use all the resources at the same time.

You have to autoscale your whole pipeline (coarse-grained autoscaling).

This approach is highly inefficient. It leads to higher latency for end users and increased cost due to coarse-grained resource allocation.
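A back-of-the-envelope calculation illustrates the cost of coarse-grained sizing. The stage names, memory figures, and replica counts below are hypothetical, and the model is deliberately simplified: it assumes a monolith needs at least as many replicas as its most demanding stage, and that every monolith replica loads every model.

```python
# Hypothetical per-stage figures: memory per replica (GB) and the number of
# replicas each stage needs on its own to keep up with peak traffic.
stages = {
    "preprocess": {"mem_gb": 1, "replicas_needed": 2},
    "classifier": {"mem_gb": 4, "replicas_needed": 1},
    "detector": {"mem_gb": 8, "replicas_needed": 4},
}

# Monolith: every replica holds all stages, and the replica count is driven
# by the most demanding stage (a lower bound under this simplified model).
monolith_replicas = max(s["replicas_needed"] for s in stages.values())
monolith_mem_gb = monolith_replicas * sum(s["mem_gb"] for s in stages.values())

# Fine-grained: each stage is scaled independently.
per_stage_mem_gb = sum(
    s["mem_gb"] * s["replicas_needed"] for s in stages.values()
)

print(monolith_mem_gb)   # 52
print(per_stage_mem_gb)  # 38
```

With these made-up numbers the monolith reserves 52 GB where independently scaled stages would need 38 GB, and the gap widens as the stages' scaling needs diverge.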

Another approach would be to treat each task as a service:

While this addresses the efficiency problems of the monolith, it comes with added complexity.

How do you define the boundaries between development and deployment and their respective roles (infra/backend engineer vs. ML engineer)?

More YAML! You have to containerize each task and wire them together.

You have business logic interwoven in your code and YAML files.

You need other systems to make this solution performant (microservices, Redis, Kafka, etc.). How do you fan out, multiplex, and combine tasks using asynchronous messages?

Ray and Ray Serve

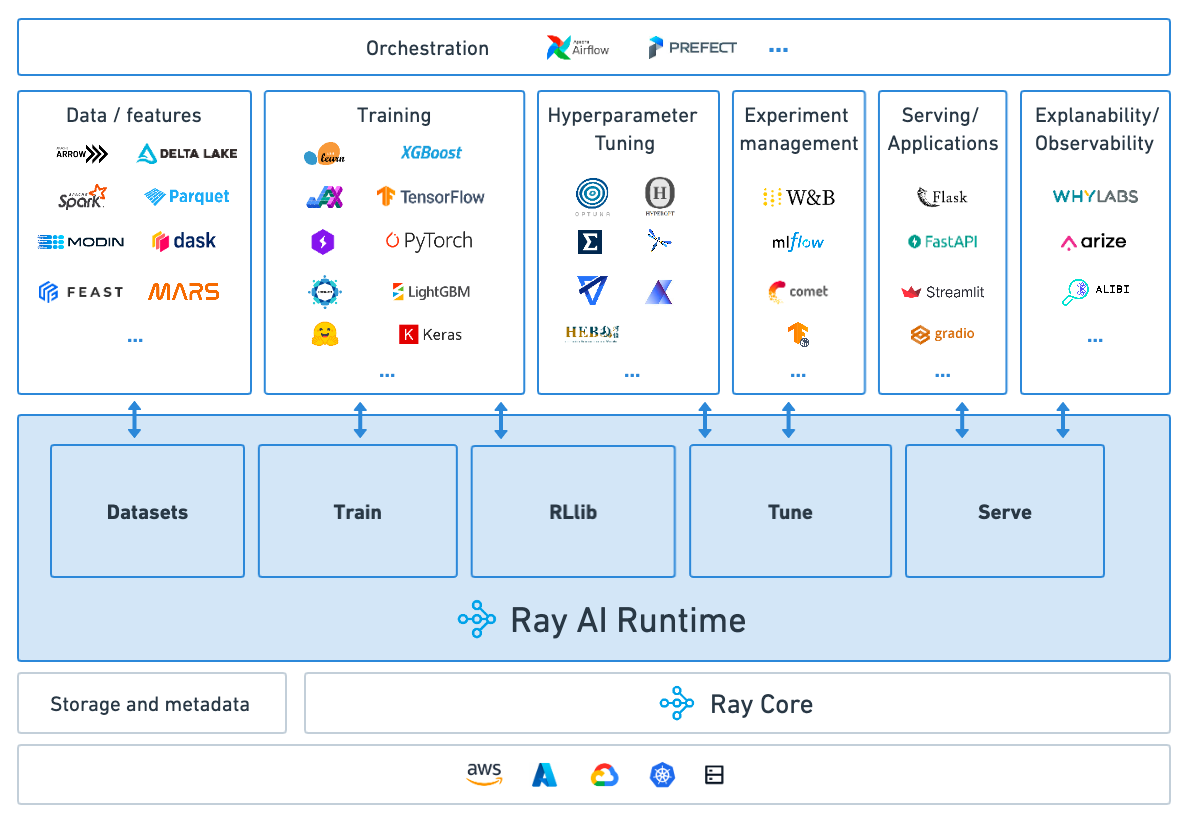

Ray provides a scalable, flexible, unified runtime for the entire ML lifecycle through the Ray AI Runtime (AIR)* project. Ray AIR integrates with best-of-breed libraries across the MLOps ecosystem, while Ray Serve can help reduce the friction between backend and ML engineers in last-mile deployment by providing a clear abstraction between development and deployment.

Ray Serve offers scalable, efficient, composable, and flexible serving compute for ML. In addition to providing a better developer experience and abstraction, Ray Serve is built on top of Ray, so it comes with support for state and asynchronous messaging built in. This allows you to simplify your ML technical stack without having to integrate across web services, Redis, and Kafka, for example.

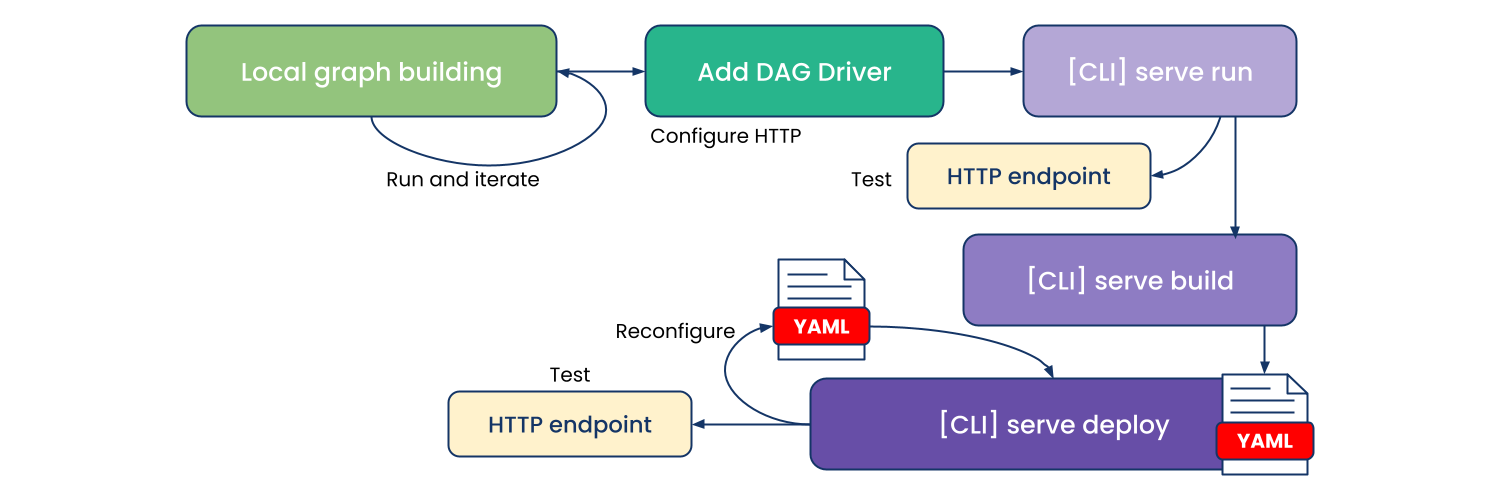

With Ray Serve you can compose your real-time pipeline logic using the Deployment Graph API in Python. You can benefit from the expressivity of Python and compose your multi-model pipeline, test it locally on your laptop, and easily deploy onto a cluster with no code changes.

Ray will automatically schedule and allocate your tasks efficiently across the worker nodes of the cluster. In Ray, you can define heterogeneous worker node types, allowing you to take advantage of spot pool capacity. You can also make use of fractional resource allocation (ray_actor_options={"num_gpus": 0.5}). This allows you to pack several tasks/actors onto the same host and get the most out of premium resources or AI accelerators such as GPUs, provided that the tasks do not all need the full resource when run concurrently.
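The effect of fractional allocation can be illustrated with a small sketch. This is not Ray's scheduler: `pack_replicas` is a hypothetical helper that uses a simple first-fit rule to place fractional GPU requests (the kind of value you would pass as `num_gpus`) onto whole GPUs, just to show how several replicas can share one accelerator.

```python
def pack_replicas(gpu_requests, num_gpus):
    """First-fit placement of fractional GPU requests onto whole GPUs.

    Returns the GPU index chosen for each request (None if nothing fits)
    and the remaining free fraction of each GPU.
    """
    free = [1.0] * num_gpus
    placement = []
    for request in gpu_requests:
        for gpu_index, capacity in enumerate(free):
            if request <= capacity:
                free[gpu_index] -= request
                placement.append(gpu_index)
                break
        else:
            # No GPU has enough free capacity for this request.
            placement.append(None)
    return placement, free


# Five replicas asking for half or a quarter of a GPU each fit on two GPUs.
placement, free = pack_replicas([0.5, 0.5, 0.25, 0.5, 0.25], num_gpus=2)
print(placement)  # [0, 0, 1, 1, 1]
print(free)       # [0.0, 0.0]
```

The fraction is a scheduling quantity, so the caveat above still applies: replicas sharing a GPU need to fit together in practice, not just on paper.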

When you have completed development, you can generate a YAML file using the CLI to operationalize your real-time pipeline. YAML has become the format of choice for deployment resources and workloads, especially on Kubernetes, and allows you to standardize on all the known deployment patterns such as canary, blue/green, and rollback.

For example, Widas, a digital transformation consulting company, was using microservices, Redis, and Kafka before simplifying their technical stack with Ray Serve in order to implement a complex online video authentication service.

Other Ray libraries such as Ray Datasets, Ray Train, and Ray Tune can also help simplify MLOps by unifying and consolidating scalable data preprocessing, tuning, training, and inference across the more popular ML frameworks such as XGBoost, TensorFlow, and PyTorch.

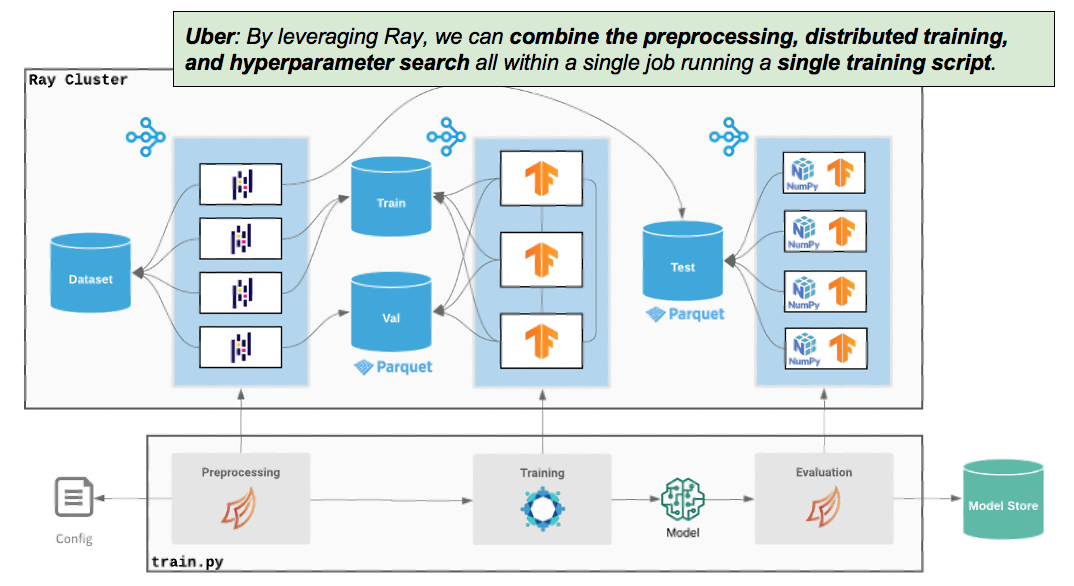

Uber’s internal ML-as-a-service platform, Michelangelo, was one of the first of its kind. Uber is now using Ray to simplify their end-to-end ML workloads and get better cost performance.

MLOps has many hurdles, with infrastructure provisioning, a complex ML tech stack, and team friction being the primary ones. Ray reduces the complexity of managing multiple distributed frameworks such as Spark, Horovod, Dask, and PyTorch by unifying data preprocessing, training, and tuning. You may not need a workflow orchestrator to unify all the steps; a single Python script can be enough.

Ray Serve can also accelerate last-mile deployment. ML engineers can author real-time pipelines in Python on their laptops and deploy them onto a cluster with no code changes, all the way to production. Ray Serve provides an ideal abstraction between development and deployment, while Ray provides a portable framework that hides the complexity of infrastructure and distributed computing.

Check out Ray and the Ray Serve documentation and give it a try!

In the next blog post we will go over how Ray Serve and FastAPI give you the best of both worlds: a generic Python web server and a specialized ML serving solution.

*Update Sep 16, 2023: We are sunsetting the "Ray AIR" concept and namespace starting with Ray 2.7. The changes follow the proposal outlined in this REP.