Foobot optimizes building energy efficiency by training fully autonomous control agents for HVAC systems, bringing energy savings to office buildings, hospitals, schools and commercial buildings.

Ray reduced model training time by 70% and accelerated time-to-market by 2x.

Foobot (EnergyWise) provides an AI-based solution that optimizes building energy efficiency by training fully autonomous control agents for Heating, Ventilation and Air Conditioning (HVAC) systems. It is currently used by large companies with real estate assets, property owners, portfolio managers, and facility management firms such as L’Oreal, Dassault Systèmes and Credit Agricole. The solution is versatile and scalable, and it has been proven to deliver large energy savings in office buildings of all sizes, hospitals, and schools.

Buildings account for 40% of energy consumption in the US and in Europe, and half of this energy goes into HVAC systems. Yet these systems are among the most complex to control and manage. From design to operation, hundreds of pieces of equipment and controllers interact with the aim of keeping indoor conditions comfortable and safe. This makes it very challenging to manage and regulate a building’s internal controls in an energy-efficient manner.

On top of that, today’s energy cost crisis and the need to reduce greenhouse gas emissions require a rapid, global effort. This calls for a paradigm shift: there are many old, energy-inefficient buildings (according to the EIA, half of commercial buildings were built before 1980), yet classic hardware retrofits are slow and capital-intensive.

Foobot has built a solution based on software and AI to address these challenges. Our aim is to make buildings’ HVAC controls efficient and autonomous, as if a team of engineers were tweaking each building’s parameters 24/7.

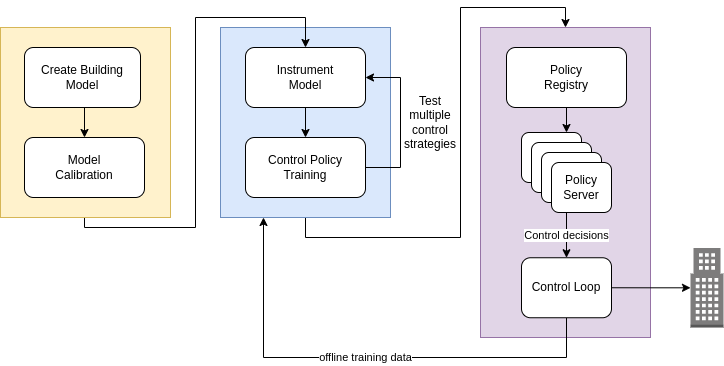

Foobot’s solution is based on creating digital twins of buildings and using them as training environments for reinforcement learning (RL) agents. Capturing a building’s thermal dynamics in the twin is key and requires a CPU-intensive physics simulation engine: in the worst case, each environment step can take several seconds. Without distributed parallel training, a single experiment could last several days, limiting our ability to iterate on the training process to find the best control strategies.
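As a rough back-of-the-envelope model (ignoring communication and learner overhead), wall time scales with the number of environment steps times the cost of one step, divided by the number of parallel workers. The figures below are hypothetical and only illustrate the orders of magnitude involved:

$$
T_{\text{wall}} \approx \frac{N_{\text{steps}} \, t_{\text{step}}}{N_{\text{workers}}}
$$

$$
N_{\text{steps}} = 10^6,\; t_{\text{step}} = 1\,\text{s}:\quad
T_{\text{wall}}(1\ \text{worker}) = 10^6\,\text{s} \approx 11.6\ \text{days},\qquad
T_{\text{wall}}(100\ \text{workers}) = 10^4\,\text{s} \approx 2.8\ \text{h}
$$

In practice the speedup is below linear, since learner updates and the exchange of samples between machines add overhead.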

Our training pipeline involves multiple complex steps. Early versions of our training platform relied on several frameworks, some of which caused maintenance headaches or produced unstable results. The setup was brittle, and we struggled to build a stable and reproducible training flow.

Fig. 1 Flow of a typical building control optimization project

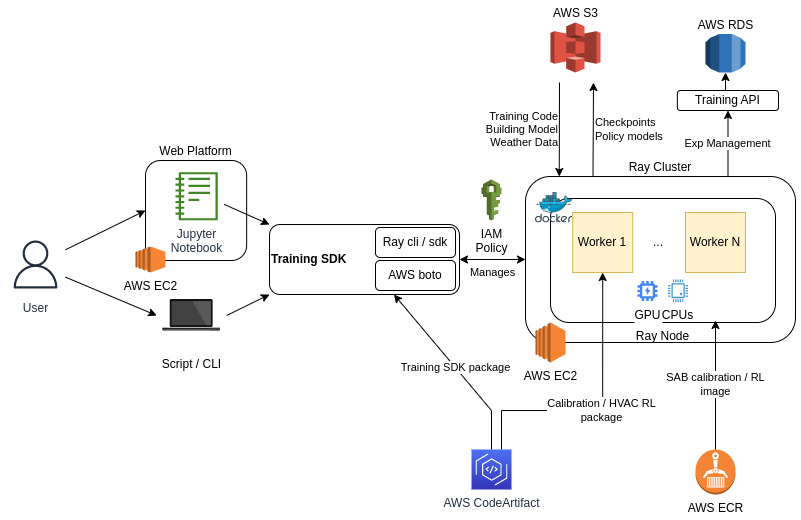

Ray Tune and RLlib provide us with the ability to distribute training across multiple CPUs and machines in a cluster.

Ray Tune is particularly effective for our calibration engine, which uses a Tune optimizer to find the set of twin parameters that minimizes the error between actual and simulated building data. Each sample requires running a simulation over a whole calibration period and can therefore last several seconds. Ray distributes samples across many CPUs, which drastically reduces search time. We also benefit from the optimized schedulers available in Tune, such as Hyperband, which automatically allocate cluster resources to the most promising samples.
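The calibration loop can be sketched without Ray as a single successive-halving bracket, the building block that Hyperband repeats with different budget trade-offs. This is a minimal sketch, not Tune’s implementation: the 1R1C thermal model, its parameters `R` and `C`, the synthetic "measured" data and the candidate grid are all hypothetical, and a thread pool stands in for the Ray workers that would evaluate samples on separate CPUs (in Tune, this role is played by a scheduler such as `HyperBandScheduler` or `ASHAScheduler`). Each candidate is first scored on a short slice of the calibration period, and only the best third is re-simulated on a longer slice.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from itertools import product

DT_HOURS = 1.0  # simulation time step


def simulate(params, outdoor, heating, t_init=20.0):
    """Hypothetical 1R1C thermal model standing in for the physics engine."""
    resistance, capacitance = params
    t_in = t_init
    trace = []
    for t_out, power in zip(outdoor, heating):
        # Heat flows in from outside through R and from the HVAC system.
        t_in += DT_HOURS / capacitance * ((t_out - t_in) / resistance + power)
        trace.append(t_in)
    return trace


def rmse(simulated, measured):
    return math.sqrt(sum((s - m) ** 2 for s, m in zip(simulated, measured)) / len(measured))


def calibration_error(params, outdoor, heating, measured, budget):
    """Simulate only the first `budget` hours of the calibration period."""
    simulated = simulate(params, outdoor[:budget], heating[:budget])
    return rmse(simulated, measured[:budget])


def successive_halving(candidates, outdoor, heating, measured,
                       min_budget=24, eta=3, max_workers=4):
    """Keep the best 1/eta of the candidates, then give survivors eta times more budget."""
    survivors = list(candidates)
    budget = min_budget
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while True:
            budget = min(budget, len(measured))
            # Evaluate all survivors concurrently on the current budget.
            errors = list(pool.map(
                lambda p: calibration_error(p, outdoor, heating, measured, budget),
                survivors))
            ranked = sorted(zip(errors, survivors))
            if len(survivors) == 1 or budget == len(measured):
                best_error, best_params = ranked[0]
                return best_params, best_error
            survivors = [p for _, p in ranked[:max(1, len(survivors) // eta)]]
            budget *= eta


# Synthetic one-week calibration period (hypothetical weather and heating schedule).
HOURS = 7 * 24
outdoor = [5.0 + 5.0 * math.sin(2 * math.pi * h / 24) for h in range(HOURS)]
heating = [3.0 if 7 <= h % 24 < 19 else 0.0 for h in range(HOURS)]
measured = simulate((2.0, 5.0), outdoor, heating)  # stands in for meter data

grid = list(product([1.0, 2.0, 3.0], [2.5, 5.0, 10.0]))
best, error = successive_halving(grid, outdoor, heating, measured)
print(best, error)
```

Because the synthetic data was generated with `R = 2.0` and `C = 5.0`, which are in the grid, the search recovers those values with zero error; with real meter data the error would never reach zero. Threads do not speed up CPU-bound Python because of the GIL, which is one reason to distribute samples across processes and machines instead.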

We also make heavy use of Ray RLlib to run many environments in parallel during RL training. Each training batch can require tens of thousands of samples, and a single time step is CPU-intensive because of the physics engine calculations, so a GPU would sit idle most of the time waiting for data. With Ray, we can keep GPUs busy in clusters of hundreds of CPUs and significantly reduce training wall time.
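The sampling pattern can be illustrated with a minimal sketch under stated assumptions: `SlowThermalEnv`, the `thermostat` policy and the fragment length are hypothetical, `time.sleep` stands in for physics computation, and threads stand in for RLlib’s CPU workers. Sleeping releases the GIL, so threads overlap the waits here, whereas real CPU-bound simulation needs separate processes, which is what Ray provides. Each environment produces a fixed-length fragment of samples, and the fragments are concatenated into one training batch that a learner (on a GPU in Foobot’s setup) would consume.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


class SlowThermalEnv:
    """Hypothetical environment whose step() blocks like a costly simulator call."""

    def __init__(self, seed, step_cost_s=0.01):
        self.rng = random.Random(seed)
        self.step_cost_s = step_cost_s
        self.temp = 20.0

    def reset(self):
        self.temp = 20.0
        return self.temp

    def step(self, action):
        time.sleep(self.step_cost_s)  # stand-in for seconds of physics computation
        self.temp += 0.5 * (action - 0.5) + self.rng.uniform(-0.1, 0.1)
        reward = -abs(self.temp - 21.0)  # penalize distance from a 21 °C target
        return self.temp, reward


def thermostat(obs):
    """Placeholder policy: heat (1.0) below 21 °C, otherwise do nothing (0.0)."""
    return 1.0 if obs < 21.0 else 0.0


def collect_fragment(env, policy, length):
    """Roll out one environment for `length` steps and record (obs, action, reward)."""
    obs = env.reset()
    samples = []
    for _ in range(length):
        action = policy(obs)
        next_obs, reward = env.step(action)
        samples.append((obs, action, reward))
        obs = next_obs
    return samples


def collect_batch(envs, policy, fragment_len):
    """Step every environment concurrently and concatenate the fragments into one batch."""
    with ThreadPoolExecutor(max_workers=len(envs)) as pool:
        fragments = pool.map(lambda env: collect_fragment(env, policy, fragment_len), envs)
        return [sample for fragment in fragments for sample in fragment]


envs = [SlowThermalEnv(seed=i) for i in range(8)]
start = time.perf_counter()
batch = collect_batch(envs, thermostat, fragment_len=10)
elapsed = time.perf_counter() - start
print(f"{len(batch)} samples in {elapsed:.2f}s")
```

With 8 environments, fragments of 10 steps and 10 ms per step, a sequential loop would take about 0.8 s, while the concurrent version takes roughly the time of a single fragment. The batch size is the product of the number of environments and the fragment length, which is why adding CPUs is the main lever for feeding the learner faster.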

Ray also provides the necessary abstractions, such as actors and data locality, transparently, and lets us move from local development to hundreds of machines with little effort. For example, we used distributed queues to redesign some of our RL environments, which gives us more flexibility in how our simulator API communicates with the Gym environment.
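One way to use queues for this is to let the simulator keep control of its own time loop and exchange observations and actions with the environment through two queues. The sketch below uses the standard library’s `queue.Queue` and a thread; Ray offers `ray.util.queue.Queue`, an actor-backed queue that tasks and actors in different processes or on different nodes can share. `CallbackSimulator`, `QueueEnv` and the toy temperature response are hypothetical, and `step` returns a simplified `(obs, reward, done)` tuple rather than Gymnasium’s five-value signature.

```python
import queue
import threading


class CallbackSimulator:
    """Hypothetical simulator that owns its time loop and calls back once per step."""

    def __init__(self, n_steps, on_step):
        self.n_steps = n_steps
        self.on_step = on_step

    def run(self):
        temp = 20.0
        for _ in range(self.n_steps):
            setpoint = self.on_step(temp)  # blocks until the agent has answered
            temp += 0.5 * (setpoint - temp)  # toy first-order response


class QueueEnv:
    """Gym-style wrapper: observations and actions travel through two queues."""

    def __init__(self, n_steps):
        self.n_steps = n_steps

    def reset(self):
        self.obs_q = queue.Queue(maxsize=1)
        self.act_q = queue.Queue(maxsize=1)
        sim = CallbackSimulator(self.n_steps, self._on_step)
        threading.Thread(target=self._run, args=(sim,), daemon=True).start()
        return self.obs_q.get()  # first observation produced by the simulator

    def _on_step(self, temp):
        self.obs_q.put(temp)
        return self.act_q.get()

    def _run(self, sim):
        sim.run()
        self.obs_q.put(None)  # sentinel: the simulation period is over

    def step(self, action):
        self.act_q.put(action)
        obs = self.obs_q.get()
        done = obs is None
        reward = 0.0 if done else -abs(obs - 21.0)
        return obs, reward, done


env = QueueEnv(n_steps=3)
trajectory = [env.reset()]
done = False
while not done:
    obs, reward, done = env.step(21.0)
    trajectory.append(obs)
print(trajectory)  # [20.0, 20.5, 20.75, None]
```

The simulator blocks inside its callback until the agent answers, so neither side needs to know how the other schedules its work; a `None` observation marks the end of the simulation period.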

Building efficient, customizable RL environments can be challenging when complex simulation engines are involved, so we open-sourced our environment (see: https://github.com/airboxlab/rllib-energyplus).

Finally, the Ray command-line interface simplifies the management of our training clusters. We built an internal platform that uses the Ray CLI on top of AWS to manage the entire life cycle of multiple clusters, each of which can run several experiments in parallel. Out of the box, Ray supports many of the AWS and Docker options we needed, so we can handle important aspects such as continuous testing and delivery of training components (Docker images, internal SDKs) with minimal custom development. In the end, both our cloud bill and our operational burden are drastically reduced.

Fig. 2 Components of the training platform

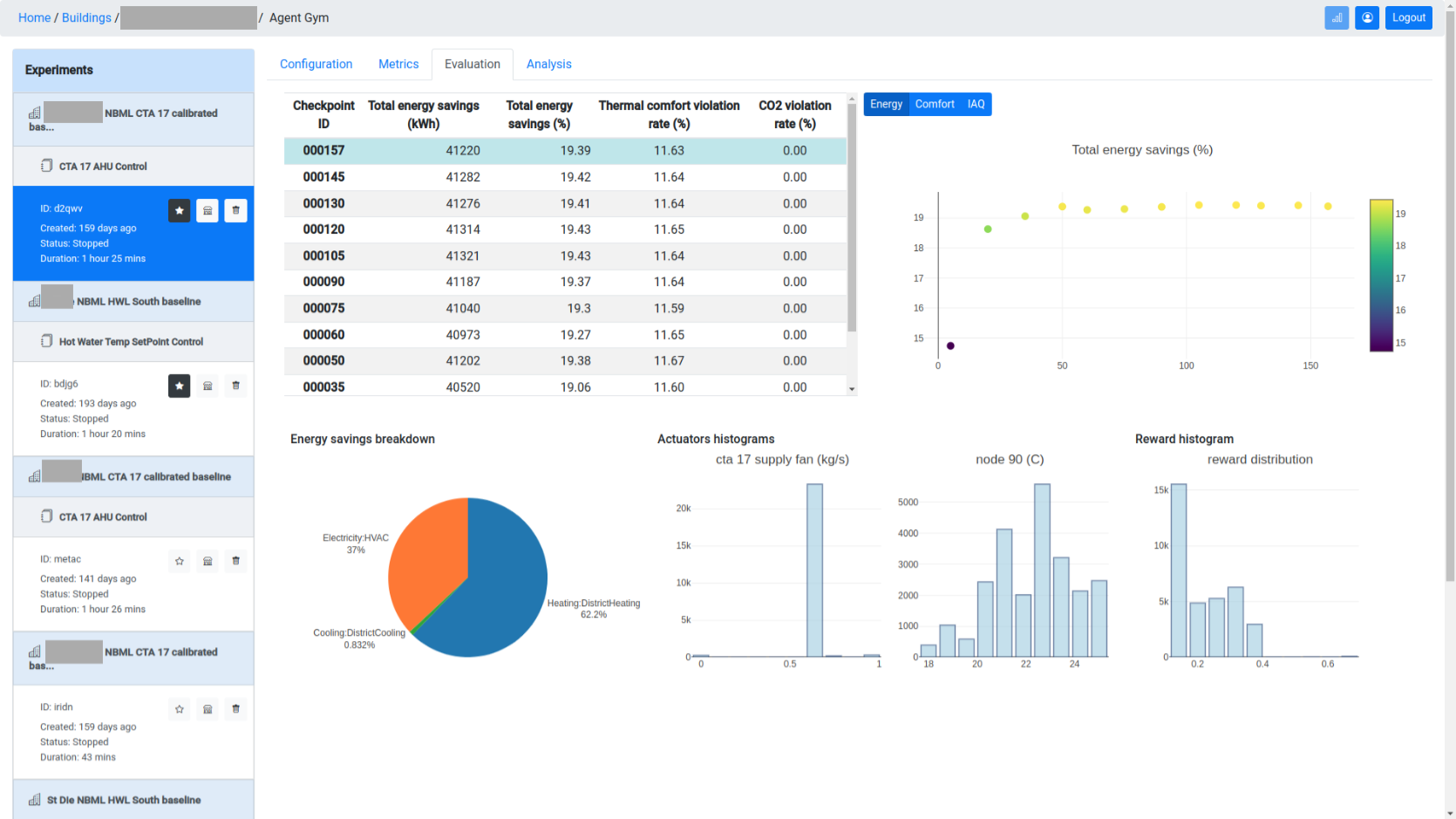

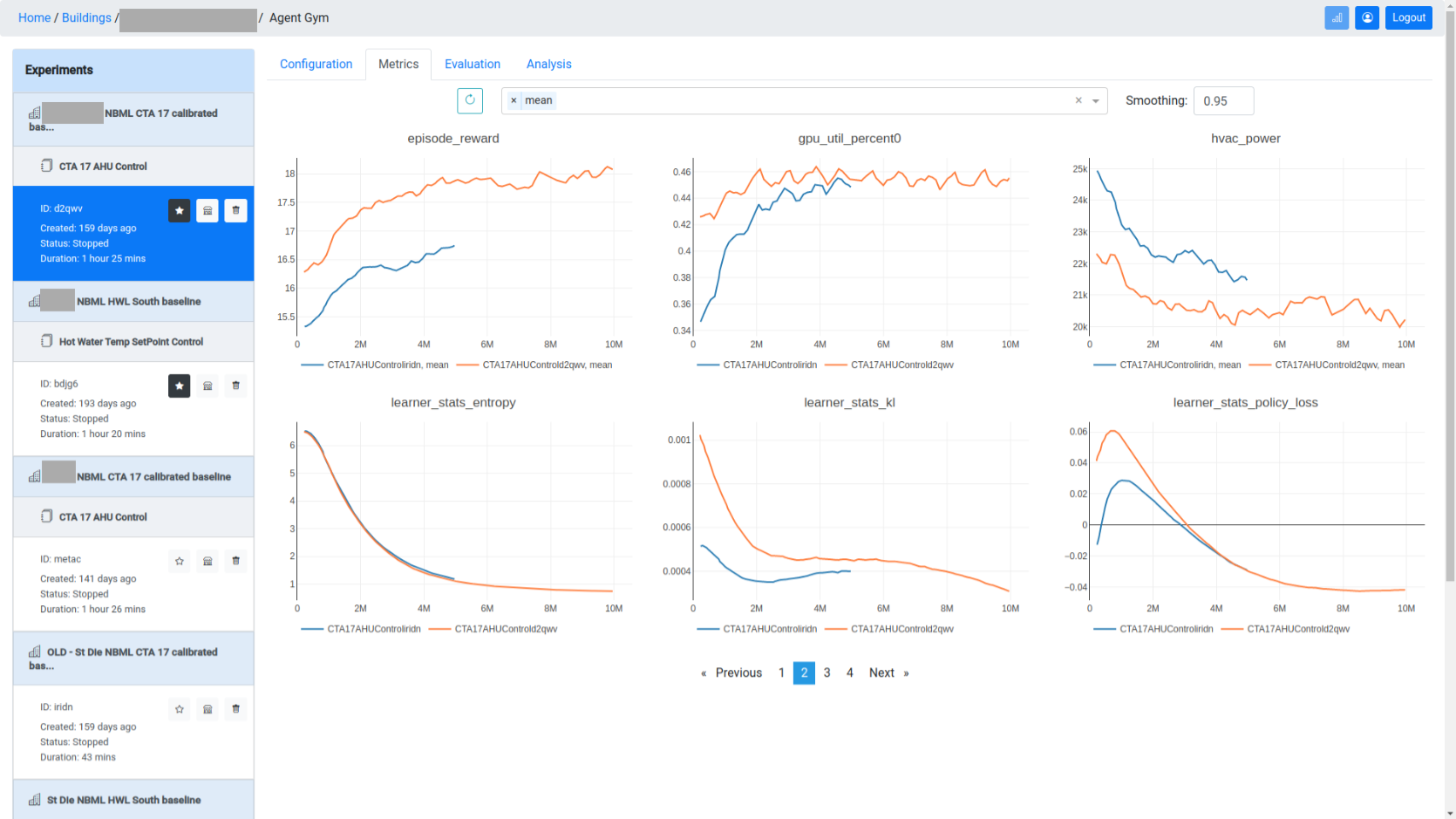

Fig. 3 Views from Foobot Training Platform