How Anastasia accelerated their ML processes 9x with Ray and Anyscale

A view of the Anastasia Platform for forecasting using AI.

Juan Roberto Honorato is an AI Tech Lead who has worked at Anastasia.ai since its inception more than four years ago. There he helps develop an ML-driven platform that delivers business solutions to its customers. Anastasia’s mission is to democratize AI for every business, which requires scalable and cost-effective technologies. This has recently led him to become an active Ray advocate.

Anastasia.ai provides a powerful platform that enables organizations to operate AI capabilities at scale with a fraction of the resources and effort traditionally required. This post covers a demand prediction problem we faced and how solving it with Ray led to astonishing results: compared to our AWS Batch implementation, our Ray implementation is 9x faster and costs 87% less.

This blog explores the following:

What the Anastasia Platform does.

How we initially tried to solve our demand prediction problem using Python’s built-in parallel modules.

How we realized we needed to scale training and hyperparameter tuning horizontally, and quickly.

How Ray helps us scale easily.

How Anyscale lets us optimize the system even further.

What the Anastasia Platform Does

Anastasia’s customers are operationally intensive businesses of all sizes that need to forecast something into the future, e.g. demand, stock-outs, material usage, or spare parts needs. Using historical data and context information, we train a model that learns the underlying dynamics in order to forecast future events.

As an example, our customers need to forecast things as diverse as:

The number of spare parts to purchase for ATM maintenance.

How many bicycles will be sold in the month of August.

The total amount of salad dressing products to stock.

How many small T-shirts of a certain Stock-Keeping-Unit (SKU) to buy for the spring season.

By better forecasting how sales (or other events) will unfold based on previous trends, our customers can transform their business substantially, reducing expenses and waste and increasing margins.

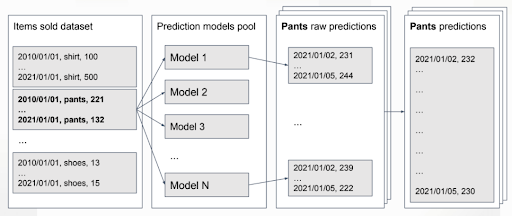

At the heart of the Anastasia Platform is our AI Core library (more details about this in a future post!), and our shining star is our time series forecasting engine. We train a multi-model ensemble for every item or item group (users can control this grouping), and make it effortless for customers to train and retrain forecasting models over time as conditions change on the ground.
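To make the per-item (or per-group) ensemble idea concrete, here is a minimal sketch in plain Python. The three base forecasters, the equal-weight averaging, and the helper names (`ensemble_forecast`, `forecast_by_group`) are hypothetical stand-ins chosen for illustration; the post does not say which models the AI Core library actually combines. The sketch also assumes every item history has the same length.

```python
from statistics import mean


# Hypothetical base forecasters; each maps a history to `horizon` future values.
def naive(history, horizon):
    """Repeat the last observed value."""
    return [history[-1]] * horizon


def moving_average(history, horizon, window=3):
    """Repeat the mean of the last `window` observations."""
    return [mean(history[-window:])] * horizon


def seasonal_naive(history, horizon, season=4):
    """Repeat the value from one season earlier (needs len(history) >= season)."""
    return [history[-season + (h % season)] for h in range(horizon)]


BASE_MODELS = [naive, moving_average, seasonal_naive]


def ensemble_forecast(history, horizon):
    """Equal-weight ensemble: average the base forecasts step by step."""
    forecasts = [model(history, horizon) for model in BASE_MODELS]
    return [mean(step) for step in zip(*forecasts)]


def forecast_by_group(series_by_item, group_of, horizon):
    """Sum item histories into user-defined groups, then build one ensemble per group."""
    groups = {}
    for item, history in series_by_item.items():
        key = group_of(item)
        if key in groups:
            groups[key] = [a + b for a, b in zip(groups[key], history)]
        else:
            groups[key] = list(history)
    return {key: ensemble_forecast(hist, horizon) for key, hist in groups.items()}


# Usage: forecast per SKU, or per product line by changing the grouping function.
sales = {
    "tshirt-S": [10, 12, 14, 11, 10, 13, 15, 12],
    "tshirt-M": [20, 22, 25, 21, 20, 23, 26, 22],
}
per_item = forecast_by_group(sales, lambda item: item, horizon=2)
per_line = forecast_by_group(sales, lambda item: item.split("-")[0], horizon=2)
```

Because every base model in this toy is linear, the per-line forecast is simply the sum of the per-SKU forecasts; with nonlinear models such as XGBoost or RNNs, the grouping choice would generally change the result.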

Our Initial Pure Python Approach

Originally, we had one EC2 instance for each type of model, with work parallelized within each instance using Python’s built-in parallel modules. The instances reported full CPU usage. We were initially happy with the results because we considered the code very optimized, but we soon hit a limit.

Vertical scaling was maxed out, and big machines mean big costs. We couldn’t scale down, so costs added up. Additionally, large datasets took a very long time to process. We also faced vendor lock-in and wanted a more flexible system that we could build on over time.

The need to scale horizontally

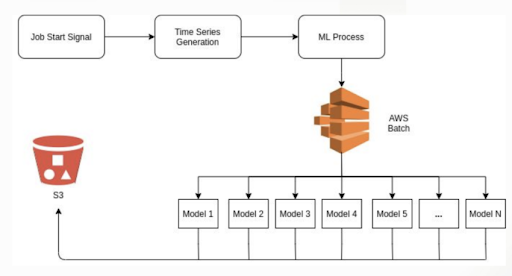

Our next attempt was horizontally scaling using AWS Batch. Unfortunately, we found this would require large code changes, and even then we couldn't scale automatically or use spot instances.

A few other approaches we considered included:

AWS Batch’s multi-node parallel jobs: here, fault tolerance was difficult to achieve.

AWS EMR: This seemed designed for Spark workloads, and we found the overhead to be too high.

AWS SageMaker: We found it rigid, and extending it was hard to figure out. Scaling also wasn’t straightforward unless we used the built-in algorithms, and serving endpoints were expensive. We also feared vendor lock-in.

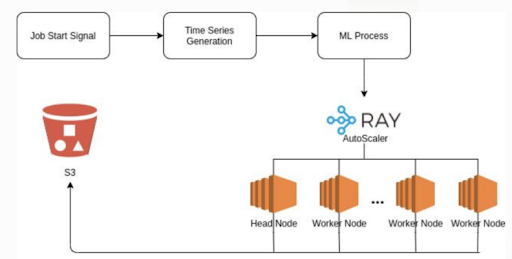

Eventually we landed on the Ray project and its built-in autoscaler. With Ray, only very small code changes were needed, and the autoscaler solved our scaling and cost issues elegantly and without any added effort.

Most notably, it unlocked Ray Tune for hyperparameter searches, letting us tune our models for the best performance. Ray Tune integrated seamlessly with our training code and with a variety of hyperparameter optimization libraries such as SigOpt and HyperOpt.

How Ray helps us scale easily

A rough depiction of how we scale our problem using Ray

Once a customer starts a forecasting job, our system generates the time series in numeric feature format and feeds them to the ML process actor on Ray. We train an ensemble of multiple models per item or group of items.

We found it best to subsample and run the hyperparameter search only on the most relevant time series in each customer’s dataset instead of on all of them. The selected time series captured most of the trends, which let us a) cut training time and cost, and b) prevent overfitting.
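The post does not say how "most relevant" is measured. As one plausible reading, this sketch assumes relevance is approximated by total historical volume and keeps the highest-volume series until they cover a target share of the customer's total volume. The function `select_relevant_series` and its `coverage` and `max_series` parameters are hypothetical, not Anastasia's actual selection rule.

```python
def select_relevant_series(series_by_item, coverage=0.8, max_series=None):
    """Return the highest-volume items until they cover `coverage` of total volume.

    "Relevance" is approximated by total historical volume, an assumption made
    for this sketch; `max_series` optionally caps the subsample size.
    """
    volumes = {item: sum(history) for item, history in series_by_item.items()}
    total = sum(volumes.values())
    ranked = sorted(volumes, key=volumes.get, reverse=True)

    selected, covered = [], 0.0
    for item in ranked:
        if covered >= coverage * total:
            break  # enough of the overall volume is already represented
        if max_series is not None and len(selected) >= max_series:
            break  # hard cap on how many series the search will see
        selected.append(item)
        covered += volumes[item]
    return selected


data = {
    "A": [100, 120, 110],
    "B": [50, 40, 60],
    "C": [5, 3, 4],
    "D": [1, 0, 2],
}
subsample = select_relevant_series(data)  # ['A', 'B']: the search would run only on these
```

The hyperparameter search then sees two series instead of four while still covering about 97% of the total volume; the remaining low-volume series would reuse the tuned settings.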

Then the ML pipeline starts, and the trained models are serialized to S3. This implementation lowers the development time for new models: anything we can run in Python works well with Ray, and we get scale out of the box.
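A minimal sketch of the serialization step, assuming models are plain picklable Python objects and using the standard-library `pickle` module. The post does not say which format or bucket layout Anastasia uses, so the key scheme and the helper names (`model_key`, `serialize_model`, `deserialize_model`) are hypothetical. The upload is shown only as a comment with boto3's `put_object` call so the snippet runs without AWS credentials.

```python
import pickle


def model_key(customer_id, job_id, group):
    """Hypothetical S3 key layout: one object per trained item/group model."""
    return f"models/{customer_id}/{job_id}/{group}.pkl"


def serialize_model(model):
    """Turn any picklable model object into bytes ready for upload."""
    return pickle.dumps(model, protocol=pickle.HIGHEST_PROTOCOL)


def deserialize_model(blob):
    """Rebuild the model; only unpickle bytes from a trusted source (e.g. your own bucket)."""
    return pickle.loads(blob)


model = {"type": "moving_average", "window": 3}  # stand-in for a trained model
key = model_key("acme", "job-42", "tshirt-S")
blob = serialize_model(model)
# The upload itself would be one boto3 call (not executed here), e.g.:
#   boto3.client("s3").put_object(Bucket="my-model-bucket", Key=key, Body=blob)
restored = deserialize_model(blob)
```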

Advantages of Ray Tune for hyperparameter search

Finally, we use Ray Tune when training the models. It is an easy way to wrap the training code we already had, and depending on the model type, we can pick the searcher that works best for us:

SigOpt running on Ray Tune for more complex XGBoost and RNN models

HyperOpt running on Ray Tune for simple statistical models

Ray Tune distributes the computation across workers and handles communication between them. Each worker then evaluates the next hyperparameter set recommended by the searcher (SigOpt or HyperOpt).
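This division of labor (a searcher proposes configurations; parallel workers evaluate them and report back) can be illustrated without Ray itself. The plain-Python sketch below uses a thread pool as a stand-in for Ray Tune's distributed workers and a toy random searcher in place of SigOpt or HyperOpt. `RandomSearcher`, `suggest`, `report`, `objective`, and `run_search` are hypothetical names, not Ray Tune's API.

```python
import random
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


class RandomSearcher:
    """Toy searcher with a suggest/report interface (hypothetical names)."""

    def __init__(self, space, seed=0):
        self.space = space  # parameter name -> (low, high), sampled uniformly
        self.rng = random.Random(seed)
        self.best = None  # (score, config) with the lowest score seen so far

    def suggest(self):
        """Return the next hyperparameter set to try."""
        return {name: self.rng.uniform(lo, hi) for name, (lo, hi) in self.space.items()}

    def report(self, config, score):
        """Record a finished trial; an adaptive searcher would update its model here."""
        if self.best is None or score < self.best[0]:
            self.best = (score, config)


def objective(config):
    """Hypothetical validation loss, minimized at learning_rate=0.1, l2_reg=1.0."""
    return (config["learning_rate"] - 0.1) ** 2 + (config["l2_reg"] - 1.0) ** 2


def run_search(searcher, objective, num_trials, max_workers=4):
    """Keep `max_workers` trials running; refill each free slot with a new suggestion."""
    submitted = 0
    in_flight = {}  # future -> config it is evaluating
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while submitted < num_trials or in_flight:
            while submitted < num_trials and len(in_flight) < max_workers:
                config = searcher.suggest()
                in_flight[pool.submit(objective, config)] = config
                submitted += 1
            done, _ = wait(list(in_flight), return_when=FIRST_COMPLETED)
            for future in done:
                searcher.report(in_flight.pop(future), future.result())
    return searcher.best


space = {"learning_rate": (0.001, 0.3), "l2_reg": (0.0, 5.0)}
best_score, best_config = run_search(RandomSearcher(space, seed=0), objective, num_trials=50)
print(f"best loss {best_score:.4f} with {best_config}")
```

Because the loop reports every finished trial before asking for the next suggestion, an adaptive searcher would always propose with the latest results in hand; the random searcher here simply ignores them.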

We found no other open-source hyperparameter search framework that simplified distributed execution this much. Ray Tune freed us from managing and scaling cloud resources for searches.

Immediate returns when using Ray

When we ran our Ray implementation and measured the results, we were quite pleased with what we found:

We managed to reduce cost by lowering the number of CPU cores used.

We got faster results because, instead of dedicating a Batch instance to each model, all instances serve all models. This means that every machine is busy all the time.
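The benefit of pooling can be seen with a toy scheduling model. This sketch compares a dedicated setup, where each model type gets its own fixed set of workers (loosely analogous to one Batch instance per model), against a shared pool where any worker can run any model's task. The task durations, worker counts, and the greedy longest-task-first scheduler are made-up assumptions, and the model ignores overheads such as data transfer and startup time.

```python
import heapq
from collections import defaultdict


def makespan(durations, workers):
    """Greedy list scheduling: each task goes to the worker that frees up first."""
    finish = [0.0] * workers  # min-heap of each worker's finish time
    heapq.heapify(finish)
    for d in sorted(durations, reverse=True):  # longest tasks first (LPT heuristic)
        heapq.heapreplace(finish, finish[0] + d)
    return max(finish)


def dedicated_makespan(tasks, workers_per_type):
    """Each model type runs only on its own workers; the job ends when the slowest type ends."""
    by_type = defaultdict(list)
    for model_type, duration in tasks:
        by_type[model_type].append(duration)
    return max(makespan(ds, workers_per_type) for ds in by_type.values())


def shared_makespan(tasks, total_workers):
    """Every worker can run any model type's task."""
    return makespan([d for _, d in tasks], total_workers)


# Hypothetical workload: three model types with very different training costs.
tasks = [("xgboost", 10)] * 8 + [("rnn", 30)] * 8 + [("stats", 1)] * 8
dedicated = dedicated_makespan(tasks, workers_per_type=4)  # 12 workers in total
shared = shared_makespan(tasks, total_workers=12)
```

In this toy workload the dedicated setup takes 60 time units, because the RNN workers are saturated while the statistical-model workers finish almost immediately and then sit idle; the shared pool spreads the same work across all 12 workers and finishes in 30.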

There was a noticeable improvement in per-core speed.

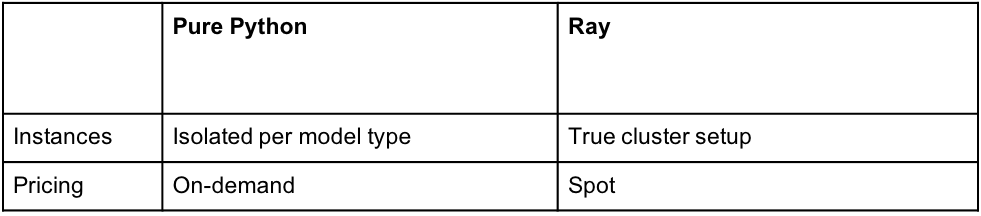

Pure Python vs Ray

Overall, for a test job consisting of 100,000 time series with 120 data points per item on 384 CPU cores, our Ray implementation is 9x faster and costs 87% less than our AWS Batch implementation (i.e., a job that cost $100 now costs $13!).

Further speed gains with Anyscale

We had originally reached out to the Ray developers at Anyscale for help in designing our system. Over time we decided to try their managed platform, and found a few advantages.

Using a managed platform is always a tradeoff between cost and our own development time, resources, and staffing. We wanted to focus on our core competencies rather than on infrastructure management or operations, and that is where Anyscale delivered gains for us.

Anyscale sped up our development cycles in a number of ways:

Spinning up a new cluster with Ray Client is faster.

Executing our local code on an Anyscale-managed cluster is as easy as changing an env var (RAY_ADDRESS=<cluster address>).

Job governance: we know who did what and when.

Persistent and clear logs for code logic, cluster autoscaling, virtual environments, etc.

Automatic code dependencies management via an easy CI/CD integration.

Production-like testing environment.

We’ve also found the Anyscale team to be exceptionally responsive, and the platform to gracefully handle new Ray releases to keep us on the cutting edge of new features without any concern that things will break.

Summary

In summary, we’re very happy with our choice of Ray & Anyscale.

The Ray ecosystem is very strong, and we could always find a way to do things through the forum or a simple Google search. Ray is an easy way to gain scaling capabilities, since writing Ray code feels much like writing normal Python code. The built-in autoscaler saved us a great deal of infrastructure development time and expertise, and this became even more favorable when using Anyscale.

If you’re a business that would like to give our AI-powered forecasting a try, please sign up for Anastasia here, and start taking control of your future!