LLM-based summarization: A case study of human, Llama 2 70b and GPT-4 summarization quality

TL;DR: In a blind test with a legal expert, Llama 2 70b was better at summarizing legislative bills than human legislative interns. GPT-4 was better than both (0.9 better on a 5-point scale), possibly because it had already been trained on the legislation, but also because it was better able to guess what the user wanted. Studying how GPT-4’s summaries outperformed Llama 2 70b’s gave us the insights we needed to improve the Llama 2 70b prompt. We believe this might be a generalizable approach to improving summarization once it is already better than human.

Details

One of the most immediate and practical applications of LLMs is summarizing text. But how does the quality compare to human summaries? And how do open source models compare to closed source models?

Fortunately, at Anyscale we are blessed to have outstanding legal counsel (Justin Olsson, Anyscale’s General Counsel) who is willing to be a human guinea pig for the sake of science. We took 28 legal bills from the BillSum dataset, where legislative interns created summaries. We gathered three summaries for each bill: the original summary written by the intern, one generated by Llama 2 70b and one generated by GPT-4. Justin then scored each summary on a scale from 1 to 5.

Note that we attempted to keep him from knowing whether a given summary was written by a human, GPT-4 or Llama 2 70b. As it turns out, while it may be a generally difficult problem to determine whether a single piece of text was written by AI, generative AI authors, like human authors, have clearly discernible styles, which made it impossible to treat the test as truly blind. To prove this point, he bet me that he could tell which summary was written by which author, and he indeed scored 100%. Whether this biases the results on which summary was better is an open question, though he tells us he doesn’t think he is biased against our arriving machine learning overlords.
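To make the procedure concrete, here is a minimal sketch of how such a rating setup could be organized: shuffle the three summaries for a bill so that position does not give away the author, then average the 1 to 5 scores per author. The function names (`blind_order`, `mean_score_by_author`) and the author labels are hypothetical and were not part of our actual tooling.

```python
import random
from collections import defaultdict
from statistics import mean


def blind_order(summaries, rng):
    """Shuffle {author: summary_text} into a list of (author, text) pairs.

    The rater would only be shown the text; the author label is kept
    aside so the scores can be attributed afterwards.
    """
    items = list(summaries.items())
    rng.shuffle(items)
    return items


def mean_score_by_author(ratings):
    """Average 1-5 scores per author from (author, score) pairs."""
    by_author = defaultdict(list)
    for author, score in ratings:
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} is outside the 1-5 scale")
        by_author[author].append(score)
    return {author: mean(scores) for author, scores in by_author.items()}


if __name__ == "__main__":
    # Placeholder texts and scores for illustration only, not study data.
    rng = random.Random(0)
    shown = blind_order(
        {"human": "summary A", "llama-2-70b": "summary B", "gpt-4": "summary C"},
        rng,
    )
    print([text for _, text in shown])
    print(mean_score_by_author([("human", 3), ("human", 4), ("gpt-4", 5)]))
```

As noted above, shuffling only hides the position of each summary; it cannot hide an author’s recognizable style.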

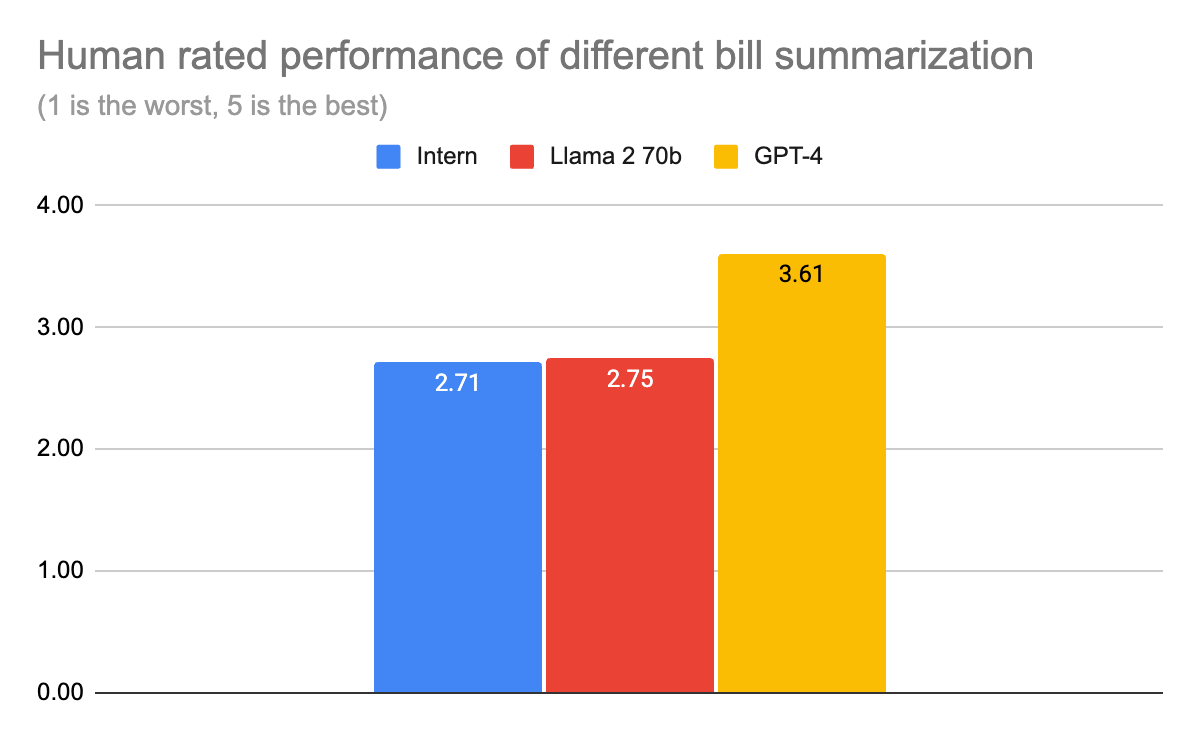

Here are the results:

We can see from these results that Llama 2 70b just slightly outperformed the humans but GPT-4 considerably outperformed both of them.

We then revealed to Justin which summaries were from whom. Justin noted that GPT-4 was at times absolutely amazing, though he was concerned that it might be hallucinating, as some summaries contained analysis not present in or discernible from the bills themselves.

How do you improve summarizations when you’re already superhuman?

Given that Llama 2 70b costs approximately 3% of what GPT-4 costs, is there a way we can improve the performance of Llama 2 70b?

We considered two possible approaches: modifying the prompt and adding a single example (i.e. one-shot). The second did not pan out because the example took up too much of the context window: Llama 2 70b has a 4K context window, some of the bills were more than 3000 tokens long, and you need enough room for both the example and the new legislation. So we focused on the first.
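The arithmetic behind that decision can be sketched as a simple token budget. Only the 4K window and the 3000-token bill length come from our experiment; the instruction length, the size of the one-shot example and the room reserved for the generated summary are illustrative assumptions, and `remaining_tokens` is a hypothetical helper.

```python
LLAMA2_CONTEXT_WINDOW = 4096  # the "4K context window" mentioned above


def remaining_tokens(bill_tokens, instruction_tokens, example_tokens=0,
                     summary_budget=0, context_window=LLAMA2_CONTEXT_WINDOW):
    """Tokens left after the prompt parts and the reserved output are counted.

    A negative result means the request does not fit in the window.
    """
    used = bill_tokens + instruction_tokens + example_tokens + summary_budget
    return context_window - used


if __name__ == "__main__":
    # Assumed sizes: 100 tokens of instructions, 500 reserved for the summary.
    zero_shot = remaining_tokens(3000, 100, summary_budget=500)
    # Assumed one-shot example: another 3000-token bill plus a 300-token summary.
    one_shot = remaining_tokens(3000, 100, example_tokens=3300, summary_budget=500)
    print(zero_shot)  # 496
    print(one_shot)   # -2804
```

Under these assumptions the zero-shot prompt fits with a few hundred tokens to spare, while a one-shot example built from a bill of similar length overflows the window by a wide margin.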

Justin identified four main issues with the Llama 2 70b summaries:

1. It kept using evasive or propositional language. If you took the summary literally, you’d walk away assuming that the effect of a law was to propose something rather than, after passage, to cause it to be the case. Strictly speaking, this is true of a bill before it is passed, but it is worth comparing how the human summaries handle this. They are typically structured as [Name of Bill] - [active verb describing what it does, e.g., “Amends” or “Authorizes and makes appropriations”], followed by a high-level description of the actual effect. In other words, the human understands the effect of the bill.

2. It would sometimes summarize all sections of the bill at the same level of detail, spending too much time on the introductory sections, which have no force or effect and are often just political grandstanding.

3. It leaned toward a section-by-section summary, when what most people care about in this context is the effect of the bill.

4. We think GPT-4 may have been drawing on outside information encoded in its parameters (reportedly 1.4 trillion of them) that Llama 2 70b lacked. For example, one of its summaries mentions that the bill became law on a particular date.

For example, consider the “Global Service Fellowship Program Act of 2007.” It includes a section on grants. GPT-4 does a great job of summarizing the aspects, e.g.

Grant provisions for fellowships ranging from 7 days to one year, with funding levels from $1,000 to $7,500.

Whereas the same section from Llama 2 70b had details that probably weren’t worth including in the summary such as:

The program will have the following components:

- Fellowships between 7 and 14 days in duration will be funded at levels of up to $1,000.
- Fellowships between 15 and 90 days in duration will be funded at levels of up to $2,500.
- Fellowships between 91 and 180 days will be funded at levels of up to $5,000.
- Fellowships between 181 days and one year will be funded at levels of up to $7,500.

We really can’t do anything about the fourth issue (GPT-4’s outside knowledge), but we can try to tweak the prompt to help address the first three.

Here is the original prompt:

System

You are an assistant that tries to extract the most important information from what is presented to you and summarize it factually, accurately and concisely. You use paragraphs and bullet points when helpful.

User

Summarize the following text:

{text}

Here is the tweaked prompt:

User

Summarize the following bill.

Focus your summary on the most important aspects of the bill. You do not have to summarize everything. Particularly focus on questions related to appropriation and the effects and impacts of the bill. However, you do not need to go into complex details, it is acceptable to provide ranges.

Use active verbs to describe the bill, like "amends" or "changes." Do not use ambivalent verbs like "proposes" or "suggests."

{text}
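As a rough sketch, the two prompts above could be assembled in code like this. The role/content message dictionaries follow the common chat-message convention and are an assumption about the serving API; `build_messages` is a hypothetical helper. Whether the system prompt is kept alongside the tweaked user prompt is left as a parameter here.

```python
SYSTEM_PROMPT = (
    "You are an assistant that tries to extract the most important "
    "information from what is presented to you and summarize it factually, "
    "accurately and concisely. You use paragraphs and bullet points when "
    "helpful."
)

ORIGINAL_USER_TEMPLATE = "Summarize the following text:\n\n{text}"

TWEAKED_USER_TEMPLATE = (
    "Summarize the following bill.\n\n"
    "Focus your summary on the most important aspects of the bill. "
    "You do not have to summarize everything. Particularly focus on "
    "questions related to appropriation and the effects and impacts of the "
    "bill. However, you do not need to go into complex details, it is "
    "acceptable to provide ranges.\n\n"
    'Use active verbs to describe the bill, like "amends" or "changes." '
    'Do not use ambivalent verbs like "proposes" or "suggests."\n\n'
    "{text}"
)


def build_messages(bill_text, user_template, system_prompt=None):
    """Return a chat-style message list for one summarization request."""
    messages = []
    if system_prompt is not None:
        messages.append({"role": "system", "content": system_prompt})
    # str.format only parses the template, so braces inside bill_text are safe.
    messages.append({"role": "user", "content": user_template.format(text=bill_text)})
    return messages


if __name__ == "__main__":
    for message in build_messages("SECTION 1. SHORT TITLE. ...", TWEAKED_USER_TEMPLATE):
        print(message["role"], "->", message["content"][:60])
```

Keeping the templates as constants makes it easy to run the same bill through both prompts and compare the resulting summaries side by side.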

Unfortunately, we can’t repeat the experiment, but Justin’s assessment of some of the worst summaries was that they were significantly improved.

For example, with the tweaked prompt, Llama 2 70b’s output showed more “summary-like” features, such as “The program will provide funding for fellowships that range from 7 days to 180 days in duration.”

Conclusions

We’ve shown that Llama 2 70b can match legislative interns in summary quality, but we also saw that GPT-4 outdid both of them.

However, using GPT-4’s summaries to guide how we tweaked the prompt did lead to an improvement in quality for Llama 2 70b.

We think this approach, using GPT-4 to extract ideas for strengthening the prompts of less capable models, is a powerful idea and an example of a trend we’re already seeing: GPT-4 is a key tool for refinement, development and evaluation, even if it is too expensive to use in the final product.