Ray Summit 2025: Anyscale Platform Updates

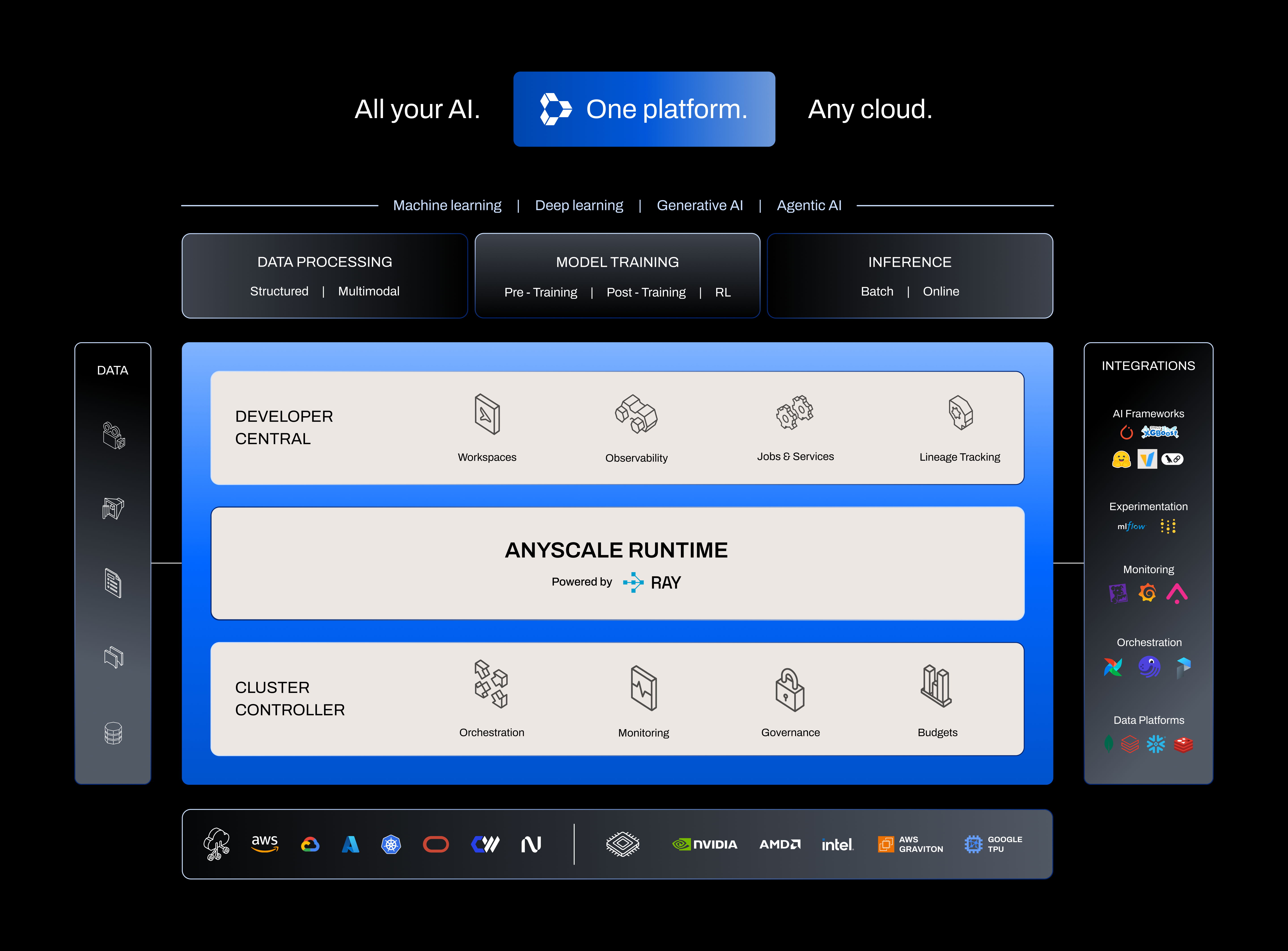

Ray Summit 2025 was packed with keynotes, deep dive breakouts, lightning demos, and hands-on training. The main keynote was filled with insights from AI builders at Google, vLLM, Meta, Microsoft, Nvidia, Cursor, and of course Anyscale, which unveiled major new updates across both Ray and the Anyscale Platform. On stage we introduced new capabilities across the Anyscale Platform that:

Accelerate developer velocity with the new Lineage Tracking for Anyscale-managed Ray compute clusters, which comes integrated with MLflow, Weights & Biases, and Unity Catalog.

Boost cost efficiency and performance with the new Anyscale Runtime, the API-compatible engine for Ray that speeds up data, training, and serving workloads without code changes.

Strengthen production resilience and availability across clouds with the new Anyscale on Azure, a first-party offering accessible from the Azure Portal, and with more advanced job scheduling through the Global Resource Scheduler (GRS) and the new version of Multi-Resource Clouds (MRC).

The Anyscale Platform where teams can run Ray on every AI workload, on one enterprise-grade platform, on any cloud

Developer Central

Most AI teams build their ML pipelines across a wide range of tools. New users fight setup guides, containers drift as they move from laptops to clusters, and data and model context lives scattered across dashboards and registries. Prototypes that work on a single node stall when exposed to real data volumes in production. Debugging turns into a chase, jumping between logs, metrics, and code with no shared map of datasets, models, and compute. Meanwhile, platform teams carry the burden of addressing service tickets, managing clusters, and maintaining tooling with custom scripts. Developer Central is the answer to scalable AI development on top of Ray. Ray removes the scale limits, and Anyscale removes development friction with a unified experience for building, debugging, and deploying, always backed by scalable Ray clusters. With the suite of features in Developer Central, teams get:

Cloud IDE: develop in VS Code or Jupyter, install and cache packages, and easily connect to data through integrations with popular data stores that have the right secrets and policies in place.

Observability is built in with more scalable dashboards for tasks and dedicated dashboards for Ray libraries including Ray Data and Ray Train.

Self-service deployment with Jobs and Services, which support batch and online workloads respectively.

To continue expanding on this foundation of developer tools and infrastructure and accelerate development of production-ready AI, at Ray Summit 2025 we announced:

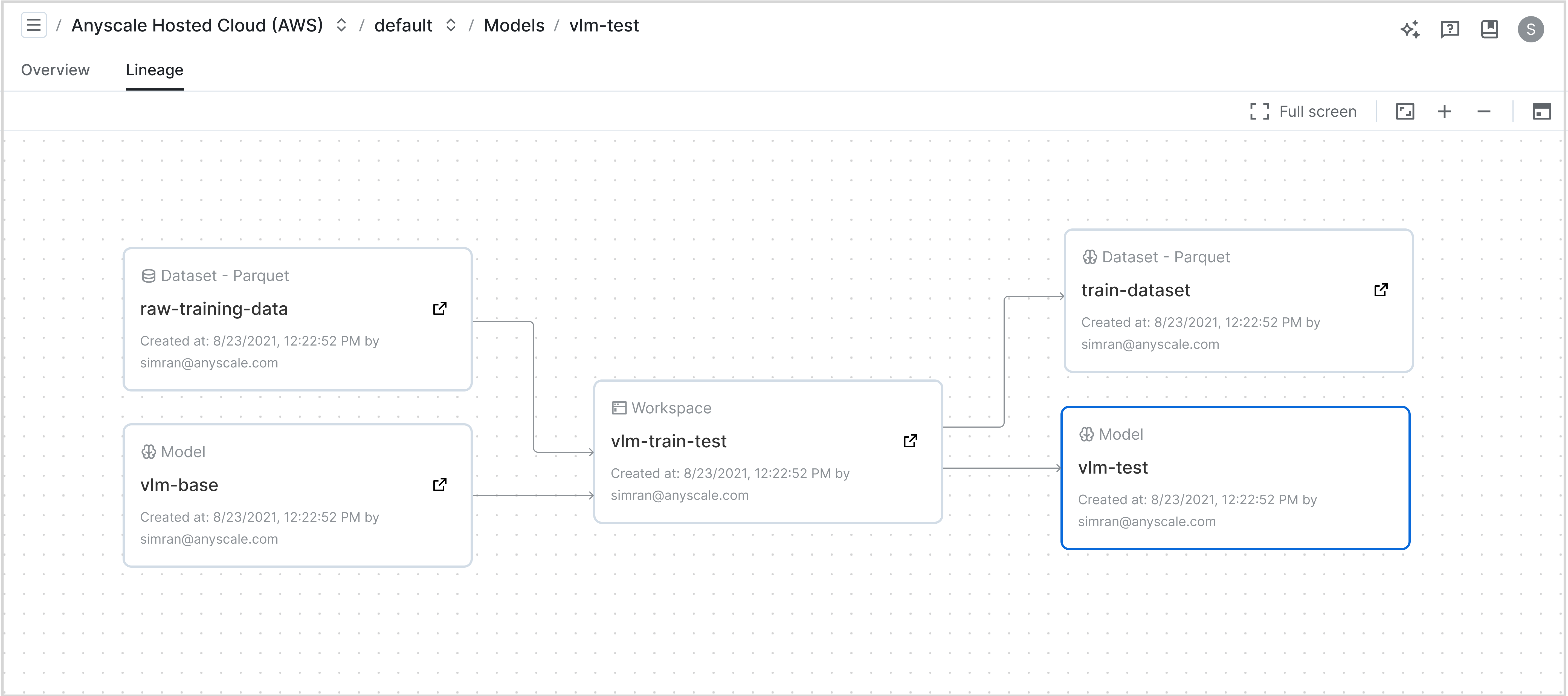

Lineage Tracking

When model behavior looks off or a compliance review is required, teams need to know which datasets, tables, and model versions were used by which Ray workloads, and with what parameters and code. Lineage Tracking lets teams visualize the pipeline with an interactive graph that maps datasets and models to the exact Workspaces, Jobs, and Services that produced or consumed them.

Built-in lineage graphs that visualize how datasets and models connect across Workspaces, showing inputs, outputs, and the compute jobs that link them.

For any run, teams can trace artifacts to the right workload with logs, parameters, environment, and code references in context, and can jump to data-based or model-based views through native integrations with Unity Catalog, MLflow, and Weights & Biases. The feature is built on OpenLineage, so lineage stays portable across tools and clouds. With these new capabilities, developers get a clear map to the right run, platform and MLOps teams can reproduce pipelines from prototype to production with fewer handoffs, and security and compliance teams gain end-to-end auditability of the model lifecycle. Now in private beta.

For a full deep dive and walkthrough of how to use this feature, read the announcement blog.
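To make the idea of tracing an artifact back to its workloads concrete, here is a minimal sketch of an upstream lineage walk in plain Python. It is not the Anyscale implementation or the OpenLineage client: the events are only loosely shaped like OpenLineage run events (a job with inputs and outputs), and every job name, URI, and function name below is a hypothetical placeholder.

```python
from collections import defaultdict

# Hypothetical run events, loosely shaped like OpenLineage run events.
# All job names and artifact URIs are made up for illustration.
EVENTS = [
    {"job": "workspace/clean-images",
     "inputs": ["s3://raw/images"],
     "outputs": ["uc://main.ml.images_clean"]},
    {"job": "job/train-classifier",
     "inputs": ["uc://main.ml.images_clean"],
     "outputs": ["mlflow://classifier/3"]},
    {"job": "service/classifier-api",
     "inputs": ["mlflow://classifier/3"],
     "outputs": []},
]


def build_producers(events):
    """Map each artifact to the events (workloads) that wrote it."""
    producers = defaultdict(list)
    for event in events:
        for artifact in event["outputs"]:
            producers[artifact].append(event)
    return producers


def upstream(artifact, events):
    """Return (jobs, artifacts) that `artifact` depends on, transitively."""
    producers = build_producers(events)
    jobs, artifacts, seen = [], [], set()
    stack = [artifact]
    while stack:
        current = stack.pop()
        for event in producers.get(current, []):
            if event["job"] not in jobs:
                jobs.append(event["job"])
            for source in event["inputs"]:
                if source not in seen:
                    seen.add(source)
                    artifacts.append(source)
                    stack.append(source)
    return jobs, artifacts


jobs, artifacts = upstream("mlflow://classifier/3", EVENTS)
print(jobs)       # workloads that led to the model version
print(artifacts)  # datasets the model version depends on
```

Walking the same events in the other direction (from a dataset to the workloads that consumed it) would answer the compliance question of which models and services were affected by a given table.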

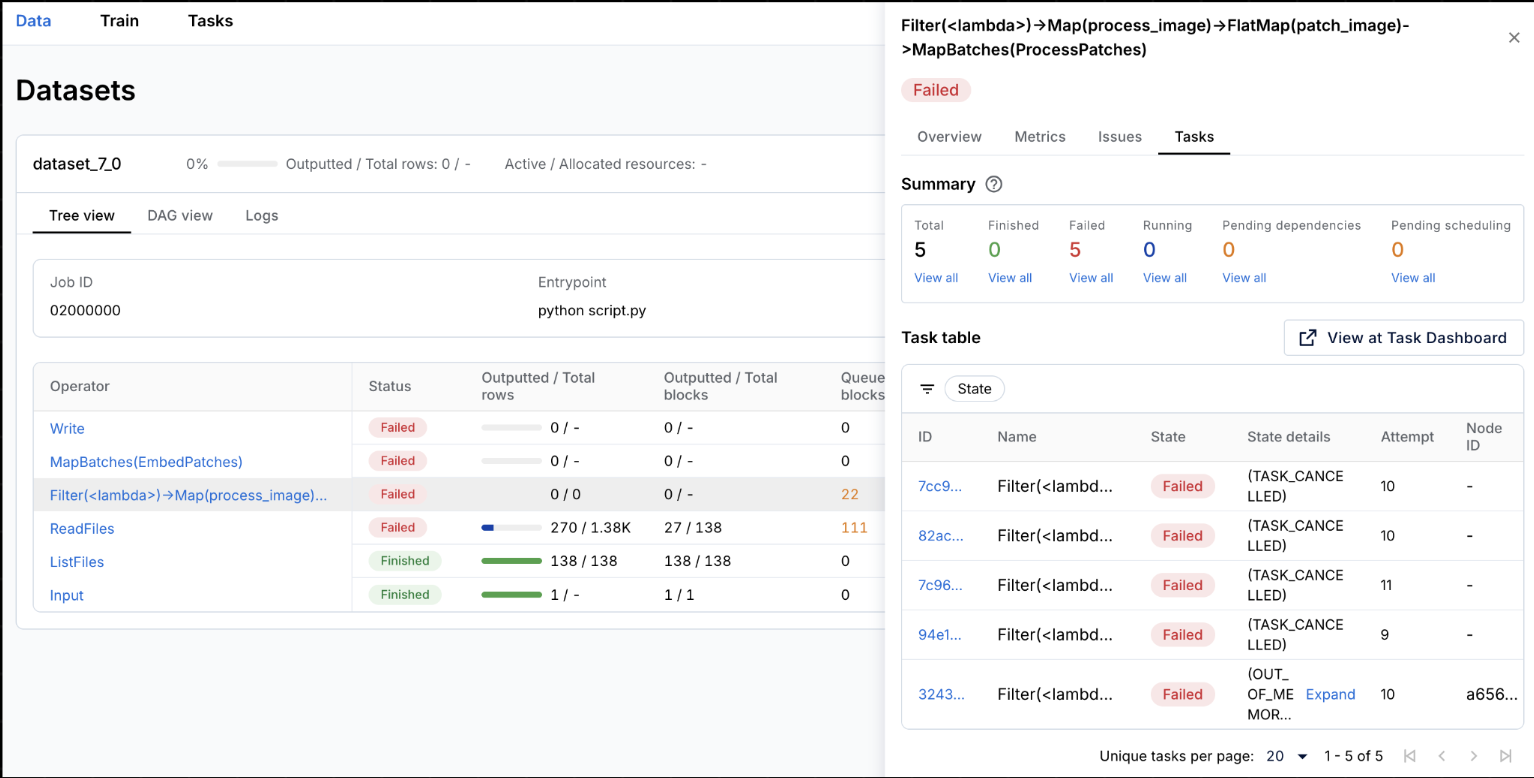

Observability: Ray Data, Ray Train, Task Dashboard & Cluster Dashboard

We are introducing new and improved dashboards in Anyscale that make it easier to understand and debug Ray workloads. These dashboards leverage the new Ray Event Export framework and add workload-specific views that highlight the most important insights for training and data jobs.

Troubleshoot and debug common production issues like fixing CPU OOM with Ray Workloads dashboards

With the persistent logs that power the dashboards, users can debug even after clusters shut down, explore detailed logs and metrics in one place, and use built-in profiling to pinpoint performance issues. The dashboards scale to thousands of tasks.

Ray Data and Ray Train dashboards are generally available, Task Dashboard is available in beta (opt-in required), and Cluster Dashboard enters beta soon.

Custom Workspace Templates

When you’re new to Anyscale, templates offer an easy way to get started — with prebuilt images, notebooks, and example code ready to run. But what if organizations could create their own to accelerate developer onboarding? Many teams today rely on long wiki pages and manual setup steps that often break.

Custom Workspace Templates solve this by packaging internal workflows into ready-to-use blueprints. Admins can create a template from a workspace or repo, set the container image and dependencies, attach compute configs, write a README, and publish at the Anyscale cloud level. Templates are versioned with drafts and reviews and appear in a shared catalog that supports deep links from internal docs. New workspaces inherit the right environment and policy, while existing ones stay unchanged. This results in faster onboarding, reusable setups, and consistent compliance with less maintenance. Now in beta. Learn how to create and use one.

Anyscale Runtime

Modern AI workloads — from multimodal data processing to distributed training and real-time inference — are pushing infrastructure to its limits, demanding efficient orchestration across heterogeneous (CPU + GPU) compute and rapid recovery from failure. Anyscale Runtime is a production-grade, Ray-compatible runtime built to meet those challenges — delivering higher resilience, faster performance, and lower compute cost vs. open-source Ray without requiring code changes or lock-in.

Teams running workloads like image inference, feature preprocessing, or online video processing have seen faster performance and dramatic gains in efficiency.

To see more details on the benchmarks below and how to replicate them, check out our Anyscale Runtime announcement blog.

| Workload | Benchmark Results |
|---|---|
| Image Batch Inference | 6x cheaper |
| Feature Preprocessing | 10x faster |
| Structured Data | 2.5x faster on select TPC-H queries |
| Reinforcement Learning | 8x more scalable in APPO |
| Video Serving (Online) | 40% faster per request |
| High Throughput Serving | 7x higher requests per second |

Cluster Controller

The modern compute landscape is fragmented. Teams juggle workloads between GPU reservations, on-demand availability, and spot capacity across regions and clouds while trying to keep spend in check and innovation high. For Ray users, that complexity grows further with the day-to-day work of deploying, scaling, upgrading, and maintaining healthy clusters, all while handling queues, preemption, and failover. This complexity results in underutilized GPUs and too much time spent managing infrastructure instead of shipping models and apps to production.

The Anyscale Cluster Controller removes that operational drag for AI teams looking to scale their AI initiatives with Ray. It is the control plane for reliable Ray operations at scale, abstracting complexity of managing compute resources across providers and regions, juggling priorities and budgets, and automating cluster lifecycle tasks from launch to repair. Health is monitored continuously, unhealthy nodes are replaced without disruption, preemption and failover are handled automatically by the platform, and governance and cost controls are all available in one place. Integrate with external observability tools like Datadog for further historical investigation. With this managed experience, clusters have faster starts, GPUs achieve higher utilization, and workloads run reliably wherever data and GPUs live, including AWS, GCP, and now natively as a first-party service on Azure.

To further support that, we announced:

Anyscale on Azure

Available in private preview, this new offering brings Anyscale to Azure as a first-party service. It enables enterprises and Azure developers to build and run AI workloads inside Azure's trusted and secure infrastructure, combining the flexibility of open source with the speed and trust they expect from a managed service on Azure. As part of the first-party experience, teams can provision Anyscale from the Azure Portal like any other Azure service. With Microsoft Entra ID integration for authentication and an architecture that runs Anyscale-managed Ray clusters on Azure Kubernetes Service (AKS) inside the customer account, teams can extend IAM, secure data access, and unified governance across all AI workloads. To learn more and request access to this new offering, check out this getting started guide.

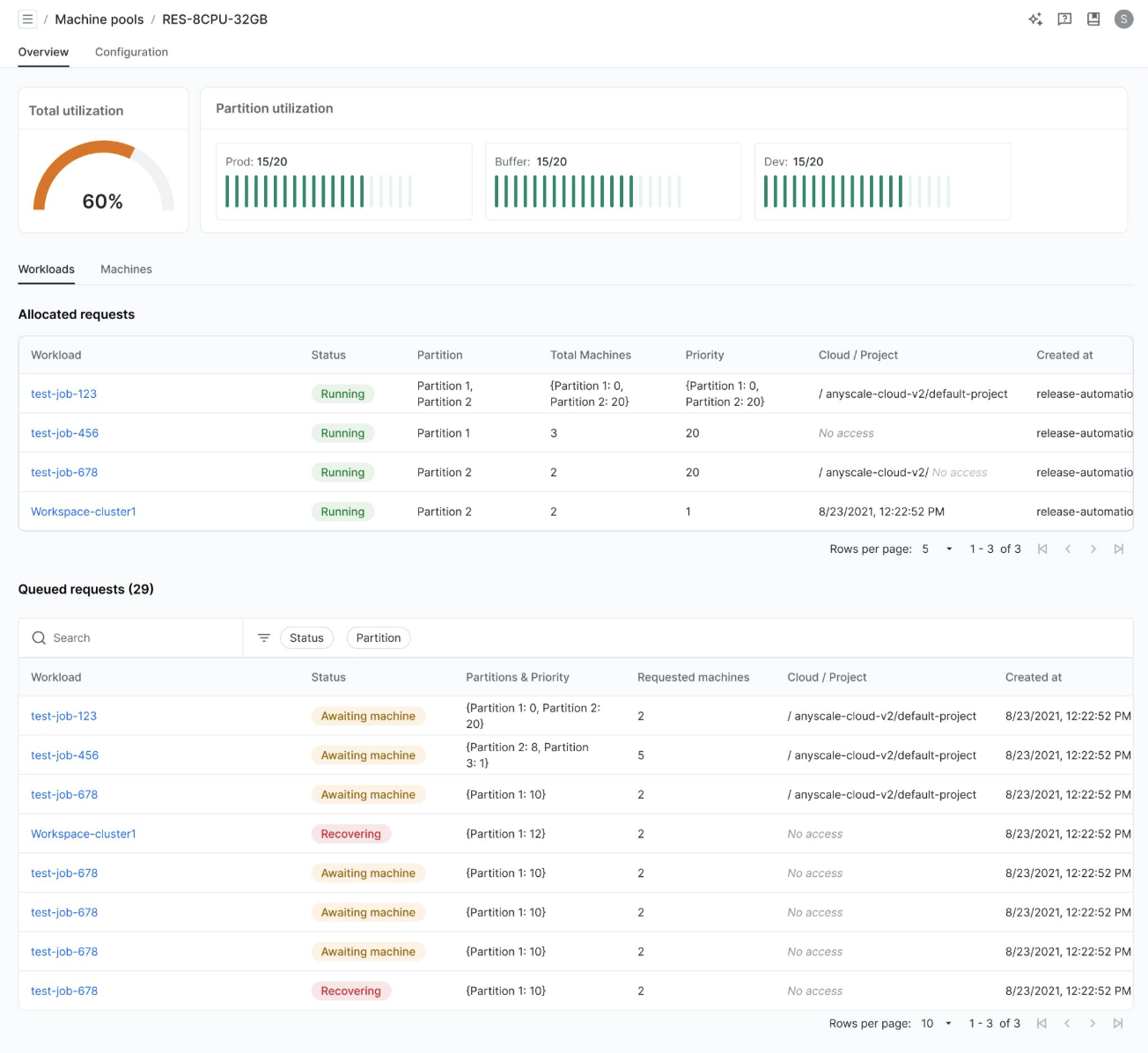

Global Resource Scheduler

Scheduling often feels like a black box with little insight into queue order, wait times, preemption causes, or whether heterogeneous compute sits idle. The Global Resource Scheduler assigns Anyscale workloads to fixed-capacity machine pools and partitions using admin-defined priorities and policies, then manages preemption to maximize utilization across reserved, on-demand, and spot capacity. In practice, this means a lower-priority experimental job can run on a reserved H200 pool, get safely preempted and shifted to on-demand or spot capacity the moment a production job requires the reservation, then move back once the higher-priority job scales down, all without manual intervention.

GRS automatically queues jobs and workspaces based on priority and executes them as resources become available.
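The preempt-and-return behavior described above can be sketched as a toy model. This is only an illustration under stated assumptions, not how GRS is implemented: the `Job` and `ReservedPool` classes, the "higher number means higher priority" convention, and the single `on_demand` fallback are all hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    priority: int          # assumed convention: higher number = more important
    gpus: int
    placement: str = "queued"


@dataclass
class ReservedPool:
    capacity: int          # fixed number of reserved GPUs
    running: list = field(default_factory=list)

    def free(self):
        return self.capacity - sum(job.gpus for job in self.running)

    def submit(self, job):
        """Place `job` on the reservation, preempting lower-priority jobs if needed.

        Returns the list of jobs that were shifted to on-demand capacity.
        """
        preemptible = sorted(
            (j for j in self.running if j.priority < job.priority),
            key=lambda j: j.priority,
        )
        # If preemption cannot free enough GPUs, fall back without disturbing anyone.
        if self.free() + sum(j.gpus for j in preemptible) < job.gpus:
            job.placement = "on_demand"
            return []
        preempted = []
        for victim in preemptible:
            if self.free() >= job.gpus:
                break
            self.running.remove(victim)
            victim.placement = "on_demand"
            preempted.append(victim)
        self.running.append(job)
        job.placement = "reserved"
        return preempted

    def finish(self, job, displaced):
        """Release `job` and move displaced jobs back, highest priority first."""
        self.running.remove(job)
        job.placement = "done"
        for other in sorted(displaced, key=lambda j: -j.priority):
            if other.placement == "on_demand" and self.free() >= other.gpus:
                self.running.append(other)
                other.placement = "reserved"


pool = ReservedPool(capacity=8)
experiment = Job("experiment", priority=1, gpus=8)
production = Job("production", priority=10, gpus=8)

pool.submit(experiment)               # experiment runs on the reservation
displaced = pool.submit(production)   # production takes the pool, experiment is shifted
pool.finish(production, displaced)    # experiment moves back to the reservation
print(experiment.placement)
```

The sketch checks whether preemption would actually free enough capacity before evicting anything, so a job that cannot fit never displaces lower-priority work for nothing.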

The new observability makes this behavior visible with utilization by partition and machine type, live queue stats for running and waiting jobs with average wait times, and preemption and machine history. Every workload row links to the job with priority, partition, machine, and recent queue events, while the Machines view shows which jobs are on which instances. Together, these views let platform teams forecast start times, reprioritize with confidence, explain delays, and keep expensive accelerators busy instead of idle. Currently in beta.

Multi-Resource Cloud (MRC)

Compute capacity is fragmented, volatile, and often in shortage. Chasing GPUs wherever they are available, across regions and providers, requires that teams maintain separate configs and tooling to deploy workloads across different infrastructure providers. Multi-Resource Cloud, currently in beta, extends the Anyscale Cloud so infra teams can hook up multiple cloud resources to one Anyscale cloud. These can be in the same cloud provider but different regions, in different cloud providers, or even on different stacks (VM or K8s).

For Jobs, Anyscale searches across all the defined cloud resources (e.g. cloud 1 in us-east using VMs) for the desired compute (e.g. H100s) and launches the job only if capacity is available. For Services, a single Service can run across resources in parallel, and global DNS routes traffic by latency, availability, cost, or rotation with automatic failover. You still have to consider data transfer and latency tradeoffs, but when the goal is to find any H200 and start, this scheduling model increases job start rates and reduces backlogs so teams can innovate without the limits of cloud providers or regions. It also lowers latency for users, improves availability, and makes better use of expensive accelerators without application changes.
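As a rough illustration of the job placement described above, the sketch below searches a list of cloud resources for one that can satisfy an accelerator request and declines to launch when none can. The `ComputeResource` class, the `place_job` function, the resource names, and the "first match in declared order" rule are assumptions made for the example; the source does not say how Anyscale orders its search.

```python
from dataclasses import dataclass


@dataclass
class ComputeResource:
    name: str        # hypothetical label for one resource attached to the cloud
    provider: str
    region: str
    stack: str       # "vm" or "k8s"
    free_gpus: dict  # accelerator type -> currently available count


def place_job(resources, accelerator, count):
    """Return the first resource that can fit the request, or None.

    Assumption: resources are tried in the order they are declared.
    """
    for resource in resources:
        if resource.free_gpus.get(accelerator, 0) >= count:
            return resource
    return None


# Made-up inventory: same provider in two regions, plus a second provider on K8s.
resources = [
    ComputeResource("aws-us-east-vm", "aws", "us-east-1", "vm", {"H100": 0}),
    ComputeResource("aws-us-west-vm", "aws", "us-west-2", "vm", {"H100": 16}),
    ComputeResource("gcp-k8s", "gcp", "us-central1", "k8s", {"H100": 8, "H200": 4}),
]

target = place_job(resources, "H100", 8)
print(target.name if target else "no capacity, job not launched")
```

A real policy would likely weigh cost, data locality, and transfer latency rather than taking the first match, which is the tradeoff the paragraph above calls out.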

Get started

Anyscale – All your AI. One platform. On any cloud.

Anyscale lets developers build and iterate faster with the Ray-compatible Anyscale Runtime, while platform teams avoid the burden of cluster setup, scaling, and upkeep. With higher performance at lower cost and production resilience built in, you can ship more, scale confidently, and run reliably across AWS, GCP, Azure, CoreWeave, Nebius or any Kubernetes environment.

Try Anyscale free with $100 and explore the multimodal AI pipeline template.

Join the Lineage Tracking launch webinar November 20th to see a live walkthrough.

Subscribe to our monthly newsletter for product updates and best practices.