Ray 1.11: Redisless Ray, a docs redesign, and Python 3.9 support

Ray 1.11 is here! The highlights in this release include:

Ray no longer starts Redis by default, paving the way for better fault tolerance and high availability in future releases.

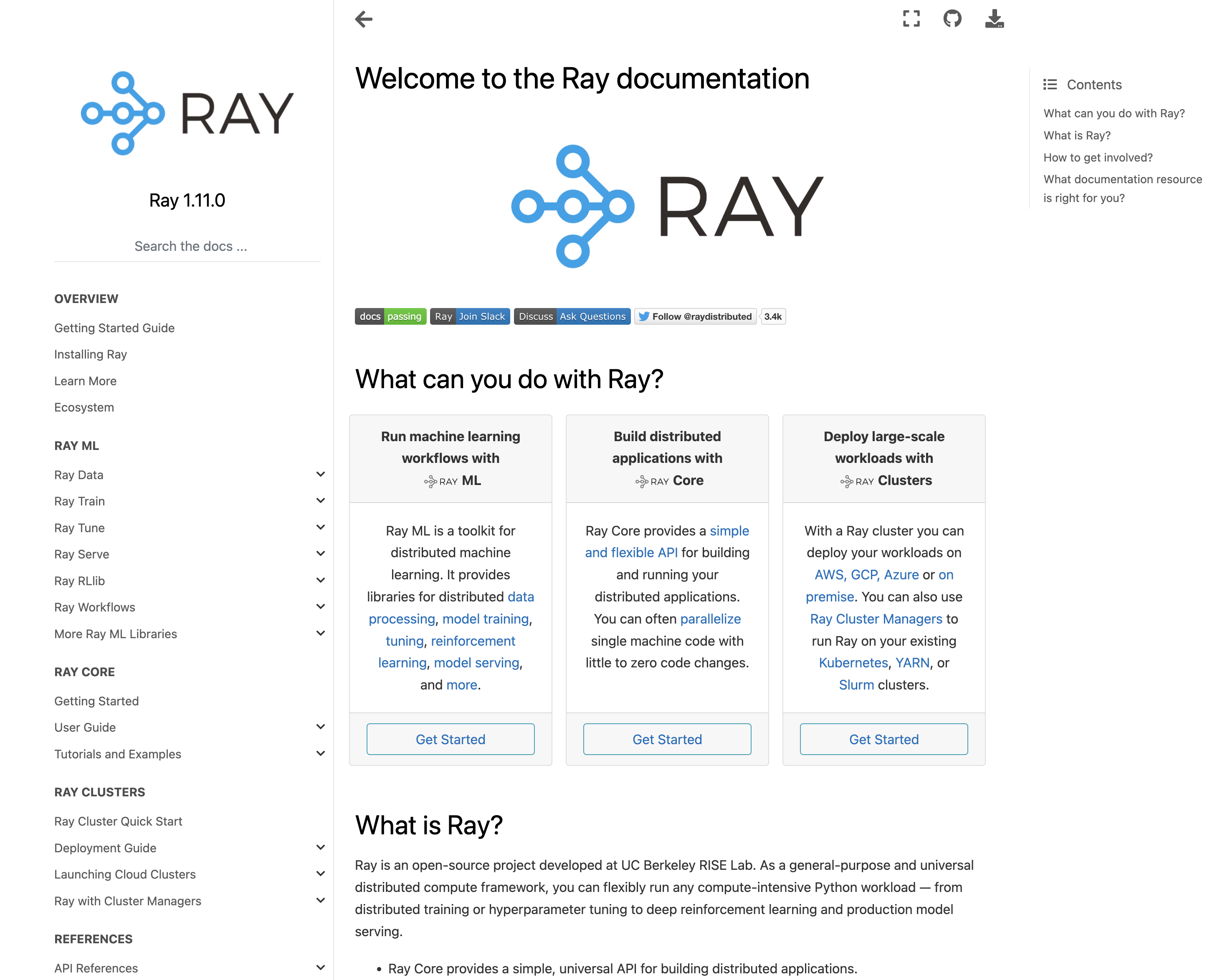

The Ray docs have a new, more intuitive structure, organized around the three primary ways we see the community using Ray: Ray ML, Ray Core, and Ray Clusters.

Ray and its core dependencies are now stable for Python 3.9, so Python 3.9 can be used with Ray in production.

You can run pip install -U ray to access these features and more. With that, let’s dive in.

Ray no longer launches Redis by default

Since its inception, Ray has relied on Redis to provide key-value storage and message pubsub. After moving object reference counting from GCS to workers in the Ray 1.0 re-architecture, data stored in Redis was primarily cluster-level metadata. Messages published and subscribed through Redis include actor/node/worker state notifications and logs forwarded to the driver. There are a few issues with these use cases for Redis in Ray:

Redis, as Ray runs it, is not persistent. For scenarios demanding fault tolerance, we would like to store cluster metadata in persistent storage.

Operations that modify cluster state always go through GCS. Offloading metadata storage from GCS to Redis is therefore unnecessary, and it adds operational burden when persistence is not required.

The Ray 1.x architecture white paper mentioned a plan to remove the Redis dependency and enable pluggable persistent storage. The Ray 1.11 release marks the first step by removing the default Redis runtime dependency. GCS now stores its metadata in its own data structures, and message pubsub uses Ray's own internal implementation, allowing us to focus on adding better support for fault tolerance and high availability in Ray.
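To make the shift more concrete, here is a toy Python model of the idea described above: a single component that keeps cluster metadata in its own in-memory tables and delivers pubsub notifications itself, with no external Redis process. `ToyControlStore` and its methods are hypothetical names chosen for illustration; this is a minimal sketch, not Ray's GCS implementation or API, and the real GCS tables, RPCs, and channels differ.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

# Hypothetical subscriber callback type: (key, value) -> None.
Callback = Callable[[str, str], None]


class ToyControlStore:
    """Toy model (not Ray's code) of a control store that keeps cluster
    metadata in its own tables and publishes change notifications itself."""

    def __init__(self) -> None:
        # table name -> {key: value}; held only in this process's memory,
        # so everything is lost if the process restarts (not persistent).
        self._tables: Dict[str, Dict[str, str]] = defaultdict(dict)
        # channel name -> list of subscriber callbacks
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callback) -> None:
        """Register a callback for updates to the table named `channel`."""
        self._subscribers[channel].append(callback)

    def update(self, table: str, key: str, value: str) -> None:
        # Every state change goes through this one component, so it can
        # store the update and notify subscribers in the same step.
        self._tables[table][key] = value
        for callback in self._subscribers[table]:
            callback(key, value)

    def get(self, table: str, key: str) -> Optional[str]:
        """Return the stored value, or None if the key is unknown."""
        return self._tables[table].get(key)


if __name__ == "__main__":
    store = ToyControlStore()
    received = []
    # For example, a driver subscribing to actor state notifications.
    store.subscribe("actor", lambda key, value: received.append((key, value)))
    store.update("actor", "actor-1", "ALIVE")
    print(store.get("actor", "actor-1"))  # ALIVE
    print(received)  # [('actor-1', 'ALIVE')]
```

Because the tables in this toy live only in process memory, restarting it loses all metadata. That limitation is exactly why the plan above calls for pluggable persistent storage in deployments that need fault tolerance.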

Another significant change concerns the Ray cluster address (the address passed to ray start --address=... and ray.init(...)). Previously, it was implicitly the Redis address; now it is the address of the cluster's GCS server.

Although Ray no longer stores metadata in Redis by default starting in version 1.11, Ray still supports Redis as an external store. Check out our follow-up blog post to read about the change in more detail. If you have further questions about the change, feel free to head to discuss.ray.io or the Ray Slack channel and discuss the issues with us!

A new Ray documentation experience

With version 1.11, we’re excited to roll out a new, redesigned experience in the Ray docs. The goal is to organize information more intuitively, centered around how users are actually using Ray. Here are a few of the major enhancements you’ll notice in the Ray docs:

The new overview page immediately presents you with three starting points, based on the three primary ways we see the community using Ray: running machine learning workloads with Ray ML, building distributed applications with Ray Core, and deploying large-scale workloads with Ray Clusters.

Clicking Get Started for Ray ML, Ray Core, or Ray Clusters will take you to a collection of resources for getting up and running with that aspect of Ray. The Getting Started Guide includes instructions for installing Ray as well as a collection of quick start guides for each Ray library.

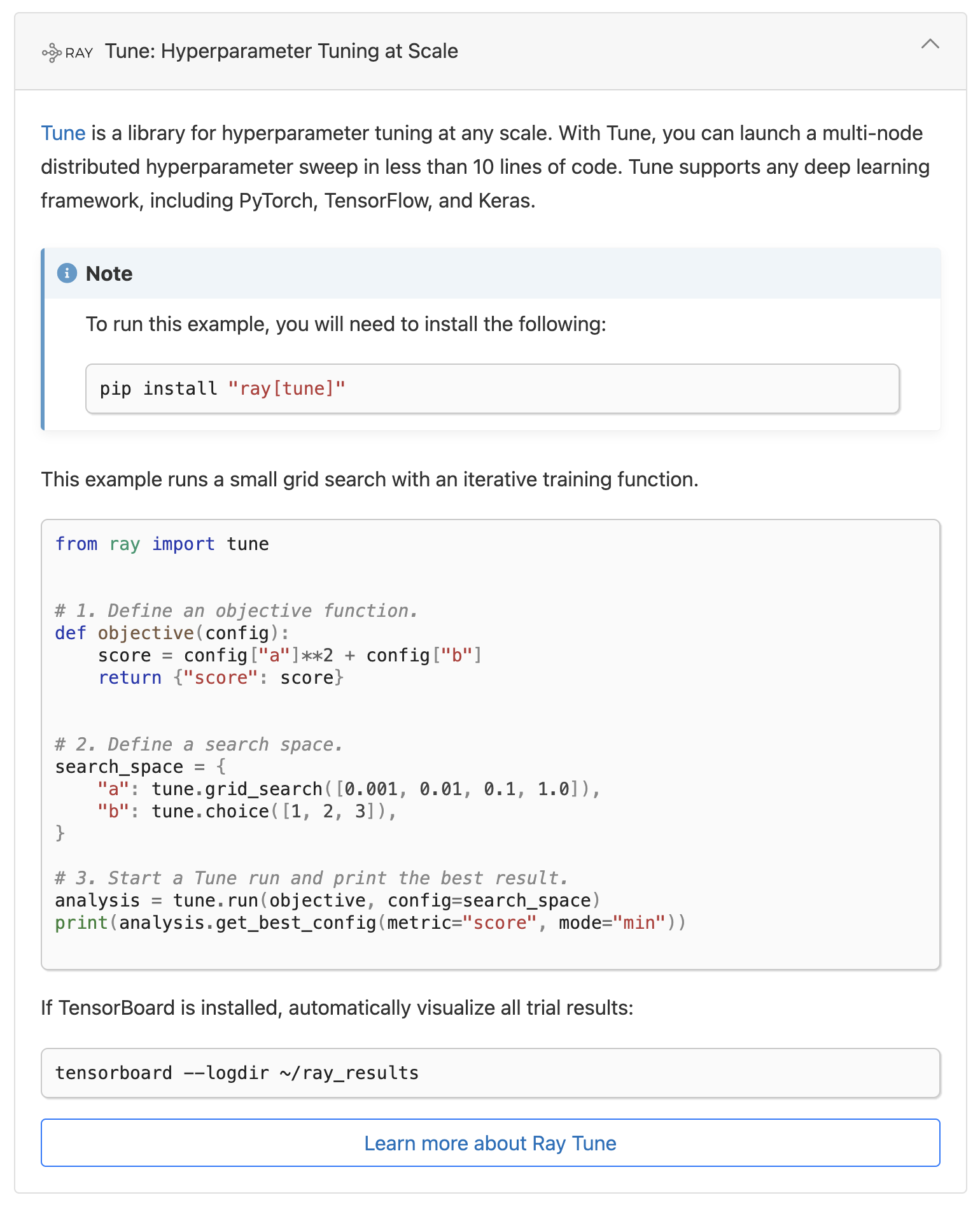

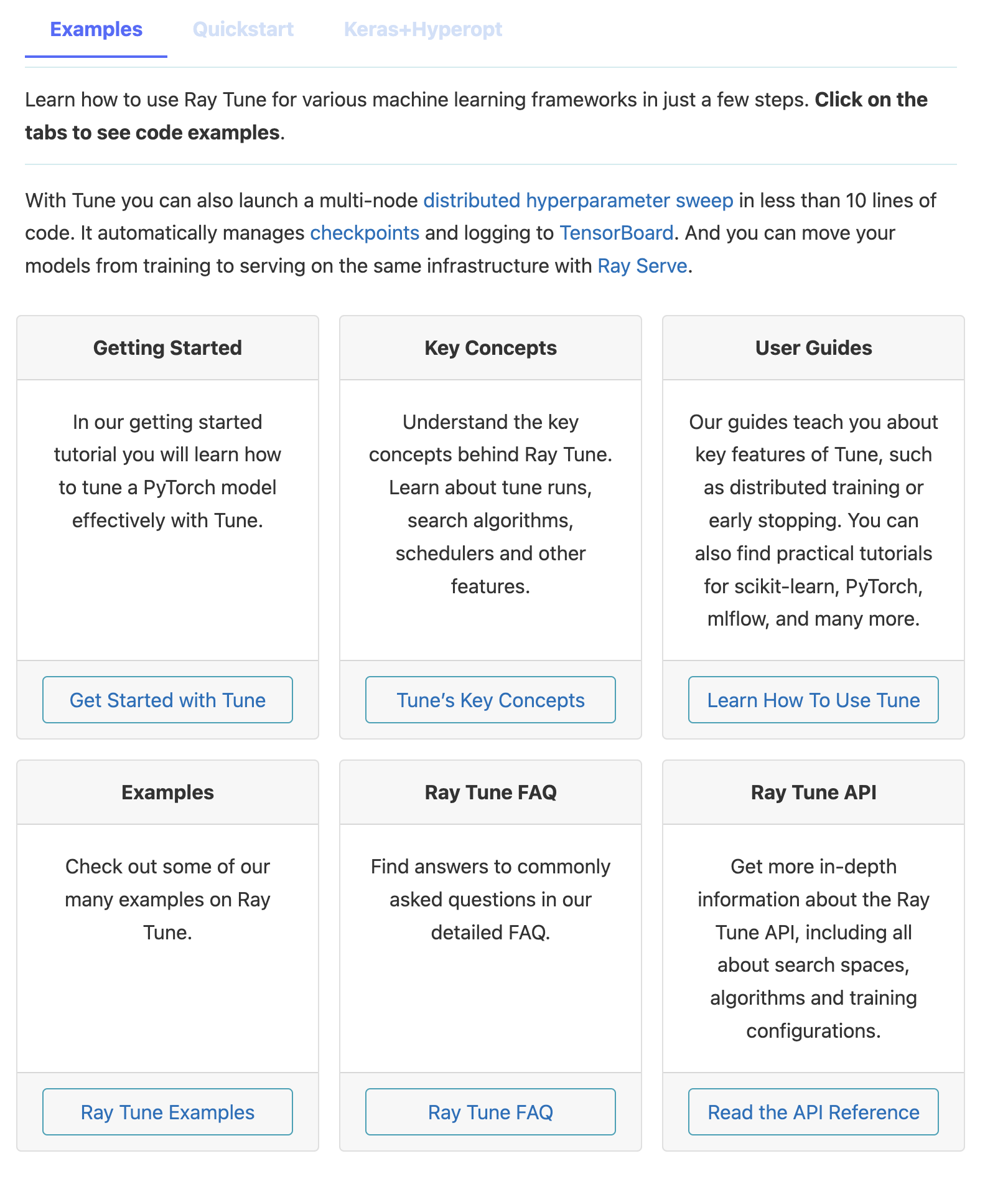

You can find the individual pages for each Ray library underneath Ray ML in the left-side navigation. We’ve also made improvements to the top-level library pages, such as the Ray Tune overview page, where we’ve added a tabbed experience with easy access to examples, a quick start guide, and instructions for tuning Keras models with Hyperopt.

We encourage everyone to explore the docs and let us know where they can be improved even further. If you’re interested in contributing to Ray, here’s how to get started.

Python 3.9 support is now stable

We’ve had experimental support for Python 3.9 since version 1.4.1. With the 1.11 release, we’re removing the “experimental” label. Ray and its core dependencies are now stable for Python 3.9, so feel free to use it in production. We’d like to thank all the pioneers who beta tested it and reported issues.

Learn more

Many thanks to all those who contributed to this release. To learn about all the features and enhancements in this release, check out the Ray 1.11.0 release notes. If you would like to keep up to date with all things Ray, follow @raydistributed on Twitter and sign up for the Ray newsletter.