Faster stable diffusion fine-tuning with Ray AIR

This is part 3 of our generative AI blog series. It dives into a concrete example of how you can use Ray to scale the training of generative AI models. To learn more about using Ray to productionize generative model workloads, see part 1. To learn how Ray empowers LLM frameworks such as Alpa, see part 2.

In this blog post, we explore how to use Ray AIR to scale and accelerate the fine-tuning process of a stable diffusion model.

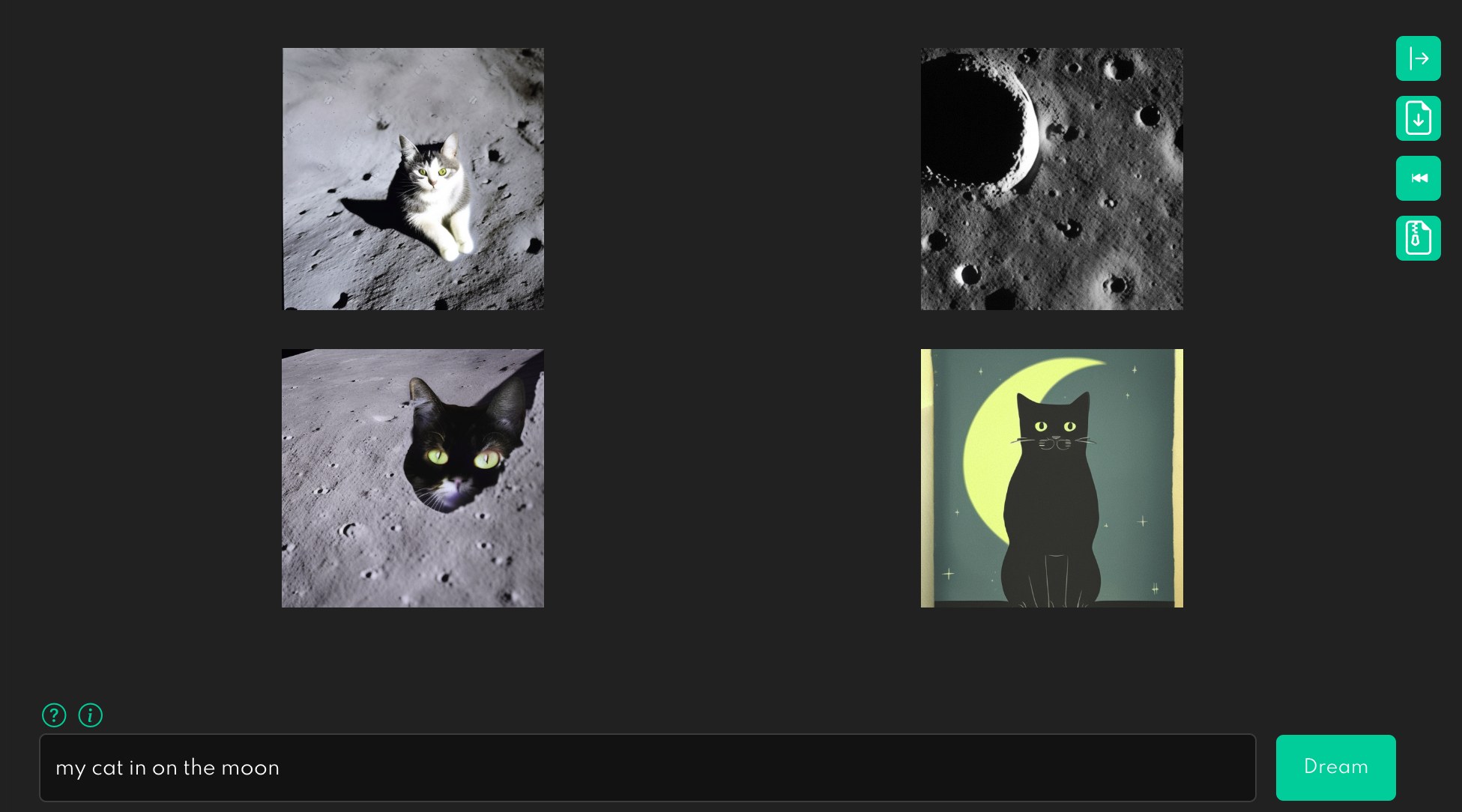

When Stable Diffusion was released last year, it took the internet by storm: this magic machine learning model can turn a textual prompt into a realistic image that matches the description. For example, below are a couple of prompts given to Stability AI’s DreamStudio, along with the resulting images.

Figure 1. With a prompt: My cat on the moon

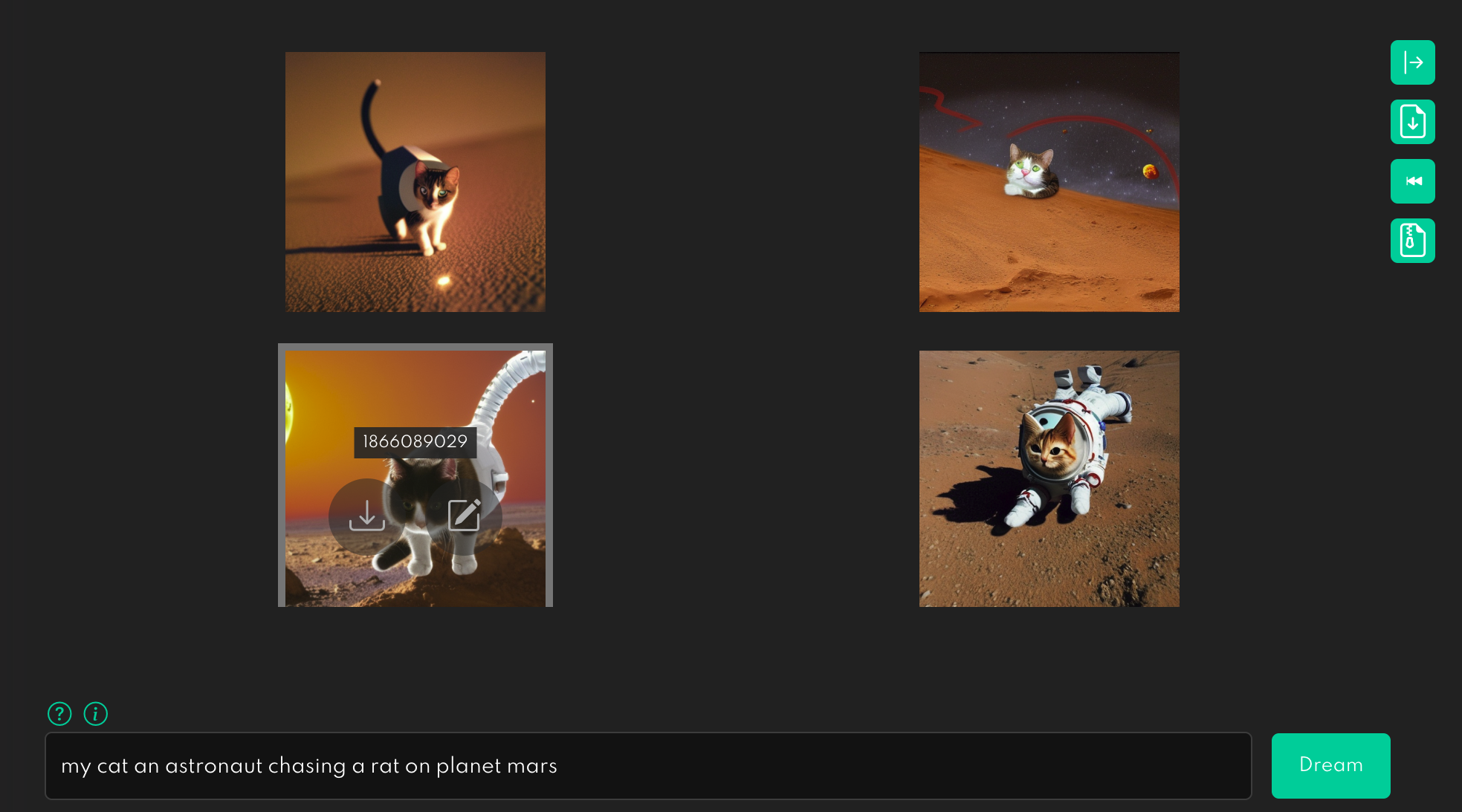

Figure 2. With a prompt: My cat is an astronaut chasing a rat on the planet mars.

Although this is an exciting new capability, particularly for content creators, it can be hard to control exactly what the output looks like or to personalize it. For instance, to generate a picture of your own cat on the moon, rather than just any cat, you need to fine-tune the Stable Diffusion model for that task.

To fine-tune a model for your task, you take an existing model and train it on some of your own data to get an individualized version. For instance, you can start with a pre-trained diffusion model and train it to recognize your cat. It can then use your cat in subsequent prompts for image generation.

This all sounds easy enough, but in practice there are some code and infrastructure challenges to overcome behind the scenes.

Challenges when fine-tuning diffusion models

Fine-tuning a stable diffusion model can take a long time, and you may want to distribute your training process in order to speed things up.

However, there are three main challenges when it comes to scaling the fine-tuning of diffusion models:

Converting your script to do distributed training: Figuring out how to convert your training script to use distributed data-parallel training across multiple GPUs and multiple nodes can add a lot of complexity to your training script.

Distributed data loading: Data loading, especially in distributed settings, is painful. Existing PyTorch native solutions can be especially hard to scale if you have a larger dataset and need to efficiently read from cloud storage.

Distributed orchestration: Configuring and setting up a distributed training cluster is one of the biggest headaches for a machine learning (ML) practitioner. You have to set up Kubernetes or manually manage the machines, make sure they can communicate with each other, and that every worker has the correct environment variables set.

To address these challenges, you can use Ray AIR. It lets you distribute your training data, convert your script into a distributed application, and take advantage of the accelerators on your multi-node cluster. The following sections describe what Ray AIR is and how to use it.

What are Ray and Ray AIR?

Ray is a popular open-source distributed Python framework that makes it easy to scale AI and Python workloads.

Ray AIR (AI Runtime) is a native set of scalable machine learning libraries built on top of Ray. In particular, two AIR libraries are built specifically for scalable model training: Ray Train, which simplifies distributed training for PyTorch and other common ML frameworks, and Ray Data, which simplifies data loading and data ingestion from the cloud. If you're new to Ray AIR, check out this Ray Summit 2022 talk.

With Ray, we can address the above three challenges:

Scaling across multiple nodes: Ray Train provides a unified interface for you to take an existing training script and enable distributed multi-GPU multi-node training.

Distributed Data Loading: Ray Data has a simple interface for reading files from cloud storage and efficiently loading and sharding data into your training GPUs.

Distributed orchestration: Ray’s open-source cluster launcher allows you to create Ray clusters with a single line of code on AWS, Azure, or Google Cloud.

Fine-tuning a diffusion model with Ray AIR

Let's explore what the training code looks like for our fine-tuning example.

The full code can be found here on GitHub. It includes instructions as well as the data loading and preprocessing code. In the rest of this post, we focus only on the training code.

Central to our training code is the training function. This function accepts a configuration dict that contains the hyperparameters. It then defines a regular PyTorch training loop.

There are only a few locations in our training code where we interact with the Ray AIR API. Each is marked with a "# Ray AIR code" comment in the code below.

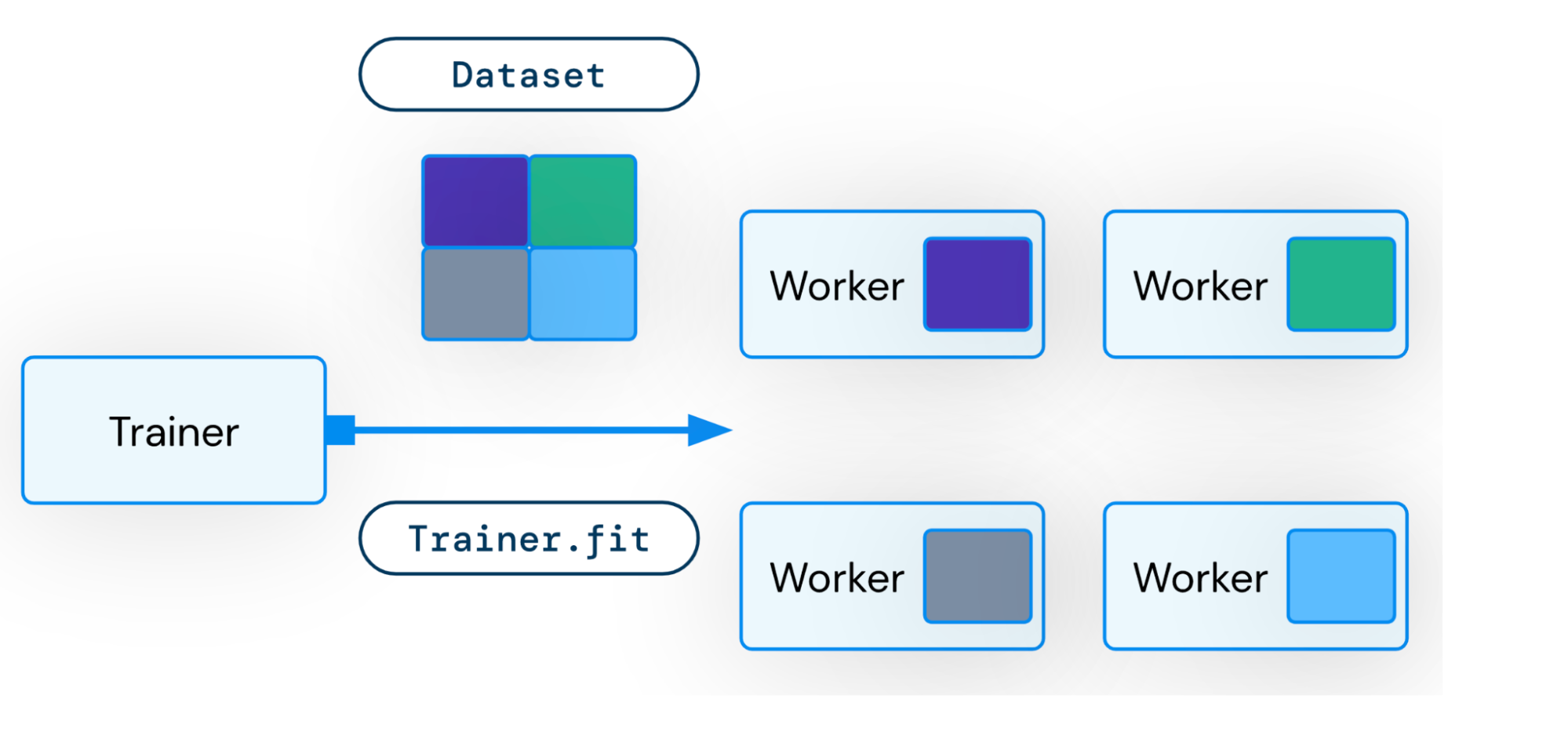

Remember that we want to do data-parallel training for all our models.
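To make the idea of data-parallel training concrete, here is a minimal pure-Python sketch of splitting a dataset into one shard per worker. The helper `split_into_shards` and the file names are hypothetical and exist only for illustration; Ray Data performs the actual sharding for you, and its internal strategy may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_into_shards(items: Sequence[T], num_workers: int) -> List[List[T]]:
    """Split items into num_workers contiguous shards of near-equal size.

    Hypothetical helper for illustration only; it is not part of Ray.
    """
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    base, extra = divmod(len(items), num_workers)
    shards: List[List[T]] = []
    start = 0
    for rank in range(num_workers):
        # The first `extra` workers take one additional item each.
        size = base + (1 if rank < extra else 0)
        shards.append(list(items[start:start + size]))
        start += size
    return shards


# Ten made-up image files shared among four workers.
images = [f"img_{i:02d}.jpg" for i in range(10)]
shards = split_into_shards(images, num_workers=4)
for rank, shard in enumerate(shards):
    print(f"worker {rank}: {len(shard)} images")
```

Every worker sees a disjoint slice, and together the slices cover the whole dataset, so each model replica trains on different data in every step.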

We load the data shard for each worker with `session.get_dataset_shard("train")`.

We iterate over the dataset with `train_dataset.iter_torch_batches()`.

We report results to Ray AIR with `session.report(results)`.

Figure 3. Ray Data shards a slice of data to each worker for distributed training

The code snippet below is compacted for brevity. The full code is more thoroughly annotated.

```python
import itertools

import torch
from torch.nn.parallel import DistributedDataParallel
from torch.nn.utils import clip_grad_norm_
from ray.air import session

# Helpers such as get_cuda_devices, load_models, collate, get_target, and
# prior_preserving_loss are not shown here; see the full example code.


def train_fn(config):
    cuda = get_cuda_devices()

    text_encoder, noise_scheduler, vae, unet = load_models(config, cuda)
    # Each worker uses two GPUs: cuda[1] for the text encoder, cuda[0] for the UNet.
    text_encoder = DistributedDataParallel(
        text_encoder, device_ids=[cuda[1]], output_device=cuda[1]
    )
    unet = DistributedDataParallel(unet, device_ids=[cuda[0]], output_device=cuda[0])
    optimizer = torch.optim.AdamW(
        itertools.chain(text_encoder.parameters(), unet.parameters()),
        lr=config["lr"],
    )
    # Ray AIR code
    train_dataset = session.get_dataset_shard("train")
    num_train_epochs = config["num_epochs"]

    global_step = 0
    for epoch in range(num_train_epochs):
        for step, batch in enumerate(
            # Ray AIR code
            train_dataset.iter_torch_batches(
                batch_size=config["train_batch_size"], device=cuda[1]
            )
        ):
            batch = collate(batch, cuda[1], torch.bfloat16)
            optimizer.zero_grad()
            # Encode images into latents and add noise at random timesteps.
            latents = vae.encode(batch["images"]).latent_dist.sample() * 0.18215
            noise = torch.randn_like(latents)
            bsz = latents.shape[0]
            timesteps = torch.randint(
                0,
                noise_scheduler.config.num_train_timesteps,
                (bsz,),
                device=latents.device,
            )
            timesteps = timesteps.long()
            noisy_latents = noise_scheduler.add_noise(latents, noise, timesteps)

            # Predict from the noisy latents, conditioned on the encoded prompt.
            encoder_hidden_states = text_encoder(batch["prompt_ids"])[0]
            model_pred = unet(
                noisy_latents.to(cuda[0]),
                timesteps.to(cuda[0]),
                encoder_hidden_states.to(cuda[0]),
            ).sample
            target = get_target(noise_scheduler, noise, latents, timesteps).to(cuda[0])
            loss = prior_preserving_loss(
                model_pred, target, config["prior_loss_weight"]
            )
            loss.backward()
            clip_grad_norm_(
                itertools.chain(text_encoder.parameters(), unet.parameters()),
                config["max_grad_norm"],
            )
            optimizer.step()  # Step all optimizers.
            global_step += 1
            results = {
                "step": global_step,
                "loss": loss.detach().item(),
            }
            # Ray AIR code
            session.report(results)
```

We can then run this training loop with Ray AIR's TorchTrainer:

```python
from ray.air.config import ScalingConfig
from ray.train.torch import TorchTrainer

# train_arguments and get_train_dataset come from the full example code.
args = train_arguments().parse_args()

# Build training dataset.
train_dataset = get_train_dataset(args)

print(f"Loaded training dataset (size: {train_dataset.count()})")

# Train with Ray AIR TorchTrainer.
trainer = TorchTrainer(
    train_fn,
    train_loop_config=vars(args),
    scaling_config=ScalingConfig(
        use_gpu=True,
        num_workers=args.num_workers,
        resources_per_worker={
            "GPU": 2,
        },
    ),
    datasets={
        "train": train_dataset,
    },
)
result = trainer.fit()
```

In the TorchTrainer, we can easily configure our scale. With the number of workers set to 2, the above example runs training on 2 workers with 2 GPUs each, i.e., on 4 GPUs. To run the example on 8 GPUs, simply set the number of workers to 4!
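The arithmetic behind this is simple enough to sketch. The two helper functions below are hypothetical and not part of Ray; they only show how the worker count and the per-worker GPU request combine into the total GPU count.

```python
import math


def total_gpus(num_workers: int, gpus_per_worker: int) -> int:
    """Total GPUs requested when every worker reserves gpus_per_worker GPUs."""
    return num_workers * gpus_per_worker


def workers_needed(target_gpus: int, gpus_per_worker: int) -> int:
    """Smallest worker count whose combined request covers target_gpus."""
    return math.ceil(target_gpus / gpus_per_worker)


print(total_gpus(num_workers=2, gpus_per_worker=2))      # 4
print(workers_needed(target_gpus=8, gpus_per_worker=2))  # 4
```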

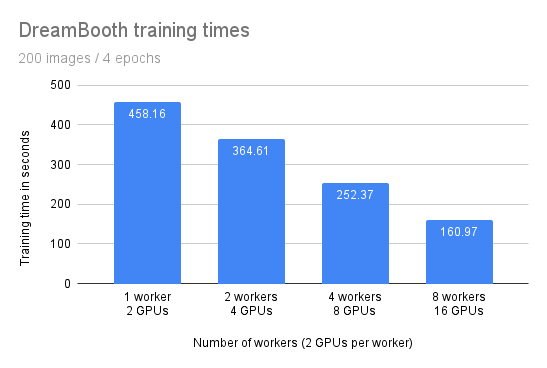

Figure 4. Training times for number of GPUs

The training time decreases roughly linearly with the number of workers, but the scaling is not perfect: in an ideal world, doubling the number of workers would cut the training time in half, yet the communication of large model weights incurs some overhead.

This can likely be improved by using a larger batch size, which in turn means optimizing GPU memory usage with libraries such as DeepSpeed. We'll explore that in another blog post.
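To quantify how far a run is from the ideal halving described above, you can compare the measured time against the ideal time for the same number of workers. The sketch below uses made-up timings that are not measurements from this post, and the function names are hypothetical.

```python
def ideal_time(single_worker_time: float, num_workers: int) -> float:
    """Training time under perfect linear scaling."""
    return single_worker_time / num_workers


def scaling_efficiency(
    single_worker_time: float, num_workers: int, measured_time: float
) -> float:
    """Fraction of the ideal speedup actually achieved (1.0 means perfect)."""
    return ideal_time(single_worker_time, num_workers) / measured_time


# Hypothetical timings in seconds, NOT results from this post.
baseline = 1200.0
measured = {2: 660.0, 4: 375.0}

for num_workers, seconds in measured.items():
    speedup = baseline / seconds
    efficiency = scaling_efficiency(baseline, num_workers, seconds)
    print(f"{num_workers} workers: speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```

An efficiency that drops as workers are added is the signature of communication overhead growing relative to the compute done per step.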

Launching a Ray cluster on AWS, GCP, or Kubernetes

Ray ships with built-in support for launching AWS and GCP clusters and also has community-maintained integrations for Azure and Aliyun.

To keep this blog post short, we refer you to the Ray Cluster Launcher documentation.

If you want to run on Kubernetes, you can check out the KubeRay documentation as well.

And if you’re interested in a managed offering for Ray in general, feel free to sign up for Anyscale.

Putting it all together

Our example comes with a few scripts that can be easily run from the command line.

You can always find the latest version of this code here!

```bash
# Get the Ray repo for the example code
git clone https://github.com/ray-project/ray.git
cd ray/doc/source/templates/05_dreambooth_finetuning/dreambooth
pip install -Ur requirements.txt

# Set some environment variables
export DATA_PREFIX="./"
export ORIG_MODEL_NAME="CompVis/stable-diffusion-v1-4"
export ORIG_MODEL_HASH="249dd2d739844dea6a0bc7fc27b3c1d014720b28"
export ORIG_MODEL_DIR="$DATA_PREFIX/model-orig"
export ORIG_MODEL_PATH="$ORIG_MODEL_DIR/models--${ORIG_MODEL_NAME/\//--}/snapshots/$ORIG_MODEL_HASH"
export TUNED_MODEL_DIR="$DATA_PREFIX/model-tuned"
export IMAGES_REG_DIR="$DATA_PREFIX/images-reg"
export IMAGES_OWN_DIR="$DATA_PREFIX/images-own"
export IMAGES_NEW_DIR="$DATA_PREFIX/images-new"

export CLASS_NAME="cat"

mkdir -p $ORIG_MODEL_DIR $TUNED_MODEL_DIR $IMAGES_REG_DIR $IMAGES_OWN_DIR $IMAGES_NEW_DIR

# AT THIS POINT YOU SHOULD COPY YOUR OWN IMAGES INTO
# $IMAGES_OWN_DIR

# Download pre-trained model
python cache_model.py --model_dir=$ORIG_MODEL_DIR --model_name=$ORIG_MODEL_NAME --revision=$ORIG_MODEL_HASH

# Generate regularization images
python run_model.py \
  --model_dir=$ORIG_MODEL_PATH \
  --output_dir=$IMAGES_REG_DIR \
  --prompts="photo of a $CLASS_NAME" \
  --num_samples_per_prompt=200

# Train our model
python train.py \
  --model_dir=$ORIG_MODEL_PATH \
  --output_dir=$TUNED_MODEL_DIR \
  --instance_images_dir=$IMAGES_OWN_DIR \
  --instance_prompt="a photo of unqtkn $CLASS_NAME" \
  --class_images_dir=$IMAGES_REG_DIR \
  --class_prompt="a photo of a $CLASS_NAME"

# Generate our images
python run_model.py \
  --model_dir=$TUNED_MODEL_DIR \
  --output_dir=$IMAGES_NEW_DIR \
  --prompts="photo of a unqtkn $CLASS_NAME" \
  --num_samples_per_prompt=20
```
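The least obvious line in the script is the one that builds `ORIG_MODEL_PATH`: the bash expansion `${ORIG_MODEL_NAME/\//--}` replaces the first `/` in the model name with `--`. As a rough Python equivalent, assuming the same directory layout as the script, the hypothetical helper `snapshot_path` below reproduces that path construction.

```python
import posixpath


def snapshot_path(data_prefix: str, model_name: str, model_hash: str) -> str:
    """Mirror how the shell script derives ORIG_MODEL_PATH (illustrative helper)."""
    model_dir = posixpath.join(data_prefix, "model-orig")
    # Like bash's ${VAR/\//--}, replace only the first "/" with "--".
    cache_name = "models--" + model_name.replace("/", "--", 1)
    return posixpath.join(model_dir, cache_name, "snapshots", model_hash)


path = snapshot_path(
    "./",
    "CompVis/stable-diffusion-v1-4",
    "249dd2d739844dea6a0bc7fc27b3c1d014720b28",
)
print(path)
```

The shell version yields a path starting with `.//` because `DATA_PREFIX` already ends in a slash; both forms point to the same directory.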

And, finally, my cat on the moon looks like this:

Figure 5. My cat on the moon. Not any cat :)

Conclusion

With Ray AIR, fine-tuning a stable diffusion model is super simple. And super scalable: You can just add more machines to your cluster, and Ray can automatically use them. No code changes needed! Just tell Ray how many workers and GPUs you want to use.

Also, with Ray AIR, you worry less about cluster environment or management. Instead, you focus on writing your distributed training code with AIR's expressive and composable APIs.

Finally, you can leverage all the power from the cloud - and put your cat on the moon!

Next Steps

For further exploration of Ray and Ray AIR:

Peruse the overview of Ray and what it is used for

Visit our gallery of Ray AIR examples in the documentation

Join our monthly Ray Meetup, where we discuss all things Ray

Connect with the Ray community via forums: Slack and Discuss

Early-bird registration for Ray Summit 2023 is open. Grab your spot early.