Ray Serve + FastAPI: The best of both worlds

In the first blog post in this series, we covered how AI is becoming increasingly strategic for many enterprises, but MLOps and the ability to deploy models in production are still very difficult.

In the second blog post, we covered how Ray can simplify your MLOps practice by letting a single script perform data preprocessing, training, and tuning at scale, while Ray Serve decouples the development of your real-time pipeline from its deployment. This allows teams to simplify their tech stack without sacrificing scalability, align better, and minimize friction overall.

We also highlighted in the first blog post that developers are almost evenly divided between choosing a generic Python web server, such as FastAPI, and a specialized ML serving framework.

Let’s dive into each option and surface some of the features and why you might choose one over the other.

Generic Python web server

Python has become the lingua franca of data science, but it is also growing in popularity as a general-purpose programming language.

According to the recent Python developer survey by the Python Software Foundation and JetBrains, FastAPI, a modern, fast web framework, has experienced the fastest growth among Python web frameworks, having grown by 9 percentage points compared to the previous year.

FastAPI's creator built the library out of frustration with existing Python web frameworks. FastAPI aims to optimize the developer experience in an integrated development environment (IDE), for example through auto-completion, while building on common standards such as OAuth2 and OpenAPI on top of a high-performance Asynchronous Server Gateway Interface (ASGI) engine. The result is “a modern, fast (high-performance) web framework for building APIs with Python 3.6+ based on standard Python type hints.”

Here are some highlighted features from the FastAPI website:

Fast: Very high performance, on par with NodeJS and Go (thanks to Starlette and Pydantic). One of the fastest Python frameworks available.

Fast to code: Increase the speed to develop features by about 200% to 300%.

Fewer bugs: Reduce about 40% of human (developer) induced errors.

Intuitive: Great editor support. Auto-completion everywhere. Less time debugging.

Easy: Designed to be easy to use and learn. Less time reading docs.

Short: Minimize code duplication. Multiple features from each parameter declaration. Fewer bugs.

Robust: Get production-ready code. With automatic interactive documentation.

Standards-based: Based on (and fully compatible with) the open standards for APIs: OpenAPI (previously known as Swagger) and JSON Schema.

These features are now common in web frameworks and aim to optimize the developer experience of building microservices. Typical web frameworks provide path routing, health checks, endpoint testing, type checking, and support for open standards.

While generic Python web servers were designed to build microservices, they were not designed to serve ML models.

Specialized ML serving

As models grow in size (e.g., foundation models) and require AI accelerators (GPU, TPU, AWS Inferentia) for good performance, along with features specific to serving ML models, a number of specialized ML serving frameworks have emerged (Seldon Core, KServe, TorchServe, TensorFlow Serving, etc.).

They all aim to optimize throughput without sacrificing latency while also providing specific features for serving real-time ML models.

Model compression techniques such as pruning and quantization have emerged to reduce model size without sacrificing too much accuracy, which in turn reduces inference time and the overall memory footprint.
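As a rough illustration of pruning and quantization, here is a pure-Python sketch of magnitude pruning and symmetric int8 quantization on a flat list of weights. Real toolkits operate on tensors (often per channel) and usually recalibrate or fine-tune afterwards; the function names here are hypothetical.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Threshold is the k-th smallest absolute value; ties may prune slightly more.
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    # Map the integers back to approximate float weights.
    return [v * scale for v in q]


weights = [0.1, -0.5, 0.05, 1.0]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Storing `q` as 8-bit integers instead of 32-bit floats cuts that tensor's memory by roughly 4x, at the cost of a rounding error of at most `scale / 2` per weight.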

Other techniques such as microbatching help maximize AI accelerator utilization and increase throughput while trying not to sacrifice latency. AI accelerators such as GPUs execute vectorized instructions that perform computations in parallel, so running inference on a batch of inputs can increase both the model's throughput and the hardware's utilization.
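To show the mechanism independently of any serving framework, here is a minimal asyncio sketch: concurrent single-item requests are queued, and a background task flushes them to one batched call once either `max_batch_size` items have arrived or `batch_wait_timeout_s` has elapsed since the first item. The `MicroBatcher` class is hypothetical (its parameter names echo those of Ray Serve's `@serve.batch`, but this is not Ray's implementation). For brevity, `batch_fn` runs synchronously on the event loop and error handling is omitted.

```python
import asyncio
from typing import Any, Callable, List, Optional


class MicroBatcher:
    """Groups concurrent single-item calls into one call to `batch_fn`.

    Create it inside a running event loop (e.g., within `async def main()`).
    """

    def __init__(
        self,
        batch_fn: Callable[[List[Any]], List[Any]],
        max_batch_size: int = 8,
        batch_wait_timeout_s: float = 0.01,
    ) -> None:
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.batch_wait_timeout_s = batch_wait_timeout_s
        self._queue: asyncio.Queue = asyncio.Queue()  # holds (item, future) pairs
        self._worker: Optional[asyncio.Task] = None

    async def submit(self, item: Any) -> Any:
        # Lazily start the background flush loop on the running event loop.
        if self._worker is None:
            self._worker = asyncio.create_task(self._run())
        fut = asyncio.get_running_loop().create_future()
        await self._queue.put((item, fut))
        return await fut  # resolved once this item's batch has been processed

    async def _run(self) -> None:
        loop = asyncio.get_running_loop()
        while True:
            # Block until the first item of the next batch arrives.
            batch = [await self._queue.get()]
            deadline = loop.time() + self.batch_wait_timeout_s
            # Keep collecting until the batch is full or the wait window closes.
            while len(batch) < self.max_batch_size:
                remaining = deadline - loop.time()
                if remaining <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self._queue.get(), remaining))
                except asyncio.TimeoutError:
                    break
            # One vectorized call for the whole batch, then fan results back out.
            results = self.batch_fn([item for item, _ in batch])
            for (_, fut), result in zip(batch, results):
                if not fut.done():  # skip callers that were cancelled meanwhile
                    fut.set_result(result)
```

The timeout bounds the extra latency any single request can pay for batching, while `max_batch_size` caps how much work is sent to the accelerator at once.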

Bin packing lets you co-locate multiple models on the same host so that they share its resources; because those models are unlikely to be invoked at the same time, this reduces idle time on the host.
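One simple way to decide which models share a host is the classic first-fit-decreasing bin-packing heuristic, sketched below using each model's memory footprint as its size. This is an illustrative assumption: real placement would also weigh GPU memory, CPU, and expected traffic, and the model names and sizes in the usage below are made up.

```python
from typing import Dict, List


def first_fit_decreasing(model_mem_gb: Dict[str, float], host_mem_gb: float) -> List[List[str]]:
    """Assign models to hosts with the first-fit-decreasing heuristic."""
    hosts: List[List[str]] = []  # model names placed on each host
    free: List[float] = []       # remaining memory on each host
    # Place the largest models first; this usually wastes less space.
    for name, size in sorted(model_mem_gb.items(), key=lambda kv: kv[1], reverse=True):
        if size > host_mem_gb:
            raise ValueError(f"{name} ({size} GB) does not fit on any host")
        for i, remaining in enumerate(free):
            if size <= remaining:
                hosts[i].append(name)
                free[i] -= size
                break
        else:
            # No existing host has room: open a new one.
            hosts.append([name])
            free.append(host_mem_gb - size)
    return hosts


placement = first_fit_decreasing({"a": 6, "b": 5, "c": 4, "d": 3, "e": 2}, host_mem_gb=10)
```

Here five models totaling 20 GB fit on two 10 GB hosts (`[["a", "c"], ["b", "d", "e"]]`) instead of one host per model.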

“Scale to zero” lets you release your resources when there is no traffic. The tradeoff is typically a cold-start penalty: the first request after scaling to zero has to wait for the model to be loaded again.
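A single-process sketch of that tradeoff: the hypothetical `ScaleToZeroModel` below frees its model after an idle timeout, and the next request triggers a reload (the cold start). A real platform releases whole replicas or nodes rather than an in-memory object; the injectable `clock` only exists to make the behavior easy to test.

```python
import time
from typing import Any, Callable, Optional


class ScaleToZeroModel:
    """Loads a model on demand and releases it after `idle_timeout_s` without traffic."""

    def __init__(
        self,
        load_fn: Callable[[], Callable[[Any], Any]],
        idle_timeout_s: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.load_fn = load_fn
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock
        self.model: Optional[Callable[[Any], Any]] = None
        self.last_used = 0.0
        self.cold_starts = 0

    def predict(self, x: Any) -> Any:
        if self.model is None:
            # Cold start: the first request after scaling to zero pays the load cost.
            self.model = self.load_fn()
            self.cold_starts += 1
        self.last_used = self.clock()
        return self.model(x)

    def reap_if_idle(self) -> bool:
        """Meant to be called periodically; frees the model if it has been idle long enough."""
        if self.model is not None and self.clock() - self.last_used >= self.idle_timeout_s:
            self.model = None
            return True
        return False
```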

Autoscaling may also require different metrics than a typical web service; for example, you may want to base your policies on a CPU or GPU utilization threshold.
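For example, a utilization-based policy can use the target-tracking rule popularized by the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), clamped to a replica range. The sketch below applies it to a GPU utilization reading; the function name, default bounds, and targets are illustrative assumptions, not Ray Serve settings.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 16,
) -> int:
    """Target-tracking rule: move average utilization toward the target."""
    if current_replicas == 0:
        # Nothing to measure yet; start from the floor.
        return min_replicas
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas at 80% GPU utilization and a 50% target, the rule asks for ceil(6.4) = 7 replicas.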

Specialized ML serving libraries also often ship with handlers for complex data types such as text, audio, and video.
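The handler pattern itself is simple: a registry maps each request's content type to a function that turns raw bytes into model input. The sketch below is a hypothetical, framework-agnostic version with only text and JSON handlers; an audio or video handler would register the same way but would need a media-decoding library.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping a media type to a decoder for the request body.
HANDLERS: Dict[str, Callable[[bytes], Any]] = {}


def handler(media_type: str) -> Callable[[Callable[[bytes], Any]], Callable[[bytes], Any]]:
    def register(fn: Callable[[bytes], Any]) -> Callable[[bytes], Any]:
        HANDLERS[media_type] = fn
        return fn
    return register


@handler("text/plain")
def decode_text(body: bytes) -> str:
    return body.decode("utf-8")


@handler("application/json")
def decode_json(body: bytes) -> Any:
    return json.loads(body)


def preprocess(content_type: str, body: bytes) -> Any:
    # Ignore parameters such as "; charset=utf-8" when looking up the handler.
    media_type = content_type.split(";")[0].strip().lower()
    fn = HANDLERS.get(media_type)
    if fn is None:
        raise ValueError(f"unsupported content type: {media_type}")
    return fn(body)
```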

These features are common among specialized libraries and allow ML practitioners to optimize their real-time model serving in production.

Ray Serve + FastAPI

Ray Serve provides the best of both worlds: a performant Python web server and a specialized ML serving library.

Ray Serve lets you easily plug in a web framework such as aiohttp or FastAPI.

For example, Ray Serve allows you to integrate with FastAPI using the @serve.ingress decorator.

```python
import requests
from fastapi import FastAPI
from ray import serve

# 1: Define a FastAPI app and wrap it in a deployment with a route handler.
app = FastAPI()


@serve.deployment
@serve.ingress(app)
class FastAPIDeployment:
    # FastAPI will automatically parse the HTTP request for us.
    @app.get("/hello")
    def say_hello(self, name: str) -> str:
        return f"Hello {name}!"


# 2: Deploy the application with serve.run
#    (older Ray releases used serve.start() and FastAPIDeployment.deploy()).
serve.run(FastAPIDeployment.bind(), route_prefix="/")

# 3: Query the deployment and print the result.
print(requests.get("http://localhost:8000/hello", params={"name": "Theodore"}).json())
# "Hello Theodore!"
```

This lets you use all of FastAPI's features, such as variable routes, automatic type validation, and dependency injection, combined with Ray Serve's ML serving features.

As covered in a previous blog post, Ray Serve allows you to compose a multi-model inference pipeline in Python using the Deployment Graph API. By design, each step of the real-time pipeline can be scaled independently, on different hardware (CPU, GPU, etc.) and/or nodes, by passing options to the @serve.deployment decorator.

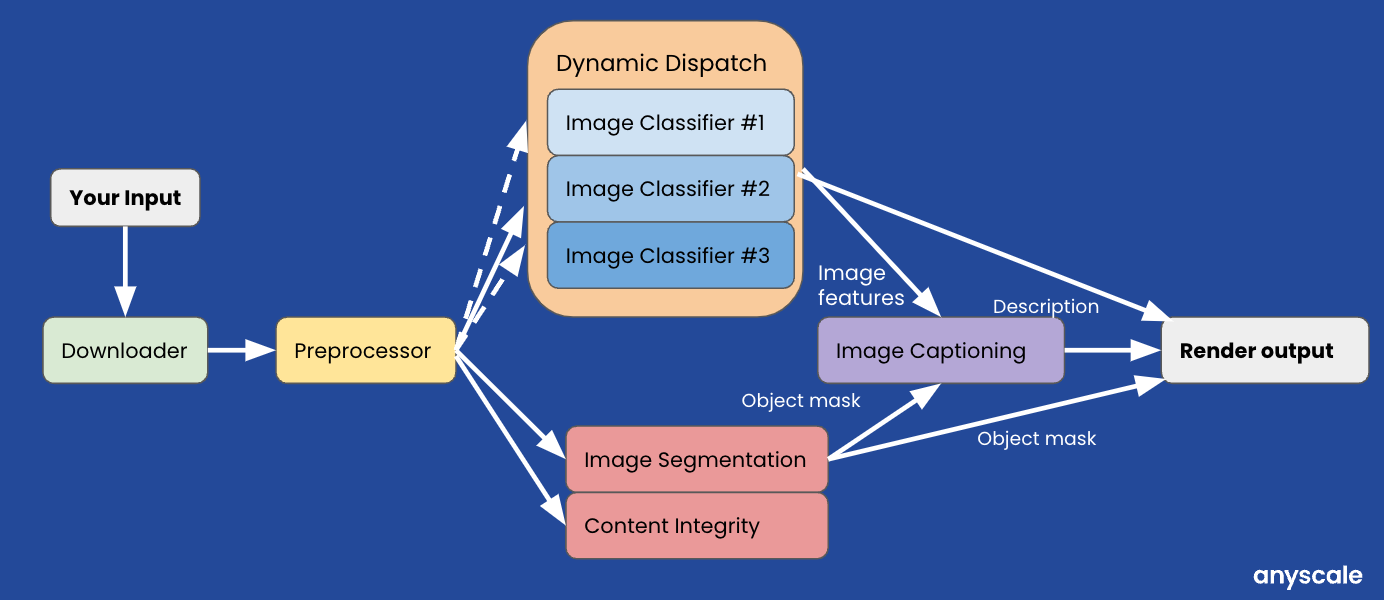

If you recall, we used a product tagging/content understanding real-time inference pipeline as an example in the second blog post.

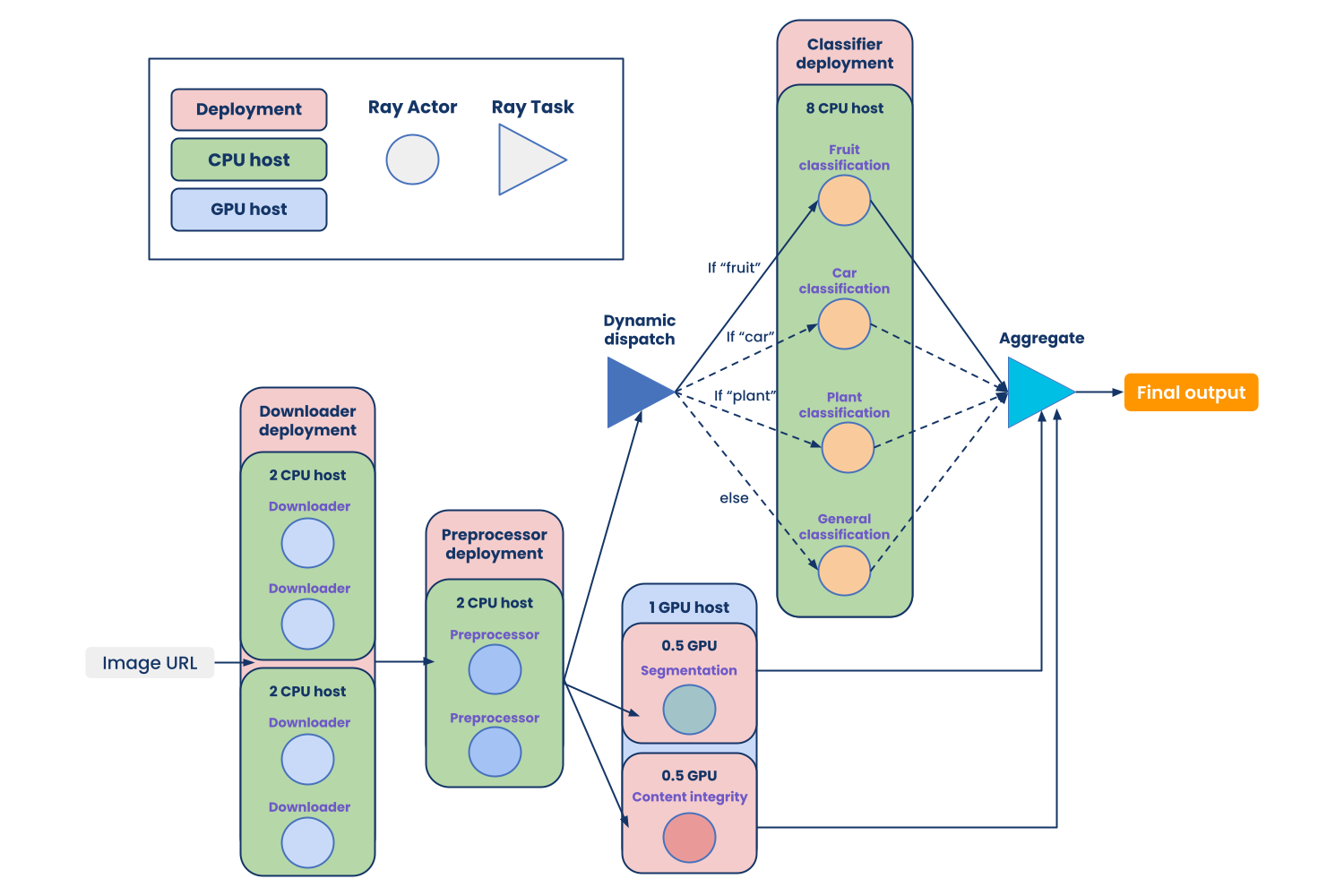

If we were to implement that use case using a Ray Serve deployment graph, it would look like this:

Scalable product tagging deployment graph

Note that each of those tasks/actors can have a fine-grained resource allocation (e.g., ray_actor_options={"num_cpus": 0.5}), allowing more efficient utilization of each host without sacrificing latency.

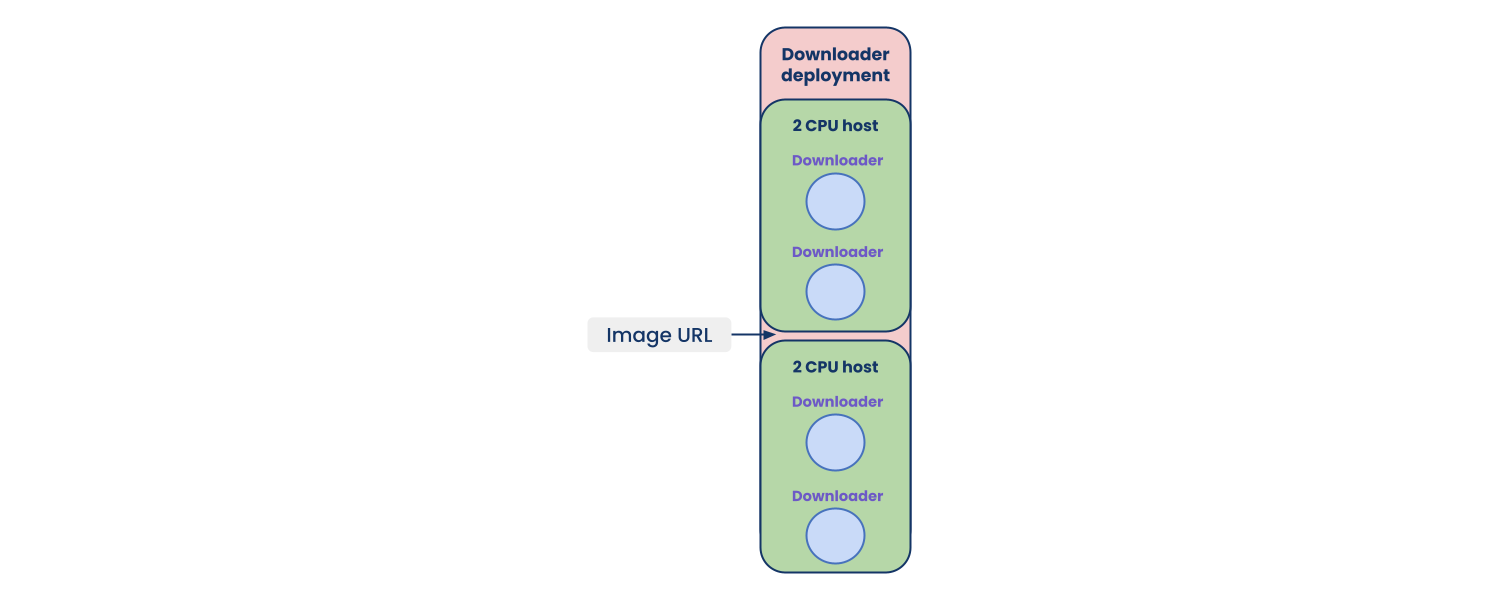

Ray Serve lets you configure the number of replicas at each step of your pipeline, so you can autoscale each step of your ML serving application independently and at a fine granularity.

Autoscaling replicas on the downloader deployment step.

Microbatching requests can also be implemented using the @serve.batch decorator. This not only gives developers a reusable abstraction but also the flexibility to customize their batching logic.

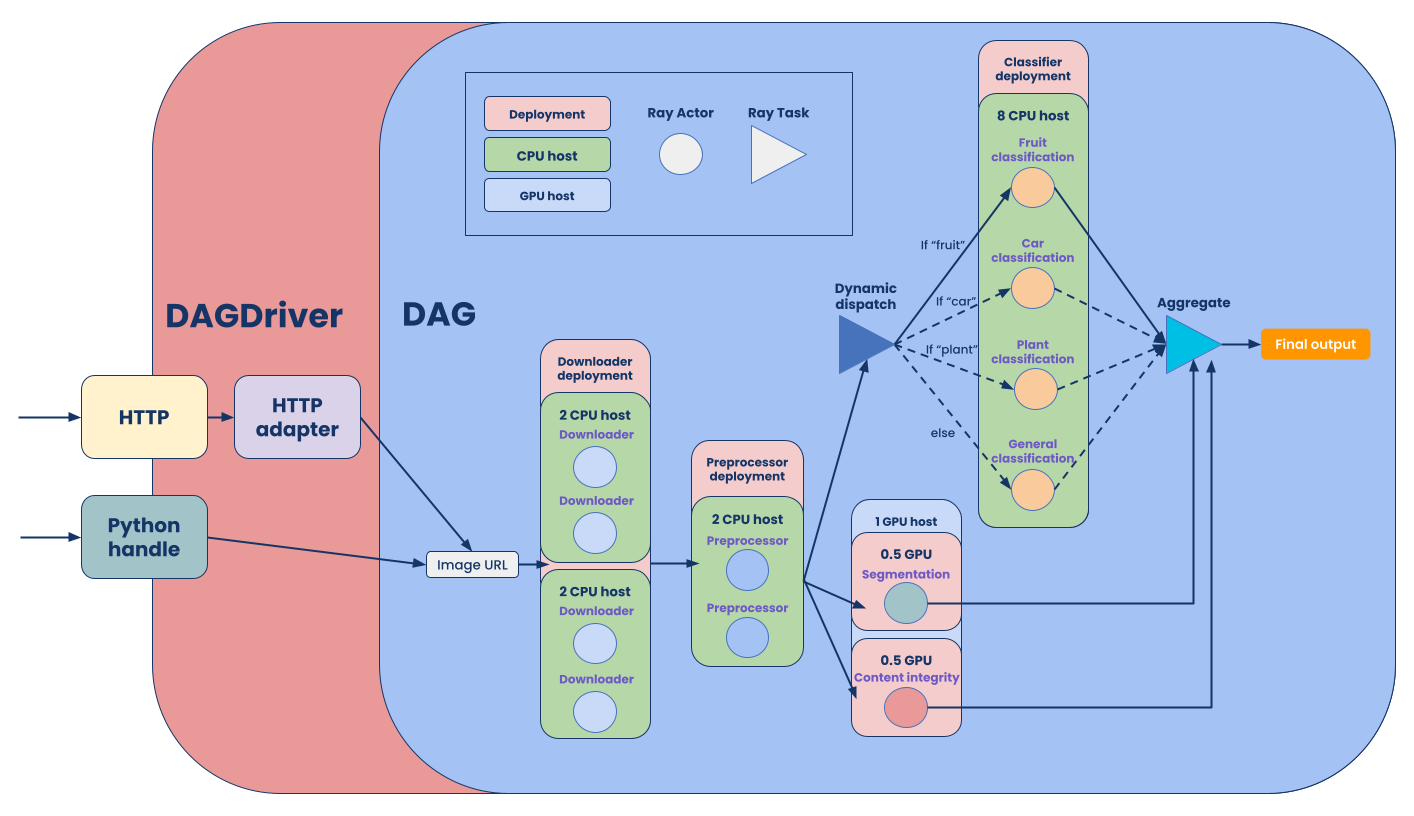

Using a proven web framework gives you a well-established standard for deploying your microservices. Routing, endpoint testing, type checking, and health checks can all keep following your current microservices paradigm on top of FastAPI, while Ray Serve provides the features needed to optimize serving your real-time ML models.

HTTP entry point for the deployment graph

Why compromise when you can get the best of both worlds?

On the roadmap, Ray Serve will further optimize the compute used to serve your models with zero-copy loading and model caching.

Zero-copy loading lets Ray load large models in milliseconds, or 340x faster. Model caching will let you keep a pool of models in Ray's internal memory and hot-swap models behind a given endpoint. This lets you make many more models available than your host can hold at once, and lets you optimize resources on your endpoint host based on traffic, demand, or business rules.
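The caching idea itself can be illustrated independently of Ray. Below is a hypothetical least-recently-used (LRU) model cache in plain Python: an endpoint keeps a bounded number of models loaded and swaps one in on demand, evicting whichever was used least recently. The `ModelCache` class and its count-based capacity are assumptions for illustration, not Ray's design.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable, List


class ModelCache:
    """Keeps at most `capacity` loaded models, evicting the least recently used."""

    def __init__(self, load_fn: Callable[[Hashable], Any], capacity: int) -> None:
        self.load_fn = load_fn
        self.capacity = capacity
        self._models: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, model_id: Hashable) -> Any:
        if model_id in self._models:
            self._models.move_to_end(model_id)  # hit: mark as most recently used
            return self._models[model_id]
        model = self.load_fn(model_id)  # miss: load the model (the slow path)
        self._models[model_id] = model
        if len(self._models) > self.capacity:
            self._models.popitem(last=False)  # evict the least recently used model
        return model

    def loaded(self) -> List[Hashable]:
        # Model IDs currently in memory, least to most recently used.
        return list(self._models)
```

A production cache would more likely bound total memory than model count, and could pre-load models based on expected demand.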

Check out the documentation, join the Slack channel, and get involved!