Three Key Elements of a Scalable ML Platform

ML platform builders need a unified, extensible platform to meet current and future scaling challenges. As machine learning gains a foothold in more and more companies, teams are struggling with the intricacies of managing the machine learning lifecycle.

These platforms often start organically: individual users develop a machine learning model on their laptops or on a cloud instance. This might work for an experiment or two, but beyond trivial use cases, teams must stitch together more and more tools and frameworks to cover the variety of use cases. With organic growth, these machine learning platforms become a mess: difficult to manage and even harder to use.

As a result, more teams are looking for machine learning platforms that standardize their processes and tooling, both to reduce costs and to streamline and scale deployment. Several startups and cloud providers now offer end-to-end machine learning platforms, including AWS (SageMaker), Azure (Machine Learning Studio), Databricks (MLflow), Google (Cloud AI Platform), and others. Many other companies choose to build their own, including Uber (Michelangelo), Airbnb (BigHead), Facebook (FBLearner), Netflix, and Apple (Overton).

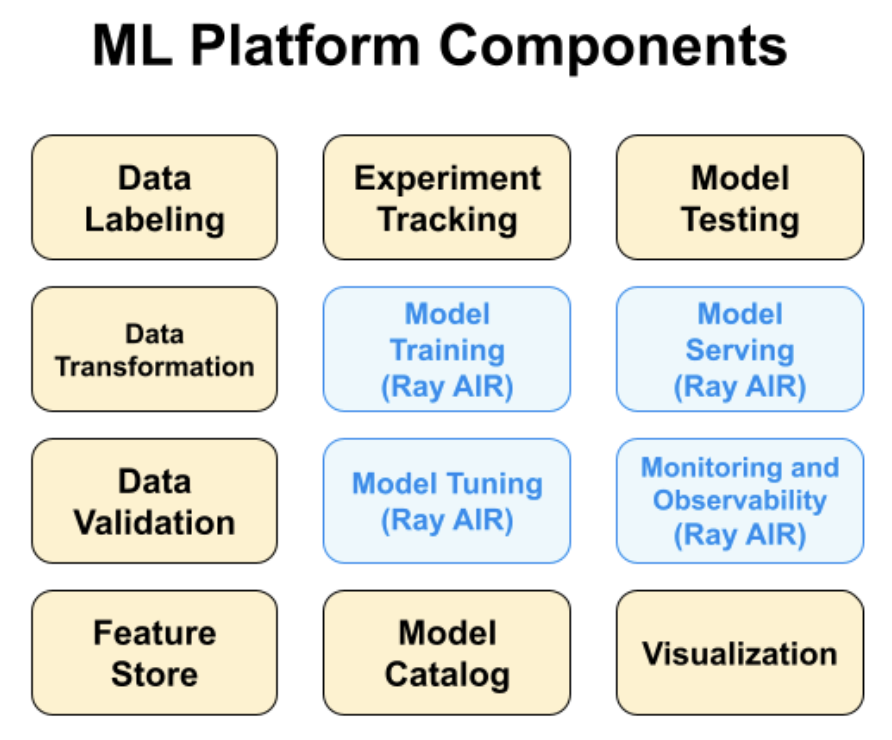

Figure 1: Ray can be used to build pluggable components for machine learning platforms. The boxes in blue are components for which libraries built on top of Ray already exist.

In our previous post, we examined the reasons behind the growing popularity of Ray. In this post, we explain why more and more developers are choosing Ray to build their ML tools and platforms.

Ray makes building ML platforms easier than ever with the various Ray libraries that enable simple scaling of individual workloads, end-to-end workflows, and popular ecosystem frameworks, all in Python. Ray has libraries covering model training, data preprocessing, and batch inference.

Unlike with monolithic platforms, users have the flexibility to pick and choose which Python libraries to use in their workflow, or to use Ray to build their own libraries. Ray is an open ecosystem, and it can be used alongside other ML platform components or point solutions. A partial list of machine learning platforms that already incorporate Ray includes:

Azure Machine Learning provides Ray for distributed reinforcement learning

Amazon SageMaker provides Ray for distributed reinforcement learning

Shopify uses Ray as the compute core of its next-generation ML platform, Merlin

Uber uses Ray for distributed training and hyperparameter tuning in its ML platform, Michelangelo

In this post, we share insights derived from conversations with many ML platform builders. We list three key elements that will be critical to ensuring that your ML platform is well positioned for modern AI applications:

Ability to Bridge the Gap between Development and Production

Easy ML Scaling

Ecosystem Integration

We also make the case that developers building ML platforms and ML components should consider Ray, because its ecosystem of standalone libraries can be used to address several of the items we list below.

1. Ability to Bridge the Gap between Development and Production

Machine learning platforms often need to serve two personas: model developers and ML engineers. Model developers need to iterate quickly and prefer to stay close to Python, as their main responsibility is to validate that a machine learning model improves a key business metric. ML engineers, on the other hand, are responsible for building production pipelines that automate training the model on new data and that scale out that training.

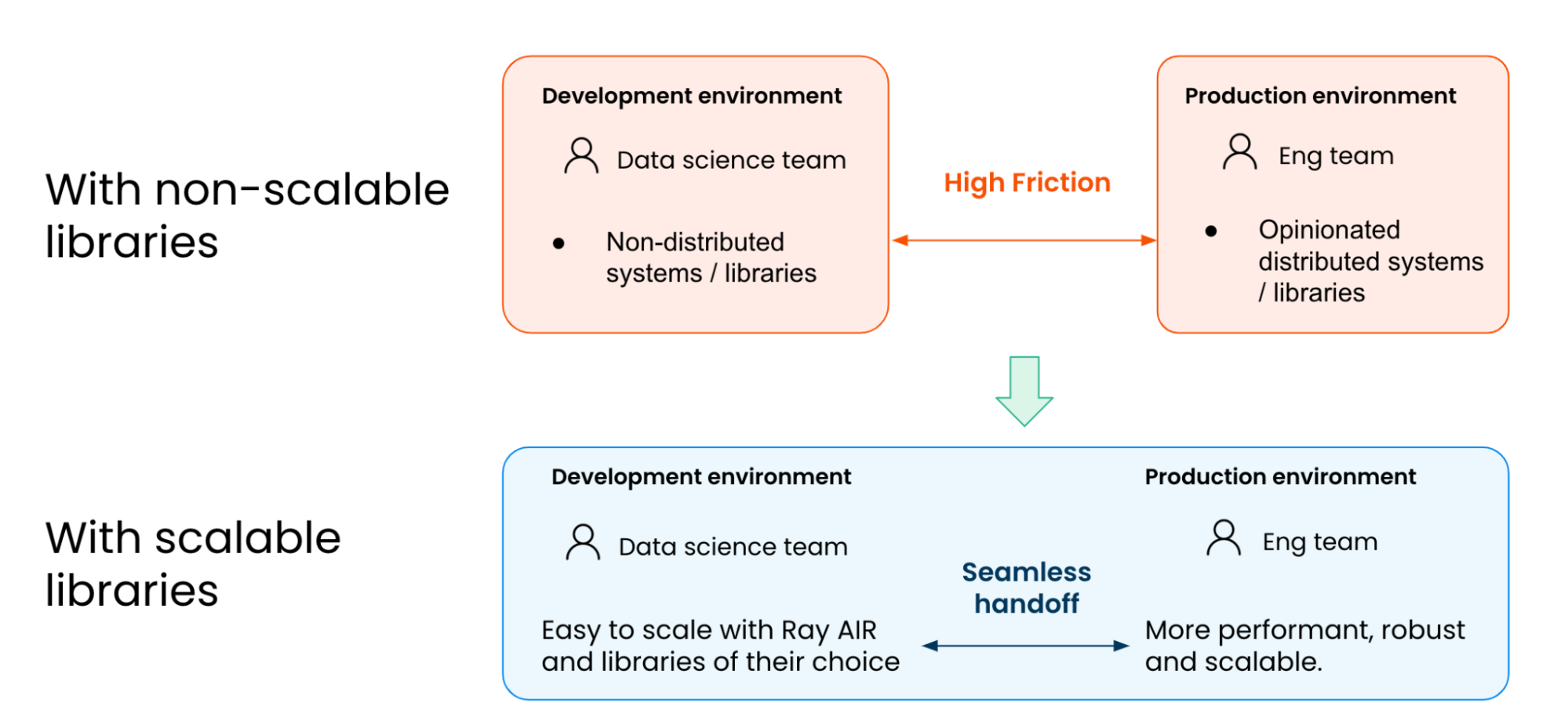

Existing ML platforms often fail to serve both personas, because code written during development is often rewritten in a different framework for production. The frameworks used for production ML pipelines are often the only tools that can scale out training, but they are extremely hard to iterate against, which slows down the rate of deployment.

What’s needed are scalable machine learning libraries: libraries that can scale and run reliably in production but are easy for model developers to develop against. In particular, model developers need tools to build any kind of distributed ML pipeline or application in a single Python script, using best-in-class ecosystem libraries.

Ray and Ray’s AI Runtime (AIR) provide exactly that: a suite of scalable machine learning libraries that can be used and composed just like any other Python library, thereby bridging the gap between ML development and production.

2. Easy ML Scaling

As we noted in a previous post (“The Future of Computing is Distributed”), the “demands of machine learning applications are increasing at breakneck speed”. The rise of deep learning and new workloads means that distributed computing will be common for machine learning. Unfortunately, many developers have relatively little experience with distributed computing.

Scaling and distributed computation are areas where Ray has been helpful to many users. Ray allows developers to focus on their applications instead of on the intricacies of distributed computing. Using Ray brings several benefits to developers needing to scale machine learning applications:

Ray lets you easily scale your existing applications to a cluster. This could be as simple as scaling your favorite library to a compute cluster (see this recent post on using Ray to scale scikit-learn), or it could involve using the Ray API to scale an existing program to a cluster. We’ve seen the latter happen for applications in NLP, online learning, fraud detection, financial time-series, OCR, and many other use cases.

Ray Train simplifies writing and maintaining distributed machine learning workloads, such as batch inference and distributed training, for key ML frameworks such as XGBoost, PyTorch and TensorFlow. This is good news for the many companies and developers who struggle with scaling out their machine learning infrastructure or applying machine learning to their data.

Ray is portable, meaning that it can run on a wide range of platforms. You can develop and run Ray applications on your laptop. You can then run the same application on a cluster created by the Ray cluster launcher, or on a Kubernetes cluster using KubeRay.

3. Ecosystem Integration

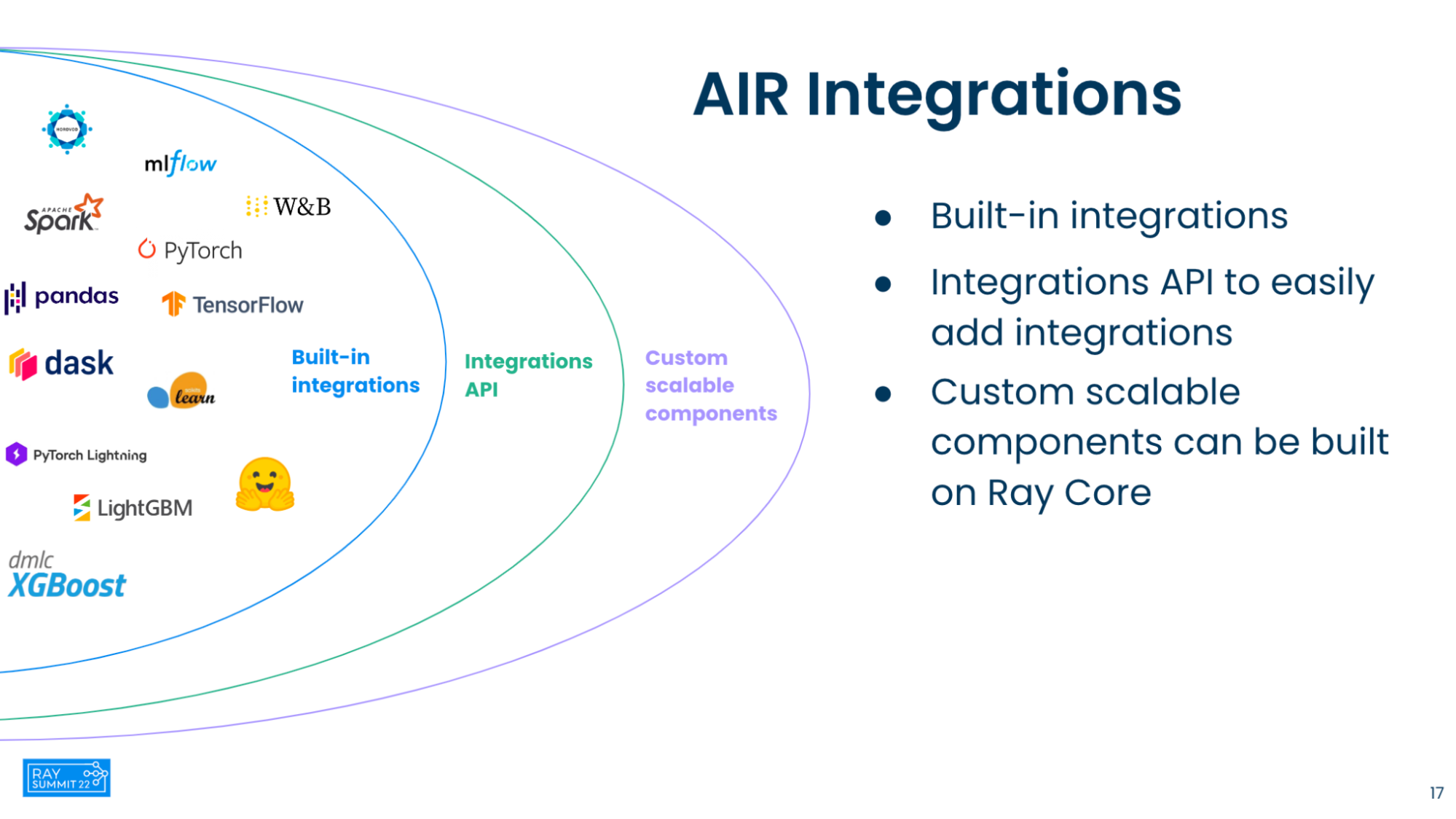

Figure 3: Ray has a large set of ecosystem integrations built in but also has APIs for extending and building your own custom components.

There is a vast and growing ecosystem of data and machine learning libraries, tools, and frameworks. Seamlessly integrating these components is key to building successful machine learning applications.

Developers and machine learning engineers use a variety of tools and programming languages (R, Python, Julia, SAS, etc.). But with the rise of deep learning, Python has become the dominant programming language for machine learning. So, if anything, an ML platform needs to treat Python and the Python ecosystem as first-class citizens.

As a practical matter, developers and machine learning engineers rely on many different Python libraries and tools. The most widely used include deep learning frameworks (TensorFlow, PyTorch), machine learning and statistical modeling libraries (scikit-learn, statsmodels), NLP tools (spaCy, Hugging Face, AllenNLP), and hyperparameter tuning tools (Hyperopt, Ray Tune).

Vendor-provided ML platforms such as SageMaker and Vertex AI don’t work for many teams, for several reasons. First, they often lack a complete, end-to-end experience that makes teams productive. They’re often built from individual, piecemeal solutions, and the lack of integration hinders teams from moving to production. Second, these platforms often lag behind in supporting the most up-to-date library versions, or even modern capabilities like deep learning. This slows the rate of development in ML teams and makes production even more challenging. Lastly, these tools don’t integrate with the broader ML ecosystem and instead bias toward the walled gardens of the cloud platforms themselves.

Thanks to its large, thriving ecosystem, including integrations with major ML projects such as XGBoost, TensorFlow, PyTorch, and Hugging Face, many developers are using Ray to build machine learning tools. Ray is a unified framework for building and scaling Python libraries and applications, and it has a growing collection of standalone libraries available to Python developers. Furthermore, Ray Core’s task and actor primitives let you easily develop scalable libraries to meet new AI needs as they emerge.

In addition to these ML libraries and frameworks, teams of engineers need to collaborate to build complex end-to-end ML applications, such as ML platforms. As such, these teams need access to systems that enable both sharing and discovery. When considering an ML platform, consider the key stages of model development and operations, and assume that teams of people with different backgrounds will collaborate during each of those phases.

For example, feature stores are useful because they allow developers to share and discover features that they might otherwise not have thought about. Teams also need to be able to collaborate during the model development lifecycle. This includes managing, tracking, and reproducing experiments. The leading open source project in this area is MLflow, but we’ve come across users of other tools like Weights & Biases and comet.ml, as well as users who have built their own tools to manage ML experiments.

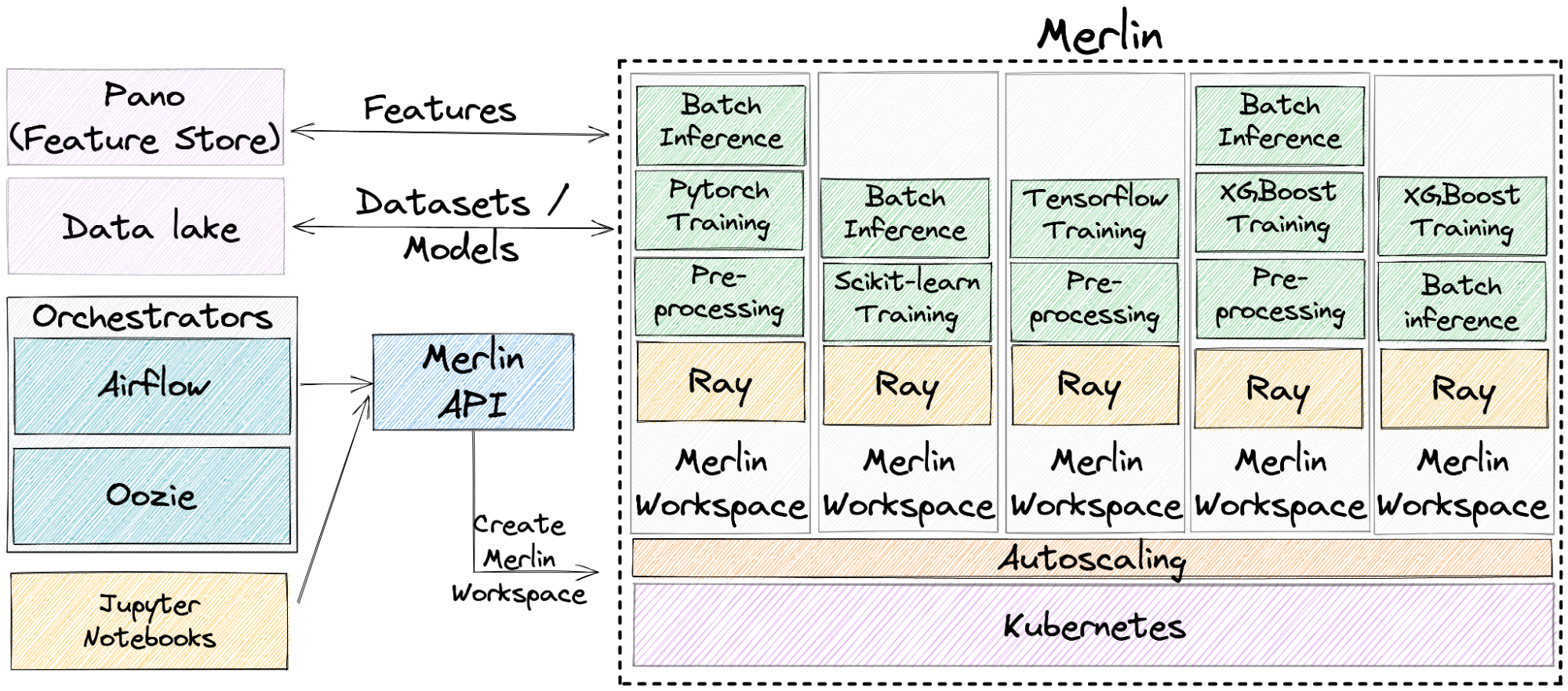

Figure 4: Shopify’s Merlin platform demonstrates an architecture of using multiple non-Ray tools together, including a feature store and Jupyter notebooks. Learn more about this on the Shopify blog.

Enterprises will require additional features, including security and access control. This means integrating with model catalogs (the counterparts of catalogs used to manage data) to provide model governance and to share and deploy models to production.

By providing a Python-native, unifying compute framework to scale all ML workloads, Ray enables developers to easily integrate all these systems to build the next generation of ML platforms, end-to-end applications, and services. Figure 4 shows Merlin, an example of such an ML platform, built on top of Ray by Shopify’s engineers.

Summary

We listed key elements that machine learning platforms should possess. Our baseline assumptions are that Python will remain the language of choice for ML, distributed computing will increasingly be needed, new workloads like hyperparameter tuning and RL will need to be supported, and tools for enabling collaboration and MLOps need to be available.

With these assumptions in mind, we believe that Ray will be the foundation of future ML platforms. Many developers and engineers are already using Ray for their machine learning applications, including training, optimization, and serving. Ray not only addresses current challenges like scale and performance, but is also well positioned to support future workloads and data types. Ray, and the growing number of libraries built on top of it, will help scale ML platforms for years to come. We look forward to seeing what you build on top of Ray!

To engage with the Ray community, please find us on GitHub or join our Slack. To learn more, register for the upcoming Ray Summit!